Today, the Cloud Native Computing Foundation (CNCF) Technical Oversight Committee (TOC) voted to accept CNI (Container Networking Interface) as the 10th hosted project alongside Kubernetes, Prometheus, OpenTracing, Fluentd, Linkerd, gRPC, CoreDNS, containerd, and rkt.

Container-based applications are rapidly moving into production. Kubernetes allows enterprise developers to run containers en masse across thousands of machines, and containers at that scale also need to be networked.

The CNI project is a network interface created by multiple companies and projects, including CoreOS, Red Hat OpenShift, Apache Mesos, Cloud Foundry, Kubernetes, Kurma, and rkt. First proposed by CoreOS to define a common interface between network plugins and container execution, CNI is designed to be a minimal specification concerned only with the network connectivity of containers and the removal of allocated resources when a container is deleted.

“The CNCF TOC wanted to tackle the basic primitives of cloud native and formed a working group around cloud native networking,” said Ken Owens, TOC project sponsor and CTO at Cisco. “CNI has become the de facto network interface today and has several interoperable solutions in production. Adopting CNI for the CNCF’s initial network interface for connectivity and portability is our primary order of business. With support from CNCF, our work group is in an excellent position to continue our work and look at models, patterns, and policy frameworks.”

“Interfaces really need to be as simple as possible. What CNI offers is a nearly trivial interface against which to develop new plugins. Hopefully this fosters new ideas and new ways of integrating containers and other network technologies,” said Tim Hockin, Principal Software Engineer at Google. “CNCF is a great place to nurture efforts like CNI, but CNI is still young, and it almost certainly needs fine-tuning to be as air-tight as it should be. At this level of the stack, networking is one of those technologies that should be ‘boring’ – it needs to work, and work well, in all environments.”

Companies such as Ticketmaster, Concur, CDK Global, and BMW use CNI. It is now the interface for Kubernetes network plugins, and the community and many product vendors have adopted it for this use case. The CNI repo also includes a basic example of connecting Docker containers to CNI networks.

“CoreOS created CNI years ago to enable simple container networking interoperability across container solutions and compute environments. Today CNI has a thriving community of third-party networking solutions users can choose from that plug into the Kubernetes container infrastructure,” said Brandon Philips, CTO of CoreOS. “And since CoreOS Tectonic uses pure-upstream Kubernetes in an Enterprise Ready configuration, we help customers deploy CNI-based networking solutions that are right for their environment, whether on-prem or in the cloud.”

Automated Network Provisioning in Containerized Environments

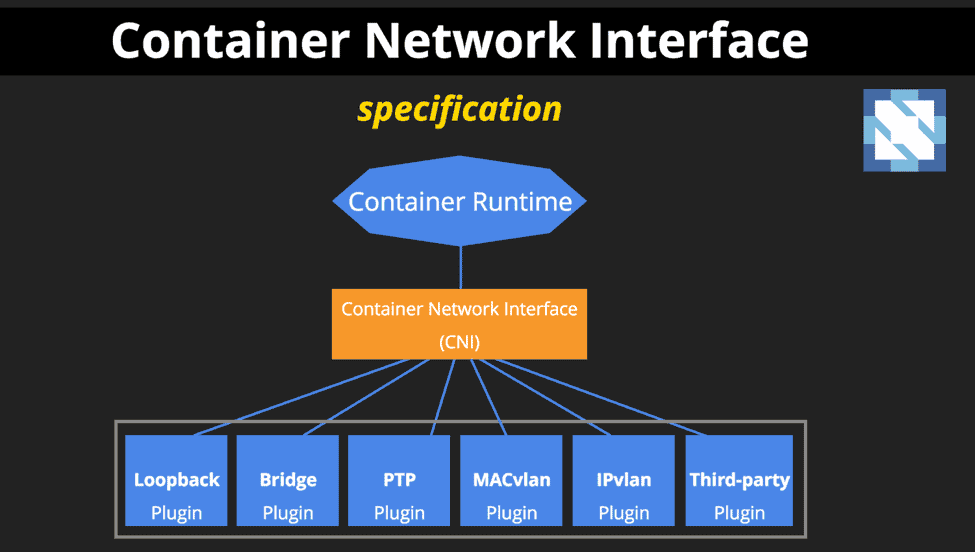

CNI has three main components:

- CNI Specification: defines an API between runtimes and network plugins for container network setup. No more, no less.

- Plugins: provide network setup for a variety of use cases and serve as reference examples of plugins conforming to the CNI specification.

- Library: provides a Go implementation of the CNI specification that runtimes can use to more easily consume CNI.
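To make the runtime-to-plugin API concrete, here is a minimal sketch in Go of how a plugin might read an invocation. It relies on the CNI specification's convention that a runtime executes the plugin binary and passes parameters through environment variables such as `CNI_COMMAND`, `CNI_CONTAINERID`, `CNI_NETNS`, and `CNI_IFNAME`. The `invocation` type and the `readInvocation` and `dispatch` helpers are hypothetical names used only for illustration, and the sketch returns a description of the action rather than configuring anything; a real plugin would set up or tear down the interface and print a JSON result.

```go
package main

import (
	"fmt"
	"os"
)

// invocation holds parameters a runtime passes to a plugin through
// environment variables. The type itself is a hypothetical helper.
type invocation struct {
	Command     string // CNI_COMMAND: ADD, DEL, or VERSION
	ContainerID string // CNI_CONTAINERID
	NetNS       string // CNI_NETNS: path to the container's network namespace
	IfName      string // CNI_IFNAME: interface name to create in the container
}

// readInvocation collects the CNI_* variables through a lookup function,
// so the logic can be exercised without touching the real environment.
func readInvocation(getenv func(string) string) invocation {
	return invocation{
		Command:     getenv("CNI_COMMAND"),
		ContainerID: getenv("CNI_CONTAINERID"),
		NetNS:       getenv("CNI_NETNS"),
		IfName:      getenv("CNI_IFNAME"),
	}
}

// dispatch describes what a plugin would do for each command.
// It only returns a description; it does not change any network state.
func dispatch(inv invocation) (string, error) {
	switch inv.Command {
	case "ADD":
		if inv.ContainerID == "" || inv.NetNS == "" || inv.IfName == "" {
			return "", fmt.Errorf("ADD needs CNI_CONTAINERID, CNI_NETNS, and CNI_IFNAME")
		}
		return fmt.Sprintf("create %s in %s for container %s",
			inv.IfName, inv.NetNS, inv.ContainerID), nil
	case "DEL":
		return fmt.Sprintf("release resources held for container %s",
			inv.ContainerID), nil
	case "VERSION":
		return "report supported specification versions", nil
	default:
		return "", fmt.Errorf("unsupported CNI_COMMAND %q", inv.Command)
	}
}

func main() {
	action, err := dispatch(readInvocation(os.Getenv))
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	fmt.Println(action)
}
```

Passing the lookup function in, rather than calling `os.Getenv` directly, is a design choice for this sketch only: it keeps the command handling testable with a plain map.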

The CNI specification and libraries exist for writing plugins that configure network interfaces in Linux containers. The plugins add container network interfaces to networks and remove them again. CNI is defined by a JSON schema, and its templated code makes it straightforward to create a CNI plugin for an existing container networking project; it is also a good framework for creating a new container networking project from scratch.
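As an illustration of the JSON side, the sketch below parses a small network configuration of the kind a runtime hands to a plugin on standard input. The field names `cniVersion`, `name`, `type`, and `ipam` come from the CNI specification, and `bridge` and `host-local` are reference plugins. The network name, subnet, and version string in the example are made-up values, and the `netConf` type and `parseNetConf` function are hypothetical names that use only the Go standard library, not the CNI library itself.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
)

// netConf covers a small subset of a CNI network configuration.
// Fields not listed here (for example plugin-specific options) are ignored.
type netConf struct {
	CNIVersion string `json:"cniVersion"`
	Name       string `json:"name"`
	Type       string `json:"type"` // name of the plugin binary to run
	IPAM       struct {
		Type   string `json:"type"`
		Subnet string `json:"subnet"`
	} `json:"ipam"`
}

// parseNetConf decodes the JSON and checks that the two fields every
// plugin needs, name and type, are present.
func parseNetConf(data []byte) (*netConf, error) {
	var conf netConf
	if err := json.Unmarshal(data, &conf); err != nil {
		return nil, fmt.Errorf("parsing network configuration: %w", err)
	}
	if conf.Name == "" || conf.Type == "" {
		return nil, errors.New("network configuration needs both name and type")
	}
	return &conf, nil
}

// example is an illustrative configuration; its values are assumptions.
const example = `{
  "cniVersion": "0.3.1",
  "name": "demo-net",
  "type": "bridge",
  "bridge": "cni0",
  "ipam": {
    "type": "host-local",
    "subnet": "10.22.0.0/16"
  }
}`

func main() {
	conf, err := parseNetConf([]byte(example))
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Printf("network %q uses plugin %q with IPAM %q\n",
		conf.Name, conf.Type, conf.IPAM.Type)
}
```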

“As early supporters and contributors to the Kubernetes CNI design and implementation efforts, Red Hat is pleased that the Cloud Native Computing Foundation has decided to add CNI as a hosted project and to help extend CNI adoption. Once again, the power of cross-community, open source collaboration has delivered a specification that can help enable faster container innovation. Red Hat OpenShift Container Platform embraced CNI both to create a CNI plugin for its default OpenShift SDN solution based on Open vSwitch, and to allow for easier replacement by other third-party CNI-compatible networking plugins. CNI is now the recommended way to enable networking solutions for OpenShift. Other projects like the Open Virtual Networking (OVN) project have used CNI to integrate more cleanly and quickly with Kubernetes. As CNI gets widely adopted, the integration can automatically extend to other popular frameworks,” said Diane Mueller, Director, Community Development, Red Hat OpenShift.

Graphic courtesy of Lee Calcote, Sr. Director, Technology Strategy at SolarWinds

Notable Milestones:

- 56 Contributors

- 591 GitHub stars

- 17 releases

- 14 plugins

Adopters (Plugins):

- Container runtimes

  - CoreOS Tectonic – Enterprise Ready, Portable, Upstream Kubernetes

  - rkt – container engine

  - Kurma – container runtime

  - Kubernetes – a system to simplify container operations

  - OpenShift – Red Hat’s container platform

  - Cloud Foundry – a platform for cloud applications

  - Mesos – a distributed systems kernel

- 3rd party plugins

  - Project Calico – a layer 3 virtual network

  - Weave – a multi-host Docker network

  - Contiv Networking – policy networking for various use cases

  - SR-IOV

  - Cilium – BPF & XDP for containers

  - Infoblox – enterprise IP address management for containers

  - Multus – a Multi plugin

  - Romana – Layer 3 CNI plugin supporting network policy for Kubernetes

  - CNI-Genie – generic CNI network plugin

  - flannel – a network fabric for containers, designed for Kubernetes

  - CoreOS Kubernetes Namespace CNI – select CNI plugin per-namespace

  - VMware NSX plugin

  - Nuage VSP plugin

“CNI provides a much needed common interface between network layer plugins and container execution,” said Chris Aniszczyk, COO of Cloud Native Computing Foundation. “Many of our members and projects have adopted CNI, including Kubernetes and rkt. CNI works with all the major container networking runtimes.”

As a CNCF hosted project, CNI will be part of a neutral community aligned with technical interests and will receive help in defining an initial guideline for a network interface specification focused on connectivity and portability of cloud native application patterns. CNCF will also assist with CNI marketing and documentation efforts.

“The CNCF network working group’s first objective of curating and promoting a networking project for adoption was a straightforward task – CNI’s ubiquity across the container ecosystem is unquestioned,” said Lee Calcote, Sr. Director, Technology Strategy at SolarWinds. “The real challenge is addressing the remaining void around higher-level network services. We’re preparing to set forth on this task, and on defining and promoting common cloud-native networking models.” Anyone interested in seeing CNI in action should check out Calcote’s talk on container networking at Velocity on June 21.

For more on CNI, read The New Stack article or take a look at the KubeCon San Francisco slide deck “Container Network Interface: Network Plugins for Kubernetes and beyond” by Eugene Yakubovich of CoreOS. CNI will also have a technical salon at CloudNativeCon + KubeCon North America 2017 in Austin on December 6.

To join or learn more about the Kubernetes SIGs and Working Groups, including the Networking SIG, click here. To join the CNCF Networking WG, click here.
