Today, we are publishing our first CNCF Technology Radar, a new initiative from the CNCF End User Community. The End User Community is a group of more than 140 top companies and startups that meet regularly to discuss challenges and best practices when adopting cloud native technologies. The goal of the CNCF Technology Radar is to share which tools end users are actively using, which tools they would recommend, and how they use them.

Slides: github.com/cncf/enduser-public/blob/master/CNCFTechnologyRadar.pdf

How it works

A technology radar is an opinionated guide to a set of emerging technologies. The popular format originated at Thoughtworks and has been adopted by dozens of companies including Zalando, AOE, Porsche, Spotify, and Intuit.

The key idea is to place solutions at one of four levels, reflecting advice you would give to someone who is choosing a solution:

- Adopt: We can clearly recommend this technology. We have used it for long periods of time in many teams, and it has proven to be stable and useful.

- Trial: We have used it with success and recommend you take a closer look at the technology.

- Assess: We have tried it out, and we find it promising. We recommend having a look at these items when you face a specific need for the technology in your project.

- Hold: This category is a bit special. Unlike the other categories, it recommends that you hold off on using something. That does not mean these technologies are bad, and it may often be OK to keep using them in existing projects. Technologies move into this category when we think they should no longer be used because better options or alternatives now exist.

The CNCF Technology Radar is inspired by the format but with a few differences:

- Community-driven: The data is contributed by the CNCF End User Community and curated by community representatives.

- Future adoption: The radar focuses on future adoption, so there are only three rings: Assess, Trial, and Adopt.

- Narrow scope: Instead of covering several hundred items, each radar displays 10 to 20 items on a specific use case. This removes the need to organize items into quadrants.

- Shorter cadence: Instead of being published annually, radars will be published more often, targeting a quarterly cadence.

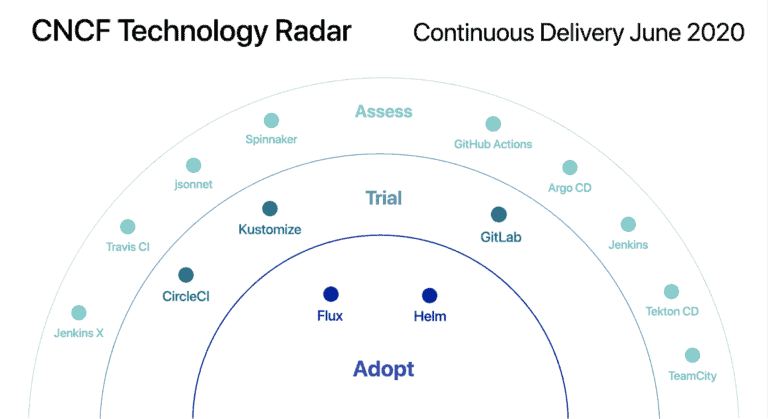

Our first technology radar focuses on Continuous Delivery.

CNCF Technology Radar: Continuous Delivery, June 2020

During May 2020, members of the End User Community were asked which CD solutions they had assessed, trialed, and subsequently adopted. In total, 177 data points were sorted and reviewed to determine the final positions.

This may be read as:

- Flux and Helm are widely adopted, and few or none of the respondents recommended against them.

- Multiple companies recommend CircleCI, Kustomize, and GitLab, but the results were less conclusive: for example, there were too few responses, or a few respondents recommended against them.

- Projects in Assess lacked clear consensus. For example, Jenkins has wide awareness, but its placement in Assess reflects comments from companies that are moving away from Jenkins for new applications. Spinnaker also showed broad awareness, but while many had tried it, none in this cohort positively recommended adopting it. Those looking for a new CD solution should evaluate the tools in Assess against their own requirements.

The Themes

The themes describe interesting patterns and editor observations:

- Publicly available solutions are combined with in-house tools: Many end users had tried up to 10 options and settled on adopting 2 to 4. Several large enterprises have built their own continuous delivery tools and open sourced components, including LunarWay’s release-manager, Box’s kube-applier, and Zalando’s stackset-controller. None of the end users suggested the managed solutions from public cloud providers listed on the CNCF landscape, which may reflect the options that were available a few years ago.

- Helm is more than packaging applications: Although Helm has not positioned itself as a continuous delivery tool (it is the Kubernetes package manager first), it is widely used and adopted as a component in different CD scenarios.

- Jenkins is still broadly deployed, while cloud native-first options emerge: Jenkins and its ecosystem tools (Jenkins X, Jenkins Blue Ocean) are widely evaluated and used. However, several end users stated that Jenkins is primarily used for existing deployments, while new applications have moved to other solutions. End users choosing a new CD solution should therefore assess Jenkins alongside tools that support modern concepts such as GitOps (for example, Flux).

The Editor

Cheryl Hung is the Director of Ecosystem at CNCF. Her mission is to make end users successful and productive with cloud native technologies such as Kubernetes and Prometheus. Twitter: @oicheryl

Read more

CNCF Projects for Continuous Delivery:

- Argo is an open source container-native workflow engine for orchestrating parallel jobs on Kubernetes. A CNCF incubating project, it is composed of Argo CD, Argo Workflows, and Argo Rollouts.

- Flux is the open source GitOps operator for Kubernetes. It is a CNCF sandbox project.

- Helm is the open source package manager for Kubernetes. It recently became a graduated CNCF project.

Case studies: Read how Babylon and Intuit are handling continuous delivery.

What’s next

The next CNCF Technology Radar is targeted for September 2020, focusing on a different topic in cloud native such as security or storage. Vote to help decide the topic for the next CNCF Technology Radar.

Join the CNCF End User Community to:

- Find out who exactly is using each project and read their comments

- Contribute to and edit future CNCF Technology Radars. Subsequent radars will be edited by people selected from the End User Community.

We are excited to provide this report to the community, and we’d love to hear what you think. Email feedback to info@cncf.io.

About the methodology

In May 2020, the 140 companies in the CNCF End User Community were asked to describe what their companies recommended for different solutions: Hold, Assess, Trial, or Adopt. They could also give more detailed comments. As the answers were submitted via a Google Spreadsheet, they were neither private nor anonymized within the group.

In total, 33 companies submitted 177 data points on 21 solutions. These were sorted to determine the final positions. Finally, the editors wrote the themes to reflect the broader patterns they observed.