Guest Post from Weidong Cai and Ye Yin of Tencent

The Tencent Games container team, Tenc, recently open sourced tensile-kube, a Kubernetes (K8s) multi-cluster scheduling project. This post gives a brief introduction to tensile-kube.

Birth of tensile-kube

The Tenc computing platform runs dozens of online K8s clusters. These clusters contain fragmented resources that cannot be used effectively. A common scenario: a job requires N resources, and none of the existing clusters A, B, C, etc. has N resources left on its own, yet the combined remaining resources of clusters A, B, and C would satisfy N. In another scenario, users have services that need to be published to multiple clusters at the same time.
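The fragmentation scenario can be illustrated with a little arithmetic. The following is a minimal sketch with made-up numbers; the cluster names, core counts and helper functions are hypothetical and are not part of tensile-kube.

```python
from typing import Dict, Optional


def single_cluster_fit(free: Dict[str, int], need: int) -> Optional[str]:
    """Return the name of a cluster that alone can hold `need` units, or None."""
    for name, available in free.items():
        if available >= need:
            return name
    return None


def pooled_fit(free: Dict[str, int], need: int) -> bool:
    """Return True if the clusters' free resources combined can hold `need` units."""
    return sum(free.values()) >= need


# Hypothetical free CPU cores left in three clusters.
free_cores = {"A": 40, "B": 35, "C": 30}
job_cores = 90  # total cores requested by all Pods of one job

print(single_cluster_fit(free_cores, job_cores))  # no single cluster is large enough
print(pooled_fit(free_cores, job_cores))          # the pooled capacity is sufficient
```

The sketch only compares totals. In practice each Pod of the job must still fit on a real node in one of the lower clusters.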

The industry has had similar needs for a long time, and solutions such as federation have emerged. However, these solutions carry a large transformation cost for existing K8s clusters and add many complicated CRDs. We therefore independently developed a more lightweight solution, tensile-kube, based on virtual-kubelet.

Introduction to virtual-kubelet (VK)

VK is a basic library open sourced by Microsoft. An implementation of it is usually called a provider. Early versions of VK bundled various providers. The VK community has since separated the project into three parts: virtual-kubelet, node-cli and provider. The virtual-kubelet library provides the abstract capability: by implementing a provider and registering it in a K8s cluster, users’ Pods can be scheduled to the resulting virtual node. The core capability of virtual-kubelet is life cycle control of Nodes and Pods.

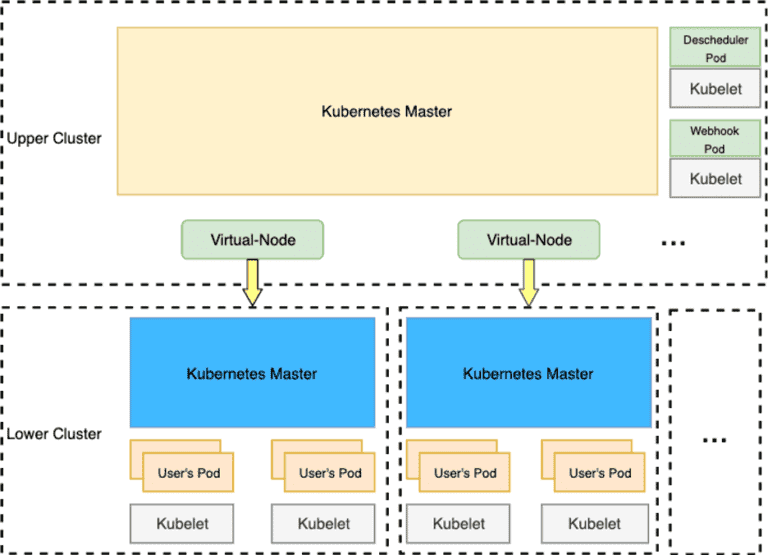
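To make the provider idea concrete, here is a minimal Python sketch of a Pod life cycle interface. It is only loosely modeled on the kind of methods a provider implements; virtual-kubelet itself is written in Go, and the class and method names below (`PodProvider`, `InMemoryProvider`, `create_pod`, and so on) are illustrative assumptions, not the VK API.

```python
from abc import ABC, abstractmethod
from typing import Any, Dict, List, Optional, Tuple

Pod = Dict[str, Any]  # simplified stand-in for a Kubernetes Pod object


class PodProvider(ABC):
    """Hypothetical life cycle hooks that a virtual node calls on its backend."""

    @abstractmethod
    def create_pod(self, pod: Pod) -> None: ...

    @abstractmethod
    def delete_pod(self, namespace: str, name: str) -> None: ...

    @abstractmethod
    def get_pod(self, namespace: str, name: str) -> Optional[Pod]: ...

    @abstractmethod
    def get_pods(self) -> List[Pod]: ...


class InMemoryProvider(PodProvider):
    """Toy backend that keeps Pods in a dict instead of a real cluster."""

    def __init__(self) -> None:
        self._pods: Dict[Tuple[str, str], Pod] = {}

    def create_pod(self, pod: Pod) -> None:
        meta = pod["metadata"]
        self._pods[(meta["namespace"], meta["name"])] = pod

    def delete_pod(self, namespace: str, name: str) -> None:
        # Deleting a Pod that does not exist is treated as a no-op.
        self._pods.pop((namespace, name), None)

    def get_pod(self, namespace: str, name: str) -> Optional[Pod]:
        return self._pods.get((namespace, name))

    def get_pods(self) -> List[Pod]:
        return list(self._pods.values())


provider = InMemoryProvider()
provider.create_pod({"metadata": {"namespace": "default", "name": "web-0"}})
print(len(provider.get_pods()))
```

In tensile-kube the backend behind such an interface would be a lower K8s cluster rather than an in-memory dict.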

Architecture of tensile-kube

There are 4 core components of tensile-kube:

- virtual-node

It is a K8s provider implemented on top of virtual-kubelet, with multiple controllers added to synchronize resources such as ConfigMap, Secret and Service.

- webhook

It converts the fields in the Pod that may interfere with scheduling in the upper-layer cluster and writes them into an annotation. The virtual-node restores them when creating the Pod, so that the user’s expected scheduling results are not affected.

- descheduler

To avoid the impact of resource fragmentation, this component has been redeveloped to make it more suitable for this scenario.

- multi-scheduler

It is developed on the scheduling framework. It connects to the API Servers of the lower-level clusters and, when scheduling, determines whether the resources behind a virtual-node are sufficient, which avoids the impact of resource fragmentation.

Notice:

- multi-scheduler is better suited to small clusters. To use it, you need to replace kube-scheduler in the upper cluster with multi-scheduler.

- For large clusters, descheduler is recommended, because multi-scheduler is too heavy.
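The webhook and virtual-node round trip described above can be sketched as follows. This is a simplified illustration, not the tensile-kube implementation: the annotation key and the set of scheduling-related fields are assumptions chosen for the example.

```python
import copy
import json

# Hypothetical annotation key; the key tensile-kube really uses may differ.
ANNOTATION_KEY = "example.com/original-scheduling"
# Pod spec fields assumed, for illustration, to influence scheduling.
SCHEDULING_FIELDS = ("nodeSelector", "affinity", "tolerations")


def stash_scheduling_fields(pod: dict) -> dict:
    """Webhook side: move scheduling fields out of the spec into an annotation."""
    pod = copy.deepcopy(pod)
    spec = pod.setdefault("spec", {})
    stashed = {f: spec.pop(f) for f in SCHEDULING_FIELDS if f in spec}
    if stashed:
        annotations = pod.setdefault("metadata", {}).setdefault("annotations", {})
        annotations[ANNOTATION_KEY] = json.dumps(stashed, sort_keys=True)
    return pod


def restore_scheduling_fields(pod: dict) -> dict:
    """virtual-node side: put the stashed fields back before creating the Pod."""
    pod = copy.deepcopy(pod)
    annotations = pod.get("metadata", {}).get("annotations", {})
    raw = annotations.pop(ANNOTATION_KEY, None)
    if raw is not None:
        pod.setdefault("spec", {}).update(json.loads(raw))
    return pod


original = {
    "metadata": {"name": "web-0"},
    "spec": {
        "containers": [{"name": "web", "image": "nginx"}],
        "nodeSelector": {"disk": "ssd"},
    },
}
in_upper_cluster = stash_scheduling_fields(original)
in_lower_cluster = restore_scheduling_fields(in_upper_cluster)
print("nodeSelector" in in_upper_cluster["spec"])
print(in_lower_cluster["spec"] == original["spec"])
```

With the fields stashed, the upper-layer scheduler sees a Pod without node constraints and can place it on a virtual-node, while the lower cluster still receives the constraints the user wrote.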

Features of tensile-kube

- No intrusion into the native K8s cluster

In tensile-kube, the connection between the upper and lower clusters is established through virtual-node. The upper-layer Pod is scheduled to the virtual-node by the scheduler, and the Pod is then created in a lower-layer cluster. The entire solution is non-intrusive to K8s.

- Low cost of K8s upper platform reconstruction

From the perspective of the upper-level K8s platform, the clusters connected through tensile-kube look like one large cluster. The differences can be almost entirely shielded, so the platform requires almost no transformation.

- Development based on native virtual-kubelet

Tensile-kube is based on the native virtual-kubelet without many extra dependencies. It depends only on the features of native virtual-kubelet, which makes it more convenient for us to upgrade the cluster.

Together, these features let tensile-kube schedule and manage multiple clusters easily, and they make it suitable for most scenarios.

Main abilities of tensile-kube

- Cluster fragment resource integration

Resource fragments exist in every cluster. Through tensile-kube, these fragments can be combined into a large resource pool to make full use of idle resources.

- Ability of multi-cluster scheduling

Combined with Affinity and similar mechanisms, multi-cluster scheduling of Pods can be achieved. For clusters based on K8s 1.16 and above, we can also implement more fine-grained cross-cluster scheduling based on TopologySpreadConstraint.

- Cross-cluster communication

Tensile-kube natively provides the ability to communicate across clusters through Service. The prerequisite is that Pods in the different lower clusters can reach each other by Pod IP, for example when private clusters share a flannel network.

- Convenient cluster operation and maintenance

When we upgrade or change a single cluster, we often need to notify business teams to migrate, block scheduling to the cluster, and so on. With tensile-kube, we only need to set the virtual-node to be unschedulable.

- Native kubectl capabilities

Tensile-kube supports all operations of native kubectl, including kubectl logs and kubectl exec. This lets operators and maintainers manage the Pods on the lower-level clusters without additional learning costs.
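As an illustration of the TopologySpreadConstraint approach mentioned above, the sketch below builds a Pod spec fragment that spreads replicas evenly across lower clusters. The constraint fields (`maxSkew`, `topologyKey`, `whenUnsatisfiable`, `labelSelector`) are standard K8s fields; the node label `example.com/cluster` is a hypothetical label assumed to identify which lower cluster each virtual-node represents.

```python
import json

# Hypothetical node label marking the lower cluster behind each virtual-node.
CLUSTER_TOPOLOGY_KEY = "example.com/cluster"


def spread_across_clusters(app_label: str, max_skew: int = 1) -> dict:
    """Build a constraint that keeps per-cluster replica counts within max_skew."""
    return {
        "maxSkew": max_skew,
        "topologyKey": CLUSTER_TOPOLOGY_KEY,
        "whenUnsatisfiable": "DoNotSchedule",
        "labelSelector": {"matchLabels": {"app": app_label}},
    }


pod_spec = {
    "containers": [{"name": "web", "image": "nginx"}],
    "topologySpreadConstraints": [spread_across_clusters("web")],
}
print(json.dumps(pod_spec, indent=2))
```

Because each virtual-node stands for one lower cluster, spreading over a per-cluster label on the virtual-nodes amounts to spreading over clusters.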

Other possible applications

- Edge computing

- Hybrid cloud

Future work

It has been 6 years since Tenc brought K8s into its production environment in 2014. Tensile-kube was born from our years of container-related experience. Using tensile-kube, we have so far expanded the original cluster from two thousand nodes to more than ten thousand nodes. We have previously submitted many PRs to the VK community to expand VK functions and optimize its performance. In the future, we will continue to improve VK and tensile-kube in the following aspects:

- Multi-cluster scheduler optimization;

- Real-time optimization of provider status synchronization;

- Resource name conflicts in sub clusters.

Project address: https://github.com/virtual-kubelet/tensile-kube