KubeCon + CloudNativeCon sponsor guest post from Vijoy Pandey, VP / CTO of Cloud, Distributed Systems, and Ventures at Cisco

As we wrap up our first-ever virtual KubeCon in 2020, we as a community also celebrate our biggest KubeCon yet. With over 18,000 registered attendees and a virtual experience that went smoothly for all involved, I have to extend a special thank you and kudos to the entire Linux Foundation and CNCF events team, and to everyone else who was involved in making the event a success across every metric.

HoloConf

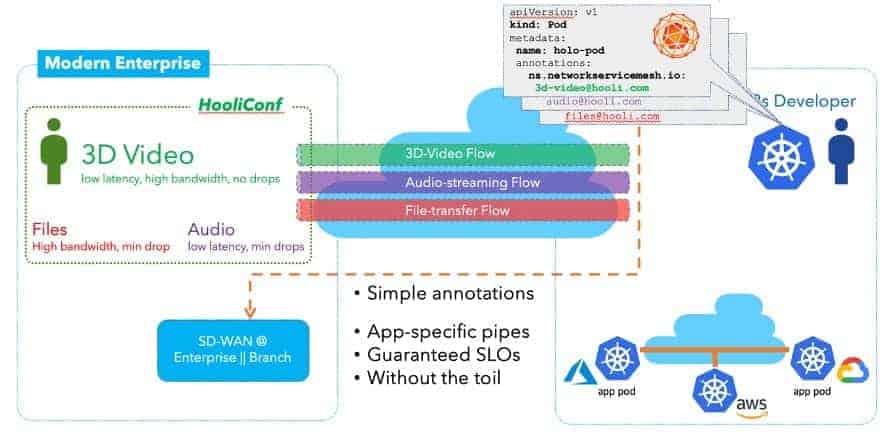

A new holographic video conferencing app (illustratively) made its debut at this event. Gavin Belson at Hooli hired a cool blue Kubernetes developer, Claire, to develop HoloConf, a holographic conferencing application that would allow for simultaneous 3D video, standard video, audio, file access, and text chat. Not only would this cloud native app need to be developed across multiple Kubernetes clusters, spanning an on-premises K8s deployment and K8s clusters in AWS, GCP, and Azure, but it would also have to be consumed through a fancy portal in Gavin’s office at the Hooli campus. The networking demands of such an app would imply gnarly ticket management, cross-organizational ballet, velocity mismatches between SREs in different teams, and opaque, closed software infrastructure, all leading to incredible toil.

The need for the Network to become Cloud Native

We started on this networking journey a little over a year ago, at KubeCon EU/Barcelona in May 2019, with a plea for the Network to Please Evolve. We claimed that, as modern cloud native apps become more pervasive, and as application components become thinner (microservices, functions) and more geographically diverse (cloud regions, on-premises, across the globe), the connectivity problem for even a single application becomes much, much worse. A quick look at the service dependency graph of a cloud native application (e.g., the Monzo banking app or the Amazon graph shown here) gives a sense of the networking problem that needs to be solved. This modern cloud native network is for the application developer. It follows the principles of simplified connectivity and relevant context, and it uses the same activation models that are used in cloud native application development.

Figure 1: Amazon micro-services dependency graph

It was time to make networking cloud native.

The Network Service Mesh (NSM) community formed to solve this challenge. The project attained CNCF Sandbox status in April 2019 with contributors from Red Hat, Cisco, and VMware, among others. By KubeCon North America/San Diego in November 2019, the NSM community was helping Claire build multi-cloud, multi-cluster K8s applications by extending the core concepts that are already familiar to her as a K8s developer, without her having to dip her toes into traditional IP networking complexity. Claire could now build a multi-cloud, multi-cluster sharded database (e.g., Vitess-based) application with minimal toil and no BGP, VLAN, firewall ruleset, IP subnet, or IP routing complexity.

The very first NSMCon Day 0 event was held at KubeCon NA 2019, and it oversold its 50-person room capacity within days of being announced.

The Cloud Native WAN

So when Gavin threw out the challenge of making the WAN cloud native and application-aware for his new HoloConf app, the NSM community jumped at it. The idea was conceptually simple: to stretch the CRD annotation-based simplicity of consuming the network via NSM all the way across a WAN, across an SD-WAN fabric, to a branch, edge, or enterprise end user.

– To remove the complexity of stitching various networks, models, configurations together to achieve an application’s needs.

– To extend the minimal-toil high-velocity approach with which Claire was already familiar.

– To keep things simple and not get mired in the complexities of IP subnetting and routing.

– To make SD-WAN application-aware.

– To satisfy the different needs of 3D video, standard video, audio, file sharing and texting across the various K8s clusters and all the way to the user across an SD-WAN fabric.
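To give a feel for what "annotation-based" consumption of the network could look like from Claire's side, here is a minimal sketch. It only builds a pod manifest as plain data and attaches a single annotation naming the network service the workload wants. The annotation key `example.com/network-service`, the service name `holoconf-wan`, the pod name, and the image are all hypothetical placeholders chosen for illustration; they are not the actual NSM annotation syntax, which should be taken from the NSM documentation.

```python
import copy
import json

# Hypothetical annotation key, used only for illustration.
# The real NSM annotation key and value syntax may differ.
ANNOTATION_KEY = "example.com/network-service"


def with_network_service(pod: dict, service: str) -> dict:
    """Return a copy of a pod manifest that requests the named network service.

    The original manifest is left untouched.
    """
    annotated = copy.deepcopy(pod)
    metadata = annotated.setdefault("metadata", {})
    annotations = metadata.setdefault("annotations", {})
    annotations[ANNOTATION_KEY] = service
    return annotated


# A bare-bones pod manifest; name and image are placeholders.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "holoconf-3d-video"},
    "spec": {"containers": [{"name": "app", "image": "example/holoconf:latest"}]},
}

annotated = with_network_service(pod, "holoconf-wan")
print(json.dumps(annotated["metadata"], indent=2))
```

The point of the sketch is the shape of the request: the developer states which network service the workload needs, and nothing about subnets, routes, or VLANs appears in the manifest.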

We partnered with PlanetScale (the awesome folks behind Vitess) to show how a sharded database use case across multiple K8s clusters, owned or rented, can leverage the operational simplicity of NSM, and also solve for a variety of multi-cluster use cases that are not solvable by the traditional CNI model in Kubernetes.

We held our second NSMCon Day 0 event, which had a whopping 1200+ pre-registrations, and we were running at ~700 attendees at any given point during the day. Measured against the 50-person room of the first event, those pre-registrations are an increase of 2300%!
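For readers wondering where the 2300% comes from, the arithmetic below is one plausible reading: it assumes the baseline is the 50-person room capacity of the first NSMCon and the new value is the 1200 pre-registrations of the second. The function name and variable names are just for illustration.

```python
def percent_increase(old: float, new: float) -> float:
    """Percentage increase going from old to new."""
    return (new - old) / old * 100.0


# Assumed baseline: room capacity of the first NSMCon Day 0 event.
first_event_capacity = 50
# Assumed new value: pre-registrations for the second NSMCon Day 0 event.
second_event_preregistrations = 1200

growth = percent_increase(first_event_capacity, second_event_preregistrations)
print(f"{growth:.0f}%")  # prints "2300%"
```

Using the ~700 concurrent attendees as the new value instead would give a smaller figure, so the headline number most likely refers to pre-registrations.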

What Next?

Through our three-chapter evolution journey from Barcelona to virtual Amsterdam, we have seen how complex multi-cluster connectivity problems can be solved by simply thinking of service endpoints as rendezvous points along a virtual wire between K8s clusters, and all the way to an end user.

This cloud native networking experience has made it clear that there is a need for a broader, more capable, Application Network.

A Network that connects all service endpoints, and only those endpoints, wherever they happen to be and in whatever form, whether modern cloud native or traditional monolithic systems. This Network is built for the application developer: it connects the service APIs that are of prime concern to a modern cloud native application, and it also connects them back to traditional monolithic application endpoints. It has narrow and deep application context and is not worried about the statistical traffic flowing through the physical and virtual networks below.