Guest post originally published on the Volterra blog by Pranav Dharwadkar, VP of Products at Volterra

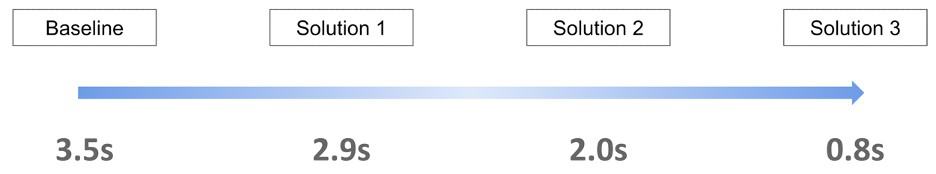

This blog describes a key web application performance challenge faced by online enterprises around the world, and how DevOps teams can “Un-Distance” their web-app to improve application performance by ~77%, from 3.5 secs to 0.8 secs, without rewriting their application.

What is Web Application Performance?

Web application performance is typically measured by how long it takes for the content of the page to be visible, as perceived by the end-user. Web developers use several metrics to measure their web-app performance such as First Contentful Paint, Largest Contentful Paint, or Document Complete using tools such as Web Page Test, Google Lighthouse, or Rigor.

Why is Web Application Performance important?

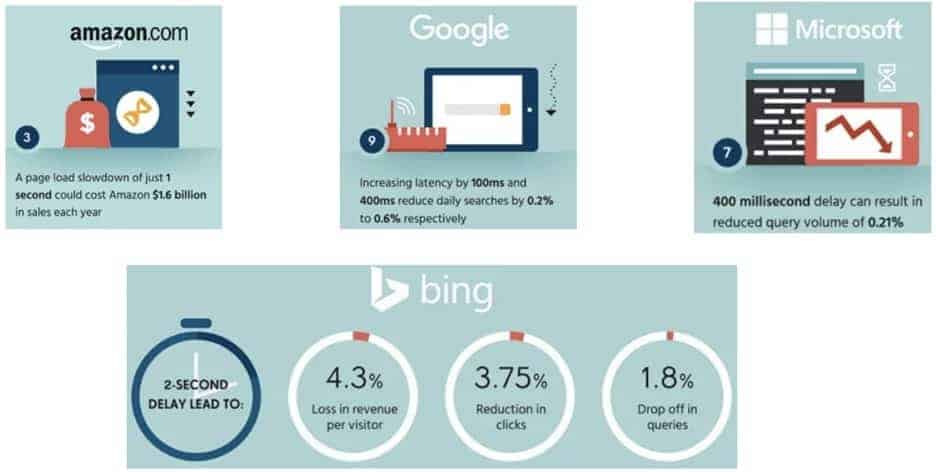

A slight degradation in web application performance has a huge impact on the user experience and consequently sales through the online portal. E-commerce giant Amazon reported that a 1-second degradation in application performance results in a $1.6B loss in revenue. Google and Microsoft report similar economic impact numbers as shown in Figures 1 and 2.

These data points clearly confirm that Web Application Performance is top of mind for online enterprises.

What is the challenge?

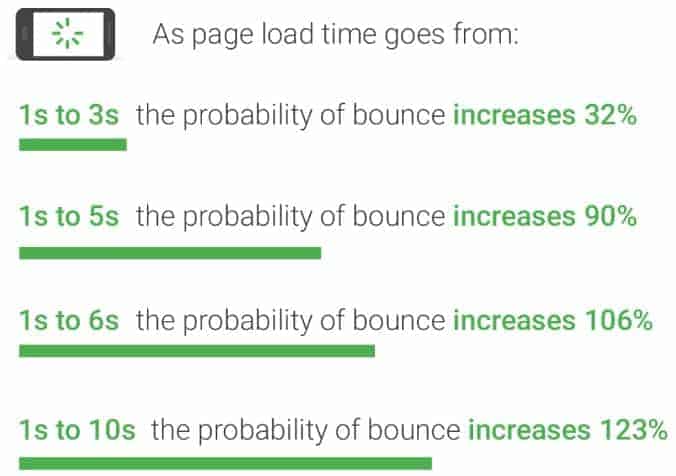

Let’s take a specific example of an online enterprise in the ecommerce vertical. Many ecommerce enterprises host their web application in one or a few data centers, and these data centers don’t exist in all the countries where the enterprises’ users live. When the user is in a different country/region than the data center, high network latency from the user to the data center results in slow page load times of almost 6-12 seconds. As mentioned above, when the page load times exceed 5 seconds, the probability of bounce increases to 90%.

Let’s deconstruct a web-app page load as shown in Figure 3:

- First, a secure SSL session is established, which requires six messages back and forth between the user’s browser and the web-app.

- Next, the web-app will typically use the cookies in the user’s browser to determine if the user has an account with the ecommerce vendor.

- Based on the cookie, if the user has an account, the user is shown a login page; otherwise, the user is shown a checkout-as-a-guest page.

Exchanging six messages just to set up the secure SSL session over high-latency links (potentially transatlantic/transpacific links) adds noticeably to page load times and consequently results in a poor user experience.
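To get a feel for the handshake cost described above, here is a rough back-of-the-envelope sketch. It assumes each of the six messages waits for the previous one, which is a simplification (real handshakes can batch several messages into one network flight), and the latency values are hypothetical, chosen only for illustration.

```python
SSL_SETUP_MESSAGES = 6  # message count taken from the article


def handshake_delay_ms(messages: int, one_way_latency_ms: float) -> float:
    """Rough estimate: every message pays one one-way network delay."""
    return messages * one_way_latency_ms


# Hypothetical one-way latencies, for illustration only.
one_way_latencies_ms = {
    "same region": 10.0,
    "transatlantic": 75.0,
    "transpacific": 100.0,
}

for link, latency in one_way_latencies_ms.items():
    delay = handshake_delay_ms(SSL_SETUP_MESSAGES, latency)
    print(f"{link}: about {delay:.0f} ms spent on session setup")
```

Under these assumed numbers, the setup cost grows linearly with latency, so shortening the link the handshake travels over is the main lever.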

How can you improve app performance without rewriting your app?

There are many techniques to improve web-app performance by optimizing the application itself, such as compressing images or optimizing dependencies to reduce page load times. Often, these optimizations are not possible because of the business requirements driving application design. Even when they are possible, they might not be feasible because the developers who wrote the app are no longer with the organization or are busy developing new services. Therefore, DevOps teams in e-commerce organizations value solutions that improve app performance but don’t require changes to the application. Here are three potential solutions.

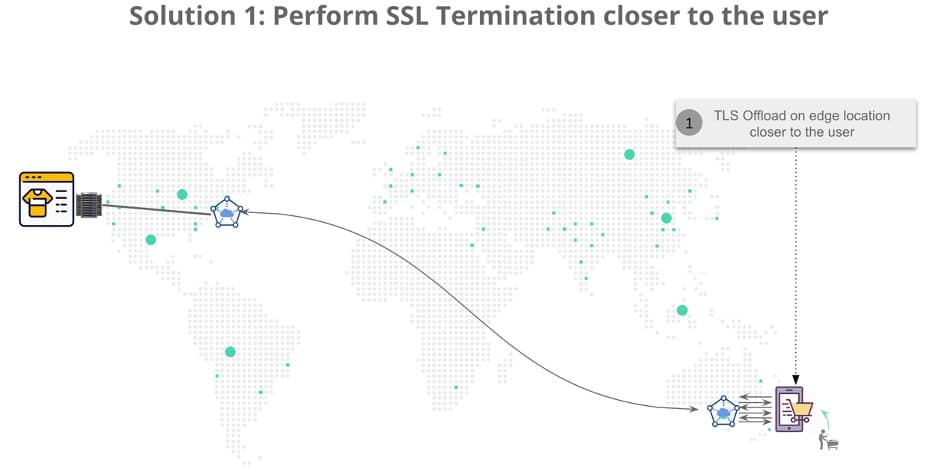

Solution 1: SSL Termination (a.k.a. TLS offload) closer to the user

The simplest solution to improve web application performance is to perform SSL session termination closer to the user, preferably at an edge compute location in the user’s region or country. By doing so, the six messages that set up the SSL session are exchanged locally with the edge compute location and do not traverse transatlantic or transpacific links. In addition, a persistent SSL session is maintained between the edge/cloud compute location and the origin server, further improving performance. This can be visualized as shown in Figure 4.

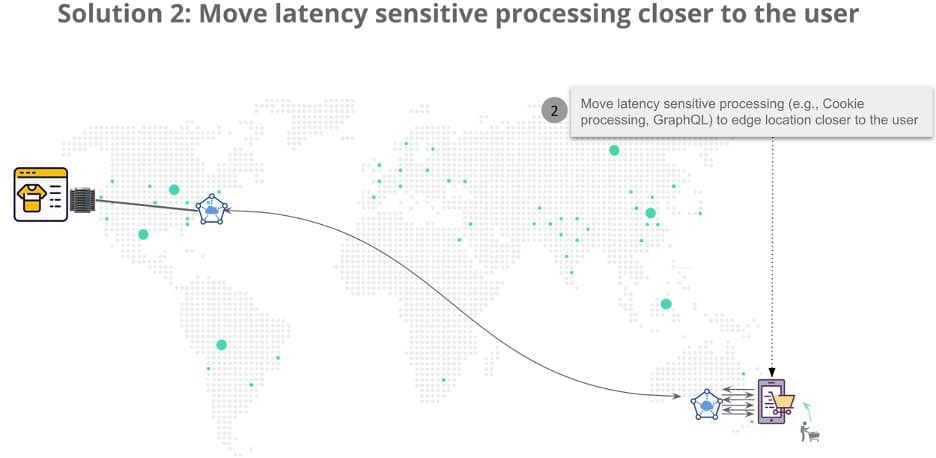

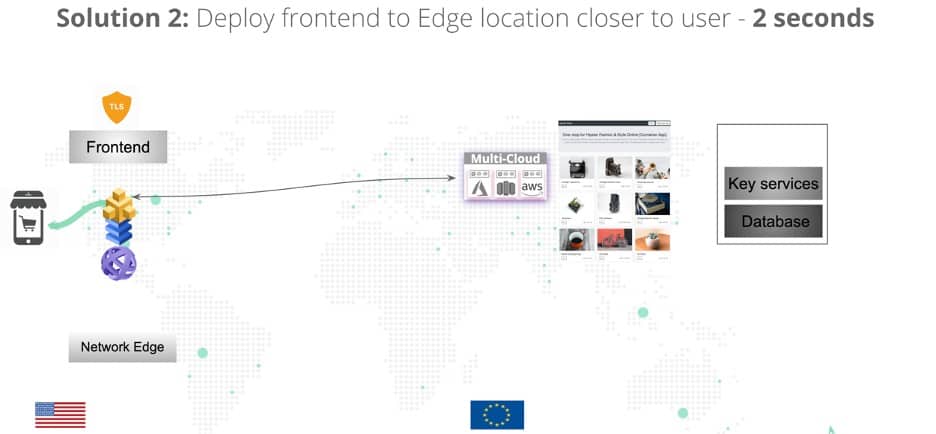

Solution 2: Move latency-sensitive processing such as cookie processing closer to the user

To improve performance further, enterprises can deploy latency-sensitive portions of their applications on the edge/cloud location closer to the user. Examples of latency-sensitive portions of the web-app include cookie processing, GraphQL, or a backend-for-frontend. In the ecommerce example, the web-app typically processes cookies to determine which page to show the user, e.g., if the user has an account, show the login page; otherwise, show a guest page. Processing cookies on the edge/cloud location closer to the user increases performance dramatically compared with processing cookies in a data center halfway across the globe. This can be visualized as shown in Figure 5.
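As a minimal sketch of the cookie decision described above, the function below picks a page from the request's `Cookie` header. The cookie name `account_id` and the page names are hypothetical; the article does not specify how the actual application encodes account state.

```python
from http.cookies import SimpleCookie

ACCOUNT_COOKIE = "account_id"  # hypothetical cookie name, not from the article


def choose_page(cookie_header: str) -> str:
    """Return which page to show, based on the request's Cookie header."""
    cookies = SimpleCookie()
    cookies.load(cookie_header)
    morsel = cookies.get(ACCOUNT_COOKIE)
    if morsel is not None and morsel.value:
        return "login"
    return "guest-checkout"


print(choose_page("account_id=abc123; theme=dark"))
print(choose_page("theme=dark"))
```

Because the decision needs only the request headers, it can run at the edge without a round trip to the origin data center.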

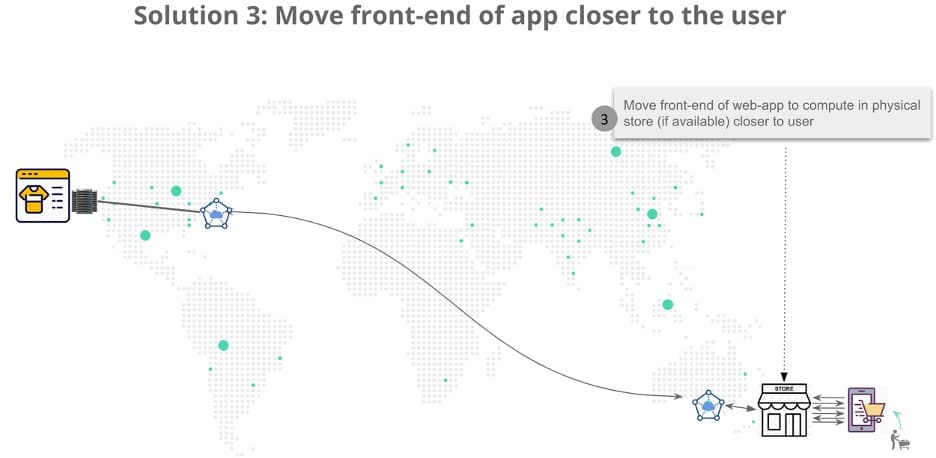

Solution 3: Move front-end of web-app (e.g., product catalog) to the edge i.e., physical store

Many ecommerce vendors, such as Macy’s, Home Depot, and Target, also have physical stores where consumers browse the online product catalog inside the store to check item locations and access deals/coupons. To improve the user experience, these vendors could choose to move only the front-end of their web-app to in-store compute. The remaining components of the app, e.g., databases, could remain in the back-end data center, as they won’t be accessed as frequently as the front-end product catalog. This can be visualized as shown in Figure 6.

Key architectural tenets to consider in any solution

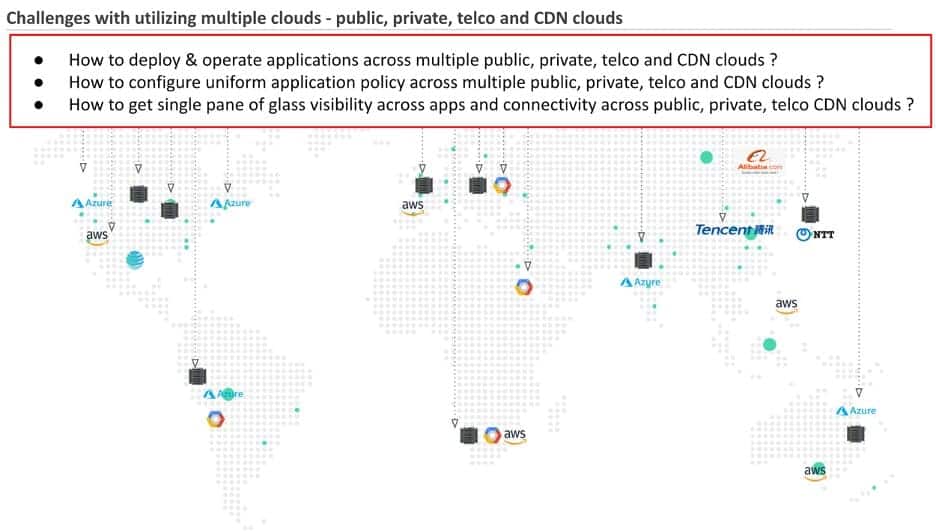

This problem is fundamentally a distributed computing problem across multiple heterogeneous clouds. DevOps teams could go the do-it-yourself route by cobbling together piecemeal solutions from different vendors, such as public cloud providers, CDN providers, or edge providers, or they could buy a packaged solution from a distributed cloud services platform provider. The challenges are highlighted in Figure 7.

Here are the key architectural tenets DevOps teams should consider when evaluating any solution.

- Consistent application runtime environment across multiple clouds: DevOps teams should strongly consider solutions that leverage Kubernetes to provide a consistent application runtime environment across multiple environments: private DC, public cloud, telco, or edge devices.

- Geo-proximity traffic routing: As the front end of the application could be deployed in different locations (cloud, edge, or physical store edge), every solution should ensure that the user’s traffic is routed to the closest location where the front-end service is deployed.

- Global load balancing: Any solution considered should offer global load balancing as the backend services could be in multiple locations around the globe.

- Uniform application and network security policies across multiple clouds: Any solution considered should provide the ability for a uniform application and network security policy to be applied in whichever cloud the front-end service is deployed.

- Single pane of glass observability across multiple clouds and across application and network connectivity: Any solution considered should provide a single pane of glass observability across connectivity, security, and application deployments across multiple clouds.
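The geo-proximity routing tenet above can be illustrated with a small sketch that picks the nearest deployment by great-circle distance. The location names and approximate coordinates are assumptions made for this example, and production systems often route on measured latency or anycast rather than raw geographic distance.

```python
import math

# Hypothetical front-end deployments: name -> (latitude, longitude), approximate.
LOCATIONS = {
    "san-jose-edge": (37.34, -121.89),
    "paris-dc": (48.86, 2.35),
}

EARTH_RADIUS_KM = 6371.0


def haversine_km(a, b):
    """Great-circle distance in kilometers between two (lat, lon) points."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat = lat2 - lat1
    dlon = lon2 - lon1
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def closest_location(user, locations):
    """Return the name of the deployment nearest to the user."""
    return min(locations, key=lambda name: haversine_km(user, locations[name]))


san_francisco = (37.77, -122.42)  # approximate user position
print(closest_location(san_francisco, LOCATIONS))
```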

Results achieved

Test Setup

The test setup described next was used to demonstrate the performance improvements offered by each of the solutions using Volterra’s distributed cloud service platform.

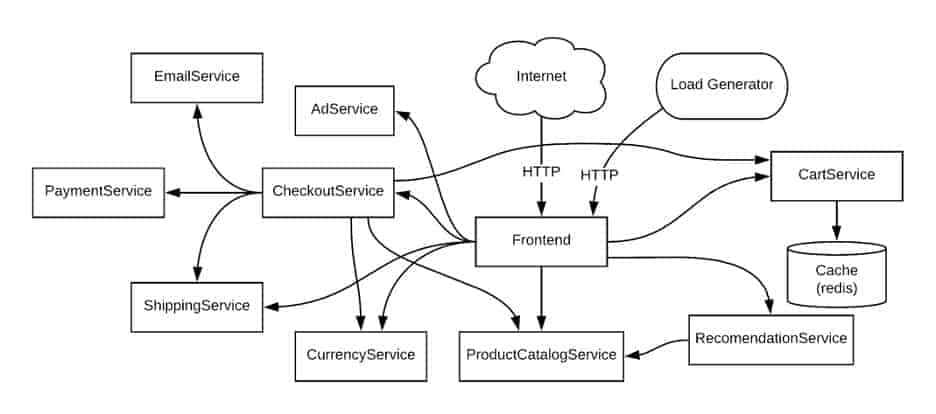

- The web-app used was Online Boutique, consisting of 11 microservices as shown in Figure 8

- The web-app was deployed using Kubernetes and connectivity between the services was established using Envoy.

- Web App performance was measured using the “Document Complete” metric on the tool “webpagetest.org”

- Services and databases were deployed in DCs in Paris, while users (or clients) were in San Francisco.

Test Scenario and Results

The following four scenarios were tested and the performance improvements are described for each scenario.

Baseline Scenario: First, I baselined the web-app performance with the user in San Francisco and the web-app running entirely on a Kubernetes cluster in a Paris data center, as shown in Figure 9. The performance of the web-app for the baseline scenario was measured at 3.5 seconds.

Solution 1 Test Scenario: In the test for solution 1, the user is still in San Francisco and the web-app is still running on a Kubernetes cluster in the Paris data center; however, the SSL session is terminated on an Envoy-based reverse proxy running on a Network Edge location in San Jose. This scenario is shown in Figure 10.

A persistent SSL session is automatically created between the Envoy-based reverse proxy running on the Network Edge location in San Jose and the web-app running in the Paris data center. The performance of the web-app improved to 2.9 seconds, an improvement of ~17% over the baseline.

Solution 2 Test Scenario: In the test for solution 2, the front-end and currency microservice are deployed on a Kubernetes cluster in a Network Edge location in San Jose. The remaining microservices and databases still run in a Kubernetes cluster in the Paris data center. This scenario is shown in Figure 11.

The rationale behind moving only the front-end and currency microservices is that only these microservices are involved during the users’ casual browsing activity. The performance of the web-app improved to 2 seconds, an improvement of 31% over Solution 1’s results.

Solution 3 Test Scenario: In the test for solution 3, the front-end and currency microservices were deployed to a Kubernetes cluster on a commodity Intel NUC (4 virtual cores) at a physical store edge.

The rationale behind moving only the front-end and currency microservices is that only these microservices are involved during the users’ casual browsing activity at the physical store edge. The performance of the web-app improved to 0.8 seconds, an improvement of 60% over Solution 2’s results.

Summary Results and Economic Value

A summary of the test results is shown in Figure 13. The economic value can be estimated by multiplying the improvement in seconds by the value of each second of improvement to the organization. For example, Amazon values each 1-second improvement at $1.6B; therefore, Solution 3 provides an economic value of $4.3B over the baseline (2.7 seconds × $1.6B).
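The arithmetic behind these figures can be reproduced with a short sketch. The timings and the $1.6B-per-second value come from the article; the function names are illustrative, and the linear extrapolation of value per second is the article's simplifying assumption rather than a validated model.

```python
# Measured "Document Complete" times from the test scenarios, in seconds.
RESULTS_S = [
    ("Baseline", 3.5),
    ("Solution 1", 2.9),
    ("Solution 2", 2.0),
    ("Solution 3", 0.8),
]

VALUE_PER_SECOND_B = 1.6  # value of a 1-second improvement, in $B (Amazon example)


def pct_improvement(before_s: float, after_s: float) -> float:
    """Percentage reduction in load time going from before_s to after_s."""
    return (before_s - after_s) / before_s * 100


def economic_value_b(before_s: float, after_s: float) -> float:
    """Linear extrapolation: seconds saved times value per second, in $B."""
    return (before_s - after_s) * VALUE_PER_SECOND_B


baseline_s = RESULTS_S[0][1]
previous_s = baseline_s
for name, time_s in RESULTS_S[1:]:
    print(
        f"{name}: {time_s} s, "
        f"{pct_improvement(previous_s, time_s):.0f}% over previous step, "
        f"{pct_improvement(baseline_s, time_s):.0f}% over baseline, "
        f"${economic_value_b(baseline_s, time_s):.1f}B over baseline"
    )
    previous_s = time_s
```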

As these results show, DevOps teams can achieve significant performance improvements without rewriting the application by using the three options proposed in this blog, while maintaining the same level of security protection for their application. If you would like to learn more about real customer use cases that can be solved by a distributed cloud platform, or if you have real customer use cases to share with me, feel free to reach out to me via LinkedIn or Twitter.