Guest post originally published on DoiT’s blog by Mike Sparr, Sr Cloud Architect at DoiT International

One of the coolest aspects of Google’s Anthos enterprise solution, in my opinion, is Anthos Config Management (ACM). You set up a Git repo, connect your clusters to it, and they standardize their configs and prevent drift in a GitOps manner. This is especially important for large enterprises with hundreds to thousands of clusters under management in various hosting locations.

Inspired by ACM, I wondered if I could recreate that type of functionality using another GitOps solution, Argo CD. I’m pleased to share that it worked as expected: when I made changes to config files in the Git repo, they were applied to both clusters seamlessly.

The Setup

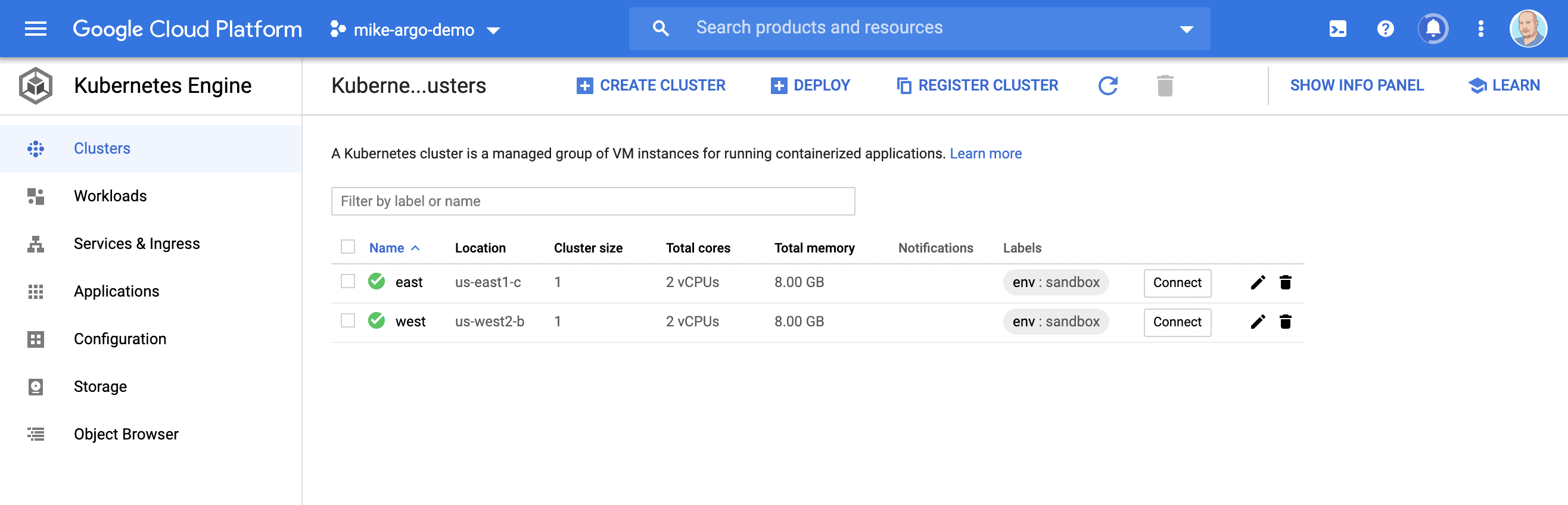

For simplicity, I created two clusters on Google Cloud’s managed Kubernetes service, GKE, in two separate regions to simulate East and West scenarios. Of course, you could install Argo CD on clusters anywhere, as long as they can access your Git repo.

I created the following shell script to bootstrap everything; however, for production use, I’d suggest managing the infrastructure using Terraform if possible.

https://blog.doit-intl.com/media/6292479e6e6dc04fc573e6daf86235dc
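The embedded script is not reproduced in this text, so the following is only a minimal sketch of what such a bootstrap could look like, not the actual script. It assumes the cluster names and zones that appear in the Cleanup section (west in us-west2-b, east in us-east1-c) and installs Argo CD from the upstream stable manifest. The `run` helper and the `DRY_RUN` variable are hypothetical conveniences that print each command instead of executing it.

```shell
# Sketch only: bootstrap one GKE cluster and install Argo CD on it.
# DRY_RUN defaults to 1, so commands are printed rather than executed.
DRY_RUN="${DRY_RUN:-1}"

# run: print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# bootstrap_cluster NAME ZONE
bootstrap_cluster() {
  name="$1"
  zone="$2"
  # Create the cluster and point kubectl at it.
  run gcloud container clusters create "$name" --zone "$zone"
  run gcloud container clusters get-credentials "$name" --zone "$zone"
  # Install Argo CD into its own namespace from the upstream manifest.
  run kubectl create namespace argocd
  run kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml
}

bootstrap_cluster west us-west2-b
bootstrap_cluster east us-east1-c
```

Running it as-is only prints the eight commands. Setting `DRY_RUN=0` would execute them against your current gcloud project, which creates billable resources.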

Bootstrapped Clusters

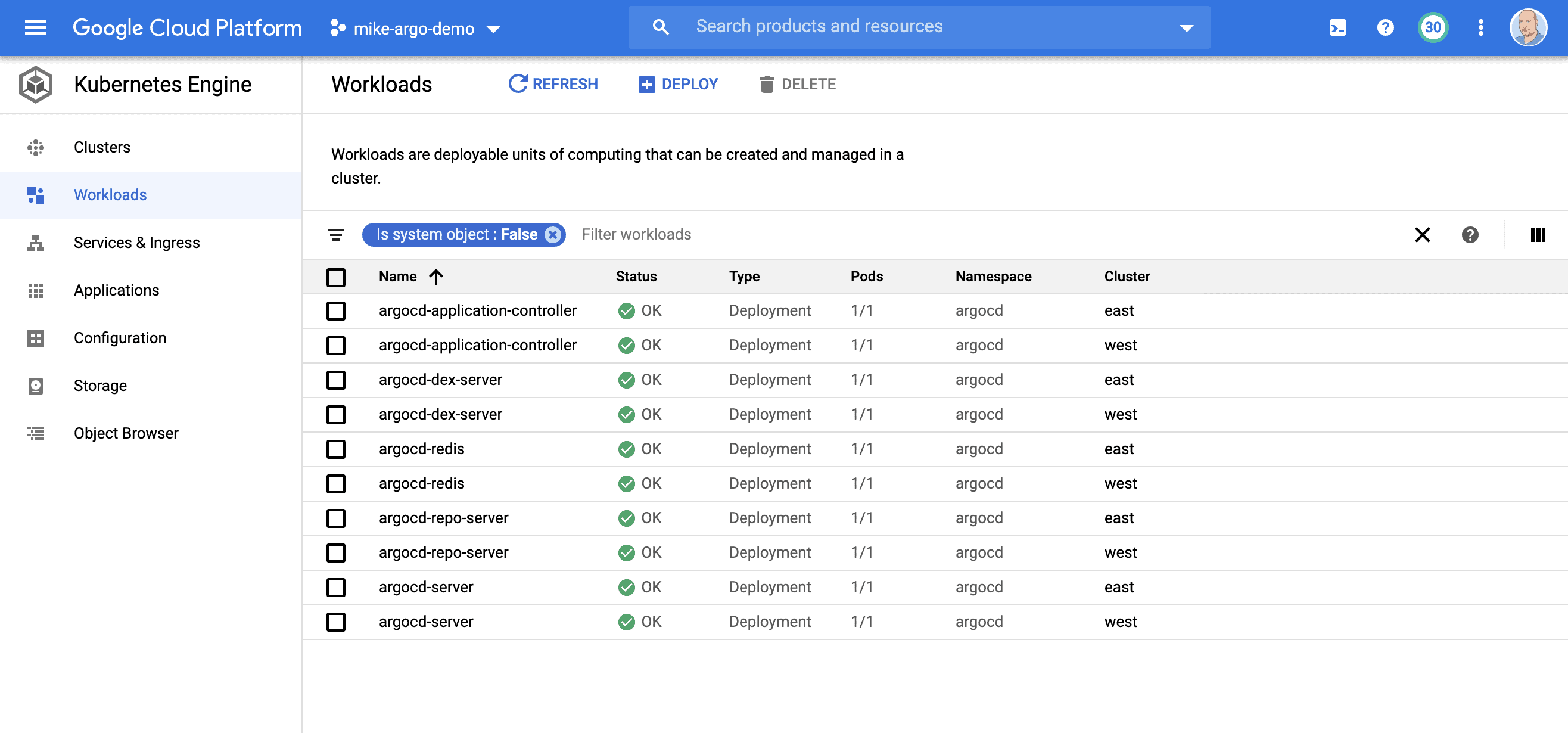

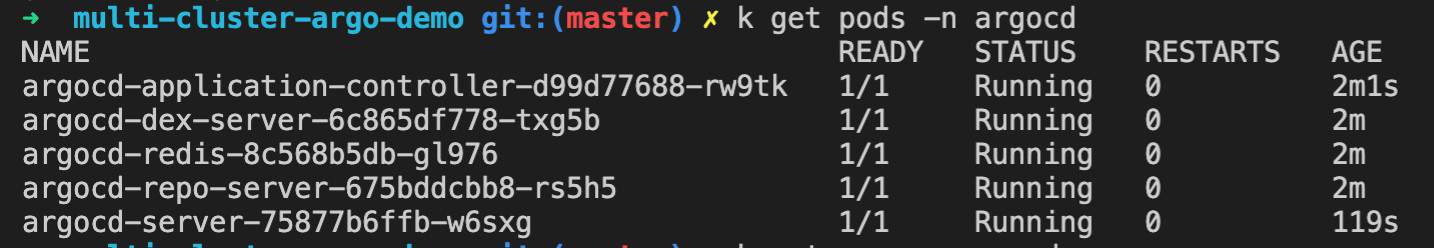

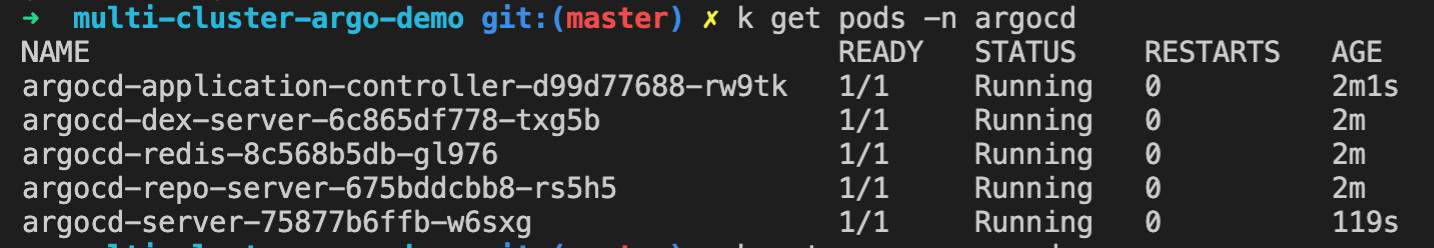

Within 8–10 minutes both clusters were active and Argo CD workloads were deployed.

App of Apps

What’s unique about this setup is that I also installed Argo CD on each cluster with an initial Application, using the App of Apps pattern, that points to my GitHub repository. This makes it easy to add any number of configs to a repo in the future and to customize the clusters or the apps you deploy to them.

https://blog.doit-intl.com/media/47b8ebc5c647ae2d92d7b27232562fb1
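As a rough illustration of that initial Application, the sketch below renders a root manifest that watches the applications/ folder. The Application name `applications`, the path, and the repository come from this post. The `project`, `targetRevision`, destination, and `syncPolicy` values are assumptions, and `render_app_of_apps` is a hypothetical helper name.

```shell
# Sketch only: render the root "App of Apps" Application manifest.
# REPO_URL is inferred from the repository named in this post; project,
# targetRevision, destination, and the sync policy are assumed values.
REPO_URL="${REPO_URL:-https://github.com/mikesparr/multi-cluster-argo-demo.git}"

render_app_of_apps() {
  cat <<EOF
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: applications
  namespace: argocd
spec:
  project: default
  source:
    repoURL: ${REPO_URL}
    targetRevision: HEAD
    path: applications
  destination:
    server: https://kubernetes.default.svc
    namespace: argocd
  syncPolicy:
    automated:
      prune: true
      selfHeal: true
EOF
}

# Print the manifest; pipe it to "kubectl apply -f -" to install it.
render_app_of_apps
```

Applying the same manifest to every cluster is what lets each one pull its configuration from the same place.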

Note that automated sync is purely optional. If the number of clusters were massive, I would recommend it so that your clusters self-heal and correct drift on their own. One downside, however, is that Argo CD’s rollback feature does not work on an application while auto-sync is enabled.
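To make that trade-off concrete, here is a small sketch that prints the `argocd` CLI command for switching an application between automated and manual sync. It assumes the `argocd` CLI is installed and logged in to the server. `sync_command` is a hypothetical helper that only echoes the command, so nothing is changed until you run the printed line yourself.

```shell
# Sketch only: build the argocd CLI command that switches an app's sync mode.
# sync_command APP MODE   (MODE is "auto" or "manual")
sync_command() {
  app="$1"
  mode="$2"
  case "$mode" in
    auto)
      # Automated sync with self-healing to correct drift.
      echo "argocd app set $app --sync-policy automated --self-heal"
      ;;
    manual)
      # Manual sync keeps "argocd app rollback" available.
      echo "argocd app set $app --sync-policy none"
      ;;
    *)
      echo "unknown mode: $mode" >&2
      return 1
      ;;
  esac
}

sync_command applications auto
sync_command applications manual
```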

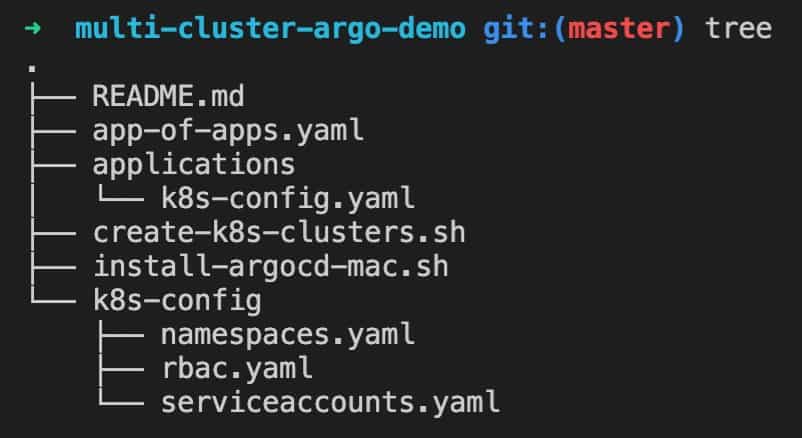

The applications/ folder (path) has one file in it (for now), k8s-config.yaml, which is yet another Argo CD app that points to another folder containing our Kubernetes configs.

https://blog.doit-intl.com/media/7ecd04e3ea088db7da7eaf3abc96d105

The k8s-config/ folder (path) contains all the YAML files we want applied to our Kubernetes clusters. If you have a lot of files to organize into subfolders, you can optionally declare the app so that it applies configs recursively.
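A child app with recursion turned on might look roughly like the output of the sketch below. The `directory.recurse` field is part of the Argo CD Application spec. The `project`, `targetRevision`, and destination values are assumptions rather than the contents of the real k8s-config.yaml, and `render_child_app` is a hypothetical helper.

```shell
# Sketch only: render a child Application that points at a folder of manifests.
# REPO_URL is inferred from the repository named in this post.
REPO_URL="${REPO_URL:-https://github.com/mikesparr/multi-cluster-argo-demo.git}"

# render_child_app NAME PATH [RECURSE]
#   RECURSE is "true" or "false" (default "false").
render_child_app() {
  app_name="$1"
  app_path="$2"
  recurse="${3:-false}"
  cat <<EOF
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: ${app_name}
  namespace: argocd
spec:
  project: default
  source:
    repoURL: ${REPO_URL}
    targetRevision: HEAD
    path: ${app_path}
    directory:
      recurse: ${recurse}
  destination:
    server: https://kubernetes.default.svc
    namespace: default
EOF
}

# Render the k8s-config app with recursive directory scanning enabled.
render_child_app k8s-config k8s-config true
```

Saving that output as a file under applications/ is how the root app would discover it on its next sync.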

Source Code Repository

For my experiment I published a source code repository on GitHub at mikesparr/multi-cluster-argo-demo with the following directory structure.

Everything in this example is within a single repository but you could separate concerns by using different repositories and granting different teams permissions to edit them.

Argo UI

From the command line you can port-forward to the argocd-server service:

kubectl -n argocd port-forward svc/argocd-server 8080:443

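In your browser, visit http://localhost:8080 and, when prompted, accept the security exception (the server’s certificate is self-signed). TIP: in Argo CD releases before 1.9, you log in by default with the username admin and, as the password, the full name of the argocd-server pod (newer releases store a generated initial password in the argocd-initial-admin-secret Secret instead):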

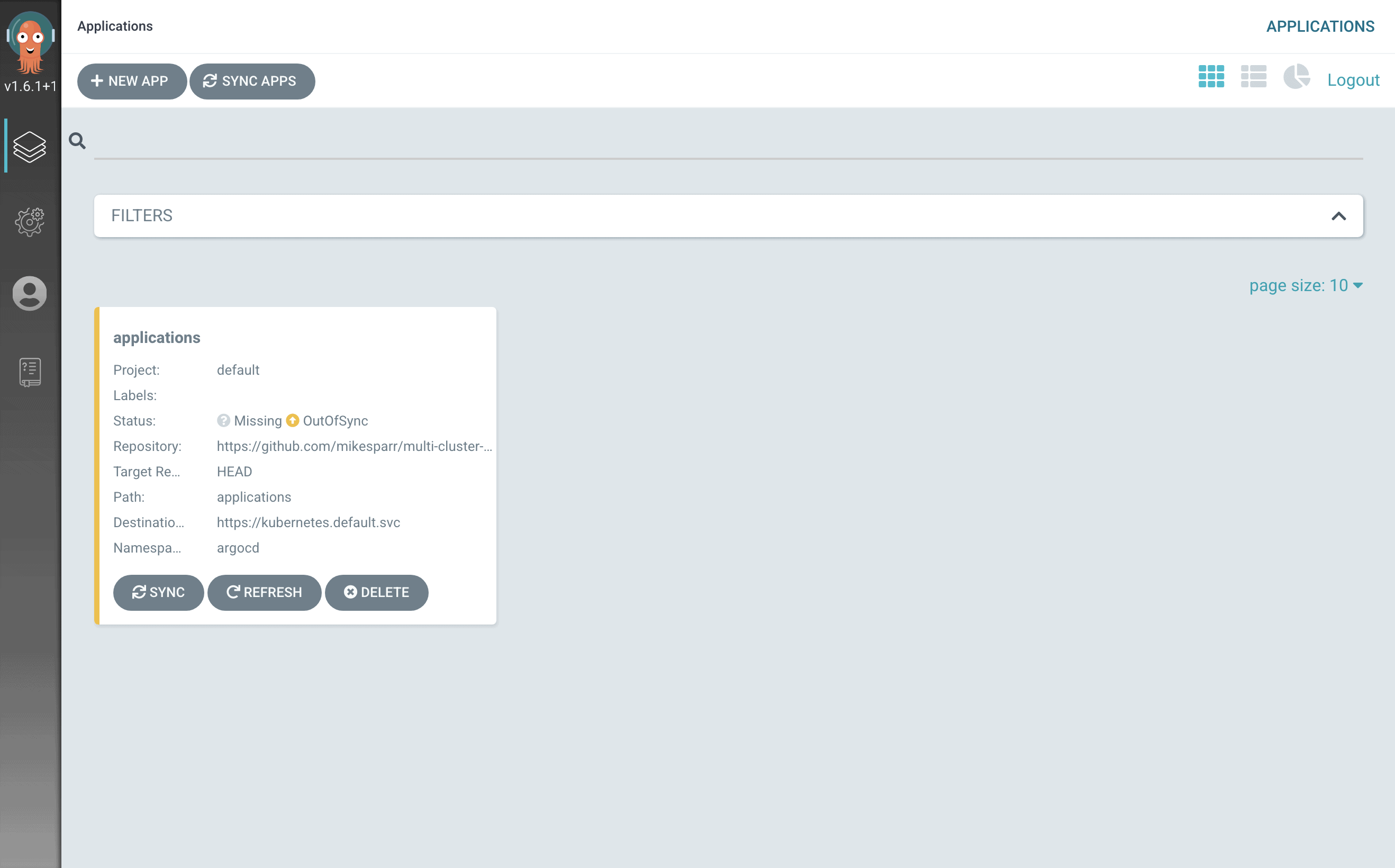

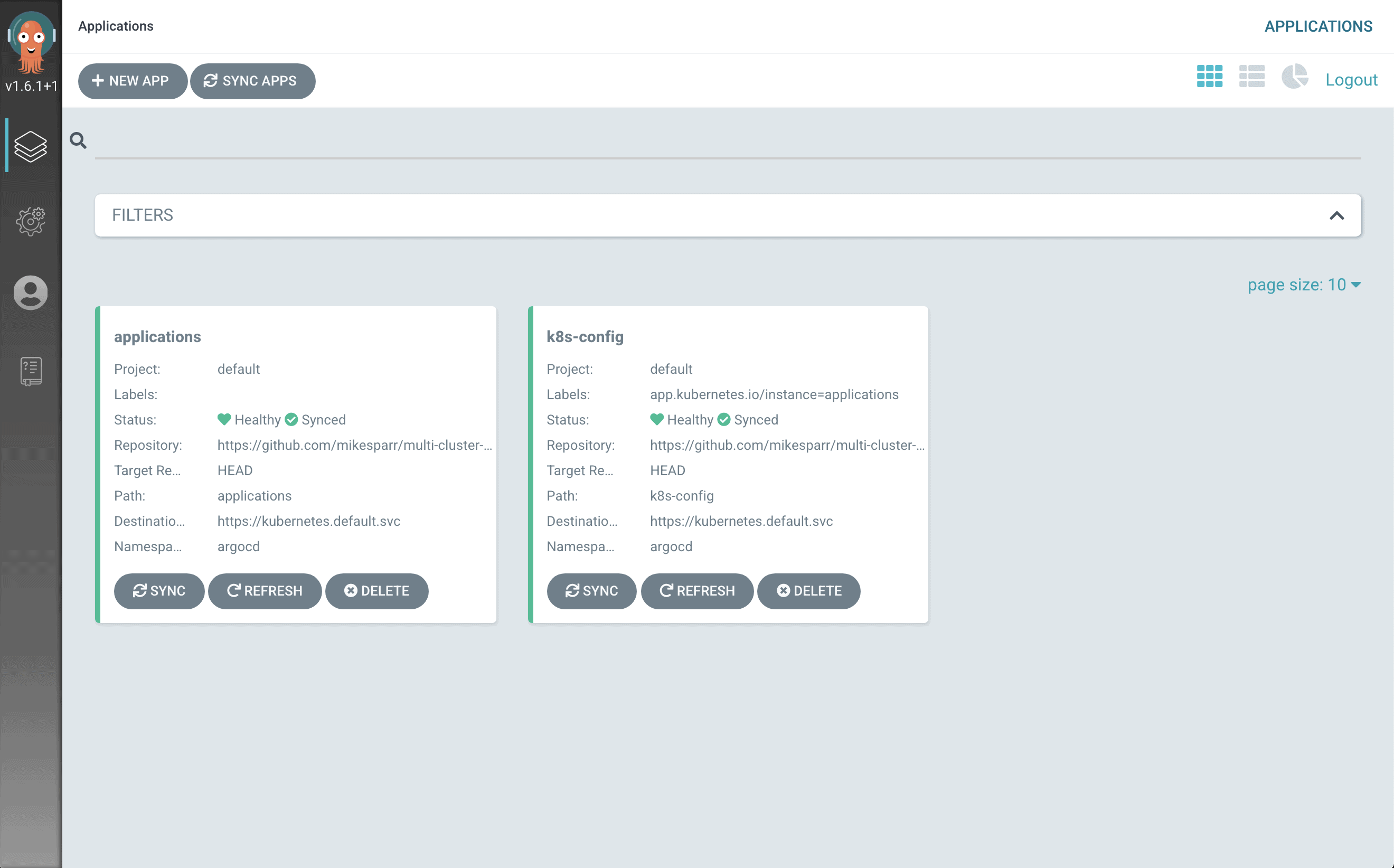
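For reference, the initial admin password can be looked up from the command line. The sketch below shows two variants: the pod-name approach described in the tip, and the Secret-based approach used by Argo CD 1.9 and later. The function names are hypothetical, and both functions assume your kubectl context already points at the cluster.

```shell
# Sketch only: two ways to look up the initial Argo CD admin password.

# Older releases (before 1.9): the password is the argocd-server pod name.
legacy_admin_password() {
  kubectl -n argocd get pods -l app.kubernetes.io/name=argocd-server -o name | cut -d/ -f2
}

# Newer releases: the password is stored base64-encoded in a generated Secret.
initial_admin_password() {
  kubectl -n argocd get secret argocd-initial-admin-secret -o jsonpath='{.data.password}' | base64 -d
}
```

Call whichever function matches your Argo CD version and paste the result into the login form.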

After your App of Apps (applications) syncs, it will recognize your first app, k8s-config.

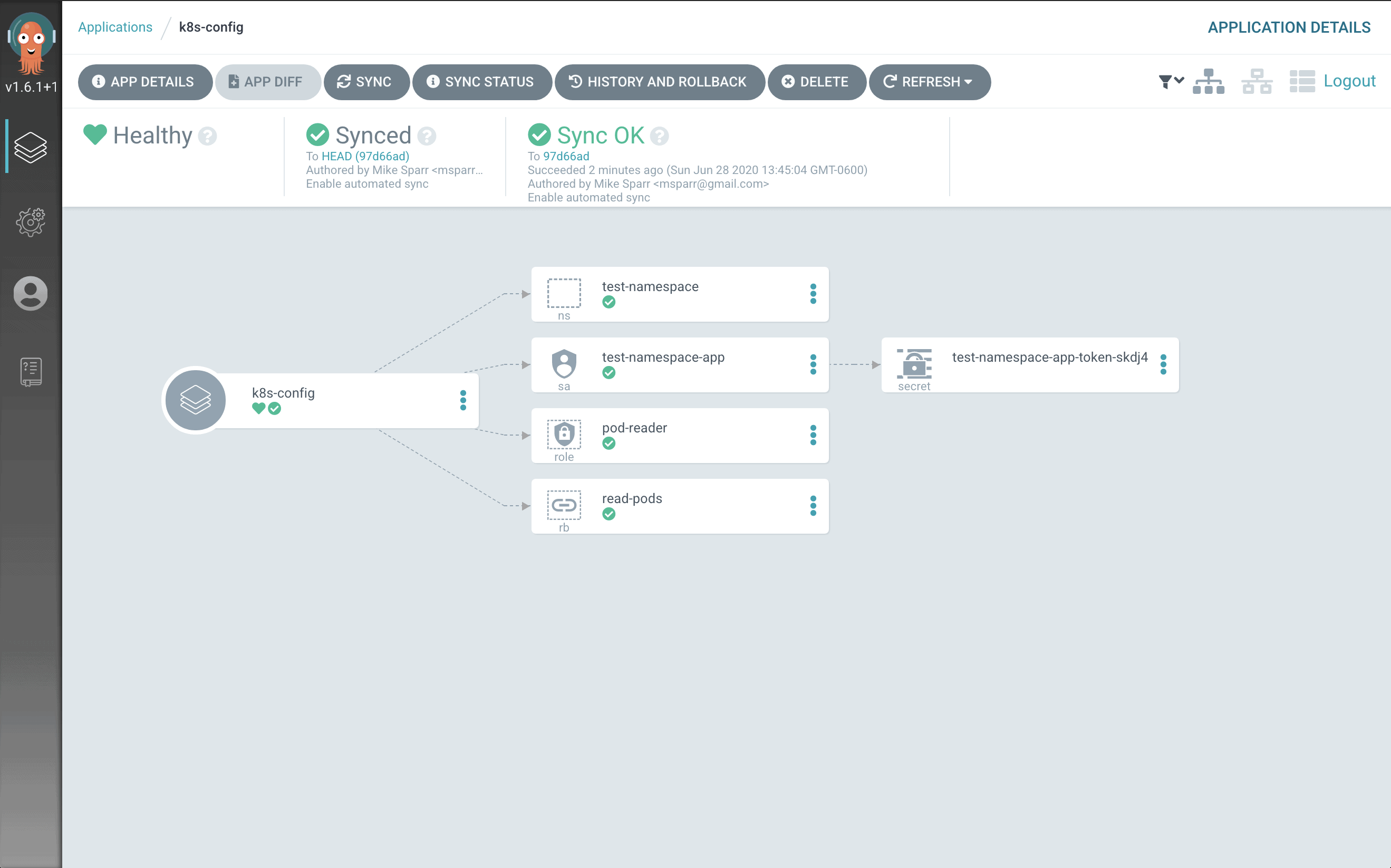

If you click on the k8s-config app panel, you can see a detailed view of everything it installed on the cluster.

Confirming Cluster Configs

Switch your kubectl context to each cluster in turn and check the namespaces, serviceaccounts, roles, and rolebindings for test-namespace. You should see them all installed on both clusters. Congratulations!
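That check can be scripted instead of switching contexts by hand. The following is a minimal sketch: `verify_cluster` is a hypothetical helper, and the context names in the usage comment are placeholders for whatever `kubectl config get-contexts` reports for your two clusters.

```shell
# Sketch only: list the synced resources in one cluster.
# verify_cluster CONTEXT
verify_cluster() {
  ctx="$1"
  echo "== $ctx =="
  # The namespace itself is cluster-scoped.
  kubectl --context "$ctx" get namespace test-namespace
  # The remaining resources live inside test-namespace.
  kubectl --context "$ctx" -n test-namespace get serviceaccounts,roles,rolebindings
}

# Usage (context names are placeholders):
#   for ctx in west-context east-context; do verify_cluster "$ctx"; done
```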

Infinite Potential

Imagine you want to add an API Gateway to your stack and decide on Ambassador or Kong, both of which are configured with CRDs and YAML. You could simply add another folder or repo, then another app YAML within the applications/ folder, and Argo CD would automatically install and configure it for you.

For each application the engineering team publishes, they could edit the Docker image version in a Deployment manifest and create a pull request for the change, which gives you built-in manual judgments and separation of duties. Once the PR is merged, Argo CD will deploy the change to the clusters and environments that track that repo.
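The image-bump step in that workflow could be as small as the sketch below. `bump_image_tag` is a hypothetical helper, and the file path, image name, and branch name in the usage comment are made-up examples rather than anything from the demo repository.

```shell
# Sketch only: rewrite "image: IMAGE:<old tag>" to "image: IMAGE:TAG" in a manifest.
# bump_image_tag FILE IMAGE TAG
bump_image_tag() {
  file="$1"
  image="$2"
  tag="$3"
  tmp="$(mktemp)"
  # Use "|" as the sed delimiter because image names contain "/".
  sed -E "s|(image:[[:space:]]*${image}):[^[:space:]]+|\1:${tag}|" "$file" > "$tmp"
  mv "$tmp" "$file"
}

# Usage (hypothetical names):
#   git checkout -b bump-web-1.1.0
#   bump_image_tag k8s-config/web-deployment.yaml gcr.io/example/web 1.1.0
#   git commit -am "Bump web to 1.1.0"
#   git push -u origin bump-web-1.1.0   # then open a pull request for review
```

Because the change only reaches the clusters after the pull request is merged, the review step doubles as the deployment approval.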

Another use case would be to support multi-cloud deployments and balance your traffic with DNS for a true active-active configuration. Yet another use case may be to migrate from one cloud to another.

I look forward to playing around with more possibilities and hope you enjoyed another way to keep clusters in sync across various environments.

Cleanup

If you used the script and/or repository, please don’t forget to clean up and remove your resources to avoid unnecessary billing. The simplest way is to delete your clusters using the commands below (or to delete your project).

gcloud container clusters delete west --zone us-west2-b

gcloud container clusters delete east --zone us-east1-c
