Guest post by KubeEdge Maintainers

Service Architecture Evolution

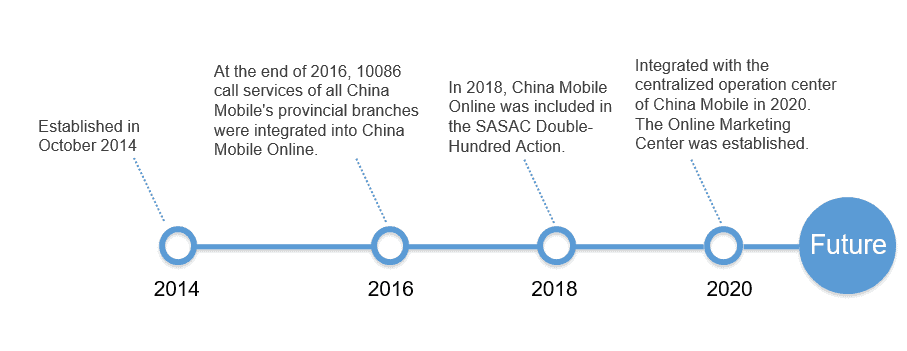

- Introduction to China Mobile Online Marketing Service Center

The Center is a second-level unit of China Mobile Communications Group that operates and manages online service resources and channels. It runs the world's largest call center, with 44,000 agents, 53 customer service centers, and 900 million users, and is a leader in customer service technologies and services.

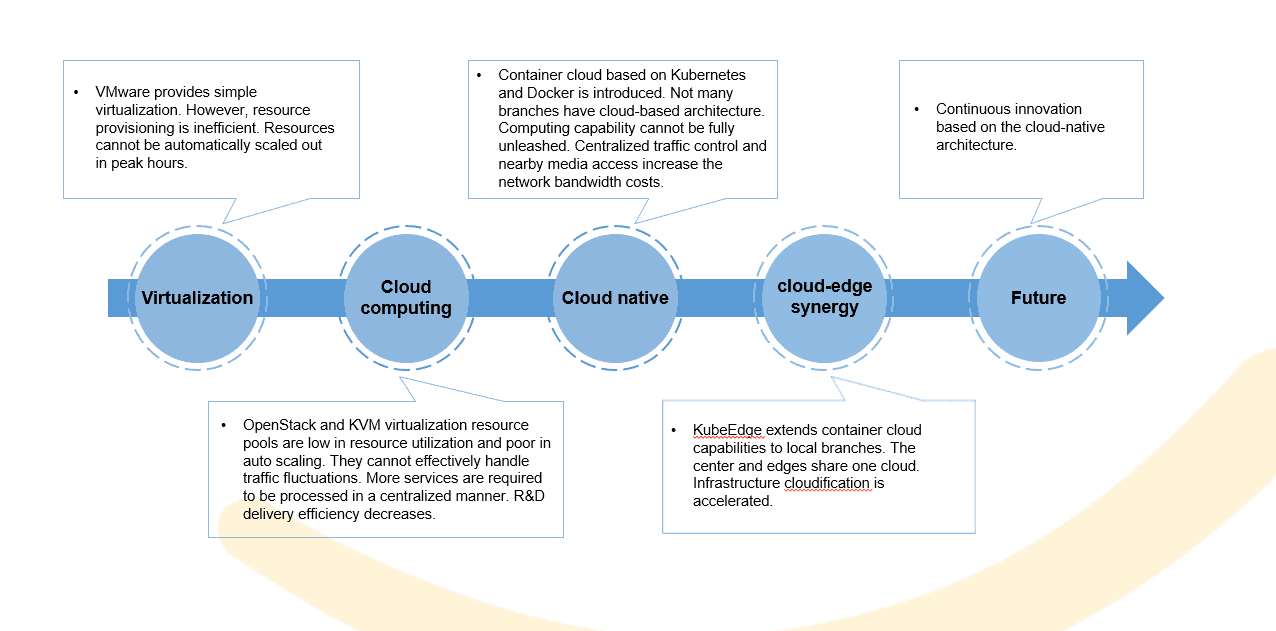

- Way to Cloud Native

Cloud-Edge Synergy Solution Selection

- Service requirements and pain points

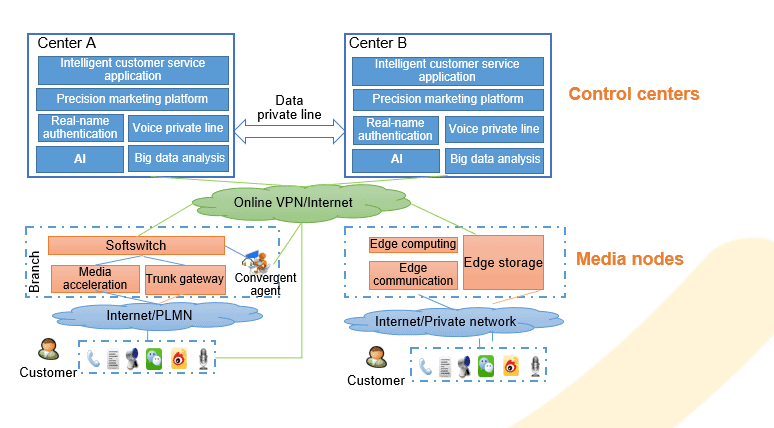

The converged customer service system features centralized traffic control and nearby media access. In this architecture, a small number of servers need to be deployed in the equipment rooms of each provincial branch. A star topology is formed, with two centers at its core and 31 provincial branches as access points. In addition, multimedia service systems such as video customer service and the all-voice portal need to be deployed in local branches to ensure low network latency, faster streaming data transmission, and a better user experience. The branch resource pools have three pain points:

- Pain point 1: Slow service response. Branch service systems are silos that do not share server pools, so they cannot scale out quickly during service spikes. This results in poor customer experience and reduced stability.

- Pain point 2: Inefficient O&M. Services deployed in local branches are maintained manually, which is error-prone and inefficient.

- Pain point 3: Low resource utilization. Local branch servers are mainly based on traditional virtualization, so computing power cannot be aggregated and resource utilization is low. Inefficient and redundant resources need to be consolidated and reused.

- Cloud-Edge Synergy Solution Selection (1)

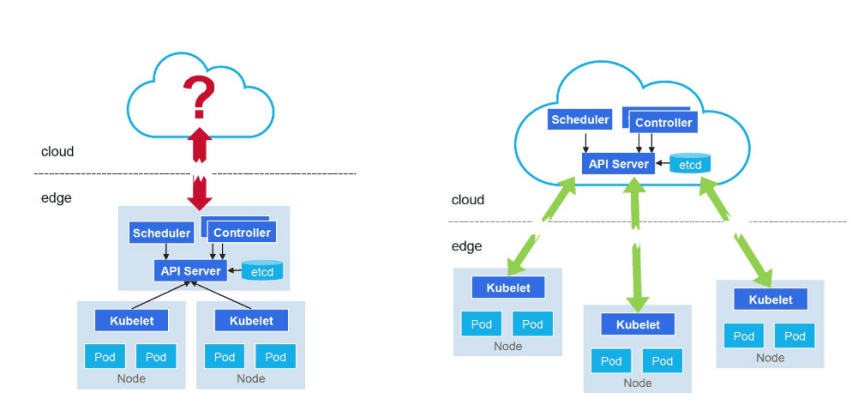

How should we manage the nodes deployed in local branches, that is, the nodes at the edge? The edge can be deployed either as clusters or as individual nodes.

Deploying an edge cluster means deploying a full set of management components alongside each cluster. This incurs a large resource overhead, which is undesirable, especially for services that are expected to deliver high performance and availability.

Deploying edge nodes requires far fewer resources than deploying an edge cluster. The nodes are managed by a Kubernetes master in the cloud, so services at the edge can be controlled directly from the cloud. This solution inherently achieves cloud-edge synergy. Two capabilities were required before we could settle on this solution. The first is offline autonomy, which makes the architecture resilient. The edge may be disconnected from the cloud or poorly connected to it; in that case, kubelet (or the equivalent edge component) must keep running and be able to start service pods, and pods must come back up after the host restarts even when the connection is poor. The second is that the edge nodes must be fully compatible with Kubernetes APIs.

Because each local branch has only a small number of nodes, managing them as edge nodes incurs less resource overhead. Therefore, we chose the edge node solution.

- Cloud-Edge Synergy Solution Selection (2)

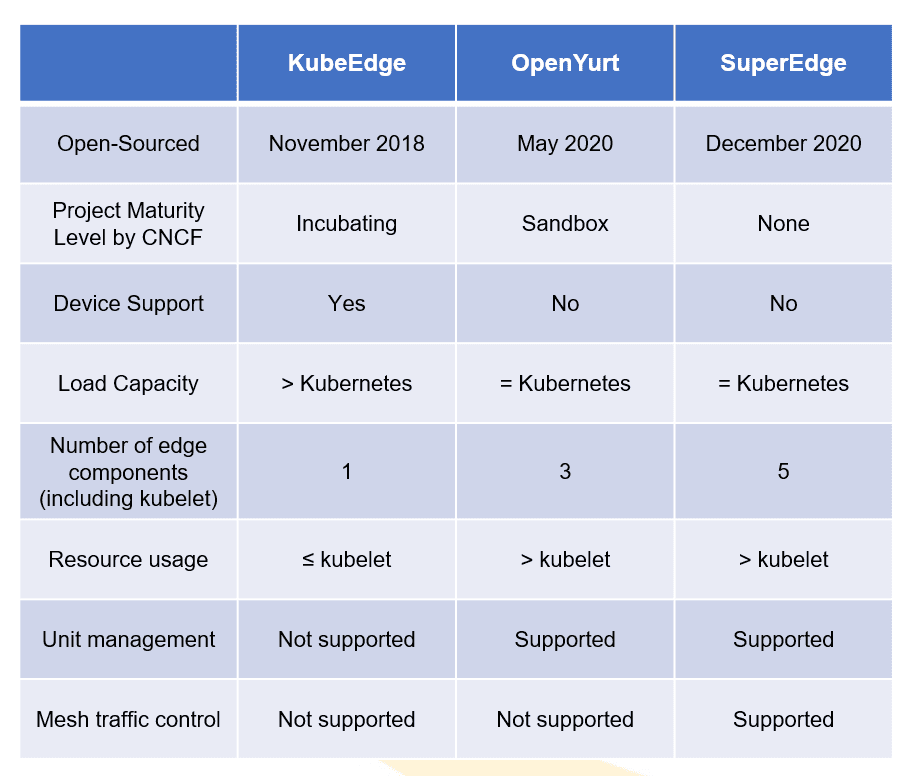

KubeEdge, OpenYurt, and SuperEdge are the mainstream open-source projects for edge nodes.

We evaluated and compared them in three aspects:

- Project maturity: KubeEdge and OpenYurt have both been donated to CNCF, the former as an incubating project and the latter as a sandbox project. SuperEdge has not been donated to CNCF.

- Cluster load capability: KubeEdge deeply customizes the Kubernetes list-watch mechanism, with CloudCore managing the cloud side and EdgeCore managing the edge side. As a result, KubeEdge supports a large number of edge nodes, which matters because more edge nodes will be deployed to meet ever-increasing service requirements.

We also considered the number of edge components: the more components there are, the harder O&M becomes. Counting the edge components, kubelet included, KubeEdge requires only one (EdgeCore, which embeds kubelet functionality), OpenYurt three, and SuperEdge five. KubeEdge leads on this criterion.

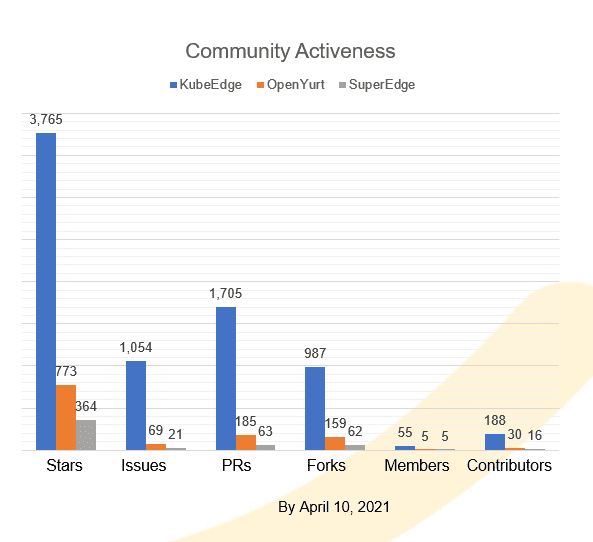

- Community activeness: On GitHub, KubeEdge has far more stars, PRs, and issues than the other two projects. Taken together, these factors led us to choose KubeEdge for the architecture.

- Cloud-Edge Synergy Framework

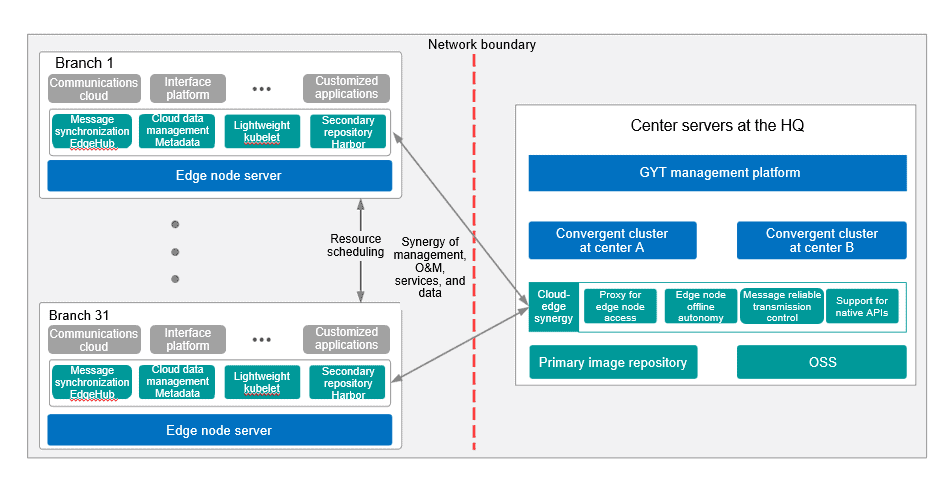

A cloud-edge synergy architecture consisting of two centers and multiple edges is built on KubeEdge. It extends the container cloud's computing capabilities to the edge nodes in local branches, enabling unified resource scheduling and management.

- Centralized management

Cluster management by a unified container management platform

- Edge management

Edge node management based on KubeEdge

- Offline autonomy

Optimized offline autonomy by adding an edge proxy to EdgeCore

- CI/CD

Higher service release efficiency by using the CI/CD capabilities of the management platform

- Cloud-edge synergy

A platform for data, management, and O&M synergy

Problems and Solutions in Practice

- Edge Networking

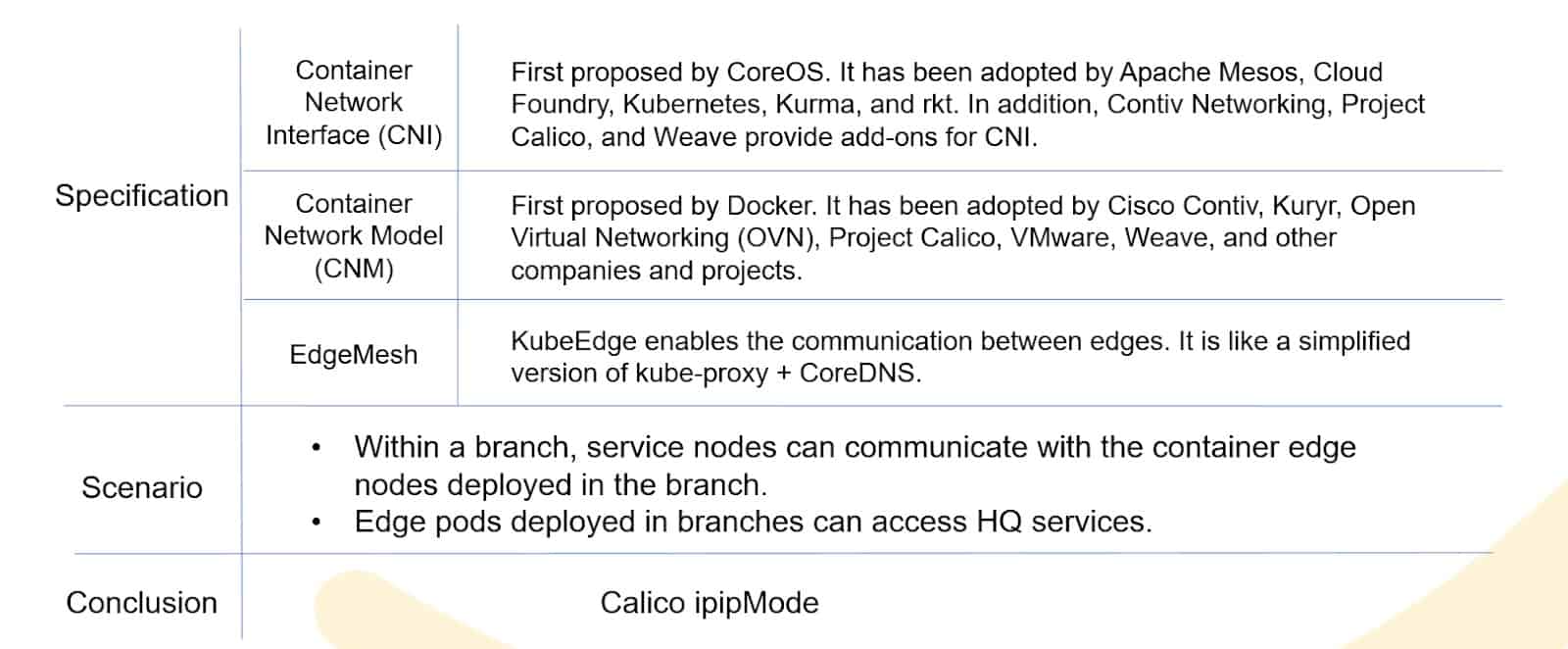

Several networking models are available for edge nodes. We chose the model shown in the figure above so that local traffic is processed within local branches.

- Edge Resource Management

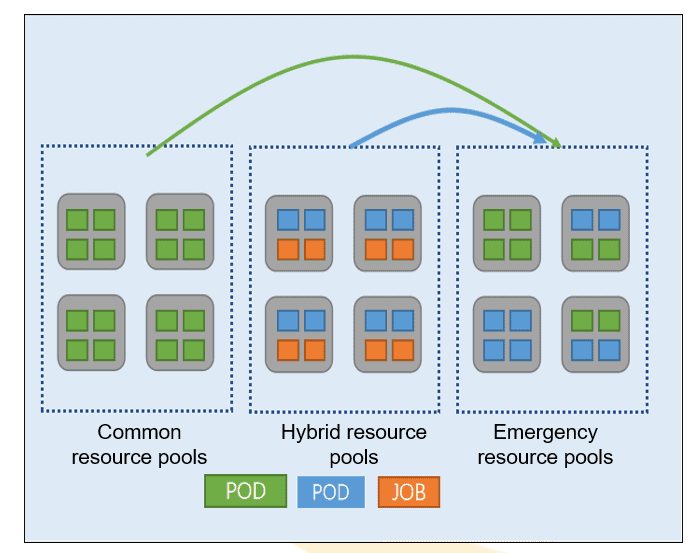

Normally, quotas are used to allocate cluster resources to tenants. However, this mechanism can cause severe resource fragmentation. It does not fit the elastic scaling capability of the container cloud and is not suitable for large-scale enterprise applications. Kubernetes taints are used to address this problem: multiple resource pools are built to suit different services, as shown below:

Resource pools:

- Taints: Taints are used to partition a cluster into multiple resource pools.

- Scheduling: Multiple types of taints are defined, each with a different priority. These taints determine the scheduling priority of each resource pool.
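The article does not show its scheduling rules, so the following is only a minimal sketch of how taint-based pools with priorities could behave. It assumes each node carries one NoSchedule taint whose value names its pool (the key `example.com/pool` and the pool names `tenant`, `shared`, and `emergency` are hypothetical), and that pods tolerate one or more pools. In plain Kubernetes, taints only filter nodes; the cross-pool ordering below is an assumed scoring step that a real cluster would implement with something like preferred node affinity or a scheduler plugin.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set

# Hypothetical taint key and pool order; lower number = tried first.
POOL_TAINT_KEY = "example.com/pool"
POOL_PRIORITY = {"tenant": 0, "shared": 1, "emergency": 2}


@dataclass
class Node:
    name: str
    pool: str        # value of the NoSchedule taint POOL_TAINT_KEY=<pool>
    free_cpu: float  # unallocated CPU cores


@dataclass
class Pod:
    name: str
    cpu: float
    tolerated_pools: Set[str] = field(default_factory=set)


def tolerates(pod: Pod, node: Node) -> bool:
    """Filter step: a tainted node only accepts pods that tolerate its pool."""
    return node.pool in pod.tolerated_pools


def schedule(pod: Pod, nodes: List[Node]) -> Optional[str]:
    """Place the pod, preferring higher-priority pools, then the emptiest node."""
    candidates = [n for n in nodes if tolerates(pod, n) and n.free_cpu >= pod.cpu]
    if not candidates:
        return None  # pending: no tolerated pool has room
    best = min(candidates, key=lambda n: (POOL_PRIORITY[n.pool], -n.free_cpu))
    best.free_cpu -= pod.cpu  # reserve capacity on the chosen node
    return best.name


if __name__ == "__main__":
    nodes = [Node("a", "tenant", 2), Node("b", "shared", 8), Node("c", "emergency", 16)]
    any_pool = {"tenant", "shared", "emergency"}
    print(schedule(Pod("web-1", 2, any_pool), nodes))      # a: tenant pool first
    print(schedule(Pod("web-2", 2, any_pool), nodes))      # b: tenant pool is full
    print(schedule(Pod("big", 20, {"emergency"}), nodes))  # None: nothing fits
```

Because every tenant draws from shared pools in priority order instead of holding a private quota, spare capacity stays pooled, which is the fragmentation argument made above.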

Advantages:

- Higher resource utilization: Tenants do not need to reserve redundant resources, and resource fragmentation is reduced.

- Quick emergency response: An emergency resource pool centralizes spare computing capacity, so ample capacity can be made available quickly when emergencies occur.

- Edge Service Access | Deploying Capabilities at the Edge

KubeEdge extends container cloud computing capabilities to the edge. EdgeMesh provides simple service exposure but does not support layer-7 load balancing. Therefore, some capabilities that normally run in the cloud need to be deployed at the edge.

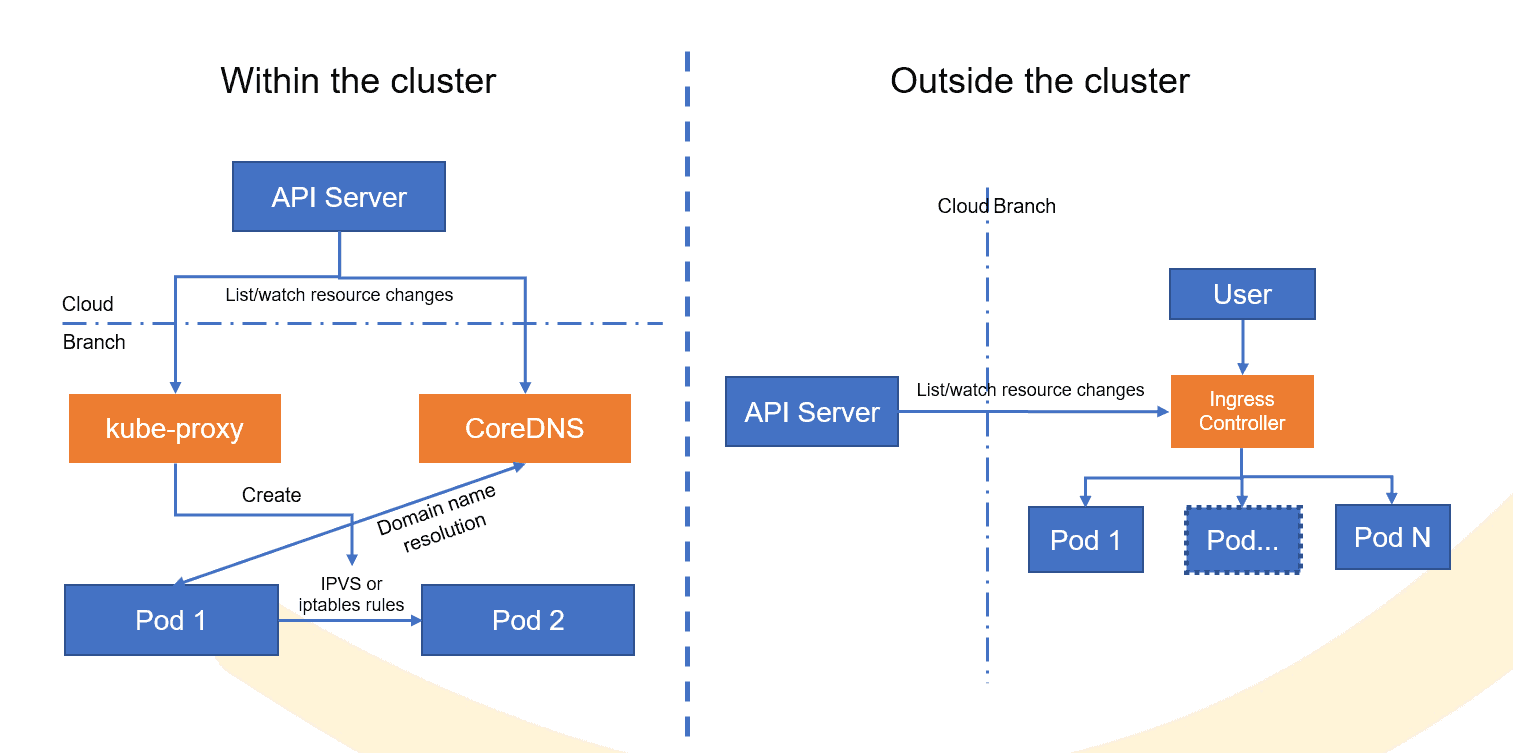

Two kinds of traffic need to be handled: intra-cluster traffic and external traffic. For intra-cluster traffic, kube-proxy and CoreDNS, which originally ran in the cloud, are moved to the edge. When pod1 talks to pod2, pod1 resolves the service name through CoreDNS using the branch's dedicated domain name. Once CoreDNS returns the cluster IP address, the iptables or IPVS rules generated by kube-proxy forward the traffic to pod2.

For external traffic, a customized Ingress controller is deployed for service exposure.
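To make the forwarding step concrete: in iptables mode, kube-proxy writes one rule per endpoint into the Service's chain and uses the `statistic` match in random mode, so the first rule matches with probability 1/n, the next with 1/(n-1), and so on until the last rule, which always matches. The net effect is an even spread of new connections. The sketch below models only that arithmetic in Python; it does not touch iptables, the endpoint addresses are made up, and the CoreDNS lookup that precedes it is not modeled. (IPVS mode instead relies on the kernel IPVS scheduler, round-robin by default.)

```python
import random
from fractions import Fraction
from typing import List


def rule_probabilities(n: int) -> List[Fraction]:
    """Match probability of each per-endpoint rule, evaluated top to bottom.

    Rule i matches with probability 1/(n - i); the last rule always matches.
    """
    return [Fraction(1, n - i) for i in range(n)]


def effective_shares(n: int) -> List[Fraction]:
    """Overall share of new connections that each endpoint receives."""
    remaining = Fraction(1)  # probability that no earlier rule has matched
    shares = []
    for p in rule_probabilities(n):
        shares.append(remaining * p)
        remaining *= 1 - p
    return shares


def pick_endpoint(endpoints: List[str], rng: random.Random) -> str:
    """Walk the rules in order, as a new connection traverses the Service chain."""
    for endpoint, p in zip(endpoints, rule_probabilities(len(endpoints))):
        if rng.random() < p:
            return endpoint
    raise AssertionError("unreachable: the last rule has probability 1")


if __name__ == "__main__":
    pods = ["10.0.1.5:8080", "10.0.1.6:8080", "10.0.1.7:8080"]
    print([str(p) for p in rule_probabilities(len(pods))])  # ['1/3', '1/2', '1']
    print([str(s) for s in effective_shares(len(pods))])    # ['1/3', '1/3', '1/3']
    rng = random.Random(0)
    counts = {p: 0 for p in pods}
    for _ in range(30000):
        counts[pick_endpoint(pods, rng)] += 1
    print(counts)  # roughly 10000 each
```

The decreasing per-rule probabilities are what turn a sequential rule list into uniform load balancing without any shared state.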

- Edge Service Access | Service Grouping and Traffic Distribution

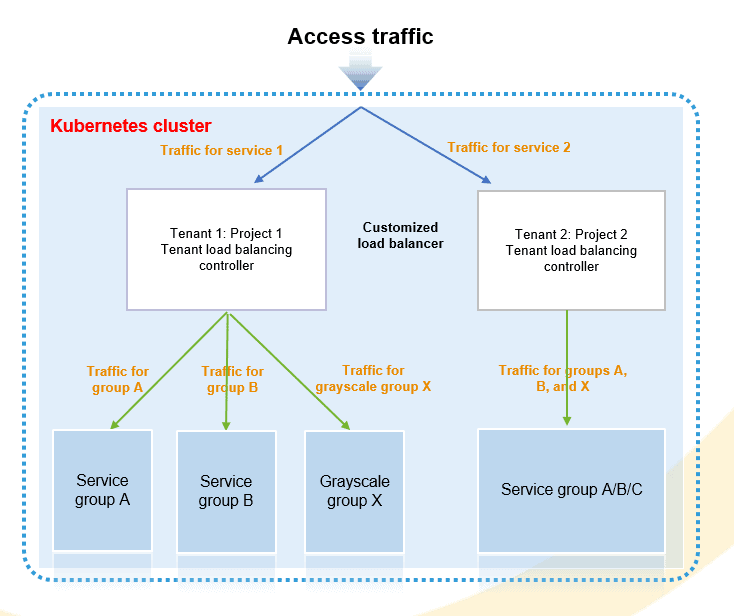

- Traffic isolation: To handle very large traffic volumes and isolate traffic, multiple Ingress controllers are defined, and each is allocated to one or more tenants.

- Group-based deployment: Services can be deployed in multiple groups. This isolates application faults and limits their impact.

- Grayscale release: To collect user feedback early and limit the impact of application upgrades, requests from a specific group of users are routed to a grayscale version. Product features are released step by step to reduce risk.

- Service release without interruption: Services are deployed in active/standby mode. During a release, service pods are scaled automatically according to the traffic ratio, so services are released without interruption and without users noticing.
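The article does not say how the grayscale user group is chosen or how replicas follow the traffic ratio. The sketch below shows one plausible approach under stated assumptions: users are hashed into 100 stable buckets (so a user never flips between versions mid-release), an optional hypothetical `pilot_users` list is always sent to the grayscale version, and replicas are split in proportion to the traffic percentage. With an off-the-shelf controller such as ingress-nginx, the routing half is usually expressed with canary annotations instead; the customized controller used here may differ.

```python
import hashlib
from typing import FrozenSet, Tuple


def bucket(user_id: str) -> int:
    """Map a user to a stable bucket in [0, 100)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % 100


def route(user_id: str, gray_percent: int,
          pilot_users: FrozenSet[str] = frozenset()) -> str:
    """Send pilot users and a fixed slice of everyone else to the grayscale version."""
    if user_id in pilot_users or bucket(user_id) < gray_percent:
        return "grayscale"
    return "stable"


def split_replicas(total: int, gray_percent: int) -> Tuple[int, int]:
    """Size the stable and grayscale groups in proportion to their traffic.

    The grayscale share is rounded up so that any non-zero traffic gets a pod.
    """
    gray = min(total, (total * gray_percent + 99) // 100)
    return total - gray, gray


if __name__ == "__main__":
    print(route("user-42", 10), route("vip-1", 0, frozenset({"vip-1"})))
    for percent in (0, 10, 50, 100):
        print(percent, split_replicas(10, percent))  # (10, 0) (9, 1) (5, 5) (0, 10)
```

Raising `gray_percent` step by step widens the grayscale slice while the replica split keeps pod counts in line with the traffic each version receives.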

- Hierarchical Image Repositories

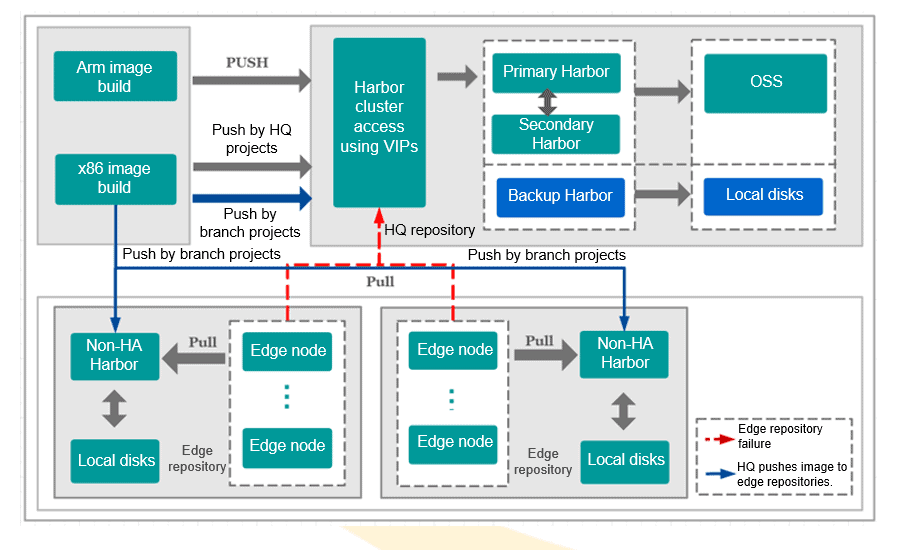

Networks at the edge can be of poor quality. If all nodes pull images from a single cloud image repository, image pulls place a heavy burden on the network. Therefore, a hierarchical architecture is used to relieve this burden and speed up image pulls.

- Center

Active and standby repositories: The active image repository is backed by OSS. The standby repository is deployed in non-HA mode and uses local disks.

HA: The active repository uses Keepalived and a virtual IP (VIP) to provide service.

- Edge

Independent deployment: An independent image repository is deployed at each branch. Edge nodes pull images from the nearest image repository.

HA: Image repositories at the edge are deployed in non-HA mode, but together with the repositories at the centers they form an active/standby mechanism.

- Management

Centralized build: Images are built centrally using the CI capability of the management platform.

Unified push: Branch service images are pushed to the central and edge repositories in a unified manner.
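The pull path described above (nearest branch repository first, then the central active/standby pair) can be sketched as an ordered fallback. This is an illustration only: the host names are hypothetical, and `fetch` stands in for the container runtime's pull. In a real deployment this fallback is normally configured in the runtime rather than coded by hand; for example, containerd's registry host configuration can list several mirrors that are tried in order.

```python
from typing import Callable, List

# Hypothetical registry host names; the article does not give real ones.
CENTER_ACTIVE = "registry-vip.center.example.internal"      # Keepalived VIP
CENTER_STANDBY = "registry-standby.center.example.internal"


def pull_order(branch: str) -> List[str]:
    """Nearest first: the branch's own registry, then the center's active/standby pair."""
    return [f"registry.{branch}.example.internal", CENTER_ACTIVE, CENTER_STANDBY]


def pull_image(image: str, branch: str, fetch: Callable[[str], bytes]) -> str:
    """Try each registry in order and return the reference that succeeded.

    `fetch` is a stand-in for the runtime's pull; it raises ConnectionError
    when a registry is unreachable.
    """
    errors = []
    for registry in pull_order(branch):
        ref = f"{registry}/{image}"
        try:
            fetch(ref)
            return ref
        except ConnectionError as exc:
            errors.append(f"{ref}: {exc}")
    raise RuntimeError("all registries failed: " + "; ".join(errors))


if __name__ == "__main__":
    down = {"registry.branch-a.example.internal"}  # simulate an edge registry outage

    def fake_fetch(ref: str) -> bytes:
        if ref.split("/", 1)[0] in down:
            raise ConnectionError("unreachable")
        return b"layers"

    # Falls back to the central active registry behind the VIP.
    print(pull_image("crm/web:1.4.2", "branch-a", fake_fetch))
```

Keeping the branch registry first means that, in the normal case, image layers never cross the WAN link at pull time.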

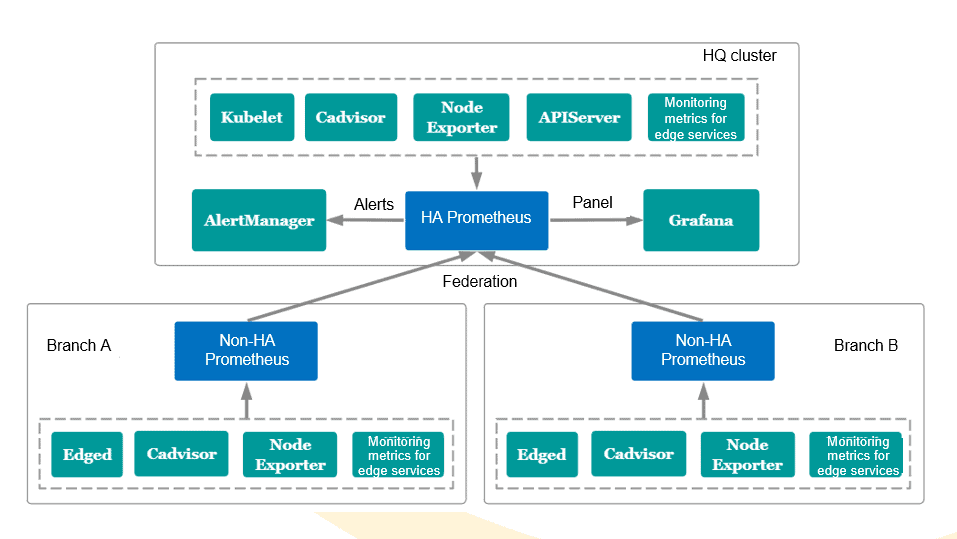

- Federated Monitoring

A center + edge federated monitoring system is built on Prometheus, Alertmanager, and Grafana.

- Federated monitoring

A non-HA Prometheus instance is deployed in each branch and federated with Prometheus in the cloud.

- Unified results display

Data is aggregated in the HQ Prometheus cluster and displayed in unified Grafana dashboards.

- Centralized alarm reporting

Prometheus at HQ analyzes the data and is connected to Alertmanager for centralized alarm reporting.

- Customized metrics

Prometheus at the edge can collect data based on customized metrics.
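Prometheus federation works by having the upper-level server scrape each lower-level server's `/federate` endpoint, passing one or more `match[]` series selectors, usually with `honor_labels: true` so the branch's labels are preserved. The sketch below generates such a scrape job for HQ as a Python dict; JSON is valid YAML, so the printed output could be placed under `scrape_configs` in `prometheus.yml`. The branch addresses, the `branch` label, and the selectors are hypothetical, since the article does not say which series HQ pulls.

```python
import json
from typing import Dict, List
from urllib.parse import urlencode

# Hypothetical branch Prometheus endpoints; replace with real addresses.
BRANCHES = {
    "branch-a": "prometheus.branch-a.example.internal:9090",
    "branch-b": "prometheus.branch-b.example.internal:9090",
}

# Series HQ pulls from every branch (assumed selectors).
MATCHERS = ['{job="kubelet"}', '{__name__=~"branch:.*"}']


def federation_scrape_config(branches: Dict[str, str], matchers: List[str]) -> dict:
    """Build one HQ scrape job that federates every branch Prometheus."""
    return {
        "job_name": "federate-branches",
        "honor_labels": True,          # keep the labels set by the branch
        "metrics_path": "/federate",
        "params": {"match[]": list(matchers)},
        "static_configs": [
            {"targets": [addr], "labels": {"branch": name}}
            for name, addr in sorted(branches.items())
        ],
    }


def federate_url(addr: str, matchers: List[str]) -> str:
    """The URL that HQ Prometheus ends up requesting for one branch."""
    return f"http://{addr}/federate?" + urlencode([("match[]", m) for m in matchers])


if __name__ == "__main__":
    print(json.dumps(federation_scrape_config(BRANCHES, MATCHERS), indent=2))
    print(federate_url(BRANCHES["branch-a"], MATCHERS))
```

Pulling pre-aggregated recording-rule series (the assumed `branch:` prefix) rather than every raw series is a common way to keep the WAN traffic of federation small.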

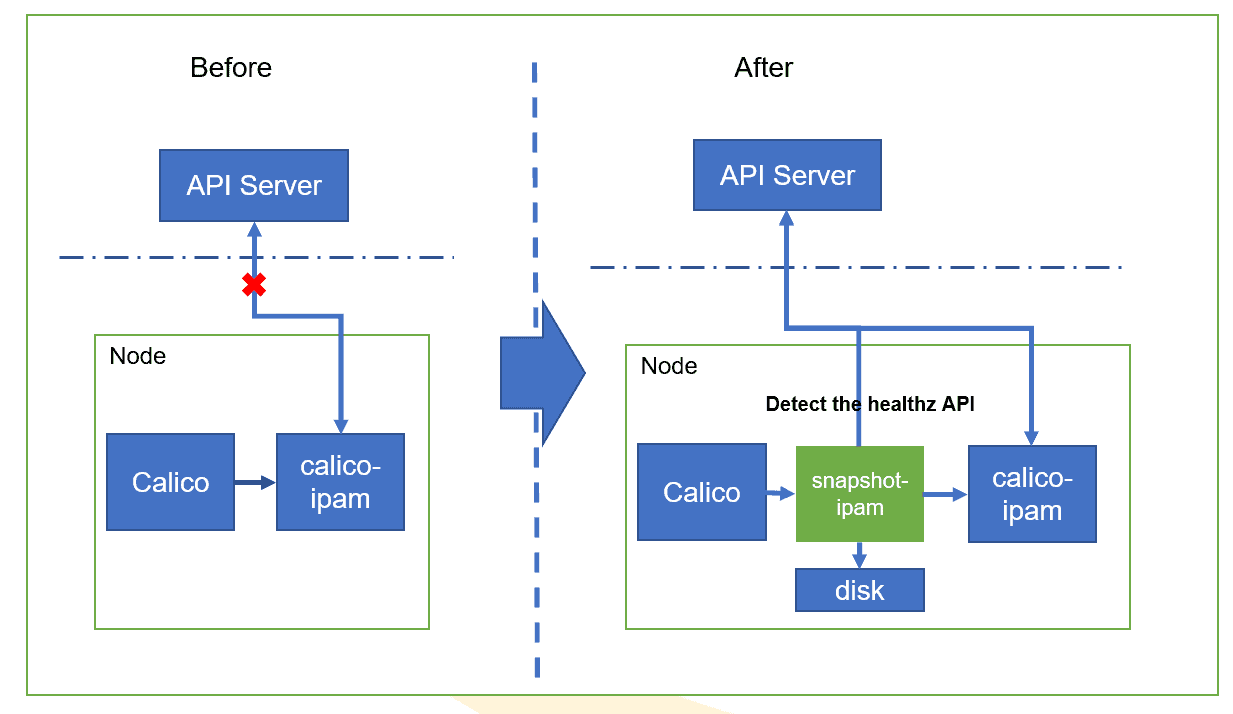

- Offline IP Address Retention

The native CNI components depend on cloud-side capabilities (API Server/etcd), so IP addresses cannot be allocated when a node is offline. IP address snapshots ensure that a pod keeps its original IP address when it is restarted while offline.

- Problem

IP address allocation is controlled by CNI. How can a pod retain its original IP address when it is restarted?

- Solution

An IP snapshot is added to the results returned by CNI. When the network is normal, the IPAM component is called to allocate IP addresses. When the node is offline, the original IP addresses saved on local disk are reused.

- No intrusion

The overall CNI design is not modified, so the mechanism can work flexibly with other CNI implementations.

- Easy deployment

DaemonSets are used to distribute binaries.

- Ease of use

Only simple adjustments to your existing CNI configuration file are required.
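The decision logic of the IP snapshot can be sketched in a few lines. This is a minimal model, not the team's plugin: a real CNI plugin is an executable driven by the `CNI_COMMAND` environment variable and JSON on stdin, and would presumably wrap or chain the existing IPAM plugin (the CNI spec supports chaining through a `.conflist`). Here `ipam_allocate` stands in for that IPAM call and raises `ConnectionError` when the cloud is unreachable; `IPSnapshotStore`, `assign_ip`, and the on-disk layout are hypothetical names.

```python
import json
import os
import tempfile
from typing import Callable, Optional


class IPSnapshotStore:
    """Keeps the last IP handed to each pod on local disk (hypothetical layout)."""

    def __init__(self, directory: str):
        self.directory = directory
        os.makedirs(directory, exist_ok=True)

    def _path(self, pod_key: str) -> str:
        # pod_key is "<namespace>/<name>"; neither part may contain "_".
        return os.path.join(self.directory, pod_key.replace("/", "_") + ".json")

    def save(self, pod_key: str, ip: str) -> None:
        path = self._path(pod_key)
        tmp = path + ".tmp"
        with open(tmp, "w") as f:
            json.dump({"pod": pod_key, "ip": ip}, f)
        os.replace(tmp, path)  # atomic swap: a crash never leaves half a file

    def load(self, pod_key: str) -> Optional[str]:
        try:
            with open(self._path(pod_key)) as f:
                return json.load(f)["ip"]
        except FileNotFoundError:
            return None


def assign_ip(pod_key: str, store: IPSnapshotStore,
              ipam_allocate: Callable[[str], str]) -> str:
    """Online: ask IPAM and snapshot the result. Offline: reuse the snapshot."""
    try:
        ip = ipam_allocate(pod_key)
    except ConnectionError:
        ip = store.load(pod_key)
        if ip is None:
            raise RuntimeError(f"offline and no IP snapshot for {pod_key}")
        return ip
    store.save(pod_key, ip)
    return ip


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        store = IPSnapshotStore(d)
        print(assign_ip("default/web-0", store, lambda key: "10.1.2.3"))  # online

        def offline(key: str) -> str:
            raise ConnectionError("API server unreachable")

        print(assign_ip("default/web-0", store, offline))  # same IP, from disk
```

A pod that was never seen online has no snapshot, so offline creation of brand-new pods still fails loudly instead of inventing an address.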

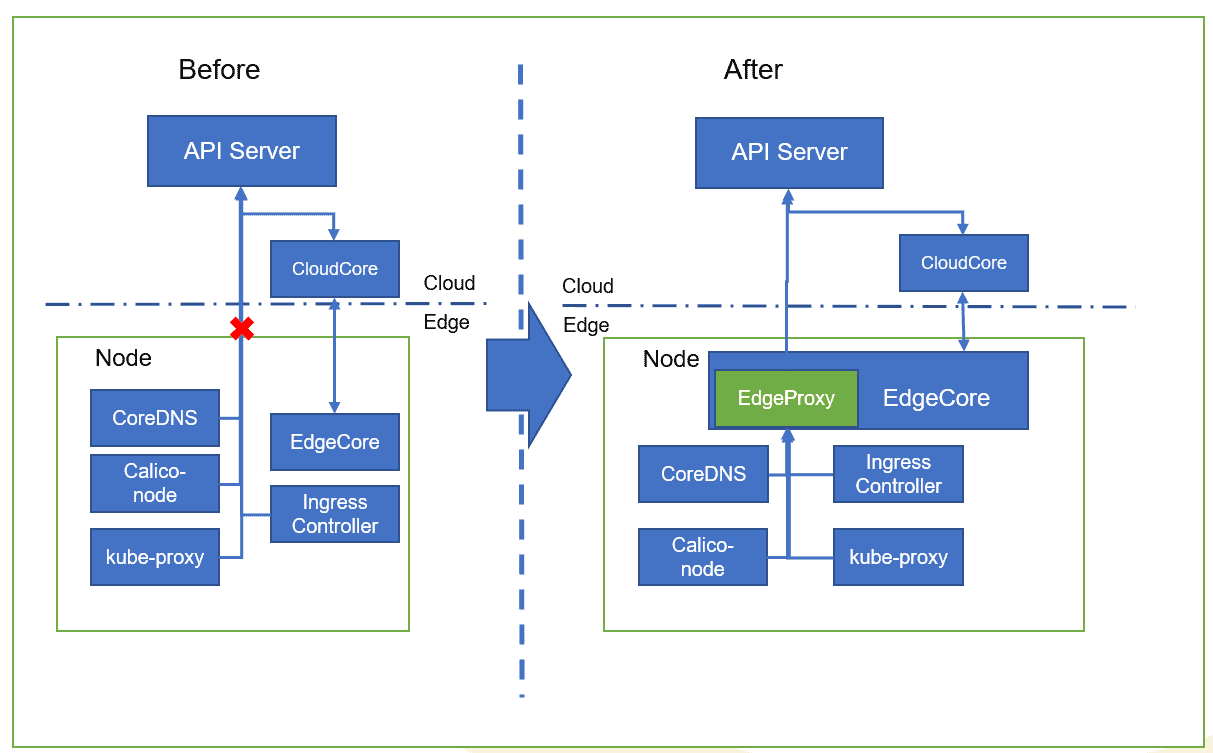

- Edge Proxy

At edge sites where many cloud capabilities are deployed, edge proxies improve the offline autonomy of system components and enable node-level offline autonomy.

- Problem

How can system components keep working autonomously when disconnected from the cloud?

- Solution

An edge proxy is added as an HTTP proxy for system component requests. It intercepts the requests and caches the returned data locally. When the system components are offline, the cached data is used to serve them.

- Notice

This mode does not address the relist/rewatch issue. For that, you can use the edge list-watch function of KubeEdge 1.6.
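The core of the edge proxy is a read-through cache: forward each request to the cloud, remember the last good response, and replay it when the cloud is unreachable. The sketch below models that behavior in-process; `upstream` stands in for the real HTTP round trip to the API server, and the class name is hypothetical. A production proxy would persist the cache to disk so it survives host restarts, and, as the notice above says, plain caching like this does not cover watch streams.

```python
from typing import Callable, Dict


class EdgeProxyCache:
    """Read-through cache in front of the cloud API server (hypothetical model).

    `upstream` stands in for the real HTTP request; it raises ConnectionError
    when the cloud is unreachable.
    """

    def __init__(self, upstream: Callable[[str], bytes]):
        self.upstream = upstream
        self.cache: Dict[str, bytes] = {}
        self.served_from_cache = 0

    def get(self, path: str) -> bytes:
        try:
            body = self.upstream(path)
        except ConnectionError:
            if path not in self.cache:
                raise  # nothing cached yet: the component sees the failure
            self.served_from_cache += 1
            return self.cache[path]
        self.cache[path] = body  # refresh the local copy on every success
        return body


if __name__ == "__main__":
    online = True

    def api_server(path: str) -> bytes:
        if not online:
            raise ConnectionError("cloud unreachable")
        return b'{"kind": "EndpointsList"}'

    proxy = EdgeProxyCache(api_server)
    proxy.get("/api/v1/endpoints")         # online: fetched and cached
    online = False
    print(proxy.get("/api/v1/endpoints"))  # offline: served from the cache
```

Refreshing the cache on every successful request keeps the offline view as recent as the last moment the node was connected.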

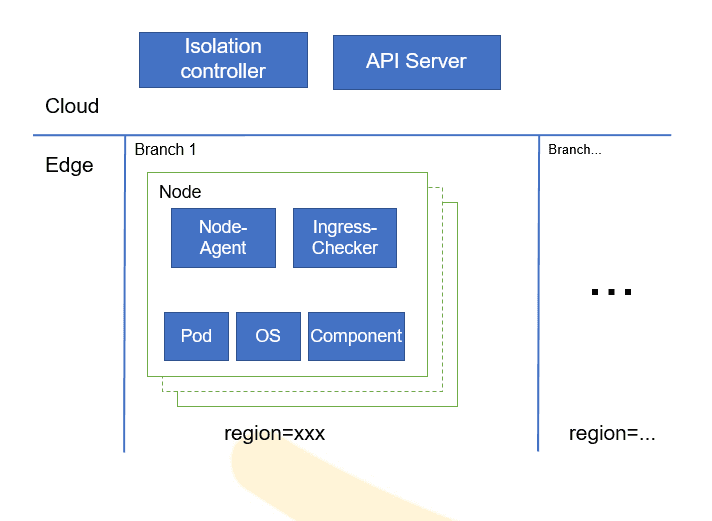

- Edge Fault Isolation

The two-level isolation architecture provides automatic monitoring and isolation, covering key cluster components and scenarios such as node failures. It reduces service downtime and ensures service stability.

- Accurate identification: Fine-grained monitoring of each component's running status, with information about the fault scenario.

- Diagnosis in seconds: Traffic to abnormal pods can be redirected within seconds, reducing the impact on services.

- Bidirectional heartbeat detection: Heartbeats are checked in both directions, which improves fault identification accuracy and helps prevent problems caused by node-agent startup.

- Region-level detection: Single-node and regional faults can be accurately distinguished, reducing misidentification when edge network connectivity is weak.
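The article names bidirectional heartbeats and region-level detection but not the decision rules, so the following is an assumed rule set, sketched to show why combining the two helps. A node is treated as lost only when heartbeats fail in both directions; if many nodes in one region are lost at once, the likelier cause is a weak regional link, so they are flagged rather than isolated. The verdict names and the `region_ratio` threshold are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Heartbeat:
    node: str
    region: str
    node_to_cloud: bool  # the node agent's heartbeat reached the cloud
    cloud_to_node: bool  # the cloud's probe reached the node


def classify(beats: List[Heartbeat], region_ratio: float = 0.5) -> Dict[str, str]:
    """Return a verdict per node (assumed rules):

    healthy         both directions succeed
    one-way         only one direction fails: keep watching, do not isolate yet
    isolate         lost in both directions while the rest of its region is fine
    region-suspect  many nodes in one region lost at once: likely a weak link
    """
    by_region: Dict[str, List[Heartbeat]] = {}
    for b in beats:
        by_region.setdefault(b.region, []).append(b)

    verdicts: Dict[str, str] = {}
    for members in by_region.values():
        lost = {b.node for b in members if not (b.node_to_cloud or b.cloud_to_node)}
        region_down = len(lost) > 1 and len(lost) / len(members) >= region_ratio
        for b in members:
            if b.node_to_cloud and b.cloud_to_node:
                verdicts[b.node] = "healthy"
            elif b.node not in lost:
                verdicts[b.node] = "one-way"
            else:
                verdicts[b.node] = "region-suspect" if region_down else "isolate"
    return verdicts


if __name__ == "__main__":
    beats = [
        Heartbeat("a1", "A", True, True), Heartbeat("a2", "A", True, False),
        Heartbeat("a3", "A", False, False), Heartbeat("b1", "B", False, False),
        Heartbeat("b2", "B", False, False), Heartbeat("b3", "B", True, True),
    ]
    print(classify(beats))
```

Only `isolate` verdicts would trigger redirecting traffic away from a node's pods; the other verdicts avoid mass evictions caused by a flaky edge link.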

Contribution to the KubeEdge Community

Improvements on KubeEdge:

- keadm debug tool suite

- It simplifies edge node fault location and provides more ways to perform it. It mainly includes the keadm debug get/check/diagnose/collect commands.

- Contributed to the community

- CloudCore memory usage optimization

- CloudCore's use of informers and clients is optimized, and LocationCache data is simplified. Overall memory usage is reduced by about 40%.

- Contributed to the community

- Default node labels and taints

- When an edge node is started, specified labels and taints are added by default to simplify the process of adding attributes to edge nodes.

- Contributed to the community

- Host resource reservation

- System resources on edge nodes can be reserved, and a maximum number of pods per node can be set so that pods cannot occupy all of a node's resources. This feature improves the stability of edge nodes.

- To be reviewed by the community
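The host resource reservation item follows the same idea as kubelet's node-allocatable calculation in upstream Kubernetes: allocatable = capacity minus system-reserved minus kube-reserved minus the eviction threshold (the last applies to memory), plus a cap on pod count. Whether the KubeEdge contribution uses exactly this formula is an assumption; the sketch below only illustrates the arithmetic and the admission check, and all names and numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Resources:
    cpu_m: int   # CPU in millicores
    mem_mi: int  # memory in MiB


def allocatable(capacity: Resources, system_reserved: Resources,
                kube_reserved: Resources, eviction_mem_mi: int = 0) -> Resources:
    """Capacity minus what is held back for the OS, the edge agent, and eviction."""
    return Resources(
        cpu_m=max(0, capacity.cpu_m - system_reserved.cpu_m - kube_reserved.cpu_m),
        mem_mi=max(0, capacity.mem_mi - system_reserved.mem_mi
                   - kube_reserved.mem_mi - eviction_mem_mi),
    )


def can_admit(pod: Resources, running: List[Resources],
              alloc: Resources, max_pods: int) -> bool:
    """Admit a pod only if the pod count and summed requests stay within limits."""
    if len(running) + 1 > max_pods:
        return False
    cpu = sum(p.cpu_m for p in running) + pod.cpu_m
    mem = sum(p.mem_mi for p in running) + pod.mem_mi
    return cpu <= alloc.cpu_m and mem <= alloc.mem_mi


if __name__ == "__main__":
    # A 4-core, 8 GiB edge node with assumed reservations.
    alloc = allocatable(Resources(4000, 8192), Resources(500, 1024),
                        Resources(500, 512), eviction_mem_mi=100)
    print(alloc)  # Resources(cpu_m=3000, mem_mi=6556)
    pod = Resources(500, 1024)
    print(can_admit(pod, [pod] * 5, alloc, max_pods=10))  # True: 3000m, 6144Mi
    print(can_admit(pod, [pod] * 6, alloc, max_pods=10))  # False: CPU exhausted
```

Because the reserved slices never appear in `allocatable`, the OS and the edge agent keep their headroom even when pods fill the node.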

Summary & Outlook

- From the community: Edge management capabilities and service release efficiency are improved based on KubeEdge.

- Into the service: Network and load balancing solutions are tailored to service requirements.

- Polish up the architecture: Hierarchical image repositories, federated monitoring, and other features improve the overall architecture.

- Driven by scenarios: Refined fault scenarios and comprehensive fault isolation narrow the scope of fault impact.

- Back to the community: Improved capabilities are contributed back to the community.

Outlook

- Unified load architecture

At present, the architecture allows pods to be deployed at the edge. However, some services can run only on VMs for historical reasons or because of specific requirements, and it is not economical to maintain a dedicated VM resource pool for them. We are exploring edge VM management using Kubernetes, KubeEdge, and KubeVirt, an open-source, Kubernetes-based VM management project.

- Edge middleware service management

In the architecture described above, each edge branch has several servers and runs its own services, databases, and middleware. These can be managed from the cloud using Operators. However, in a weak network environment, list-watch is not completely reliable. How should middleware services at the edge be managed? Currently, a Kubernetes + KubeEdge + middleware service solution looks like a promising direction. We plan to share our progress in later articles.

Developers are always welcome to join the KubeEdge community and build the cloud native edge computing ecosystem together.

More information for KubeEdge:

- Website: https://kubeedge.io/en/

- GitHub: https://github.com/kubeedge/kubeedge

- Slack: https://kubeedge.slack.com

- Email list: https://groups.google.com/forum/#!forum/kubeedge

- Weekly community meeting: https://zoom.us/j/4167237304

- Twitter: https://twitter.com/KubeEdge

Documentation: https://docs.kubeedge.io/en/latest/