Guest post originally published on VMblog by W. Watson, Principal Consultant, Vulk Coop and Karthik S, Co-founder, ChaosNative & Maintainer at LitmusChaos

What is telco reliability?

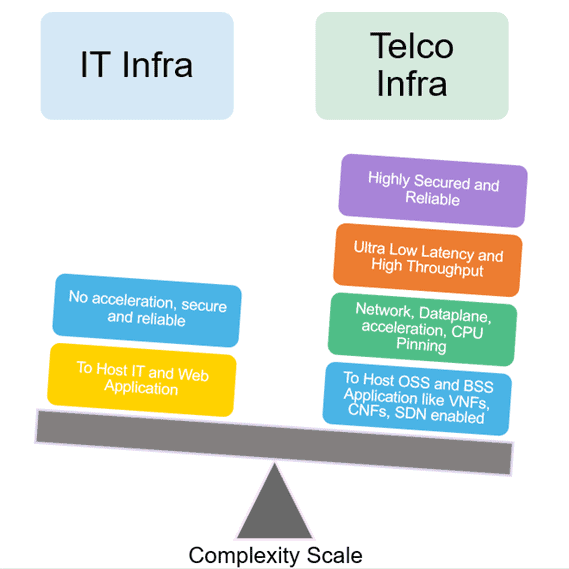

What does reliability mean to telcos? When the emergency systems that handle a 911 call go offline, people’s lives are at stake. In one sense, this story is the driving force behind the kind of telecommunications systems that people have come to expect. Reliability in a telco environment is far more critical, and in some ways a more complex requirement, than in, say, traditional IT infrastructure.

When we dig deeper, we can look at the reliability [1][2][3] of a specific protocol, TCP:

Reliability: One of the key functions of the widely used Transport Control Protocol (TCP) is to provide reliable data transfer over an unreliable network. … Reliable data transfer can be achieved by maintaining sequence numbers of packets and triggering retransmission on packet loss.

— Serpanos, Dimitrios, Wolf, Tilman

In this way, reliability is about the correctness of data (regardless of the methods used to ensure that data is correct). One method for measuring reliability is mean time between failure (MTBF [4]).
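As a quick illustration, MTBF is commonly computed as total operating time divided by the number of failures observed in that time. The sketch below uses a hypothetical fleet; the numbers are made up for the example.

```python
def mtbf(total_operating_hours: float, failures: int) -> float:
    """Mean time between failures: total operating time divided by failure count."""
    if failures <= 0:
        raise ValueError("MTBF is undefined when no failures have been observed")
    return total_operating_hours / failures


# Hypothetical fleet: 50 nodes, each in service for 1,000 hours, with 4 failures in total.
fleet_hours = 50 * 1_000
print(mtbf(fleet_hours, failures=4))  # 12500.0 (hours)
```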

Telco availability

Two other properties we have come to expect from the emergency system story are availability and serviceability. How long a system can stay functional, regardless of corruption of data (e.g. a successful phone call that has background noise), is the availability of the system. How easily a system can be repaired when it goes down is the serviceability [5] of the system. Availability is measured as the percentage of uptime a system has, e.g. 99.99% uptime. Serviceability can be measured by mean time to recover (MTTR [6]).
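The two measures are linked by the standard steady-state approximation availability = MTBF / (MTBF + MTTR). The sketch below applies it and converts an availability target into a yearly downtime budget; it assumes a 365-day year, and the MTBF/MTTR inputs are illustrative.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes in a non-leap year


def availability(mtbf_hours: float, mttr_hours: float) -> float:
    """Steady-state availability: the fraction of time the system is up."""
    return mtbf_hours / (mtbf_hours + mttr_hours)


def downtime_minutes_per_year(availability_ratio: float) -> float:
    """Downtime allowed per year for a given availability ratio."""
    return (1 - availability_ratio) * MINUTES_PER_YEAR


# "Four nines" (99.99%) leaves roughly 52.6 minutes of downtime per year.
print(round(downtime_minutes_per_year(0.9999), 1))  # 52.6

# Halving MTTR raises availability without changing MTBF at all.
print(availability(mtbf_hours=1000, mttr_hours=1.0))
print(availability(mtbf_hours=1000, mttr_hours=0.5))
```

The second pair of numbers shows why a focus on fast recovery pays off: improving serviceability moves availability just as improving MTBF would.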

Cloud native availability

Cloud native systems [7] try to address reliability (MTBF) by giving subcomponents higher availability through better serviceability (lower MTTR) and redundancy. For example, in the cloud native world, a top-level component built from ten redundant subcomponents, of which seven are available and three have failed, is more reliable (MTBF) than a single component that “never fails”.

How do cloud native systems focus on MTTR? They rely on pipelines (the ability to easily recreate the whole system) that deploy immutable infrastructure using declarative systems (saying what a system should do, not how it should do it), combined with the reconciler pattern (constantly repairing the system based on its declared specifications). With this method, cloud native systems prioritize the availability of the overall system over the reliability of its subcomponents.
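The ten-subcomponent example can be made concrete with a binomial model. This is a sketch under two loudly stated assumptions: replicas fail independently, and the top-level component is up whenever at least seven of its ten subcomponents are up. The 90% per-replica availability is a made-up figure.

```python
from math import comb


def k_of_n_availability(n: int, k: int, p: float) -> float:
    """Probability that at least k of n independent replicas are up,
    when each replica is up with probability p (binomial model)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


single = 0.9                                    # one component, 90% available
pooled = k_of_n_availability(n=10, k=7, p=0.9)  # ten replicas, seven needed
print(f"single component: {single:.4f}")        # 0.9000
print(f"7-of-10 replicas: {pooled:.4f}")        # 0.9872
```

Correlated failures (a shared node, zone, or bad rollout) break the independence assumption, which is one reason redundancy is usually spread across failure domains.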

Two ways to resilience

We have seen two descriptions, telco and cloud native, of resilient systems. If we take a deeper look at how these systems are implemented, maybe we can find common ground.

K8s Enterprise Way

Cloud native infrastructure uses the reconciler pattern. With the reconciler pattern, the desired state of the application (e.g. there should be three identical nginx servers) is declared as configuration, and the actual state is a result of querying the system. An orchestrator repeatedly queries the actual state of the system and, whenever the actual state differs from the desired state, reconciles the two.
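A minimal sketch of one reconcile pass, using the three-nginx example. `FakeCluster` is a hypothetical in-memory stand-in, not a real Kubernetes client; a real controller runs such passes continuously (Kubernetes controllers also react to watch events rather than only polling).

```python
from dataclasses import dataclass, field


@dataclass
class FakeCluster:
    """Hypothetical in-memory stand-in for a cluster API (not a real client)."""
    pods: set[str] = field(default_factory=set)
    next_id: int = 0

    def list_pods(self) -> set[str]:
        return set(self.pods)

    def create_pod(self) -> None:
        self.pods.add(f"nginx-{self.next_id}")
        self.next_id += 1

    def delete_pod(self, name: str) -> None:
        self.pods.discard(name)


def reconcile(cluster: FakeCluster, desired_replicas: int) -> None:
    """One reconcile pass: observe the actual state, diff it against the desired state, act."""
    actual = sorted(cluster.list_pods())
    missing = desired_replicas - len(actual)
    if missing > 0:
        for _ in range(missing):
            cluster.create_pod()
    else:
        # Too many (or exactly enough) replicas: remove any surplus.
        for name in actual[desired_replicas:]:
            cluster.delete_pod(name)


cluster = FakeCluster()
reconcile(cluster, desired_replicas=3)  # creates nginx-0, nginx-1, nginx-2
cluster.delete_pod("nginx-1")           # simulate a crashed pod
reconcile(cluster, desired_replicas=3)  # sees two pods, creates nginx-3
print(sorted(cluster.list_pods()))      # ['nginx-0', 'nginx-2', 'nginx-3']
```

Note that the reconciler never asks why a pod disappeared; it only compares counts, which is what makes the pattern generic.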

Kubernetes is an orchestrator and an implementation of the reconciler pattern. It manages an application’s configuration, such as how many instances (pods, containers) should run and how those instances are monitored (liveness and readiness probes).
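For the nginx example, the declared desired state might look like the `apps/v1` Deployment below, written as a Python dict and emitted as JSON. The name, image tag, and probe timings are illustrative assumptions, not recommendations.

```python
import json

# Desired state: three nginx replicas, each watched by liveness and readiness probes.
deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "nginx"},
    "spec": {
        "replicas": 3,
        "selector": {"matchLabels": {"app": "nginx"}},
        "template": {
            "metadata": {"labels": {"app": "nginx"}},
            "spec": {
                "containers": [
                    {
                        "name": "nginx",
                        "image": "nginx:1.21",
                        "ports": [{"containerPort": 80}],
                        # If this check keeps failing, the kubelet restarts the container.
                        "livenessProbe": {
                            "httpGet": {"path": "/", "port": 80},
                            "initialDelaySeconds": 5,
                            "periodSeconds": 10,
                        },
                        # Until this check passes, the pod receives no Service traffic.
                        "readinessProbe": {
                            "httpGet": {"path": "/", "port": 80},
                            "periodSeconds": 5,
                        },
                    }
                ]
            },
        },
    },
}

print(json.dumps(deployment, indent=2))
```

Saved to a file, the output can be applied with `kubectl apply -f`, which accepts JSON as well as YAML; from then on, Kubernetes reconciles the cluster toward three ready replicas.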

The Erlang Way

Erlang has been used to build telecommunications systems that are fault tolerant and highly available from the ground up [8]. The Erlang method for building resilient systems is similar to the K8s enterprise way in that lower layers get restarted by higher layers:

In erlang you build robust systems by layering. Using processes, you create a tree in which the leaves consist of the application layer that handles the operational tasks while the interior nodes monitor the leaves and other nodes below them … [9]

Instead of an orchestrator (K8s) that watches over pods, Erlang has a hierarchy of supervisors [10]:

In a well designed system, application programmers will not have to worry about error-handling code. If a worker crashes, the exit signal is sent to its supervisor, which isolates it from the higher levels of the system … Based on a set of … parameters … the supervisor will decide whether the worker should be restarted. [11]
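The supervisor idea can be sketched outside Erlang. The Python below is an analogy, not Erlang/OTP: the worker contains no error handling, and the supervisor restarts it after a crash until a restart limit is reached, then escalates to its own caller. Real OTP supervisors use a restart intensity measured over a time period and strategies such as `one_for_one`; this sketch simply counts total restarts, and all names are hypothetical.

```python
from typing import Callable


class RestartLimitExceeded(Exception):
    """Raised when a worker crashes more often than its supervisor tolerates."""


def supervise(worker: Callable[[], str], max_restarts: int = 3) -> str:
    """Run a worker and restart it after a crash, up to max_restarts times.

    Past that limit the supervisor gives up and escalates by raising, leaving
    the decision to whatever supervises it (the next level up the tree).
    """
    restarts = 0
    while True:
        try:
            return worker()
        except Exception as exc:  # the worker "crashed"; it has no error handling of its own
            if restarts >= max_restarts:
                raise RestartLimitExceeded(f"gave up after {restarts} restarts") from exc
            restarts += 1
            print(f"worker crashed ({exc}); restart {restarts}/{max_restarts}")


def make_flaky_worker(crashes_before_success: int) -> Callable[[], str]:
    """Hypothetical worker that crashes a fixed number of times, then succeeds."""
    attempts = 0

    def worker() -> str:
        nonlocal attempts
        attempts += 1
        if attempts <= crashes_before_success:
            raise RuntimeError(f"crash #{attempts}")
        return "call handled"

    return worker


print(supervise(make_flaky_worker(2)))  # two restarts, then "call handled"
```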

In an Erlang system, reliability is accomplished by achieving fault tolerance through replication [12]. In both traditional telco systems and cloud native enterprise systems, workers that restart in order to heal themselves are a common theme. Repairability, specifically of subcomponents, is an important feature of cloud native systems. The Erlang world has the mantra “Let it crash”, which speaks to the repairability and mean time to recovery (MTTR) of subcomponents. Combining reliability (mean time between failure, or MTBF) with repairability makes it possible to focus on MTTR, which will produce the kind of highly available and reliable systems that telco cloud native systems need to be.

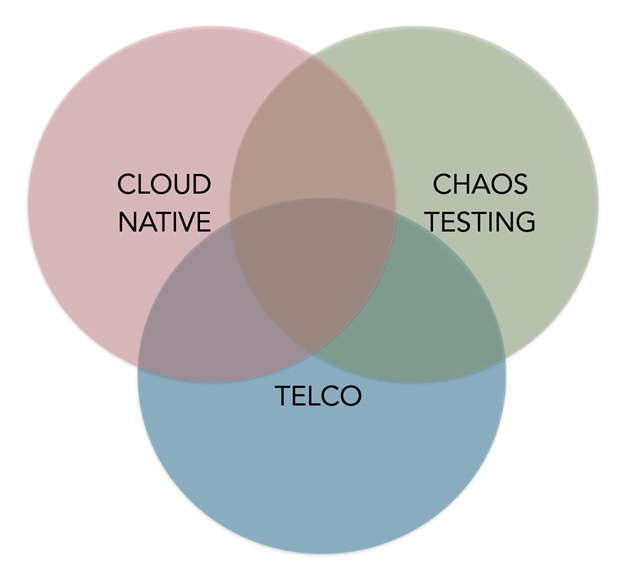

Telco within cloud native

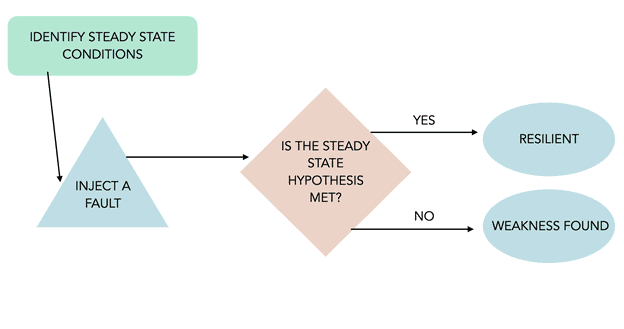

Like other domains that have found value in microservices architecture and containerization, telco is slowly but steadily embracing cloud native [13]. One important reason for this is the need for telco applications to run on different infrastructures, as most service providers have bespoke configurations. This has resulted in the creation of CNFs (Cloud-Native Network Functions), which are built from the ground up on standard cloud-native principles: containerized apps subjected to CI/CD flows, test beds maintained via IaC (Infrastructure-as-Code) constructs, and everything deployed and operated on Kubernetes.

The CNF WG is a body within the CNCF (Cloud Native Computing Foundation) that is involved in defining the process for evaluating the cloud nativeness of networking applications, aka CNFs. One of the (now) standard practices furthering reliability in the cloud-native world is chaos testing, or chaos engineering. It can be defined as:

… the discipline of experimenting on a system in order to build confidence in the system’s capability to withstand turbulent conditions in production.

In layman’s terms, chaos testing is like vaccination. We inject harm to build immunity from outages.

Subjecting the telco services on Kubernetes to chaos on a regular basis (with the right hypothesis and validation thereof) is a very useful exercise in unearthing failure points and improving resilience. The CNF-TestSuite initiative employs such resilience scenarios executed on the vendor-neutral CNF testbed.

In the following sections, we take a deeper look at chaos testing, the benefits that can be expected from it, and how it plays out within the cloud-native model.

Antifragility and chaos testing

Antifragility is when a system gets stronger, rather than weaker, under stress. An example of this is the human immune system: certain types of exposure to illness can actually make it stronger.

Some things benefit from shocks; they thrive and grow when exposed to volatility, randomness, disorder, and stressors and love adventure, risk, and uncertainty. Yet, in spite of the ubiquity of the phenomenon, there is no word for the exact opposite of fragile. Let us call it antifragile. Antifragility is beyond resilience or robustness. The resilient resists shocks and stays the same; the antifragile gets better — Nassim Taleb [14]

Cloud native architectures achieve resilience by enforcing self-healing configuration; the best examples of these require no human intervention.

When chaos testing, individual subcomponents are removed or corrupted and the system is checked for correctness. This is done on a subset of production components to ensure the proper level of reliability and availability is baked in at all levels of architecture. If we add chaos testing to a cloud native architecture, the result should be an organization that develops processes that have resilience in mind from the start.
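The loop described above (verify a steady-state hypothesis, inject a fault, let the system respond, verify again) can be sketched as a tiny pod-kill experiment. `ToyReplicaSet` is a hypothetical stand-in; the `self_healing` flag exists only to show that the same experiment passes on a self-healing workload and fails on one that is not.

```python
import random


class ToyReplicaSet:
    """Hypothetical workload with an optional self-healing (reconcile) step."""

    def __init__(self, desired: int, self_healing: bool = True) -> None:
        self.desired = desired
        self.self_healing = self_healing
        self.pods = {f"pod-{i}" for i in range(desired)}
        self._next_id = desired

    def reconcile(self) -> None:
        if not self.self_healing:
            return  # nothing repairs the damage
        while len(self.pods) < self.desired:
            self.pods.add(f"pod-{self._next_id}")
            self._next_id += 1


def pod_kill_experiment(rs: ToyReplicaSet, kills: int, rng: random.Random) -> bool:
    """Check the steady state, kill random pods, let the system heal, check again."""
    # 1. Steady-state hypothesis: all desired replicas are running.
    if len(rs.pods) != rs.desired:
        raise RuntimeError("steady state not met before injecting chaos; aborting")
    # 2. Fault injection: remove randomly chosen pods.
    victims = rng.sample(sorted(rs.pods), kills)
    rs.pods.difference_update(victims)
    # 3. Give the system a chance to heal itself.
    rs.reconcile()
    # 4. Validate the hypothesis again: back to full strength with fresh replicas.
    return len(rs.pods) == rs.desired and rs.pods.isdisjoint(victims)


rng = random.Random(7)
print(pod_kill_experiment(ToyReplicaSet(3), kills=2, rng=rng))                      # True
print(pod_kill_experiment(ToyReplicaSet(3, self_healing=False), kills=1, rng=rng))  # False
```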

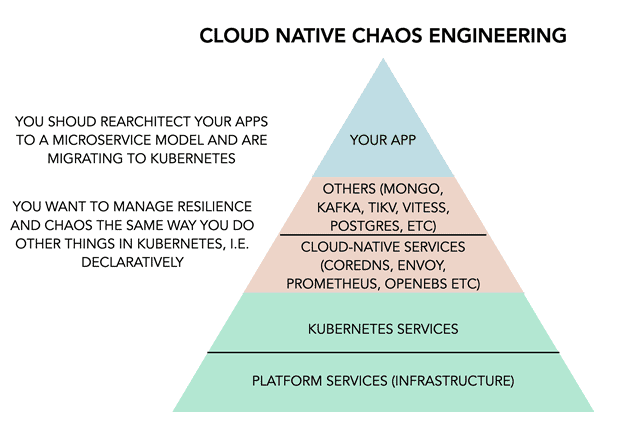

Chaos engineering in the cloud native world

Originally brought about by a “shift-right” philosophy that advocated testing in production environments to verify system behaviour under controlled failures, chaos engineering has become more ubiquitous in terms of where and how it is employed. The “Chaos First” principle involves testing resilience via failures at each stage of product development, including non-production environments such as QA and staging. This evolution has been accelerated by the paradigm shift in development and deployment practices brought about by cloud-native architecture, which has microservices and containerization at its core. With more dependencies and an inherently more complex hosting environment (read: Kubernetes), the points of failure multiply, making an even stronger case for chaos testing. While the reconciliation model of cloud-native/Kubernetes provides greater opportunities for self-healing, it still needs to be coupled with the right domain intelligence (in the form of custom controllers, the right deployment architecture, etc.) to provide resilience.

Add to this the dynamic nature of a Kubernetes-based deployment environment, in which connected microservices undergo independent upgrades, and there is a real need for continuous chaos or resilience testing. This makes chaos tests an integral part of the DevOps (CI/CD) flow.
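Chaos tests fit a CI/CD flow most easily when the experiments themselves are declared as resources, like the rest of the deployment. The sketch below builds a LitmusChaos `ChaosEngine` that runs the `pod-delete` experiment against a hypothetical `app=nginx` Deployment. Field names follow the ChaosEngine resource as we understand it and may differ between Litmus versions; the sketch also assumes the `pod-delete` experiment and a `pod-delete-sa` service account with suitable RBAC are already installed.

```python
import json

# Hypothetical ChaosEngine; check field names against the Litmus docs for your version.
chaos_engine = {
    "apiVersion": "litmuschaos.io/v1alpha1",
    "kind": "ChaosEngine",
    "metadata": {"name": "nginx-chaos", "namespace": "default"},
    "spec": {
        # Which application to target.
        "appinfo": {
            "appns": "default",
            "applabel": "app=nginx",
            "appkind": "deployment",
        },
        "engineState": "active",
        # Assumed to exist already, with RBAC for the pod-delete experiment.
        "chaosServiceAccount": "pod-delete-sa",
        "experiments": [
            {
                "name": "pod-delete",
                "spec": {
                    "components": {
                        "env": [
                            {"name": "TOTAL_CHAOS_DURATION", "value": "30"},  # seconds
                            {"name": "CHAOS_INTERVAL", "value": "10"},  # seconds between kills
                            {"name": "FORCE", "value": "false"},  # graceful pod deletion
                        ]
                    }
                },
            }
        ],
    },
}

print(json.dumps(chaos_engine, indent=2))
```

A pipeline stage could apply this after each deployment and then check the experiment's outcome, which Litmus records in a ChaosResult resource, before promoting the build.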

Conclusion

Borrowing from the lessons learned when applying chaos testing to cloud native environments, we should use declarative chaos specifications to test telecommunication infrastructure in tandem with its development and deployment. The CI/CD tradition of “pull the pain forward” with a focus on MTTR will produce the type of highly available and reliable systems that cloud native telecommunication systems will need to be.

Continue the conversation

If you are interested in this topic and would like to help define cloud native best practices for telcos, you’re welcome to join the Cloud Native Network Functions Working Group (CNF WG) slack channel, participate in CNF WG calls on Mondays at 16:00 UTC, and attend the CNF WG KubeCon NA session virtually on Friday, October 15th.

For more details about the CNF WG and LitmusChaos, visit:
