Guest post originally published on Elastisys’ blog by Lars Larsson, Senior Cloud Architect, and DevOps Expert Engineer at Elastisys

The cloud computing service model has shown us that infrastructure as a service is here to stay. It is a given in 2021 that we consume virtualized hardware in the shape of virtual machines, virtual networks, and virtualized storage. All with seemingly infinite capacity (well, kind of) that we can just tap into.

But managing operating systems and networks for virtual machines is perhaps not what most companies want to dedicate their time to. Instead, managed services can be a cost-effective way of getting the benefits of cloud computing and focusing more directly on what makes the company’s application unique. Delivering value to customers, rather than managing underlying systems.

In this article, I compare managed service offerings for modern, containerized applications. First, we take a look at the more general Platform-as-a-Service (PaaS) model, which has inspired and defined the field. The more niche Kubernetes-as-a-Service and Container-as-a-Service models are then described in more detail. I then show how the respective pros and cons of these older managed container runtime models form the basis for a new service model: Kubernetes-Platform-as-a-Service (KPaaS), which provides the advantages of both.

Platform-as-a-Service (PaaS)

PaaS builds upon Infrastructure-as-a-Service (IaaS), but extends its scope. A typical PaaS is opinionated, in the sense that it supports perhaps a single programming language or language runtime. But in exchange, it integrates deeply with your application on a code level. With surrounding tooling, this service model makes it easy to quickly develop, build, update, and manage your application.

A great example of PaaS is the Heroku developer experience. Application developers never manage underlying servers. Code, written in one of a small set of supported languages, is deployed to the Heroku platform. The appropriate language runtime is fully managed by Heroku, and kept up to date. Thus, it’s not the responsibility of the application developer to upgrade, e.g., Node.js runtimes, nor the servers upon which they are deployed.

On the other hand, this deep integration means that your application is essentially beholden to the platform’s service provider. If you are a Heroku customer, your application is deeply integrated with that PaaS. You’re not easily moving away from it.

Because a PaaS targets mainstream use cases, your application may eventually outgrow what is offered. I’ve helped plenty of customers make that transition, when Heroku’s services (and pricing model, which is anecdotally also more geared toward startups than enterprises) have restricted their growth.

Brief parenthesis about containers

Container technology allows one to package application source code (or ready-built program executables in binary form) together with all the code it depends on. The resulting container therefore holds everything the software needs to run. This means that it is in no way bound to any one particular platform. Instead, it is fully portable: it runs exactly the same on a developer’s laptop as on a cloud server.

Software running in containers must, however, be interconnected to collaborate, and be managed by a platform that runs containerized software (or simply “containers”). One such container orchestration system is the open source Kubernetes. It is not the only one, but it is by far the most popular. Kubernetes’ popularity is largely due to being open source. Its main still-active competitor is AWS ECS. Older ones, such as Docker Swarm, DC/OS, and Cloud Foundry, have largely fallen by the wayside.

Kubernetes-as-a-Service (KaaS)

Kubernetes logically consists of two parts: the control plane and the worker nodes. The control plane is the brain of the Kubernetes system: it decides on which worker node individual containers should be run, along with a slew of other automation tasks. The control plane is deployed on one set of cloud servers, and the worker nodes are a different set of cloud servers.
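To make the "brain" role concrete, here is a minimal sketch of the kind of placement decision a control plane makes. It is not how the actual Kubernetes scheduler is implemented, which applies many more filtering and scoring rules. The names `WorkerNode`, `ContainerRequest`, and `pick_node`, and the "most free memory wins" rule, are assumptions made purely for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class WorkerNode:
    # Hypothetical, simplified view of a worker node's spare capacity.
    name: str
    free_cpu_cores: float
    free_memory_gb: float


@dataclass
class ContainerRequest:
    # Hypothetical description of what a container asks for.
    name: str
    cpu_cores: float
    memory_gb: float


def pick_node(request: ContainerRequest, nodes: List[WorkerNode]) -> Optional[WorkerNode]:
    """Return the fitting node with the most free memory, or None if nothing fits."""
    # Step 1: filter out nodes that cannot hold the container at all.
    candidates = [
        node for node in nodes
        if node.free_cpu_cores >= request.cpu_cores
        and node.free_memory_gb >= request.memory_gb
    ]
    if not candidates:
        return None
    # Step 2: score the remaining nodes (assumed rule: most free memory wins).
    chosen = max(candidates, key=lambda node: node.free_memory_gb)
    # Step 3: reserve the resources so the next placement sees updated capacity.
    chosen.free_cpu_cores -= request.cpu_cores
    chosen.free_memory_gb -= request.memory_gb
    return chosen


nodes = [WorkerNode("worker-a", 2.0, 4.0), WorkerNode("worker-b", 4.0, 16.0)]
placed = pick_node(ContainerRequest("web", 1.0, 2.0), nodes)
print(placed.name if placed else "unschedulable")
```

The point of the sketch is the division of labor: the decision logic lives in the control plane, while the capacity it reasons about lives on the worker nodes.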

KaaS typically means that the control plane part is managed by a service provider. This is either the cloud infrastructure provider itself or a partner. The most common KaaS are AWS’ EKS, Azure’s AKS, and Google’s GKE.

KaaS customers therefore don’t have to manage the brain of the Kubernetes cluster, but they do have to manage the brawn: the worker nodes. This reduces complexity, but still leaves plenty of system administration work for teams to handle. The worker nodes must be kept up to date, both with operating systems and Kubernetes releases, and infrastructure-related things such as networking and storage must be dealt with.

KaaS makes it easier to run a containerized application, but it is not a hands-off experience for teams. Plenty of job ads for “Kubernetes administrators” that list one of the major KaaS as the underlying platform show this clearly.

Container-as-a-Service (CaaS)

Customers of CaaS instead opt for a fully hands-off container runtime experience. In essence, they hand the container to the service provider along with a description of the resources it should have (X GB RAM, Y CPU cores), and let them figure out how to deploy it. The most popular such service is AWS Fargate. Which system underlies that service is hidden from customers: they would neither be able to nor need to troubleshoot any issue anyway, since the service is fully managed.

The downside to this reduction in complexity is that while CaaS makes it simple to run containers, such services are vendor-specific platforms. And very little community mindshare and support has formed around them, even around the popular AWS Fargate.

What I’ve heard from developers and DevOps engineers regarding Fargate is that the value offering sounds great at first, but that they ultimately feel out of control. They carry all the responsibility from management to keep the application up and running, but have none of the control and ability to make sure that it is. And because Fargate is AWS-specific technology, and AWS is the only one with actual control, the only way to use Fargate that ensures business continuity is to pay for a premium support deal as well. And as you can see from their support pricing page, that certainly raises the price of Fargate (which you will hear DevOps people grumble about in general) even further.

Comparison between PaaS, KaaS, and CaaS

So PaaS required deep integration to make an application work with the platform. But it was a smart setup, so system administration could be minimized.

Then containers and KaaS came along, and the integration was essentially reduced to zero, because containers were fully independent packages of software. They just needed a smart enough system around them to deploy the containers.

And because KaaS required system administrators, CaaS was invented. But that then reintroduced platform dependence!

CaaS is essentially PaaS, but with containers.

So the great thing about CaaS is that developers don’t have to care about managing underlying container runtimes or orchestration systems. The bad thing is that it locks them in, and that there are just not a lot of great solutions out there in the community around these vendor-specific platforms.

And the great thing about KaaS is that it is Kubernetes, around which there is a lot of community traction and great solutions to common problems. But with KaaS, you have to do system administration that you don’t really want to, or care about.
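The comparison above boils down to two drawbacks: platform dependence and system administration work left to the customer. As a rough sketch, the three models can be written down as a small table and queried. The `Tradeoff` type, its field names, and the `without_drawbacks` helper are illustrative assumptions, and the true/false values simply restate the claims made in this section.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Tradeoff:
    platform_lock_in: bool        # application is tied to one vendor's platform
    customer_sysadmin_work: bool  # customer must administer underlying systems


# Values restate the comparison in the text; they are not measurements.
MODELS: Dict[str, Tradeoff] = {
    "PaaS": Tradeoff(platform_lock_in=True, customer_sysadmin_work=False),
    "KaaS": Tradeoff(platform_lock_in=False, customer_sysadmin_work=True),
    "CaaS": Tradeoff(platform_lock_in=True, customer_sysadmin_work=False),
}


def without_drawbacks(models: Dict[str, Tradeoff]) -> List[str]:
    """Return the names of models that have neither drawback."""
    return [
        name for name, t in models.items()
        if not t.platform_lock_in and not t.customer_sysadmin_work
    ]


print(without_drawbacks(MODELS))          # no model avoids both drawbacks
print(MODELS["CaaS"] == MODELS["PaaS"])   # CaaS and PaaS share the same trade-off
```

Seen this way, none of the three models avoids both drawbacks, and CaaS lands in the same cell as PaaS.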

Reaping the benefits of KaaS and CaaS with a fully-managed Kubernetes Platform

I believe that there is a sweet spot between KaaS and CaaS. In the best of worlds, I would like that middle ground to give me:

- Kubernetes, pure and simple, so I can benefit from all the development in the cloud native community, and deploy standard Kubernetes applications via manifests, Helm, or whatever;

- managed Kubernetes control plane and worker nodes, because I want to target a working cluster, and know that my responsibility is purely to keep my application up and running; and yet

- no cloud-specific technologies (logging, access control, monitoring) that lock me to a vendor’s proprietary platform.
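To illustrate what "standard Kubernetes applications via manifests" means in the first wish above, here is a small sketch that builds a minimal Deployment manifest as a plain dictionary and prints it as JSON. The helper `deployment_manifest`, the application name, and the image reference are hypothetical. The field layout follows the `apps/v1` Deployment API.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int,
                        cpu: str, memory: str) -> dict:
    """Build a minimal apps/v1 Deployment as a plain dictionary."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        # Resources the container asks the cluster to reserve.
                        "resources": {"requests": {"cpu": cpu, "memory": memory}},
                    }],
                },
            },
        },
    }


manifest = deployment_manifest(
    name="my-app",                              # hypothetical application name
    image="registry.example.com/my-app:1.0.0",  # hypothetical image reference
    replicas=2,
    cpu="500m",
    memory="256Mi",
)
print(json.dumps(manifest, indent=2))
```

Nothing in this manifest names a cloud provider, which is the point: saved to a file, the same JSON can be applied with `kubectl apply -f` (which accepts JSON as well as YAML) to any cluster that speaks the standard Kubernetes API.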

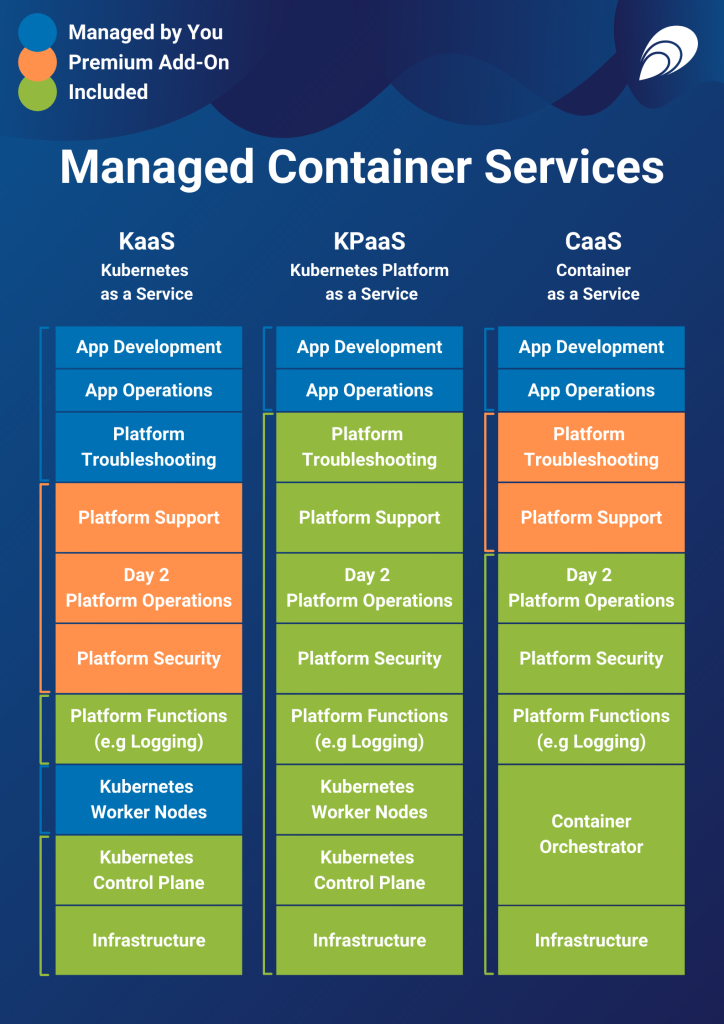

The infographic above shows what parts you as a customer would be responsible for, and what the service provider promises to offer to you.

This sweet spot takes the best parts of KaaS and CaaS. The main drawback of KaaS is that only the control plane is managed, but not the worker nodes. A CaaS will give you that, but its container orchestration is not Kubernetes, so you can’t run off-the-shelf applications with ease. But because the sweet spot takes the best from both options, it does not have their respective drawbacks.

But, as you know, this is not something the major cloud providers offer. And honestly, I doubt they ever will. Especially with the last part about avoiding cloud-specific tech that locks customers in. They could have something akin to this already if they wanted to. They just don’t.

Instead, to get this kind of fully managed Kubernetes platform service (KPaaS), we have to turn to third parties, who are not beholden to any particular cloud provider. This means that the sweet spot also has to come with its own logging, monitoring, and access control features (and so forth), because they cannot rely on those offered by a single cloud provider. I see that as a blessing, because that means that the managed Kubernetes service will operationally feel the same way, regardless of which cloud provider(s) it happens to be deployed upon.

What service model fits your use case?