Project post from Ruan Zhaoyin of KubeEdge

This article describes how e-Cloud uses KubeEdge to manage CDN edge nodes, automatically deploy and upgrade CDN edge services, and implement edge service disaster recovery (DR) when it migrates its CDN services to the cloud.

This article includes the following four parts:

- Project background

- KubeEdge-based edge node management

- Edge service deployment

- Architecture evolution directions

Project Background

China Telecom e-Cloud CDN Services

China Telecom is accelerating cloud-network synergy through its 2+4+31+X resource deployment, in which X is the access layer closest to users. Content and storage services are deployed at this layer so that users can obtain the content they need with low latency. e-Cloud is a latecomer to the CDN market, but its CDN business is growing rapidly. e-Cloud CDN provides all basic CDN functions and abundant resources, supports precise scheduling, and delivers high-quality services.

Background

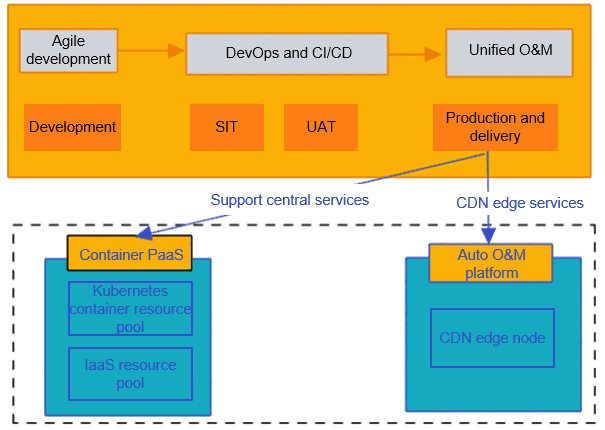

Unlike other cloud vendors and traditional CDN vendors, e-Cloud built its CDN cloud native from the start. It built a CDN PaaS platform running on containers and Kubernetes, but had not yet completed the cloud-native re-architecture of its CDN edge services.

The problems e-Cloud faced:

- How to manage a large number of CDN edge nodes?

- How to deploy and upgrade CDN edge services?

- How to build a unified, scalable resource scheduling platform?

KubeEdge-based Edge Node Management

CDN Node Architecture

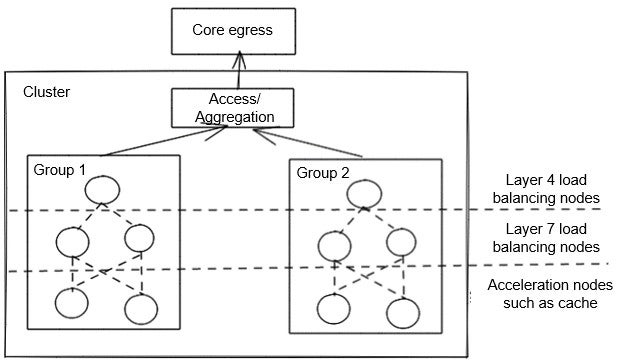

CDN provides cache acceleration. To achieve nearby access and quick responses, most CDN nodes are deployed close to users, so that the CDN global traffic scheduling system can direct requests to nearby nodes. Most CDN nodes are scattered across regional IDCs, and multiple CDN service clusters are set up in each edge equipment room according to its egress bandwidth and server resources.

Containerization Technology Selection

In the early stage of containerization, they considered the following approaches:

Standard Kubernetes: Edge nodes were added to the cluster as standard worker nodes and managed by the master nodes. However, the large number of connections caused relist issues and heavy load on the Kubernetes master nodes, and network fluctuations caused pods to be evicted and then rebuilt unnecessarily.

Access by node: Kubernetes or K3s clusters were deployed at the edge. However, this produced too many control planes and clusters, and a unified scheduling platform could not be built. If each cluster was deployed in HA mode, it needed at least three servers, which consumed excessive machine resources.

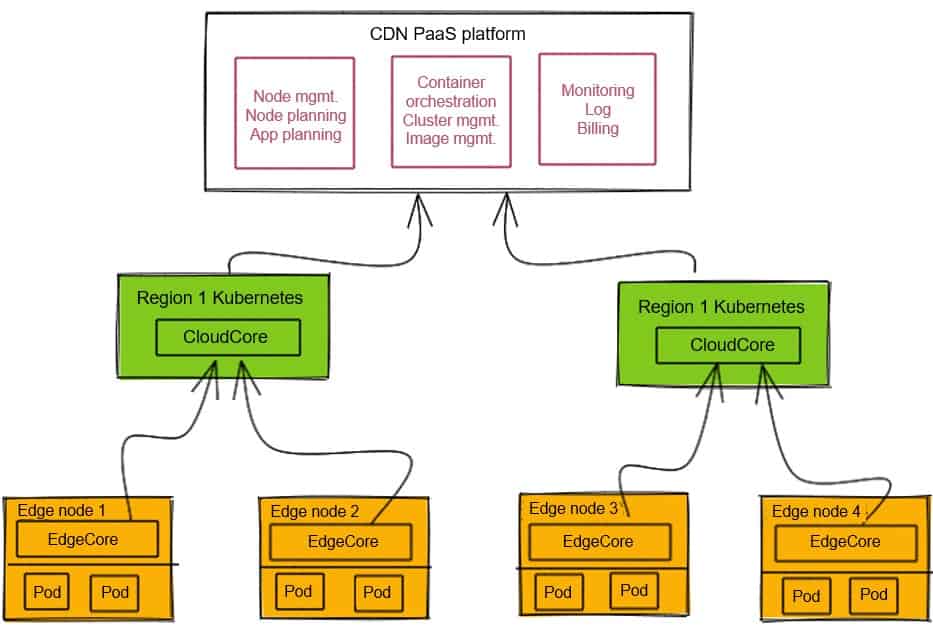

Cloud-edge access: Edge nodes were connected to Kubernetes clusters through KubeEdge. This reduced the number of edge node connections and provided cloud-edge synergy, edge autonomy, and native Kubernetes capabilities.

Solution Design

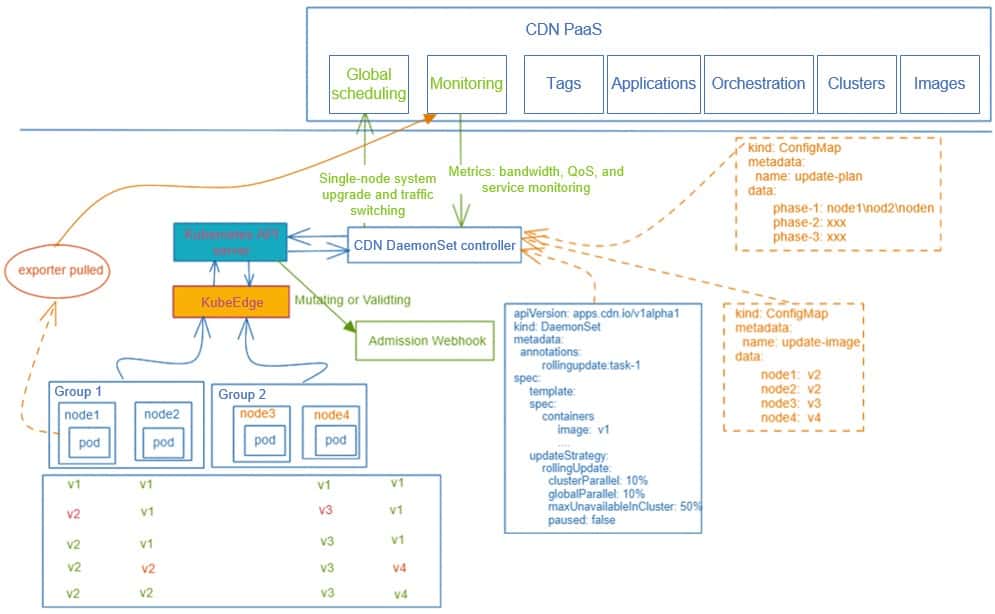

The preceding figure shows the optimized architecture.

Several Kubernetes clusters were created in each regional center and data center to avoid a single access point and heavy load on any one Kubernetes cluster. Each edge node was connected to the regional cluster nearest to it. The earlier KubeEdge 1.3 release only provided a single-group, multi-node HA solution, which could not meet the needs of large-scale management, so they adopted a multi-group deployment.
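Connecting each edge node to its nearest regional cluster can be sketched as picking the cluster with the lowest measured round-trip time. This is a minimal sketch under an assumption: the article does not say how "nearest" is determined, so using RTT, the `RegionalCluster` type, and the cluster names are all hypothetical.

```go
package main

import (
	"fmt"
	"time"
)

// RegionalCluster is a hypothetical record of one regional Kubernetes
// cluster, with the round-trip time measured from a given edge node.
type RegionalCluster struct {
	Name string
	RTT  time.Duration
}

// nearestCluster returns the cluster with the lowest measured RTT
// (assumption: "nearest" means lowest RTT). ok is false for an empty list.
func nearestCluster(clusters []RegionalCluster) (best RegionalCluster, ok bool) {
	for _, c := range clusters {
		if !ok || c.RTT < best.RTT {
			best, ok = c, true
		}
	}
	return best, ok
}

func main() {
	clusters := []RegionalCluster{
		{Name: "region-east", RTT: 18 * time.Millisecond},
		{Name: "region-south", RTT: 7 * time.Millisecond},
		{Name: "region-north", RTT: 31 * time.Millisecond},
	}
	if c, ok := nearestCluster(clusters); ok {
		fmt.Println("join", c.Name) // join region-south
	}
}
```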

This mode worked in the early stage. However, when the number of edge nodes and deployed clusters increased, the following problem arose:

Unbalanced Connections in CloudCore Multi-Replica Deployment

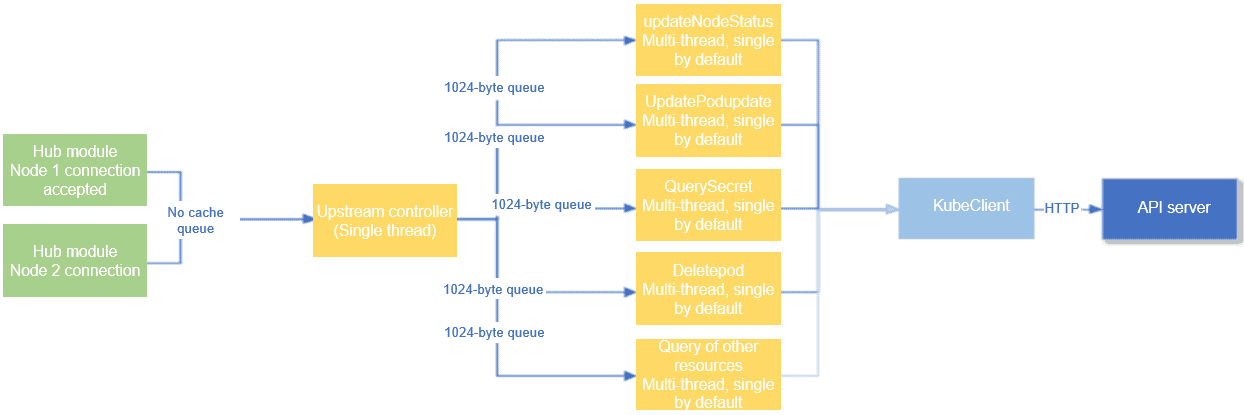

The preceding figure shows the connection path from CloudHub to the upstream module and then to the API server. The upstream module dispatched messages on a single thread, so messages were submitted slowly, and some edge nodes could not submit their messages to the API server in time, which caused deployment exceptions.

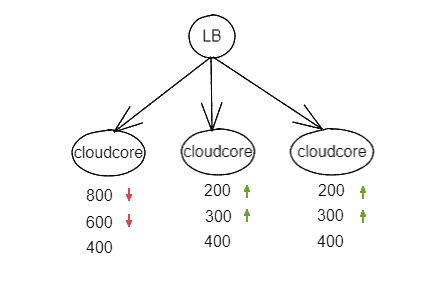

To solve this problem, e-Cloud deployed CloudCore with multiple replicas, but connections became unbalanced whenever CloudCore was upgraded or restarted unexpectedly. e-Cloud then placed layer-4 load balancing in front of the replicas and configured policies such as least connection. However, the layer-4 load balancer had cache filtering mechanisms and did not guarantee an even distribution of connections.
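To see why a layer-4 balancer alone may not keep CloudCore connections even, the sketch below models a least-connection policy in front of three replicas. It rests on a simplifying assumption that the article does not state: edge connections are long-lived, and the balancer only places new connections and never moves established ones. When one replica restarts, its edge nodes reconnect to the others, and the restarted replica stays empty.

```go
package main

import "fmt"

// pickLeastConn returns the index of the healthy replica with the fewest
// connections, breaking ties by lowest index, or -1 if none is healthy.
func pickLeastConn(conns []int, healthy []bool) int {
	best := -1
	for i := range conns {
		if healthy[i] && (best == -1 || conns[i] < conns[best]) {
			best = i
		}
	}
	return best
}

// afterRestart models replica `down` restarting behind a least-connection
// layer-4 balancer. Assumption: edge connections are long-lived and the
// balancer never moves an established connection.
func afterRestart(conns []int, down int) []int {
	out := append([]int(nil), conns...)
	healthy := make([]bool, len(out))
	for i := range healthy {
		healthy[i] = i != down
	}
	// The restarted replica's edge nodes reconnect to the remaining replicas.
	dropped := out[down]
	out[down] = 0
	for j := 0; j < dropped; j++ {
		idx := pickLeastConn(out, healthy)
		if idx < 0 {
			break // no other replica can take the connection
		}
		out[idx]++
	}
	// Once the replica is back it is healthy, but no edge node opens a new
	// connection, so the balancer routes nothing to it.
	return out
}

func main() {
	fmt.Println(afterRestart([]int{100, 100, 100}, 0)) // [0 150 150]
}
```

Under this model the imbalance persists until some connections are actively closed, which is the direction the solution below takes.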

After further optimization, they adopted the following solution:

CloudCore Multi-Replica Balancing Solution

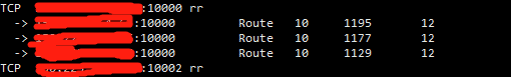

- After a CloudCore instance starts, it reports information such as its real-time connection count through ConfigMaps.

- It calculates the expected number of connections per instance from its own connection count and those of the other instances.

- It calculates the difference between its real-time and expected connection counts. If the difference exceeds the maximum tolerable difference, the instance releases connections and starts a 30-second observation period.

- After the observation period, the instance starts a new detection period, and it repeats this process until the connections are balanced.
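The balancing steps above can be sketched in Go as follows. This is a minimal sketch, not KubeEdge's implementation: reading the shared ConfigMaps and closing edge connections are hidden behind the hypothetical `readCounts` and `release` hooks, the expected share is assumed to be the total divided evenly across instances (rounded up), and an overloaded instance is assumed to release connections down to that share.

```go
package main

import (
	"fmt"
	"time"
)

// observationPeriod is the 30 s window described above.
const observationPeriod = 30 * time.Second

// expectedConns spreads the total evenly over all instances, rounding up
// (assumption) so an instance holding the ceiling share counts as balanced.
func expectedConns(counts map[string]int) int {
	if len(counts) == 0 {
		return 0
	}
	total := 0
	for _, c := range counts {
		total += c
	}
	return (total + len(counts) - 1) / len(counts)
}

// connsToRelease is how many connections instance self should drop: zero
// unless it exceeds its expected share by more than maxDiff, in which case
// it sheds down to that share (assumption).
func connsToRelease(self string, counts map[string]int, maxDiff int) int {
	if diff := counts[self] - expectedConns(counts); diff > maxDiff {
		return diff
	}
	return 0
}

// balanceLoop runs detection periods until the instance is balanced.
// readCounts and release are hypothetical hooks for reading the shared
// ConfigMaps and closing edge connections.
func balanceLoop(self string, maxDiff int, readCounts func() map[string]int,
	release func(n int), stop <-chan struct{}) {
	for {
		n := connsToRelease(self, readCounts(), maxDiff)
		if n == 0 {
			return // balanced: stop this process
		}
		release(n)
		select {
		case <-time.After(observationPeriod): // let edge nodes reconnect
		case <-stop:
			return
		}
	}
}

func main() {
	// Counts as reported through ConfigMaps after replica cc-0 restarted.
	counts := map[string]int{"cc-0": 0, "cc-1": 150, "cc-2": 150}
	for _, name := range []string{"cc-0", "cc-1", "cc-2"} {
		fmt.Println(name, connsToRelease(name, counts, 10))
	}
	// cc-0 0
	// cc-1 50
	// cc-2 50
}
```

Rounding the expected share up means that, when the total does not divide evenly, no instance keeps shedding a single leftover connection back and forth.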

Changes in the number of connections

Load balancing after the restart

Edge Service Deployment

CDN Acceleration Process

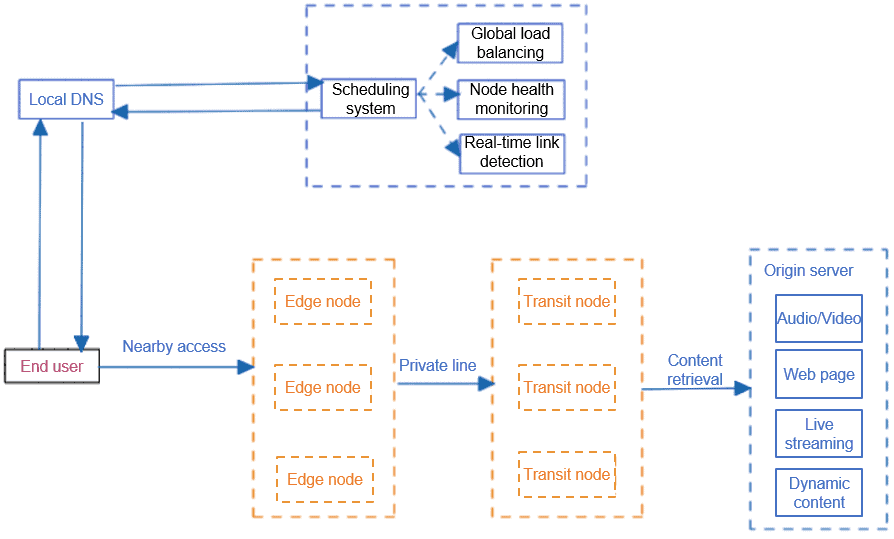

CDN consists of two core systems: scheduling and cache. The scheduling system collects the status of CDN links, nodes, and node bandwidth usage on the entire network in real time, calculates the optimal scheduling path, and pushes the path data to the local DNS, 302 redirect, or HTTP dynamic streaming (HDS).

The local DNS server then resolves the request and returns the result to the client, so the client can access the nearest edge cluster. The edge cluster checks whether it has cached the requested content. On a cache miss, it checks whether the two or three cache layers above it have the content. If they do not, the content is retrieved from the origin site.
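The tiered lookup just described can be sketched as walking the cache layers from the edge upward and falling back to the origin on a full miss. The `Tier` type and the `origin` callback are hypothetical, and filling the content into every layer that missed is an assumed cache policy, not something the article states.

```go
package main

import "fmt"

// Tier is a hypothetical cache layer: the edge cluster or one of the
// upper layers above it.
type Tier struct {
	Name  string
	Store map[string]string // URL -> cached content
}

// fetch walks the tiers from the edge upward and falls back to the origin
// on a full miss. It returns the content and the name of the layer that
// served it. Filling every layer that missed is an assumed policy.
func fetch(url string, tiers []*Tier, origin func(string) string) (string, string) {
	for i, t := range tiers {
		if body, ok := t.Store[url]; ok {
			for _, lower := range tiers[:i] {
				lower.Store[url] = body // fill the layers closer to the user
			}
			return body, t.Name
		}
	}
	body := origin(url) // back-to-origin request
	for _, t := range tiers {
		t.Store[url] = body
	}
	return body, "origin"
}

func main() {
	edge := &Tier{Name: "edge", Store: map[string]string{}}
	parent := &Tier{Name: "parent", Store: map[string]string{"/a.jpg": "A"}}
	origin := func(url string) string { return "origin copy of " + url }
	tiers := []*Tier{edge, parent}

	_, from := fetch("/a.jpg", tiers, origin)
	fmt.Println(from) // parent
	_, from = fetch("/a.jpg", tiers, origin)
	fmt.Println(from) // edge (filled on the previous miss)
	_, from = fetch("/b.jpg", tiers, origin)
	fmt.Println(from) // origin
}
```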

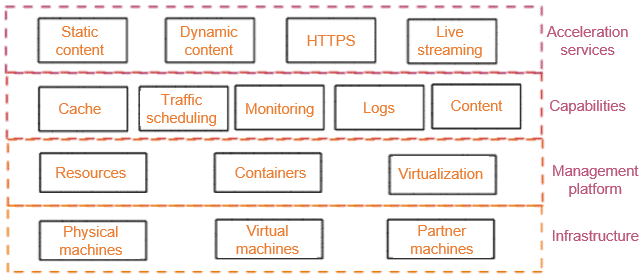

In the cache system, different products, such as live streaming and static content acceleration, use different services, which increases development and maintenance costs. Converging these acceleration services may therefore become a trend.

Features of the CDN cache service:

1. Exclusive storage and bandwidth resources

2. Large-scale coverage: A single cache service instance or machine may serve as many as 100,000 domain names.

3. Regional DR fault tolerance: Losing or expiring the cache on a small number of nodes is tolerable. If too much cached content is lost, however, the cache breaks down: requests fall back to the upper layers, access slows down, and services become abnormal.

4. High availability: Load balancing provides real-time health detection and traffic switching and diversion. Layer-4 load balancing balances traffic across the hosts in a group, and layer-7 load balancing uses consistent hashing to ensure that only one copy of each URL is stored in a group.
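The layer-7 behavior in item 4 relies on consistent hashing: each URL maps to exactly one host in the group, and removing a host only remaps the URLs that host owned. Below is a minimal hash ring with virtual nodes; the CRC-32 hash, the number of virtual nodes, and the host names are illustrative choices, not details from the article.

```go
package main

import (
	"fmt"
	"hash/crc32"
	"sort"
	"strconv"
)

// Ring is a minimal consistent-hash ring with virtual nodes.
type Ring struct {
	hashes []uint32          // sorted virtual-node hashes
	owner  map[uint32]string // virtual-node hash -> host
}

// NewRing places each host on the ring `replicas` times (virtual nodes),
// which spreads URLs more evenly across hosts.
func NewRing(hosts []string, replicas int) *Ring {
	r := &Ring{owner: make(map[uint32]string)}
	for _, h := range hosts {
		for i := 0; i < replicas; i++ {
			v := crc32.ChecksumIEEE([]byte(h + "#" + strconv.Itoa(i)))
			r.hashes = append(r.hashes, v)
			r.owner[v] = h
		}
	}
	sort.Slice(r.hashes, func(i, j int) bool { return r.hashes[i] < r.hashes[j] })
	return r
}

// Get returns the host that owns url: the first virtual node clockwise
// from the URL's hash, wrapping around at the end of the ring.
func (r *Ring) Get(url string) string {
	if len(r.hashes) == 0 {
		return ""
	}
	k := crc32.ChecksumIEEE([]byte(url))
	i := sort.Search(len(r.hashes), func(i int) bool { return r.hashes[i] >= k })
	if i == len(r.hashes) {
		i = 0
	}
	return r.owner[r.hashes[i]]
}

func main() {
	ring := NewRing([]string{"cache-1", "cache-2", "cache-3"}, 100)
	for _, u := range []string{"/live/a.m3u8", "/img/logo.png", "/dl/app.apk"} {
		fmt.Println(u, "->", ring.Get(u))
	}
}
```

Because a URL always lands on the same host, the group stores one copy of it instead of one copy per host, which is what keeps the effective cache capacity of the group high.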

The following problems are worthy of attention during CDN deployment:

- Controllable node container upgrade

- A/B test of versions

- Upgrade verification

The upgrade deployment solution includes:

- Concurrency control for batch and intra-group upgrades:

  - Creating a batch upgrade task

  - Upgrading the specified nodes through the controller

- Fine-grained version settings:

  - Creating a host-level version mapping

  - Adding pod version selection logic to the controller

- Graceful upgrade:

  - Routine traffic switching and recovery using pre-stop and post-start scripts

  - Associating with GSLB to switch traffic in special scenarios

- Upgrade verification: The controller works with the monitoring system. If a service exception is detected during the upgrade, the controller stops the upgrade and rolls back to the previous version.

- Secure orchestration: Admission webhooks check whether workloads and pods meet expectations.
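The batch-upgrade items above can be sketched as three pieces: planning batches with a per-group concurrency limit, resolving each host's version from a host-level mapping, and rolling back when monitoring reports a failure. All names here are hypothetical; `upgrade` and `healthy` stand in for the controller and the monitoring system, and rolling every touched host back to a single previous version is a simplification.

```go
package main

import (
	"errors"
	"fmt"
)

// Host is a hypothetical edge host in a CDN service group.
type Host struct {
	Name  string
	Group string
}

// errUnhealthy marks a failed post-upgrade check from monitoring.
var errUnhealthy = errors.New("health check failed")

// planBatches assigns each host to the first batch that still has room for
// its group, so no batch takes more than maxPerGroup hosts from one group.
func planBatches(hosts []Host, maxPerGroup int) [][]Host {
	var batches [][]Host
	var perGroup []map[string]int // per batch: group -> hosts in that batch
	for _, h := range hosts {
		placed := false
		for i := range batches {
			if perGroup[i][h.Group] < maxPerGroup {
				batches[i] = append(batches[i], h)
				perGroup[i][h.Group]++
				placed = true
				break
			}
		}
		if !placed {
			batches = append(batches, []Host{h})
			perGroup = append(perGroup, map[string]int{h.Group: 1})
		}
	}
	return batches
}

// versionFor applies the hypothetical host-level version mapping: an
// override (for example an A/B-test host) wins over the default target.
func versionFor(host string, overrides map[string]string, target string) string {
	if v, ok := overrides[host]; ok {
		return v
	}
	return target
}

// rollout upgrades batch by batch and verifies each batch. On the first
// failure it rolls every touched host back to prev and stops.
func rollout(batches [][]Host, overrides map[string]string, target, prev string,
	upgrade func(h Host, version string) error, healthy func(h Host) bool) error {
	var touched []Host
	rollback := func(cause error) error {
		for _, h := range touched {
			_ = upgrade(h, prev) // best effort in this sketch
		}
		return cause
	}
	for i, batch := range batches {
		for _, h := range batch {
			touched = append(touched, h)
			if err := upgrade(h, versionFor(h.Name, overrides, target)); err != nil {
				return rollback(fmt.Errorf("batch %d, host %s: %w", i, h.Name, err))
			}
		}
		for _, h := range batch {
			if !healthy(h) {
				return rollback(fmt.Errorf("batch %d, host %s: %w", i, h.Name, errUnhealthy))
			}
		}
	}
	return nil
}

func main() {
	hosts := []Host{{"h1", "g1"}, {"h2", "g1"}, {"h3", "g1"}, {"h4", "g2"}}
	batches := planBatches(hosts, 1)
	fmt.Println(batches) // [[{h1 g1} {h4 g2}] [{h2 g1}] [{h3 g1}]]

	versions := map[string]string{}
	err := rollout(batches, map[string]string{"h4": "v2-canary"}, "v2", "v1",
		func(h Host, v string) error { versions[h.Name] = v; return nil },
		func(h Host) bool { return h.Name != "h2" }) // simulate h2 failing
	fmt.Println(err)      // batch 1, host h2: health check failed
	fmt.Println(versions) // map[h1:v1 h2:v1 h4:v1]
}
```

Limiting each batch to one host per group means a group never loses more than one serving host at a time, and host h3 is never touched because the rollout stops at the failing batch.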

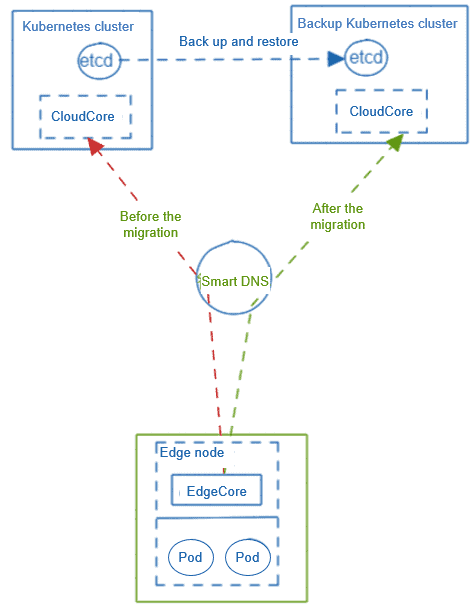

KubeEdge-based CDN Edge Container DR and Migration

Migration procedure

- Back up etcd and restore it in the new cluster.

- Switch DNS to the new cluster.

- Restart CloudCore to disconnect CloudHub from EdgeHub, so that the edge nodes reconnect to the new cluster.

Advantages

- Low cost: Thanks to KubeEdge's edge autonomy, edge containers do not need to be rebuilt and services are not interrupted.

- Simple, controllable process with high service security

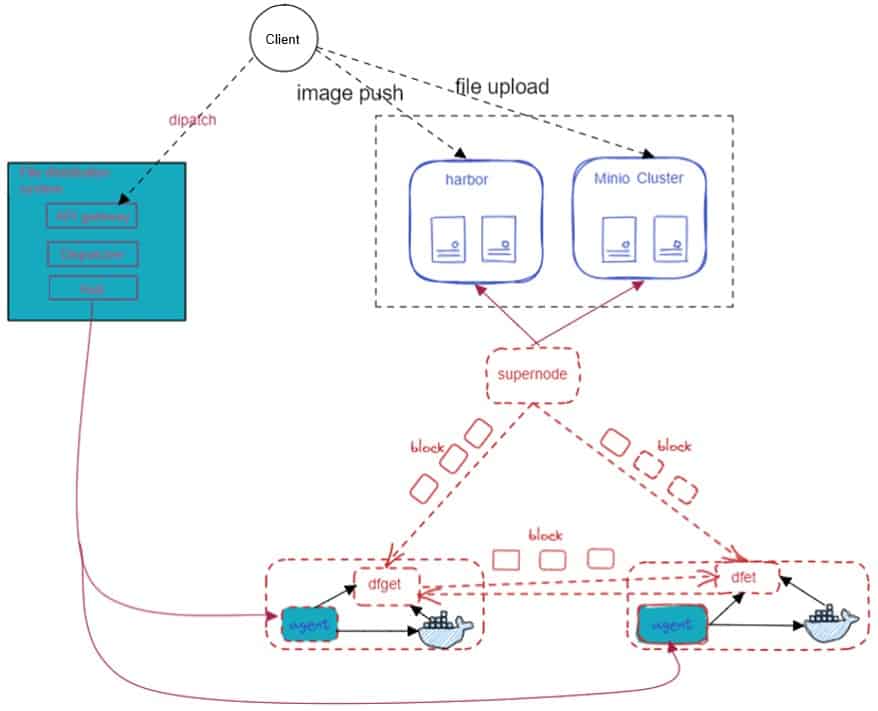

CDN Large-Scale File Distribution

Scenarios

- CDN edge service configuration

- GSLB scheduling decision data

- Container image buffer tasks

Architecture Evolution Directions

Edge Computing Challenges

- Management of widely distributed, diverse resources with inconsistent architecture and specifications

- Limited bandwidth and poor stability of heterogeneous, mobile, and other weak networks

- Lack of a unified security system for edge services

- A wide range of service scenarios and types

Basic Capabilities of CDN-based Edge Computing Platform

- Resources:

  - Distributed nodes and excess resources reserved for service surges

  - Cloud-edge synergy through KubeEdge

  - Heterogeneous resource deployment and management on clients

- Scheduling and networking:

  - Dedicated EDNS for precise scheduling at the municipal level and nearby access

  - Unified scheduling of CDN and edge computing

  - Cloud-edge dedicated network for reliable management channels, data backhaul, and dynamic acceleration

  - IPv6 support

- Security:

  - CDN+WAF anti-DDoS, traffic cleaning, and near-source interception

  - HTTPS acceleration, SSL offloading, and keyless authentication

- Gateway:

  - Edge scheduling and powerful load balancing

  - Processing of general protocols, including streaming media protocols

CDN Edge Computing Evolution

Edge Infrastructure Construction

- Hybrid nodes of edge computing and CDN

- Node-level service mesh

- Complete container isolation and security

- Ingress-based and universal CDN gateways

- Virtual CDN edge resources

- Edge serverless container platform

- Unified resource scheduling platform for CDN and containers

Opportunities

- Offline computing, video encoding and transcoding, and video rendering

- Batch jobs

- Dial testing and stress testing

More information about KubeEdge:

- Website: https://kubeedge.io/en/

- GitHub: https://github.com/kubeedge/kubeedge

- Slack: https://kubeedge.slack.com

- Email list: https://groups.google.com/forum/#!forum/kubeedge

- Weekly community meeting: https://zoom.us/j/4167237304

- Twitter: https://twitter.com/KubeEdge