Guest post originally published on the SIGHUP blog by Alessandro Lo Manto

The IT world is adopting container-based infrastructures more and more every day. However, the advantages, disadvantages and even the limitations are not clear to everyone.

Considering that even large companies are approaching container-based infrastructures, it is a good idea to account for possible attack areas and the potential impact of a data breach.

Technologies like Docker (containerd) and LXC do not provide fully isolated systems, because their containers share the Linux kernel of the host operating system.

For a potential attacker, having their container launched within a large company is a golden opportunity. Does technology allow us to defend ourselves easily?

Current container technology

It has been said many times: containers are a new way of packaging, sharing, and deploying applications, as opposed to monolithic applications where all features are packaged in a single piece of software or possibly an operating system.

Containers do not rely on anything fundamentally new; they are an evolution built on top of Linux namespaces and Linux control groups (cgroups). A namespace creates a virtual, isolated user space and gives the application its own view of system resources such as the file system, network and processes. This abstraction allows the application to be started independently without interfering with other applications running on the same host.

Thanks to namespaces and cgroups together, we can run many applications on the same host, each in its own isolated environment.
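
As a rough illustration of the paragraph above, the following shell sketch lists the namespaces a process belongs to and prints its cgroup membership by reading `/proc`. The helper name `list_namespaces` is made up for this example; the `/proc/<pid>/ns` and `/proc/<pid>/cgroup` paths are standard on Linux, and the sketch simply prints nothing on systems without them.

```shell
#!/bin/sh
# list_namespaces is a hypothetical helper name used only in this sketch.
# It prints one "<type> <identifier>" line per namespace link found under
# <proc_dir>/ns, where <proc_dir> defaults to the current shell's /proc entry.
list_namespaces() {
    proc_dir="${1:-/proc/$$}"
    for ns in "$proc_dir"/ns/*; do
        # Skip the unexpanded glob when the directory does not exist
        # (for example on a non-Linux system).
        [ -L "$ns" ] || continue
        printf '%s %s\n' "${ns##*/}" "$(readlink "$ns")"
    done
}

list_namespaces

# Control group membership of the current shell (Linux only).
if [ -r "/proc/$$/cgroup" ]; then
    cat "/proc/$$/cgroup"
fi
```

Two processes in the same container report the same identifiers, while a process in another container reports different ones for every namespace that is not shared.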

Container vs Virtual Machine

It is clear that container technology solves a problem in terms of isolation, portability and streamlined architecture compared to a virtual machine environment. But let’s not forget that a virtual machine isolates our application all the way down to the kernel level, so the risk that an attacker escapes a container and compromises the host is much higher than the risk of escaping a virtual machine.

Most Linux kernel exploits may also work from inside a container, which could allow an attacker to escalate privileges and compromise not only the affected namespace but also other namespaces within the same operating system.

These security issues led researchers to try to create namespaces that are truly separated from the host. Such runtimes are called “sandboxed”, and there are now several solutions that provide these features, for example gVisor and Kata Containers.

Container Runtime in Kubernetes

We can go deeper into this type of technology within a container orchestrator, Kubernetes.

Kubernetes uses a component, kubelet, to manage containers. We can define it as the captain of the ship that takes charge of the specifications that are supplied to it and performs its operations punctually and precisely.

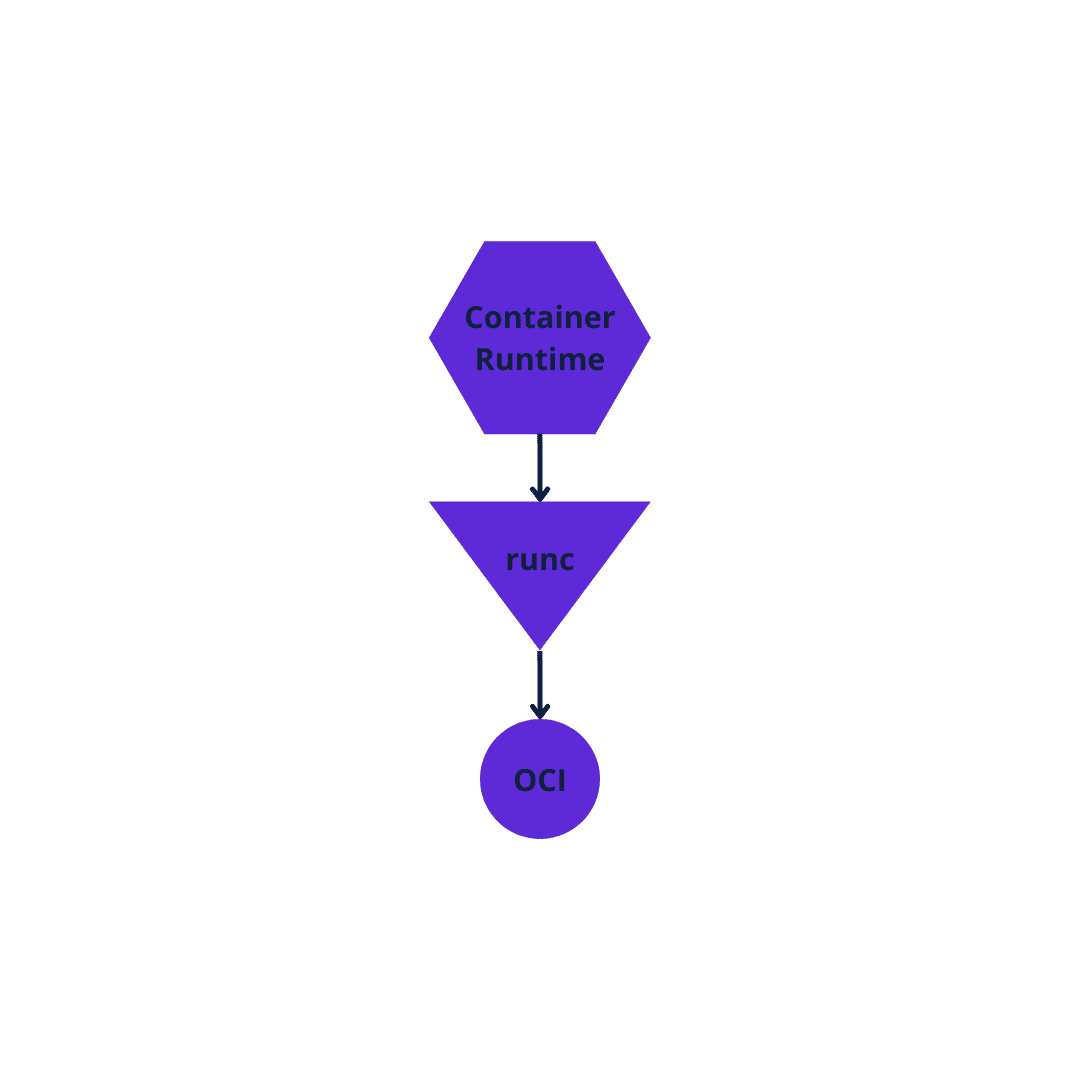

Kubelet takes pod specifications and runs them as containers on the host machine to which they are assigned. It can interact with any container runtime as long as it is compliant with the OCI standard, whose reference implementation is RunC.

RunC, originally embedded into the Docker architecture, was released in 2015 as a standalone tool. It has become a commonly used, standard, cross-functional container runtime that DevOps teams can use as part of container engines.

RunC provides all the functionality to interact with existing low-level Linux features. It uses namespaces and control groups to create and run container processes.

In the following section, we will introduce the RuntimeClass and its core elements. Among them is the RuntimeClass handler, whose default value is RunC (for installations of Kubernetes that use containerd as the container runtime).

Runtime Class

The Runtime Class, as its name suggests, allows us to operate with various container runtimes. In 2014, Docker was the only container runtime available on Kubernetes. Starting with version 1.3 of Kubernetes, compatibility with rkt (Rocket) was added, and finally, with Kubernetes 1.5, the Container Runtime Interface (CRI) was introduced. The CRI is a standard interface that any container runtime can plug into directly, which saves developers the trouble of adapting to every type of container runtime and worrying about version maintenance.

The CRI, in fact, has allowed us to decouple the container runtime from Kubernetes and, above all, has allowed technologies such as Kata Containers and gVisor to plug into a container runtime such as containerd.

In Kubernetes 1.14, RuntimeClass was reintroduced as a built-in cluster resource with a handler property at its core.

The handler indicates the program that receives the container creation request and corresponds to a container runtime.

    kind: RuntimeClass
    apiVersion: node.k8s.io/v1
    metadata:
      name: # RuntimeClass name
    handler: # container runtime, for example: runc
    overhead:
      podFixed:
        memory: "" # 64Mi
        cpu: "" # 250m
    scheduling:
      nodeSelector:
        <key>: <value> # container-rt: gvisor

- The handler field points to the specific container runtime or configuration to be used.

- Declaring overhead allows the cluster (including the scheduler) to account for it when making decisions about Pods and resources. Through the use of these fields, you can specify the overhead of running pods utilizing this RuntimeClass and ensure these overheads are accounted for in Kubernetes.

- The scheduling field is used to ensure that Pods are scheduled on the right nodes.

By default, our handler, if we have a cluster with Docker or containerd, is runc, but if we use gVisor it will be runsc.
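
To make the three fields described above concrete, here is a hedged sketch that writes a filled-in RuntimeClass manifest to a file. The values `64Mi` and `250m` and the label `container-rt: gvisor` reuse the placeholders from the comments in the template and are illustrative, not measured. The helper name `write_gvisor_runtimeclass` and the output path are assumptions made for this example.

```shell
#!/bin/sh
# write_gvisor_runtimeclass is a hypothetical helper for this sketch.
# It writes an example RuntimeClass manifest to the path given as $1.
write_gvisor_runtimeclass() {
    cat > "$1" <<'EOF'
apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: gvisor
handler: runsc            # must match a runtime configured on the node
overhead:
  podFixed:
    memory: "64Mi"        # illustrative value, not a measurement
    cpu: "250m"           # illustrative value, not a measurement
scheduling:
  nodeSelector:
    container-rt: gvisor  # assumed node label; pick your own key/value
EOF
}

manifest="${TMPDIR:-/tmp}/gvisor-runtimeclass.yaml"
write_gvisor_runtimeclass "$manifest"
echo "manifest written to $manifest"

# On a real cluster, one would then label the gVisor nodes and apply it:
#   kubectl label node <node-name> container-rt=gvisor
#   kubectl apply -f "$manifest"
```

If only some nodes have the sandboxed runtime installed, the nodeSelector keeps Pods that use this RuntimeClass off the nodes that lack the handler.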

Isolate Linux Host and Containers with gVisor in Kubernetes

Now we will see how we can have more than one container runtime in a Kubernetes cluster and choose a stricter one for sensitive workloads.

For this tutorial, I use a previous project where I carried out the installation of a Kubernetes cluster with containerd.

https://github.com/alessandrolomanto/k8s-vanilla-containerd

Initialize the Kubernetes Cluster

    make vagrant-start

After bringing machines up, verify if all components are up and running:

    vagrant ssh master
    kubectl get nodes

    NAME      STATUS   ROLES                  AGE     VERSION
    master    Ready    control-plane,master   7m59s   v1.21.0
    worker1   Ready    <none>                 5m50s   v1.21.0
    worker2   Ready    <none>                 3m51s   v1.21.0

Install gVisor on worker1

    ssh worker1 # Vagrant default password: vagrant
    sudo su

Install latest gVisor release

    (
      set -e
      ARCH=$(uname -m)
      URL=https://storage.googleapis.com/gvisor/releases/release/latest/${ARCH}
      wget ${URL}/runsc ${URL}/runsc.sha512 \
        ${URL}/containerd-shim-runsc-v1 ${URL}/containerd-shim-runsc-v1.sha512
      sha512sum -c runsc.sha512 \
        -c containerd-shim-runsc-v1.sha512
      rm -f *.sha512
      chmod a+rx runsc containerd-shim-runsc-v1
      sudo mv runsc containerd-shim-runsc-v1 /usr/local/bin
    )

    FINISHED --2022-04-28 07:24:44--
    Total wall clock time: 5.2s
    Downloaded: 4 files, 62M in 3.1s (20.2 MB/s)
    runsc: OK
    containerd-shim-runsc-v1: OK
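
Before moving on, it can be useful to confirm that both binaries ended up on the `PATH`. The following is a minimal sketch of such a check; the helper name `check_on_path` is hypothetical and not part of gVisor.

```shell
#!/bin/sh
# check_on_path is a hypothetical helper: it reports, for each name given,
# whether an executable with that name can be found on PATH.
# It returns non-zero if at least one name is missing.
check_on_path() {
    missing=0
    for bin in "$@"; do
        if command -v "$bin" >/dev/null 2>&1; then
            echo "found:   $bin"
        else
            echo "missing: $bin"
            missing=1
        fi
    done
    return "$missing"
}

# The two binaries installed by the script above.
check_on_path runsc containerd-shim-runsc-v1 \
    || echo "gVisor is not fully installed on this machine"

# If runsc is present, print its version string.
if command -v runsc >/dev/null 2>&1; then
    runsc --version
fi
```

Run it on worker1, where the installation took place; on the other nodes it is expected to report both binaries as missing.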

Configure container runtime

    cat <<EOF | sudo tee /etc/containerd/config.toml
    version = 2
    [plugins."io.containerd.runtime.v1.linux"]
      shim_debug = true
    [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]
      runtime_type = "io.containerd.runc.v2"
    [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runsc]
      runtime_type = "io.containerd.runsc.v1"
    EOF

Restart containerd service

    sudo systemctl restart containerd
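
A quick sanity check after editing the configuration is to confirm that the file declares both runtime tables. The sketch below only inspects the text of the config file; it does not ask containerd what it actually loaded. The helper name `has_runtime` is an assumption made for this example.

```shell
#!/bin/sh
# has_runtime is a hypothetical helper: it succeeds when the containerd
# config file $1 declares a CRI runtime table named $2.
has_runtime() {
    grep -qF ".containerd.runtimes.$2]" "$1"
}

config=/etc/containerd/config.toml
if [ -r "$config" ]; then
    for rt in runc runsc; do
        if has_runtime "$config" "$rt"; then
            echo "$rt: configured"
        else
            echo "$rt: not configured"
        fi
    done
fi

# After the restart, the service state can be checked with:
#   systemctl is-active containerd
```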

Install the RuntimeClass for gVisor

    cat <<EOF | kubectl apply -f -
    apiVersion: node.k8s.io/v1
    kind: RuntimeClass
    metadata:
      name: gvisor
    handler: runsc
    EOF

Verify:

    vagrant@master:~$ kubectl get runtimeclass
    NAME     HANDLER   AGE
    gvisor   runsc     17s

Create a Pod with the gVisor RuntimeClass:

    cat <<EOF | kubectl apply -f -
    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-gvisor
    spec:
      runtimeClassName: gvisor
      containers:
      - name: nginx
        image: nginx
    EOF

Verify that the Pod is running:

    vagrant@master:~$ kubectl get pod nginx-gvisor -o wide
    NAME           READY   STATUS    RESTARTS   AGE   IP                NODE      NOMINATED NODE   READINESS GATES
    nginx-gvisor   1/1     Running   0          31s   192.168.235.129   worker1   <none>           <none>
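
A Running status shows that the Pod was scheduled, but not that it is sandboxed. One way to check is to read the kernel log from inside the container: under gVisor that log is produced by the sandbox itself and typically mentions gVisor. The sketch below assumes that `dmesg` is available in the image and that the log contains the word "gVisor"; both are assumptions, and the helper name `looks_like_gvisor` is made up for this example.

```shell
#!/bin/sh
# looks_like_gvisor is a hypothetical helper: it reads kernel log text on
# stdin and succeeds if any line mentions gVisor (case-insensitive).
looks_like_gvisor() {
    grep -qi 'gvisor'
}

# Only attempt the check when kubectl is available on this machine.
if command -v kubectl >/dev/null 2>&1; then
    if kubectl --request-timeout=10s exec nginx-gvisor -- dmesg 2>/dev/null \
        | looks_like_gvisor; then
        echo "nginx-gvisor appears to run inside the gVisor sandbox"
    else
        echo "no gVisor marker found (or the pod is not reachable)"
    fi
fi
```

Running the same command against a Pod that uses the default runc handler would show the host kernel's log instead, or be denied access to it.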

For up-to-date information, refer to the official docs.

Conclusions

We have seen that current container technology suffers from weak isolation.

Common practices such as patching containers quickly and applying least-privilege security contexts can effectively reduce the attack surface. We should also start implementing runtime security measures like the one in the tutorial above, because it is now possible to have more than one container runtime.
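
As a hedged illustration of combining both ideas, the sketch below writes a Pod manifest that pairs the gVisor RuntimeClass with a restrictive security context. The Pod name, the image reference and the helper name `write_hardened_pod` are placeholders invented for this example; the securityContext fields are standard Kubernetes Pod fields, but the right settings depend on the workload.

```shell
#!/bin/sh
# write_hardened_pod is a hypothetical helper for this sketch.
# It writes an example Pod manifest to the path given as $1.
write_hardened_pod() {
    cat > "$1" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: hardened-example                  # placeholder name
spec:
  runtimeClassName: gvisor                # sandboxed runtime from the tutorial
  containers:
  - name: app
    image: registry.example.com/app:1.0   # placeholder image
    securityContext:
      runAsNonRoot: true                  # image must define a non-root user
      allowPrivilegeEscalation: false
      readOnlyRootFilesystem: true
      capabilities:
        drop: ["ALL"]
EOF
}

pod_manifest="${TMPDIR:-/tmp}/hardened-pod.yaml"
write_hardened_pod "$pod_manifest"
echo "manifest written to $pod_manifest"

# On a real cluster: kubectl apply -f "$pod_manifest"
```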

Sure, it is not something that everyone needs, but it will certainly come in handy when you want to run untrusted containers without impacting the host in any way.

Suppose you run a container hosting service and start containers from different customers on the same host machine. Could one customer compromise the others because of the shared context? Start thinking about how to mitigate these issues.