Guest post by Mengxin Liu, Kube-OVN Founding Engineer, Alauda Senior Engineer.

As cloud native technologies extend into data centers and infrastructure, more and more enterprises are using Kubernetes and KubeVirt to run virtualized workloads and to manage both virtual machines and containers from a unified control plane. However, the usage scenarios of VMs differ significantly from those of containers. At the same time, emerging container networking solutions are not designed for virtualization scenarios: their feature sets and performance characteristics are quite different from those of traditional data center networks. Networking has become a bottleneck in adopting cloud native virtualization.

Kube-OVN, as its name implies, is an integration of the OVN/OVS based networking with Kubernetes. Since OVN and OVS have been widely used in traditional virtualization scenarios, Kube-OVN has attracted a lot of attention from the KubeVirt community since it was open sourced. Some early adopters of KubeVirt have further improved the networking capabilities of Kube-OVN for their use cases. This resulted in a large number of new and optimized Kube-OVN features specifically targeted towards solving some of the networking challenges faced by KubeVirt.

VM Static IP Addresses

Container networks usually do not require that IP addresses be fixed. In VM usage scenarios, however, the IP addresses are often used as the primary access method. Users typically require that the VM addresses remain stable as the VM is restarted, upgraded, or migrated. Virtualization administrators also have a need to control address assignments and want the ability to specify VM addresses.

After several iterations, Kube-OVN now supports:

* IP address specification at VM creation, with addresses remaining stable throughout the lifecycle of the VM.

* Random IP address assignment at VM creation, with addresses remaining stable throughout the lifecycle of the VM.

* NICs and their IP addresses remaining fixed during VM live migration.

Specifying static IP addresses at VM creation

To assign a specific IP address to a VM at its creation time, simply add an annotation ovn.kubernetes.io/ip_address to the template of KubeVirt’s VirtualMachine resource:

    apiVersion: kubevirt.io/v1alpha3
    kind: VirtualMachine
    metadata:
      name: testvm
    spec:
      template:
        metadata:
          annotations:
            ovn.kubernetes.io/ip_address: 10.16.0.15

For VMs created this way, Kube-OVN will automatically assign the specified IP address according to the annotation field, and this address will remain stable for the remainder of the VM’s lifecycle.

Assigning random static IP addresses at VM creation

In some cases, the administrator does not want to manually assign an IP address each time a VM is created, but still wants the address to remain fixed for the VM throughout its lifecycle.

The ability to associate the assigned addresses with VirtualMachine instances has been introduced in Kube-OVN v1.8.3 and v1.9.1. The lifecycle of the assigned IP addresses in Kube-OVN will be aligned with that of the VirtualMachine instance, enabling a one-to-one mapping. Administrators do not need to manually assign VM addresses in order to keep them stable.

To enable this feature, simply add the following parameter to the kube-ovn-controller:

--keep-vm-ip=true
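As a rough sketch of where this flag goes, the fragment below adds it to the container arguments of the kube-ovn-controller Deployment. The surrounding layout (the container name and the presence of other arguments) is an assumption about a typical installation, so adapt it to the manifest you actually deploy.

```yaml
# Fragment of the kube-ovn-controller Deployment spec (assumed layout).
spec:
  template:
    spec:
      containers:
        - name: kube-ovn-controller   # assumed container name
          args:
            # Keep the arguments your installation already passes here.
            - --keep-vm-ip=true       # tie assigned IPs to the VirtualMachine lifecycle
```

After the controller restarts with this argument, newly created VMs that have no explicit address annotation should keep the address they were first assigned.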

https://github.com/kubeovn/kube-ovn/issues/1297

https://github.com/kubeovn/kube-ovn/pull/1307

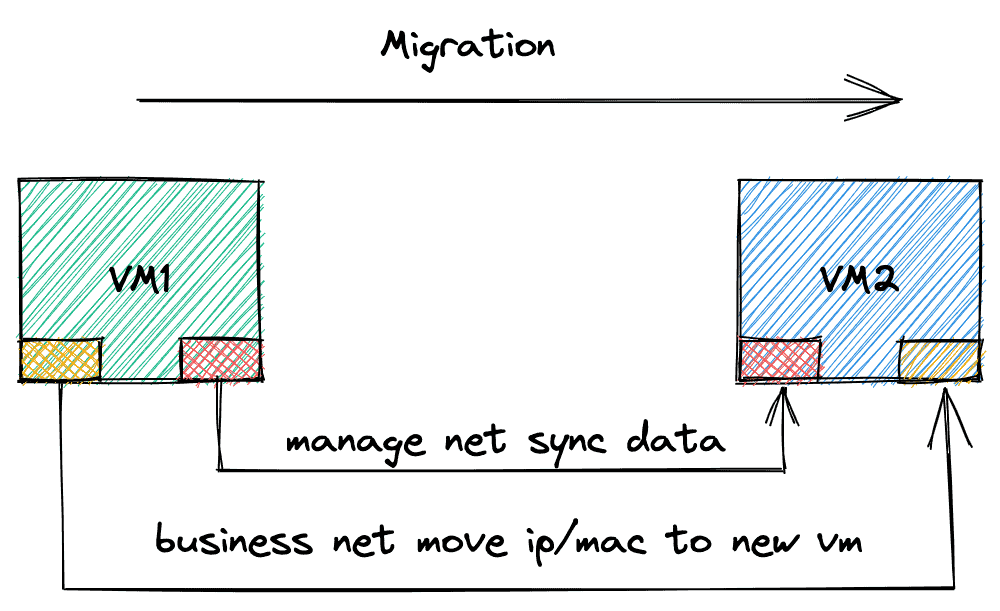

Stable IP addresses across VM live migration

KubeVirt’s live migration faces a lot of networking challenges, especially in the fixed IP scenario, which makes it more complicated to avoid IP conflicts. KubeVirt uses the default network for state synchronization during live migration. By using the multus-cni to separate the service network from the migration network, we can manage IP address migration at the functional level.

- The default network is used only for live migration. Kube-OVN must be attached to the VM as an additional NIC through multus-cni.

- Add the corresponding annotation to the VM to enable fixed IP and live migration support:

<attach>.<ns>.ovn.kubernetes.io/allow_live_migration: 'true'

- KubeVirt’s DHCP will set the wrong default route, so add the following annotation to select the NIC that should carry the default route:

<attach>.<ns>.ovn.kubernetes.io/default_route: 'true'

With the above configuration, Kube-OVN will take over the transfer process of the network during the live migration and choose the right point in time for the network switchover.
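To make the placeholders concrete, here is a minimal sketch of the two annotations on a VirtualMachine template. It assumes a hypothetical multus attachment named attachnet in the default namespace, so that <attach> becomes attachnet and <ns> becomes default. The attachment definition itself and the VM's interface and network sections are omitted.

```yaml
# Sketch only: "attachnet" and "default" are hypothetical values for <attach> and <ns>.
apiVersion: kubevirt.io/v1alpha3
kind: VirtualMachine
metadata:
  name: testvm
spec:
  template:
    metadata:
      annotations:
        # Let Kube-OVN handle the fixed IP during live migration.
        attachnet.default.ovn.kubernetes.io/allow_live_migration: 'true'
        # Use the Kube-OVN NIC for the default route.
        attachnet.default.ovn.kubernetes.io/default_route: 'true'
```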

https://github.com/kubeovn/kube-ovn/pull/1001

Multi-tenant Networks

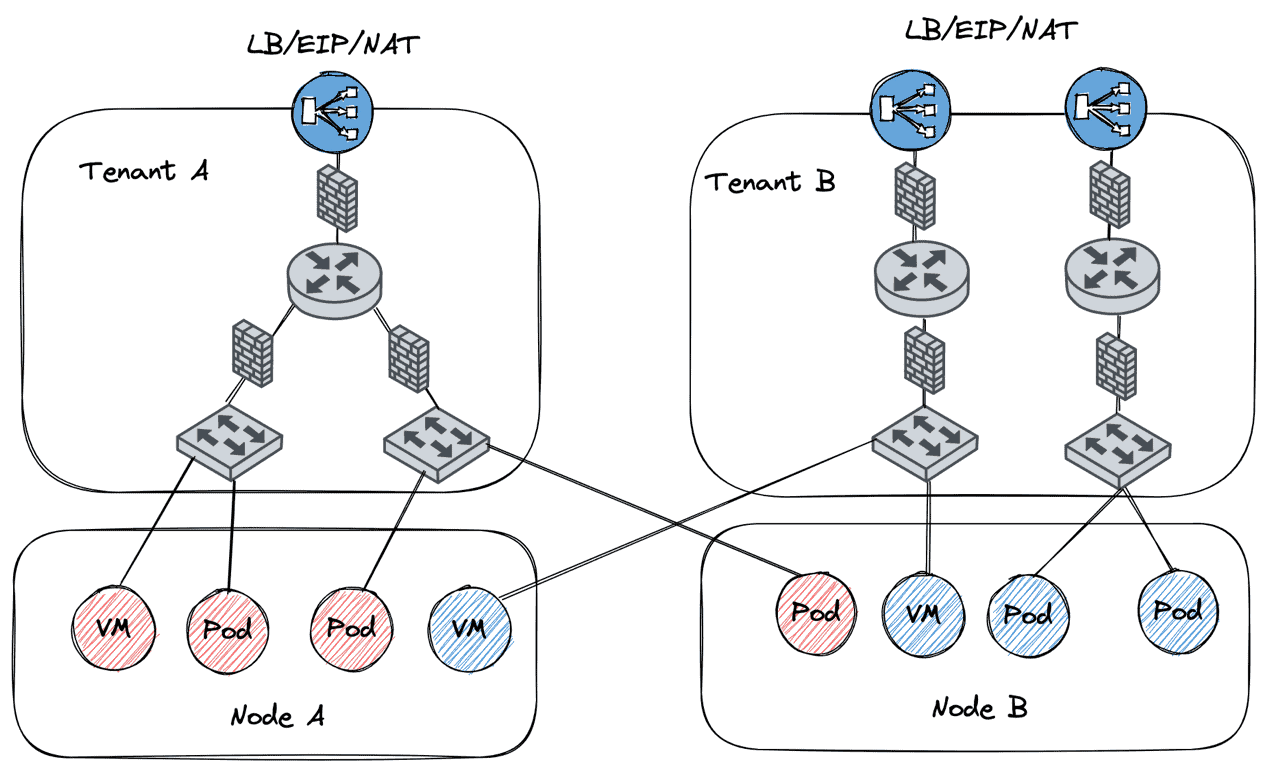

In traditional virtualization scenarios, multi-tenancy with VPCs is generally considered a best practice in enterprise IT environments. However, Kubernetes lacks built-in support for tenant-level isolation, which limits how well container networks and KubeVirt can support multi-tenancy.

Kube-OVN enables multi-tenant networking in Kubernetes by introducing a new set of networking CRDs: VPC, Subnet, NAT-Gateway. The virtualization control plane in KubeVirt can manage VPCs and Subnets so that different VMs reside in different tenant networks. This enables complete multi-tenancy capabilities in virtualization scenarios.

In addition, Kube-OVN provides control knobs around LB/EIP/NAT/Route Table, etc. This enables administrators to manage a cloud native virtualization network as if it were a traditional data center network.
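As an illustration of what these CRDs can look like, the sketch below defines one VPC and one Subnet for a single tenant. The resource names, the namespace, and the CIDR are hypothetical, and the field names reflect our understanding of the Kube-OVN VPC documentation, so check them against the version you run.

```yaml
# Sketch only: names, namespace, and CIDR are hypothetical.
apiVersion: kubeovn.io/v1
kind: Vpc
metadata:
  name: tenant-a-vpc
spec:
  namespaces:
    - tenant-a              # namespaces that belong to this tenant network
---
apiVersion: kubeovn.io/v1
kind: Subnet
metadata:
  name: tenant-a-subnet
spec:
  vpc: tenant-a-vpc         # place the subnet inside the tenant VPC
  protocol: IPv4
  cidrBlock: 10.0.1.0/24
  namespaces:
    - tenant-a              # VMs created in this namespace get addresses from this subnet
```

Repeating this pair for each tenant keeps their VMs in separate logical networks, even if the address ranges overlap.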

kube-ovn/vpc.md at master · kubeovn/kube-ovn

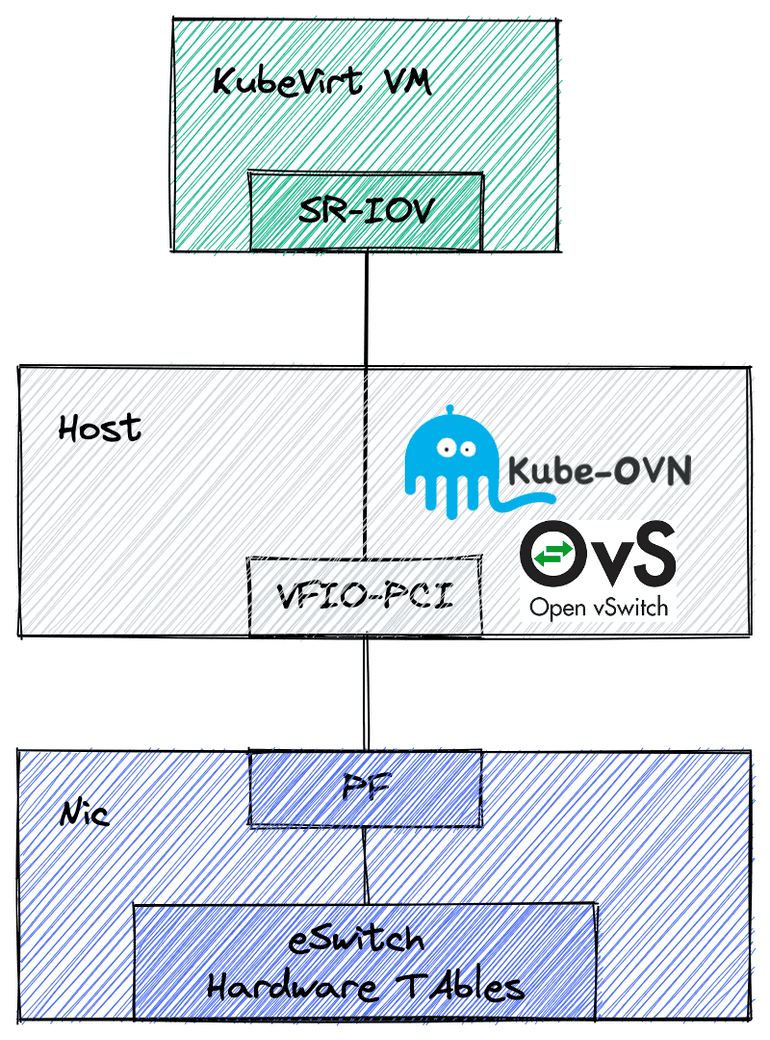

SR-IOV and OVS-DPDK support

KubeVirt’s default Pod mode network suffers from long network data paths and poor performance due to the need to adapt to CNI specifications. On the other hand, SR-IOV mode suffers from inflexible features and complex configurations.

Kube-OVN achieves high performance by directing SR-IOV devices to KubeVirt VMs through the OVS offload capability of the smart NIC. Kube-OVN also retains the address allocation and OVN logical flow table capabilities, enabling complete SDN networking and striking a good balance between performance and functionality.

Although upstream KubeVirt has no support for OVS-DPDK type networks, many users in the Kube-OVN community have independently developed KubeVirt support for OVS-DPDK. As a result, even with an ordinary NIC, OVS-DPDK acceleration can improve network throughput within a VM.

kube-ovn/hw-offload-mellanox.md at master · kubeovn/kube-ovn

kube-ovn/hw-offload-corigine.md at master · kubeovn/kube-ovn

kube-ovn/dpdk-hybrid.md at master · kubeovn/kube-ovn

To sum up

In this blog post, we described several interesting use cases and special considerations when designing the network fabric for cloud native virtualization. The KubeVirt community has contributed to a number of virtualization-related features in Kube-OVN, including VM static IP addresses, multi-tenant networks, and SR-IOV and OVS-DPDK support. We see Kube-OVN as a great companion for KubeVirt, and when used together, they really light up the cloud native virtualization experience. We hope more users from the KubeVirt community will try out these features and give us feedback.