Guest post originally published on the Clockwork blog

TL;DR:

- Contrary to expectation, colocated VMs do not enjoy lower-latency connectivity to each other

- On network links between colocated VMs, packet drops are just as likely as on non-colocated links

- For maximum cloud system performance, VM colocation should be avoided

In the previous blog post in this series, we discussed how cloud providers’ VM placement algorithms end up creating multiple VMs on the same physical machine, and how the resulting VM colocation can impair network bandwidth.

In this second blog post, we investigate the impact of VM colocation on additional key network performance metrics, namely network latency and network packet drops.

Network Latency

Using Clockwork’s highly accurate clock synchronization, we can identify packets that do not incur any queueing delay on their path from the sender VM to the receiver VM, and measure the intrinsic networking latency. We use the raw measurements to compute two-way delays, defined as the sum of the one-way delays from source to destination and from destination back to source, while ensuring that neither of the two terms is affected by queueing delays. This measurement is different from roundtrip time (ping time), which additionally includes turnaround processing time at the destination VM.
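To make the definition concrete, here is a minimal Python sketch of a two-way delay computation. It is not Clockwork's implementation: it assumes that the sender and receiver clocks are already synchronized, and it uses the smallest observed one-way delay in each direction as a stand-in for a packet that saw no queueing. The `Probe` type, the function name, and the sample timestamps are all hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Probe:
    """One probe packet with send/receive timestamps in microseconds.

    Assumes both timestamps come from synchronized clocks.
    """
    sent_us: float
    received_us: float

    @property
    def one_way_delay_us(self) -> float:
        return self.received_us - self.sent_us


def min_two_way_delay_us(forward: Sequence[Probe], reverse: Sequence[Probe]) -> float:
    """Sum of the smallest forward and smallest reverse one-way delays.

    Taking the minimum in each direction is an assumption of this sketch:
    it approximates packets that were not held up in any queue.
    """
    if not forward or not reverse:
        raise ValueError("need at least one probe in each direction")
    best_forward = min(p.one_way_delay_us for p in forward)
    best_reverse = min(p.one_way_delay_us for p in reverse)
    return best_forward + best_reverse


# Made-up sample data: source -> destination and destination -> source.
forward = [Probe(0.0, 52.0), Probe(100.0, 148.0), Probe(200.0, 290.0)]
reverse = [Probe(10.0, 61.0), Probe(110.0, 157.0)]

print(min_two_way_delay_us(forward, reverse))
```

Because the two terms are measured separately, the turnaround time at the destination VM never enters the sum, which is what distinguishes this value from a ping time.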

Since colocated VMs can communicate without using physical network links, it is reasonable to assume that their network latency is lower than in the non-colocated case. Let’s see if this assumption holds in reality. To this end, we measure the minimum two-way delay for small UDP packets while the network is at 50% load.

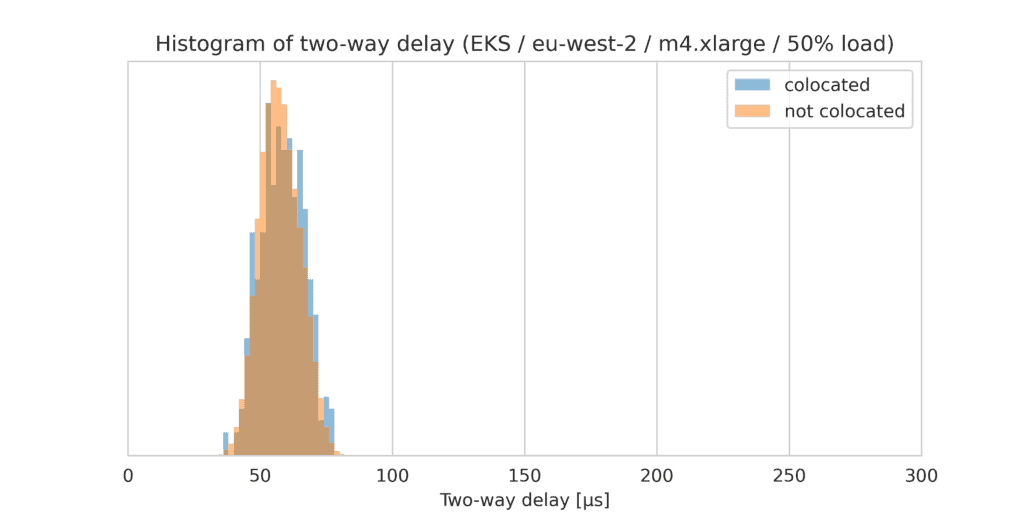

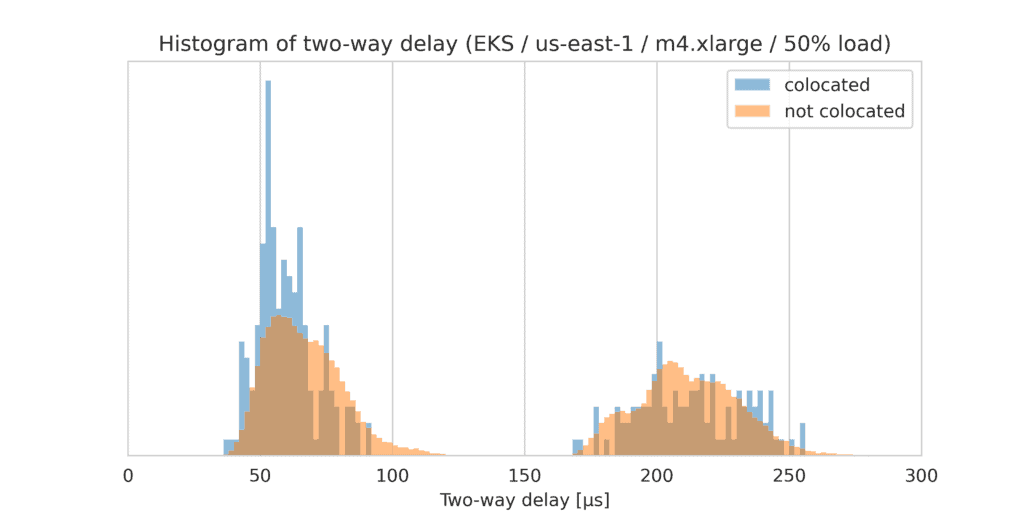

Amazon Web Services (EKS)

Our data demonstrates that in Amazon Web Services, the latency does not significantly differ between the colocated case and the non-colocated case.

This conclusion holds for all other EKS regions and instance types that we investigated: the distribution of two-way delays is not affected by colocation. The AWS virtual network implementation hides any potential latency benefit of colocation.

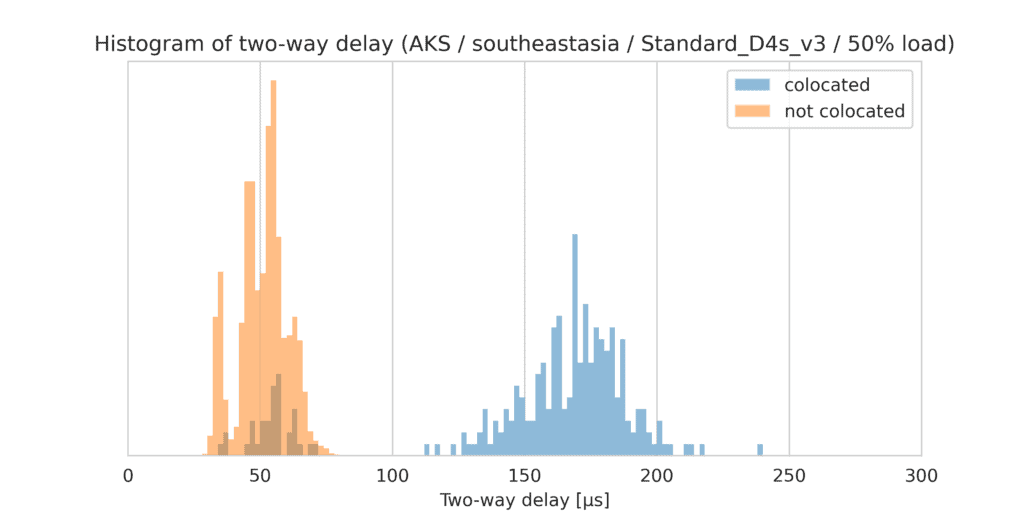

Microsoft Azure (AKS)

It turns out that in Azure, contrary to expectations, the communication latency between colocated VMs is actually larger than between non-colocated VMs. Consider the following histogram:

We observe the same counterintuitive behavior in all regions and all instance types that we investigated. The explanation lies in Azure’s accelerated networking, which is turned on by default for AKS. Packets destined for a VM on the same physical host take longer because they do not benefit from hardware acceleration. Instead, they are handled as exception packets in the software-based programmable virtual switch (VFP) on the physical host [Link].

Google Cloud Platform (GKE)

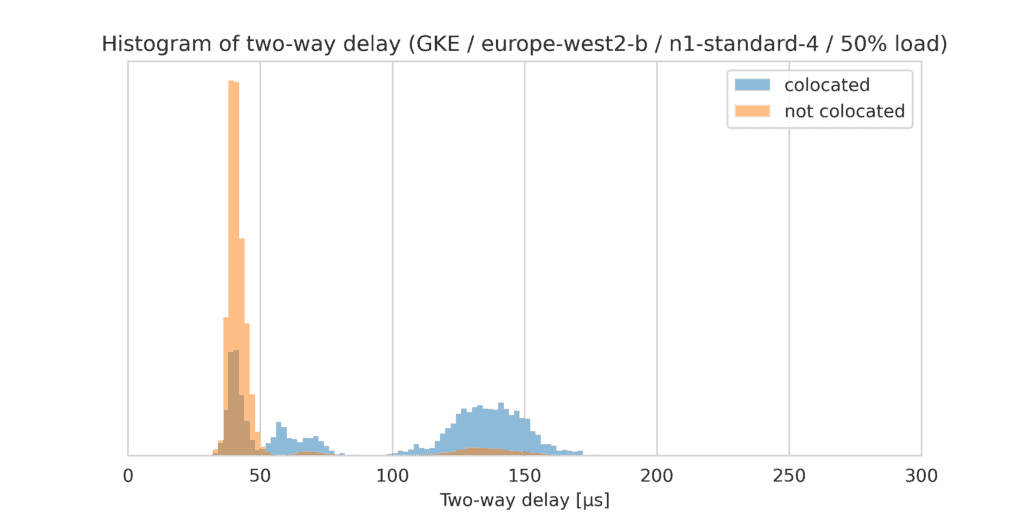

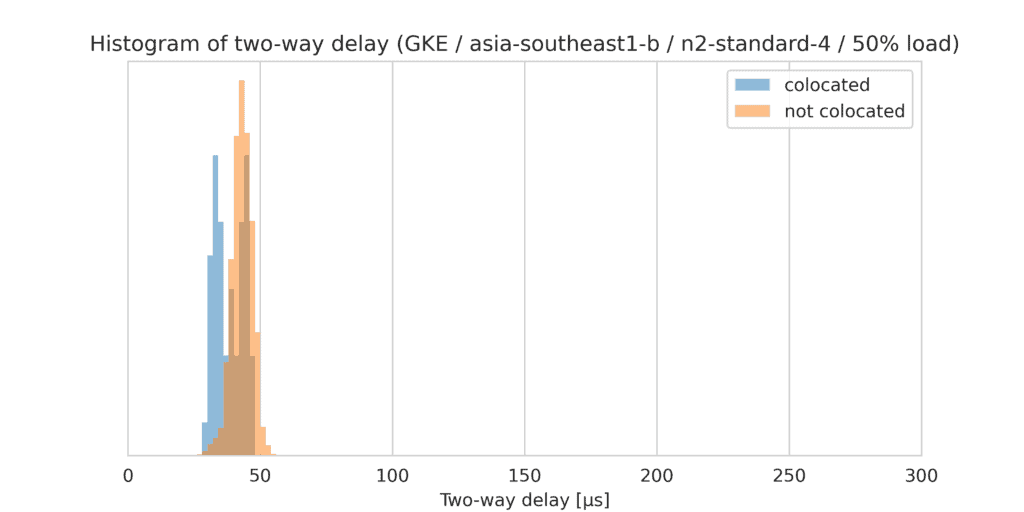

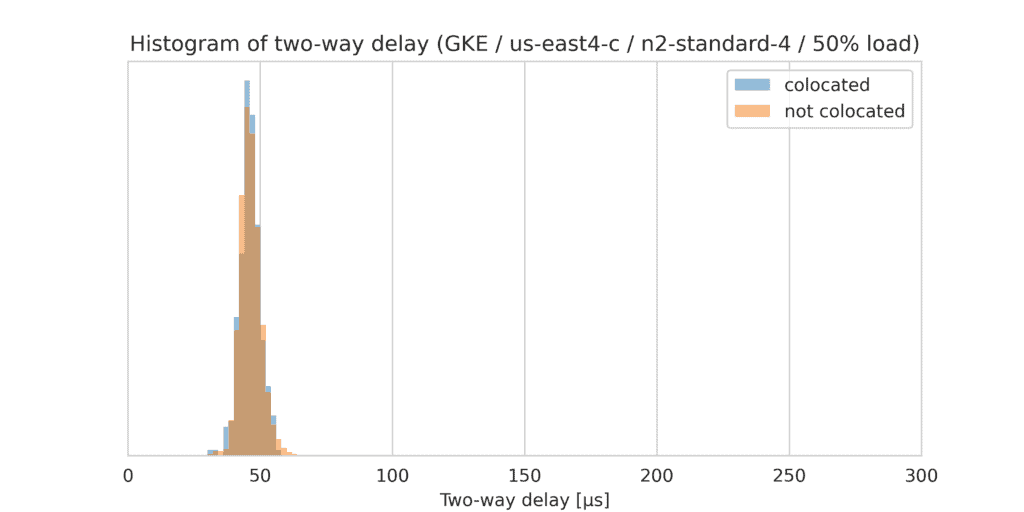

In Google Cloud Platform, the latency impact of colocation depends on a combination of region and VM type.

In some cases, links between colocated VMs have higher latency than links between non-colocated VMs. This pattern looks very similar to the latency behavior in Microsoft Azure, and may be caused by a similar form of hardware acceleration that does not apply to colocated VMs.

Network Packet Drops

During a Clockwork Latency Sensei audit, we measure the fraction of packets that are dropped under idle conditions and under moderate (50%) network load. Under idle conditions, typically very few packets are lost. Under moderate load, intermittent congestion causes packet drops at rates of up to several hundred packets per million (ppm), degrading network performance through retransmissions and shrinking transmission windows.
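As a quick illustration of the unit, the sketch below converts packet counters into a drop rate in ppm. The function name and the counter values are hypothetical and are not taken from the audit tooling.

```python
def drop_rate_ppm(packets_sent: int, packets_received: int) -> float:
    """Return the fraction of dropped packets, in packets per million."""
    if packets_sent <= 0:
        raise ValueError("packets_sent must be positive")
    if not 0 <= packets_received <= packets_sent:
        raise ValueError("packets_received must be between 0 and packets_sent")
    dropped = packets_sent - packets_received
    return dropped * 1_000_000 / packets_sent


# Made-up counters: 1,000 of 5,000,000 packets never arrived.
print(drop_rate_ppm(5_000_000, 4_999_000))
```

At this scale, a rate of a few hundred ppm corresponds to a few hundredths of a percent of all packets.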

Does colocation affect the rate of packet drops? For colocated VMs, packets do not pass through physical network hops and therefore encounter fewer places where they could be dropped. If drops occur mostly within the network (rather than at the edge of the network), then links between colocated VMs should exhibit a lower packet drop rate.

Across thousands of 50-node cluster instances, we observe that colocation does not have a significant effect on packet drop rate, as shown in the table below.

Packet drop rate

| | Links between non-colocated VMs | Links between colocated VMs |
| --- | --- | --- |
| AKS | 68 ppm | 60 ppm |
| EKS | 220 ppm | 213 ppm |
| GKE | 60 ppm | 62 ppm |

This indicates that practically all packet drops are caused by overflowing queues that traffic between colocated VMs and traffic between non-colocated VMs must traverse alike. In other words, most packet drops occur in the virtualization hypervisor, not within the network proper.

VM colocation is bad for business

The analysis in this article and the previous blog post clearly shows that VM colocation has a net negative effect on cloud networking performance: Colocated VMs achieve lower bandwidth, incur the same or higher latency (except in certain special cases in Google Cloud), and do not provide a benefit of lower packet drop rate.

For optimal cloud system performance, colocation should be avoided.

Clockwork Latency Sensei provides visibility into VM colocation. The Latency Sensei audit report indicates which VMs are colocated, and quantifies the performance impact of colocation. Thankfully, in the cloud, once a colocation problem is identified, it is easy to shut down the underperforming VMs and replace them with new, hopefully non-colocated VMs.