Guest post originally published on the Snapt blog by Dave Blakey

Kubernetes has been a revelation for companies working with containers. It makes container orchestration consistent, which can streamline development and make CI/CD processes more reliable. However, your success depends on more than developing containerized applications and integrating Kubernetes into your CI tooling. You still need to consider how you will deploy your containers into a production environment. This process can be just as challenging as introducing containers into your software architecture in the first place.

This guide will help you to deploy Kubernetes in production effectively and efficiently.

Use Small Base Images

We discussed this topic in our Best Practices for Building Kubernetes Applications, but it’s worth repeating here.

When deploying containers into any environment, build your containers on a base image and then run setup scripts to ensure the container operates the way you want. You can streamline your entire deployment process by keeping your first base image as small as possible.

Every application will need different setup scripts, but most of your applications will run in containers built on a few base images. It’s crucial to optimize your base images because a problem in one base image will affect every nested container you deploy.

If a base image is too big, it will consume more storage and bandwidth and take longer to pull and initialize, causing every nested container deployment to take longer. Even small delays add up when operating at scale and deploying apps in multiple environments several times a day.
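As a rough illustration of how those delays add up, the sketch below estimates the total time a fleet spends pulling an image each day. Every number in it (image sizes, bandwidth, node count, deployment frequency) is a made-up assumption, and it ignores layer caching, which reduces pull time in practice.

```python
# Rough estimate of time spent pulling a base image across a fleet.
# All figures are illustrative assumptions, not measurements.

def pull_seconds(image_mb: float, bandwidth_mb_per_s: float) -> float:
    """Time to download one image at a given effective bandwidth."""
    return image_mb / bandwidth_mb_per_s


def daily_pull_hours(image_mb: float, bandwidth_mb_per_s: float,
                     nodes: int, deploys_per_day: int) -> float:
    """Total pull time per day if every node pulls the image on every deploy."""
    total_seconds = pull_seconds(image_mb, bandwidth_mb_per_s) * nodes * deploys_per_day
    return total_seconds / 3600


# Hypothetical fleet: 200 nodes, 10 deployments a day, 50 MB/s per node.
large = daily_pull_hours(image_mb=900, bandwidth_mb_per_s=50, nodes=200, deploys_per_day=10)
small = daily_pull_hours(image_mb=50, bandwidth_mb_per_s=50, nodes=200, deploys_per_day=10)

print(f"large base image: {large:.1f} node-hours per day")
print(f"small base image: {small:.1f} node-hours per day")
```

The absolute values matter less than the ratio: under these assumptions, pull time scales linearly with image size, so trimming the base image pays off on every node and every deployment.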

Using small base images will help you deploy nested containers as fast as possible, which will help you scale out without facing big performance problems. This general efficiency saves some capacity for when you need to deploy apps that genuinely require a more complex setup.

One Process Per Container

Always keep things simple. In Kubernetes, that means running only one process per container.

Restricting containers to running only one process might sound like a recipe for a fragmented application, with each process needing to communicate with others across containers. However, the simplicity of this model makes it much easier to maintain apps in Kubernetes.

With this model, DevOps teams can fix, change, or remove a troublesome process or container without affecting the rest of the application. If you identify a security vulnerability in a particular process, you can isolate the affected container and ensure that the vulnerability does not impact other parts of your application.

Knowing that container telemetry applies to only one process makes monitoring and troubleshooting the underlying processes easier. This reduces the time needed to fix faulty processes and redeploy the affected containers.

Use “kubectl apply” For Rollbacks

When a failure occurs, you might need to roll back your deployment to a previous image. It’s important to roll back to the most recent stable image and not to one that’s too old or undesirable. However, managing and restoring specific backups for every version of your application can be difficult with many containers and frequent deployments.

Kubernetes can make this much easier for you. When you update a Deployment with the ‘kubectl apply’ command, Kubernetes records the new configuration and saves it as a revision. This simple method allows you to record every revision (up to a defined limit) and ensures you have all the information you need to roll back to a previous working version, for example with ‘kubectl rollout undo’.

Use Sidecars

A sidecar proxy is an application design pattern that abstracts certain features away from the main architecture to make tracking and maintenance easier. You might use sidecar proxies for inter-service communications, monitoring, and security. A sidecar proxy is attached to a parent application in the same way that a sidecar is attached to a motorcycle.

It’s important to understand what is going on with your containers. However, using a container to provide this information directly distracts from the container’s core purpose. Instead, use sidecar proxies to provide the information you need for effective container management and keep your containers focused on the processes you want them to do.

The only thing you don’t want to use a sidecar proxy for is any form of bootstrapping. When a container needs more resources, you might be tempted to use the extra resources available on a sidecar proxy to help resolve the issue. While this might fix the immediate problem, services should be able to run in their pre-defined containers. It’s best to reserve your sidecars to help identify and resolve performance issues, and not to sacrifice them for a quick fix that will not stop the same problem from occurring again.
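To make the pattern concrete, here is a sketch of a Pod manifest with one application container and one sidecar proxy container, written as a Python dictionary. The container names, images, and resource figures are hypothetical. Each container declares its own resource requests and limits, which reflects the point above: the application should fit within its own allocation rather than lean on the sidecar's.

```python
import json

# Hypothetical Pod: one application container plus one sidecar proxy.
# Names, images, and resource values are placeholders for illustration.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "orders", "labels": {"app": "orders"}},
    "spec": {
        "containers": [
            {
                # The main container runs only the application process.
                "name": "app",
                "image": "registry.example.com/orders:2.3.0",
                "ports": [{"containerPort": 8080}],
                "resources": {
                    "requests": {"cpu": "250m", "memory": "256Mi"},
                    "limits": {"cpu": "500m", "memory": "512Mi"},
                },
            },
            {
                # The sidecar handles traffic proxying and telemetry so the
                # application container does not have to.
                "name": "proxy",
                "image": "registry.example.com/sidecar-proxy:1.0.0",
                "ports": [{"containerPort": 9090}],
                "resources": {
                    "requests": {"cpu": "50m", "memory": "64Mi"},
                    "limits": {"cpu": "100m", "memory": "128Mi"},
                },
            },
        ]
    },
}

container_names = [c["name"] for c in pod["spec"]["containers"]]
print(json.dumps(pod, indent=2))
```

Both containers share the Pod's network namespace, so the sidecar can reach the application on localhost without extra routing.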

Use Readiness and Liveness Probes

Probes are checks built into Kubernetes that help the platform know the state of each container and when it is ready to serve traffic.

- Use readiness probes to identify when a container is ready to start accepting traffic.

- Use liveness probes to know when a container needs to be restarted, for example, when a deadlock or specific performance issue occurs.

While teams can build their own deployment tools to monitor and manage these issues, using the native probes helps Kubernetes respond to issues quickly and will ensure that containers are brought into service or restarted as soon as possible.
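The sketch below shows what both probes might look like on a single container spec, written as a Python dictionary. The `/ready` and `/healthz` paths, the port, and the timing values are assumptions for illustration; your application would need to expose equivalent endpoints.

```python
import json

# Hypothetical container spec with both probe types configured.
# Endpoint paths, port, and timings are illustrative assumptions.
container = {
    "name": "api",
    "image": "registry.example.com/api:3.1.0",
    "ports": [{"containerPort": 8080}],
    # Readiness: the container receives traffic only while this check passes.
    "readinessProbe": {
        "httpGet": {"path": "/ready", "port": 8080},
        "initialDelaySeconds": 5,
        "periodSeconds": 10,
    },
    # Liveness: the container is restarted after repeated failures of this check.
    "livenessProbe": {
        "httpGet": {"path": "/healthz", "port": 8080},
        "initialDelaySeconds": 15,
        "periodSeconds": 20,
        "failureThreshold": 3,
    },
}

print(json.dumps(container, indent=2))
```

Keeping the two endpoints separate lets a container report "alive but not ready" during startup or while a dependency is unavailable, so Kubernetes withholds traffic without restarting it.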

Use Relevant Tags (And Don’t Use The “Latest” Tag)

You can use tags to ensure that your containers can access the correct versions of your images. This is especially useful for version control and rolling back to previous versions.

A common mistake is to use the “latest” tag to pull the latest version of a particular image and reduce the scripting effort in the deployment process. However, this makes it hard to know and track which version is actually deployed. The “latest” tag doesn’t always point to the version you think is the most recent. Once you have deployed using the “latest” tag, you will find it more difficult to roll back to an earlier version.

Using explicit version tags helps ensure you always deploy the correct version and lets your teams control rollbacks by using tags for previous known versions. This is well worth the extra scripting effort.

Use Ingress Load Balancing

Kubernetes offers a few different ways to manage how your containers are made available publicly: NodePort services, LoadBalancer services, and Ingress. Using the correct method is key to your application’s operation, security, and load balancing.

- A NodePort service is the most primitive way to get external traffic directly to your service. NodePort, as the name implies, opens a specific port on all the Nodes (the VMs), and forwards all traffic to your service.

- A LoadBalancer service is the standard way to expose a service to the public Internet. LoadBalancer creates a Network Load Balancer that presents a single IP address that forwards all traffic to your service.

- Lastly, there is the Ingress option. Unlike NodePort and LoadBalancer, Ingress is NOT a type of service. Instead, Ingress sits in front of multiple services and acts as a “smart router” or entry point into your Kubernetes cluster.

Using an Ingress Controller creates an HTTP/S (Layer 7) Load Balancer that will let you do both path-based and subdomain-based routing to backend services. For example, you can send everything on foo.yourdomain.com to the foo service and everything under the yourdomain.com/bar/ path to the bar service.

Setting up an ingress controller is more complex than the other methods, but it gives you far more control over the network traffic reaching each service in your cluster.
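The routing example above could be expressed as an Ingress resource along these lines, sketched here as a Python dictionary. The resource name and the service port (80) are assumptions, and an ingress controller must already be installed in the cluster for the rules to take effect.

```python
import json


def backend(service: str, port: int) -> dict:
    """Build the backend section that points a path at a Service."""
    return {"service": {"name": service, "port": {"number": port}}}


# Hypothetical Ingress: resource name and port 80 are assumptions.
ingress = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "Ingress",
    "metadata": {"name": "example-routes"},
    "spec": {
        "rules": [
            {
                # Subdomain-based routing: everything on foo.yourdomain.com
                # goes to the foo service.
                "host": "foo.yourdomain.com",
                "http": {
                    "paths": [
                        {"path": "/", "pathType": "Prefix", "backend": backend("foo", 80)}
                    ]
                },
            },
            {
                # Path-based routing: everything under yourdomain.com/bar
                # goes to the bar service.
                "host": "yourdomain.com",
                "http": {
                    "paths": [
                        {"path": "/bar", "pathType": "Prefix", "backend": backend("bar", 80)}
                    ]
                },
            },
        ]
    },
}

print(json.dumps(ingress, indent=2))
```

A single Ingress like this can front many services behind one external load balancer, which is usually cheaper and easier to manage than one LoadBalancer service per application.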

Use Static IPs And Route External Services To Internal Services

With containers spinning up and spinning down, working with IPs can be complex. However, you should always try to configure containers to use specific fixed IP addresses rather than using Kubernetes dynamic addresses.

Kubernetes is pretty good at managing dynamic IP addresses, and for routing, you can always identify containers from their names and other identifiers. But it is far easier to use static IP addresses for routing traffic, especially when working with external services or through your respective load balancers.
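One common way to route to an external service at a fixed address is a Service without a selector, paired with a manually maintained Endpoints object of the same name. The sketch below shows that pattern as Python dictionaries. The service name, port, and IP address (taken from a range reserved for documentation) are assumptions, and this is only one of several possible approaches.

```python
import json

# Hypothetical external database reachable at a fixed IP address.
# 192.0.2.10 is from a range reserved for documentation examples.
EXTERNAL_IP = "192.0.2.10"
PORT = 5432

# A Service with no selector: Kubernetes will not manage its endpoints.
service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "external-db"},
    "spec": {"ports": [{"port": PORT, "targetPort": PORT}]},
}

# Endpoints with the same name map the Service to the fixed address.
endpoints = {
    "apiVersion": "v1",
    "kind": "Endpoints",
    "metadata": {"name": "external-db"},
    "subsets": [
        {"addresses": [{"ip": EXTERNAL_IP}], "ports": [{"port": PORT}]}
    ],
}

print(json.dumps([service, endpoints], indent=2))
```

Applications inside the cluster can then reach the external service by its Service name, while the fixed IP lives in one place and can be updated without touching application configuration.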

Use A Modern App Services Platform For Load Balancing And Security

Working with containers can be tricky, but with the proper configuration, you can easily manage your deployments across all your Kubernetes clusters.

There are a lot of tools that can help you on your journey to the perfect Kubernetes deployment. Kubernetes comes with a lot of great services built-in. However, you can benefit from using a modern app services platform such as Snapt Nova.

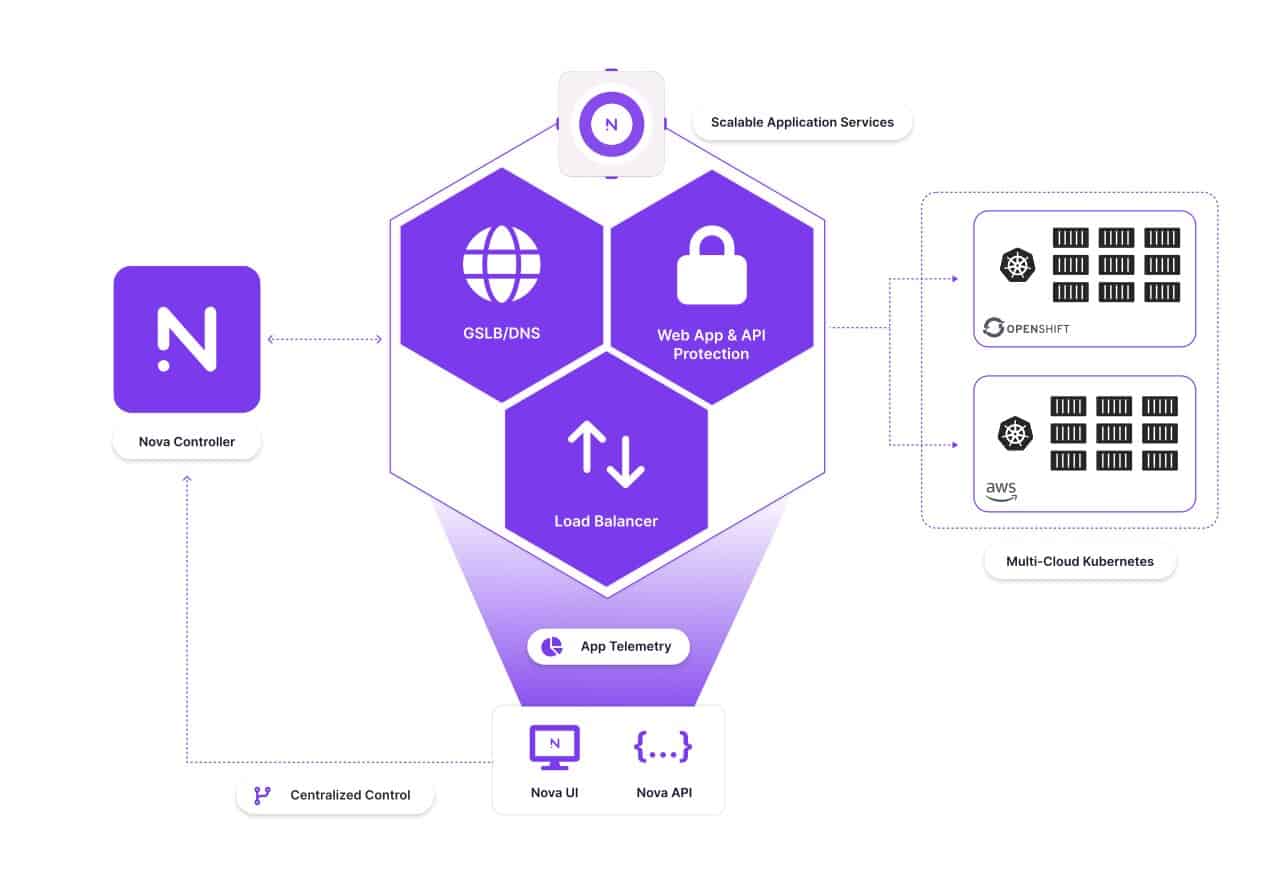

Nova provides load balancing and WAAP security on-demand to millions of nodes from a central controller and is designed for modern distributed applications. It helps organizations manage traffic flows, load, and security at ingress and between containers. Nova provides that extra layer of comfort and confidence in your Kubernetes deployment.

Many organizations run Kubernetes clusters across a variety of cloud providers as well as internal data centers. Tracking all of this activity centrally, without needing complex setups in each cluster, will save you a significant amount of time and help keep your applications highly available.

Nova is cloud-neutral and platform agnostic and is capable of providing load balancing and security at ingress in any combination of public and private clouds. This enables you to ensure consistent global policy and monitoring for containers, clouds, VMs, and monolithic apps in any location, all on one platform.