Community post by Yelin Liu, Technical Marketing at DaoCloud

On November 18, Kubernetes Community Days Chengdu 2022, initiated by CNCF, DaoCloud, Huawei Cloud, Sichuan Tianfu Bank, and OPPO, was successfully held. It brought together end users, contributors, and technical experts, who shared cloud native practices from multiple industries, popular open source projects, and community contribution experience. Nearly 2,000 people watched online or attended in person. Participants included not only cloud native developers and practitioners but also college students who had not yet graduated, and they took an active part in the Q&A session and the round-table discussion. Cloud native is spreading ever more widely and deeply. Let’s review the highlights of the talks.

01 Opening remarks

Wild History of Cloud Native

At the opening of the event, host Paco Xu, a reviewer of kubeadm and SIG Node in the Kubernetes community and an architect and open source team lead at DaoCloud, extended a warm welcome to everyone. He noted that KCD Chengdu had been postponed repeatedly because of COVID-19, but that everyone had finally been able to meet. He greeted the audience with good wishes and invited them to set out on a cloud native journey together.

Peter Pan, Vice President of R&D at DaoCloud, was invited to give the opening speech. He joked that, to warm up for the talks that followed, he would first present a “wild history” of cloud native.

- Cloud Wild: Cloud native technology has been around for a long time. In the early days, LXC, microservices, distributed systems, and other technologies developed independently. This period can be called “cloud wild,” meaning that everything at the time grew on its own.

- Cloud second birth based on Kubernetes: When Kubernetes emerged, these technologies were assembled together, the cloud was born anew, and cloud native began to show its edge. Kubernetes also gave a new definition to the operating system. For example, the most important interface of a traditional operating system, the human-machine interface, has evolved into an application interface in the cloud native era, because the first-class citizen is now the application and the data it generates.

- Cloud Overgrown: Then the explosion of open source produced many locally optimal solutions, along with the challenge of selecting and combining them. This was the era of cloud clusters (in small print: cloud survival).

- Cloud Native: What enterprise users need, however, is a globally optimal solution. It can be understood as the greatest common divisor of capabilities such as multi-cloud orchestration, Xinchuang heterogeneity, application delivery, observability, cloud-edge collaboration, microservice governance, application store, and data services. Finding this globally optimal solution for the digital world, and truly ushering in the cloud native era, is the goal that all cloud native practitioners are tirelessly pursuing.

02 Popular open source projects

Merbridge

On the journey to find the globally optimal solution in the digital world, which technologies are hot at the micro level? The first speaker was Kebe Liu from DaoCloud, a member of the Istio Steering Committee and the initiator of Merbridge, who has focused on cloud native, Istio, eBPF, and related areas in recent years. Merbridge originated from community discussions: many people hoped for a project that could use eBPF to accelerate service meshes. Merbridge delivers this, and a single command is enough to enable eBPF acceleration for a service mesh. The project’s goal is to focus on service mesh acceleration.

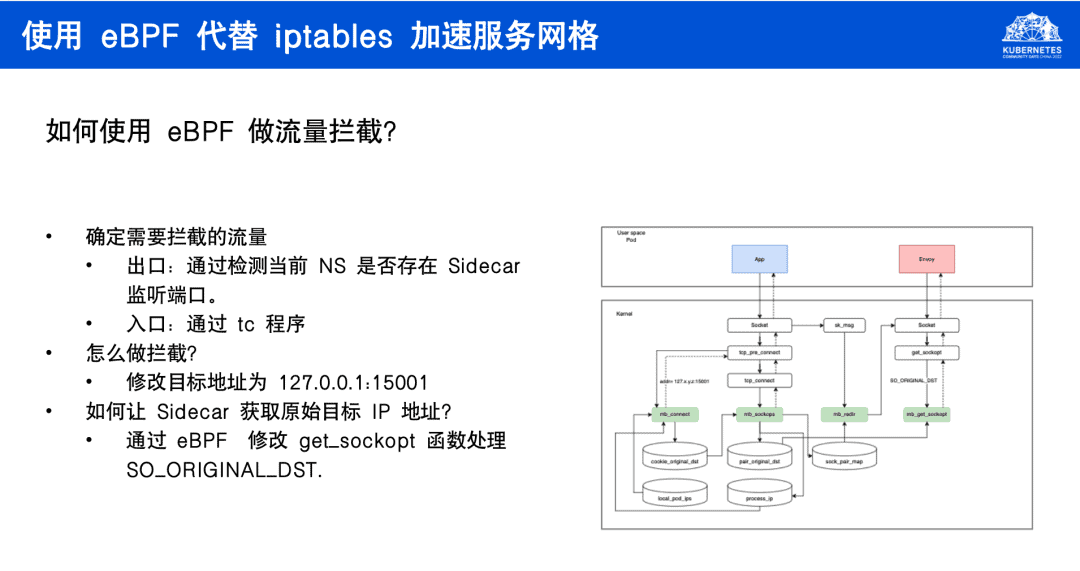

Merbridge uses eBPF to completely replace iptables and accelerate applications, without modifying any service mesh code, which makes it easy to adopt. Kebe explained how Merbridge uses eBPF for traffic interception and how it solves the four-tuple conflict problem, and introduced the seven capabilities Merbridge currently implements:

- eBPF can completely replace iptables in service mesh scenarios

- Network acceleration

- No intrusion and no modification

- Support for mainstream service meshes such as Istio, Linkerd, and Kuma

- Full support for all capabilities of Istio and Kuma, including traffic filtering rules

- A 12% reduction in network latency for the Kuma mesh

- Ambient Mesh mode support (alpha), unaffected by whichever CNI is in use

The next plans include Ambient Mesh support (alpha), adapting to lower eBPF version requirements (planned), inter-node acceleration, and dual-stack support. Finally, he demonstrated how to use Merbridge and the acceleration it achieves in the corresponding scenarios, and invited developers to install and try it, join the community, and take part in discussions and contributions.

Resources:

- Website: https://merbridge.io/zh/

- Project link: https://github.com/merbridge/merbridge

- Slack: https://join.slack.com/t/merbridge/shared_invite/zt-11uc3z0w7-DMyv42eQ6s5YUxO5mZ5hwQ

03 Middleware in cloud native

OPPO middleware and database practice

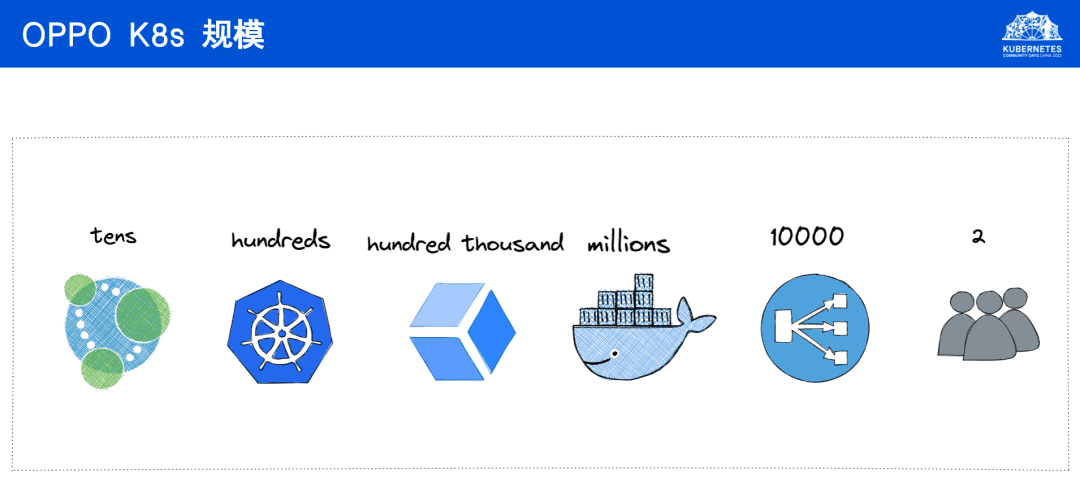

Next, two speakers from OPPO presented “OPPO Middleware and Database Practice in the Cloud Native Era.” OPPO began to practice cloud native technology in 2017. Because cloud native technology demands a high level of expertise, progress was slow until more specialists joined in the second half of 2019. After that, it took only half a year to migrate OPPO services such as middleware and databases to Kubernetes. Today, the Kubernetes clusters behind OPPO’s cloud business are spread across more than ten countries, with dozens of data centers and hundreds of Kubernetes clusters, involving hundreds of thousands of physical nodes and millions of containers.

To better fit its business needs and O&M management, OPPO has developed its own cluster management model and made many customizations to Kubernetes. Yin Tongqiang, a senior engineer at OPPO, shared the technical principles behind these Kubernetes customizations and the results of making middleware cloud native. Chen Wenyu, also a senior engineer at OPPO, then shared OPPO’s exploration of cloud native databases. He mentioned that OPPO’s research on cloud native databases began last year and now supports nearly 10,000 clusters, hundreds of thousands of container nodes, nearly 100 million QPS, and dozens of petabytes of storage. He also described in detail OPPO’s self-developed distributed computing engine for relational databases, built on the cloud native database, and an audit function adapted to OPPO’s business needs.

04 Exploring multi-cluster, multi-active infrastructure

Practice in banking

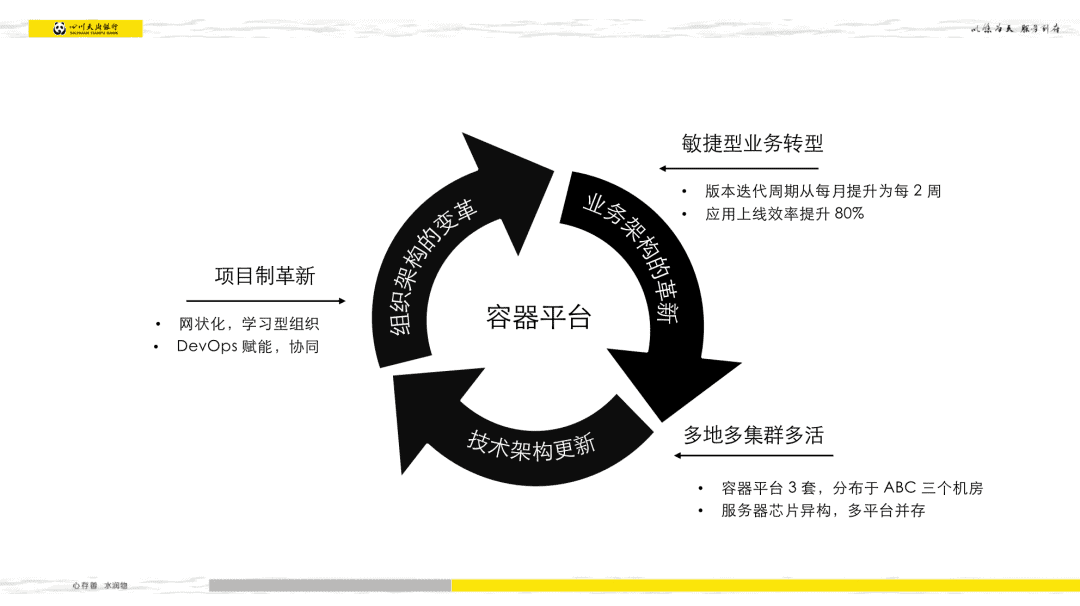

Ma Li, a department manager in the Science and Technology Operations Department of Sichuan Tianfu Bank, gave a talk titled “Exploring Multi-Region, Multi-Cluster, and Multi-Active Infrastructure Based on a Cloud Native Container Platform.” She explained that as early as 2014, Sichuan Tianfu Bank was following emerging technologies and running small-scale trials based on Docker. In 2019, the bank began working with DaoCloud, launched its container cloud project, and built a Kubernetes-based composite middle platform that integrates microservice governance, DevOps, and security. The aim is a new IT architecture platform that supports the rapid development of Internet financial services for enterprises, facilitates agile transformation, reduces costs, increases efficiency, and improves business continuity.

The introduction of cloud native technology changes not only the technical architecture but also the organizational and business architectures. She noted that microservice governance and DevOps have improved collaboration efficiency and formed an “integrated system of development, operation and maintenance.” This has steadily pushed the business toward agility, greatly increased the speed of application version iteration and the efficiency of going live, and made it possible to respond quickly to market changes.

Ma Li then introduced the bank’s cloud-based Xinchuang work, which applies cloud native technology to keep multiple platforms loosely coupled, taking into account both the ease of maintaining the underlying equipment and the stability and multi-active deployment of upper-layer applications. Finally, she shared two specific technical cases covering the bank’s exploration and practice in multi-center application scheduling and in building persistent storage for containers.

05 Powering the safe implementation of cloud native edge computing scenarios

Focus on security

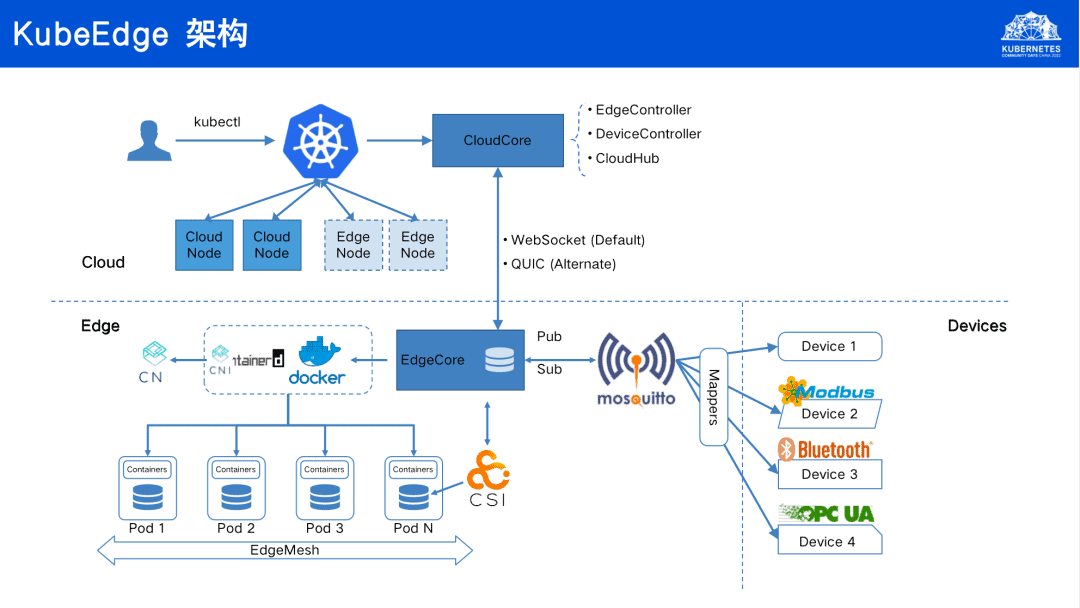

Security is an eternal topic, and it matters just as much for an open source project as for a product. In edge computing, the complexity and diversity of networks and devices make the security requirements even more stringent. Complete threat modeling and security protection analysis are therefore the basis for keeping a project secure. Guohui Lin, an edge computing expert at Huawei Cloud and a core developer of KubeEdge, gave a talk titled “Helping Cloud Native Edge Computing Scenarios Safely Land.” He introduced the forms, definition, and key challenges of edge computing, and pointed out that cloud native offers four core advantages in edge scenarios: decoupling software development to improve flexibility and maintainability, supporting multi-cloud to avoid vendor lock-in, avoiding intrusive customization, and improving work efficiency and resource utilization. This is how KubeEdge, the first cloud native edge computing open source project, came into being.

He then introduced KubeEdge’s architecture, core concepts, cloud-edge-device collaborative management, and other functions. KubeEdge’s usage scenarios are moving toward larger-scale edges, and security has always received continuous attention and investment from the community. He explained that KubeEdge is the first Asian project to complete the CNCF SLSA assessment and the first project to complete the CNCF fuzzing test plan. It has established a complete vulnerability management mechanism and threat models covering internal and external attacks and supply chain security. Finally, he shared KubeEdge use cases in satellite computing, industrial interconnection, high-speed transportation, and automobile cloud-edge collaboration.

06 Introduction and Practice of Distributed Application Runtime

Yang Dingrui, a Go engineer at Sangfor, and Zhu Yongguang, a senior technical consultant at Microsoft, jointly presented “Introduction and Practice of Distributed Application Runtime.” Distributed Application Runtime (Dapr) is a portable, event-driven runtime for building distributed applications across cloud and edge. Dapr’s goal is to provide best-practice building blocks, extensible and pluggable components, and a consistent, portable, open API that works with any language or framework. It adopts popular standards, supports any cloud and edge, stays vendor-neutral and community-driven, and helps enterprise developers build distributed applications more efficiently. Yang Dingrui introduced Dapr’s microservice building blocks, its compatibility with any language, framework, cloud, or edge infrastructure, and features such as service invocation, state management, observability, and distributed tracing. Zhu Yongguang then used real cases to show how Dapr is used in self-hosted Docker mode and on top of Kubernetes. He pointed out that synchronous calls are what most people use when building microservices with Dapr, but asynchronous calls are just as critical.
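As a rough illustration of the difference between synchronous and asynchronous calls, the sketch below builds the sidecar URLs that Dapr’s HTTP API exposes for service invocation (request/response) and for publishing to a topic (fire-and-forget). It assumes the sidecar listens on Dapr’s documented default HTTP port, 3500. The app ID `order-service`, the method `orders`, the pub/sub component `pubsub`, and the topic `order-created` are hypothetical names chosen for this example and do not come from the talk.

```python
"""Sketch: synchronous vs. asynchronous calls through a Dapr sidecar (HTTP API v1.0)."""

DAPR_HTTP_PORT = 3500  # Dapr's documented default; an assumption, your setup may differ


def invoke_url(app_id: str, method: str, port: int = DAPR_HTTP_PORT) -> str:
    """URL for service invocation: the caller waits for the target app's response."""
    return f"http://localhost:{port}/v1.0/invoke/{app_id}/method/{method}"


def publish_url(pubsub_name: str, topic: str, port: int = DAPR_HTTP_PORT) -> str:
    """URL for pub/sub publishing: subscribers process the message later."""
    return f"http://localhost:{port}/v1.0/publish/{pubsub_name}/{topic}"


if __name__ == "__main__":
    # Hypothetical names, for illustration only.
    print(invoke_url("order-service", "orders"))
    print(publish_url("pubsub", "order-created"))
```

An HTTP client would send its request to the first URL and block until the other service answers, whereas a POST to the second URL only hands the message to the pub/sub component, so the caller does not depend on the consumer being available at that moment.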

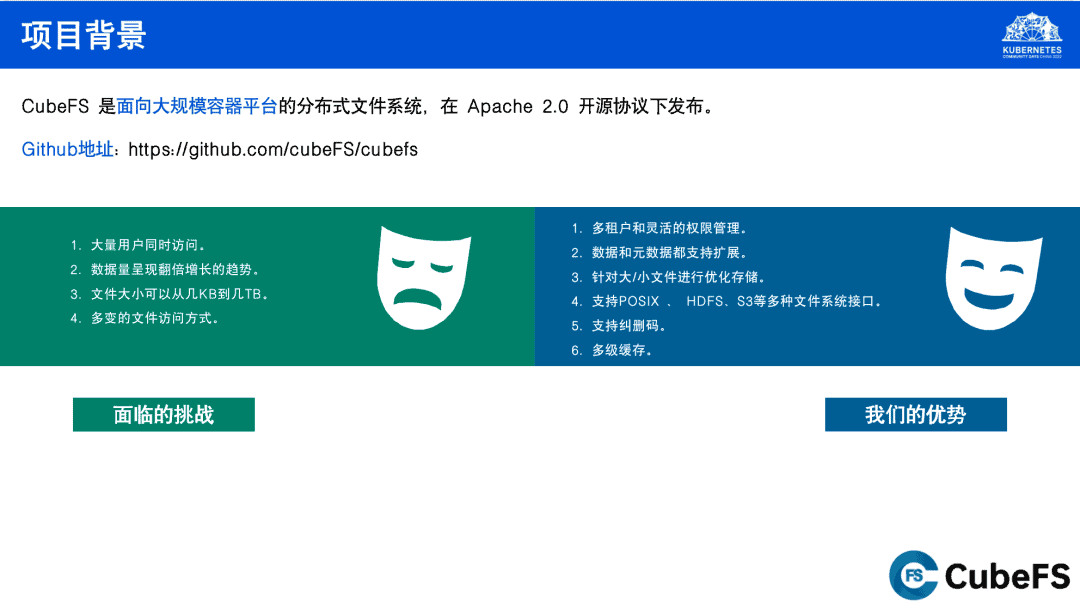

07 CubeFS on the machine learning platform

As cloud native adoption deepens in enterprises, cloud architecture has changed completely, and more and more enterprises are moving their core business applications into cloud native container environments. Faced with the massive data these applications generate, distributed storage, one of the core infrastructures of a container environment, is meeting unprecedented challenges. He Chi, a senior backend engineer at OPPO, gave a talk titled “Technical Practice of Cloud Native Storage CubeFS in Machine Learning Platform,” introducing CubeFS, a distributed file system for large-scale container platforms that OPPO open sourced in 2019. CubeFS offers multi-tenancy and flexible permission management, supports scaling of data and metadata, erasure coding, and multi-level caching, can optimize storage for both large and small files, and works with file system interfaces such as POSIX, HDFS, and S3. He Chi covered the architecture, functional modules, and applicable scenarios of CubeFS. Using machine learning as a case study, he presented CubeFS’s cache acceleration scheme, which can accelerate performance on public cloud GPUs by 80%-360% depending on the circumstances. On the ecosystem side, CubeFS has been integrated with projects such as Kubernetes, Prometheus, Vitess, MySQL, and Helm, and has been applied in business scenarios at JD.com, Shell, and NetEase.

Resources:

- Project Address: https://github.com/cubeFS/cubefs

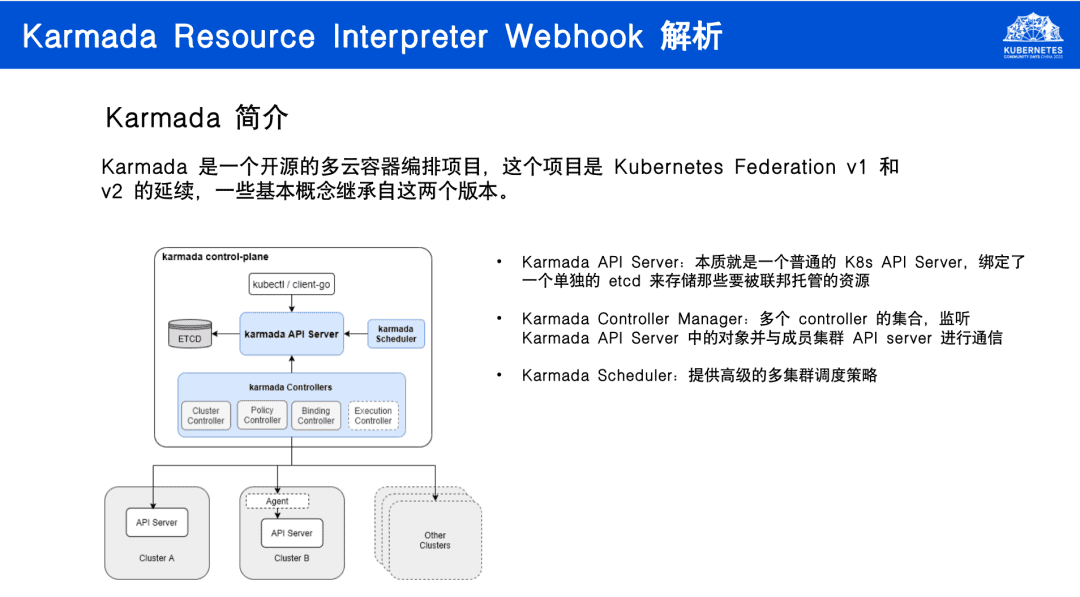

08 Karmada Resource Interpreter Webhook Analysis

As an open source multi-cloud orchestration project, Karmada is being applied to multi-cloud and hybrid cloud scenarios by more and more enterprises. Xinzhao Xu, a senior software engineer at QingCloud, gave a talk titled “Analysis of Karmada Resource Interpreter Webhook,” covering a new extensibility feature in Karmada: the resource interpreter. With the resource interpreter, Karmada can now fully support the distribution of custom resources. Using “creating an nginx application” as an example, he pointed out that when replicas need to be scheduled, Karmada cannot correctly modify the replica count of a custom resource (CRD) that behaves like a Deployment. Karmada therefore introduced the Resource Interpreter Webhook, which provides complete custom resource distribution by intervening in the stages from ResourceTemplate to ResourceBinding to Work to Resource. He then introduced the structure of the Resource Interpreter Webhook, including the InterpretReplica, ReviseReplica, Retain, and AggregateStatus hooks, and called on developers interested in Karmada to join the community to discuss, use, and contribute.
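To make the replica problem concrete, here is a minimal Python sketch of the job that the InterpretReplica and ReviseReplica hooks perform. It is not Karmada’s actual API (real interpreters are served as webhooks). The custom resource kind `Workload` and its field `spec.size` are hypothetical, standing in for a CRD that keeps its replica count somewhere Karmada cannot find on its own, and the two-cluster split is an assumed scheduling result.

```python
import copy

# Hypothetical custom resource: behaves like a Deployment, but keeps its
# replica count in spec.size rather than spec.replicas.
workload = {
    "apiVersion": "example.io/v1alpha1",
    "kind": "Workload",
    "metadata": {"name": "nginx"},
    "spec": {"size": 6, "image": "nginx"},
}


def interpret_replica(obj: dict) -> int:
    """Role of InterpretReplica: report how many replicas the resource declares."""
    return int(obj["spec"]["size"])


def revise_replica(obj: dict, replicas: int) -> dict:
    """Role of ReviseReplica: return a copy with the replica count set for one cluster."""
    revised = copy.deepcopy(obj)
    revised["spec"]["size"] = replicas
    return revised


if __name__ == "__main__":
    total = interpret_replica(workload)
    # Assumed scheduling result, for illustration: split replicas over two clusters.
    assignment = {"member1": 4, "member2": total - 4}
    for cluster, count in assignment.items():
        print(cluster, revise_replica(workload, count)["spec"])
```

Without such hooks, an orchestrator that only understands built-in fields has no way to know that `spec.size` is the value to read and rewrite per cluster, which is the gap the talk described.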

Resources:

09 Group Discussion

Challenges in the cloud-native era

Five speakers from DaoCloud, Huawei, OPPO, and Sangfor held a panel discussion on the theme “What challenges does cloud native bring to you and your field” and answered questions from participants. Many thanks to all the speakers for their wonderful talks. Participants said they had learned a lot, and we look forward to seeing everyone next time.

– End –

Videos of the presentations can be found here: https://space.bilibili.com/1274679632/channel/seriesdetail?sid=2818322