Guest post originally published on ByteDance’s blog by Jun Zhang

KubeGateway is a seven-layer load balancer that ByteDance built specifically for the traffic characteristics of kube-apiserver. It completely solves the problem of kube-apiserver load imbalance and, for the first time in the community, provides complete governance of kube-apiserver requests, including request routing, traffic splitting, rate limiting and degradation, which significantly improves the availability of Kubernetes clusters.

Project address: https://github.com/kubewharf/kubegateway

Background

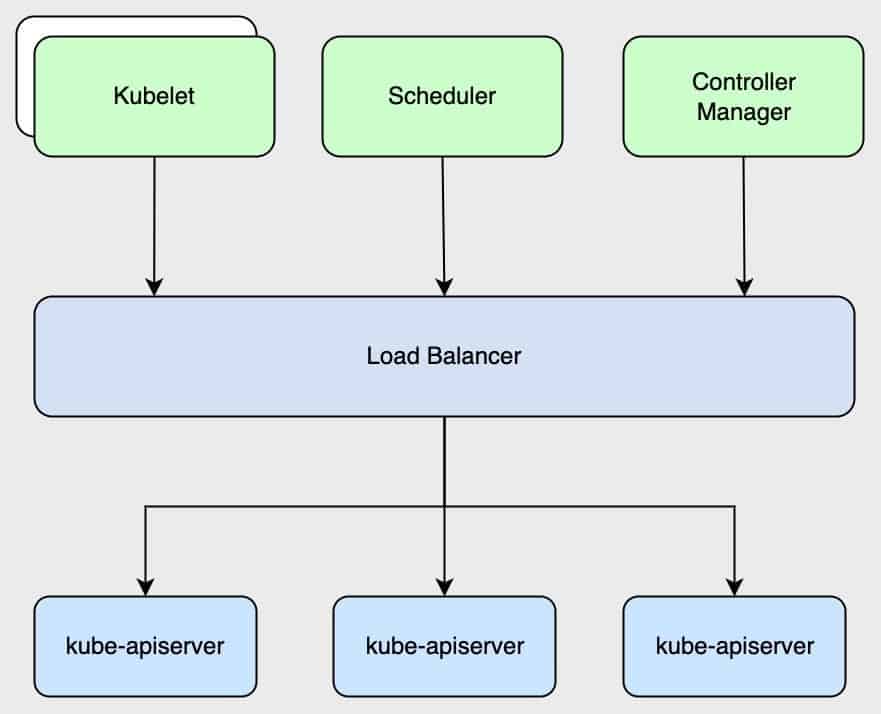

In a Kubernetes cluster, kube-apiserver is the entry point of the entire cluster: any user or program that creates, deletes or modifies resources must communicate with it. The availability of the whole cluster therefore depends on the availability of kube-apiserver. Because kube-apiserver is essentially a stateless server, high availability is usually achieved by deploying multiple kube-apiserver instances behind a load balancer (hereinafter LB).

To keep the cluster secure, kube-apiserver authenticates and authorizes API requests: authentication identifies the user, and authorization decides whether that user may access the requested resource. Kubernetes supports multiple authentication strategies, such as Bootstrap Token, Service Account Token, OpenID Connect Token and mTLS authentication.

Currently, mTLS is the most commonly used authentication strategy. It requires the client certificate to reach kube-apiserver intact through the LB, but a traditional seven-layer LB cannot do this: it terminates the client's TLS connection, so the certificate is dropped during forwarding and authentication at kube-apiserver fails.

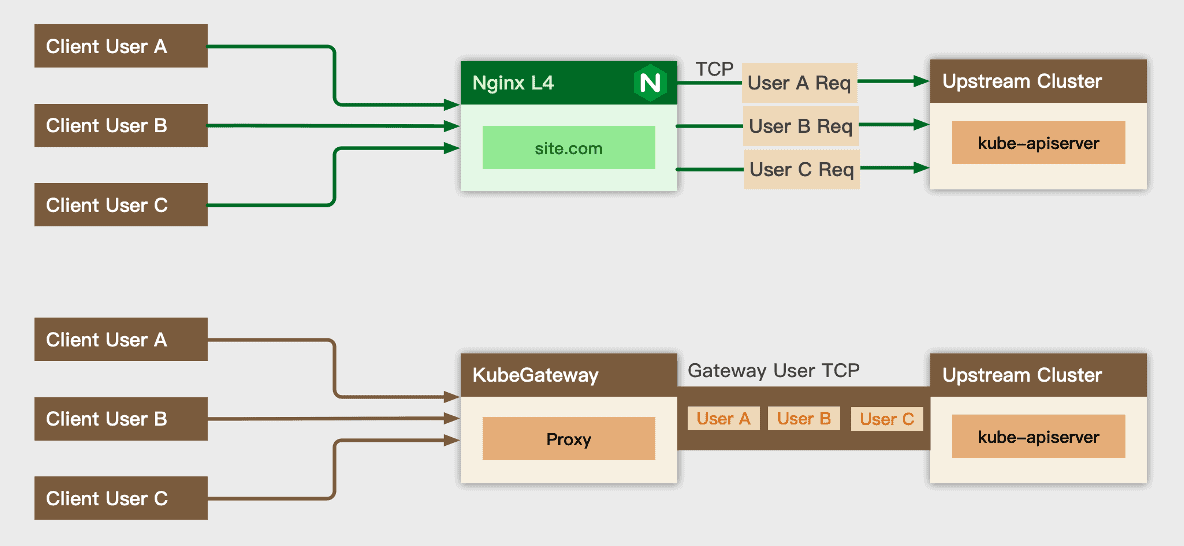

Therefore, kube-apiserver load balancing is generally a four-layer solution, such as LVS, a public cloud SLB, or the four-layer load balancing of nginx/haproxy.

A four-layer load balancer works at layer 4 of the OSI model (the transport layer) and forwards traffic using NAT.

A seven-layer load balancer works at layer 7 of the OSI model (the application layer) and generally forwards requests based on application-level information such as the request URL.

However, using a four-layer LB raises additional problems:

- Load imbalance: kube-apiserver and its clients communicate over HTTP/2, so many requests reuse the same underlying TCP connection, which stays open for a long time. When a kube-apiserver instance restarts, its clients reconnect to the other instances, and the instance that comes back later receives only a small share of requests for a long period. In extreme cases, the more heavily loaded instances run out of memory (OOM), which can even cause an avalanche.

- Lack of flexibility in request governance: a four-layer LB works at the transport layer and only delivers packets; it cannot see application-layer HTTP information, so it lacks the “flexibility” and “intelligence” that a seven-layer LB offers for request governance. It cannot apply load balancing and routing policies based on request content, such as the verb or URL, nor can it rate-limit individual requests.

There have been related efforts in the community to address these problems, but none of them solves them completely:

| Method | Description | Limitation |
| --- | --- | --- |
| GOAWAY chance support in kube-apiserver | With a small probability (say 1/1000), kube-apiserver sends GOAWAY to the client, closing the connection and making the client reconnect through the load balancer. | Alleviates load imbalance to some extent, but convergence is slow and it forces client reconnections. It does not address the inflexibility of request governance. |
| kube-apiserver and LB grouping | kube-apiserver and LB instances are split into groups served under different priority policies, giving services differentiated SLO guarantees. | Multiple sets of LB and kube-apiserver must be maintained, which increases operation and maintenance costs. |

With the development of cloud-native technology, more than 95% of ByteDance's business now runs on Kubernetes clusters, which places higher demands on availability. In production, we have also encountered many incidents caused by kube-apiserver load imbalance or a lack of request governance capabilities. To address these problems, we developed KubeGateway, a seven-layer load balancer customized specifically for kube-apiserver traffic characteristics.

Architecture Design

KubeGateway is a seven-layer load balancer that forwards kube-apiserver requests. It has the following characteristics:

- Completely transparent to clients: clients can access kube-apiserver through KubeGateway without any modification.

- Multi-cluster proxying: KubeGateway can proxy requests for multiple K8s clusters at the same time, distinguishing clusters by domain name or virtual address.

- Request-level load balancing: balancing each request, rather than each connection, achieves fast and efficient load balancing and completely solves the problem of kube-apiserver load imbalance.

- Flexible routing policies: KubeGateway routes requests based on request information, including but not limited to resource, verb, user, namespace and group/version. This provides the basic capability for kube-apiserver grouping at low operation and maintenance cost and improves cluster stability.

- Cloud-native configuration management: configuration is provided in the form of standard K8s APIs and supports hot updates.

- Rate limiting, degradation, dynamic service discovery, graceful shutdown, upstream anomaly detection and other general gateway capabilities.
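Distinguishing clusters by domain name or virtual address boils down to a lookup on the host the client dialed. A minimal sketch, assuming a hypothetical in-memory table (clusterForHost is our name) standing in for KubeGateway's real configuration:

```go
package main

import (
	"fmt"
	"net"
	"strings"
)

// clusterForHost maps the request Host (a per-cluster domain name or
// virtual IP, optionally with a port) to an upstream cluster name.
func clusterForHost(host string, clusters map[string]string) (string, bool) {
	if h, _, err := net.SplitHostPort(host); err == nil {
		host = h // drop the port, e.g. ":6443"
	}
	name, ok := clusters[strings.ToLower(host)]
	return name, ok
}

func main() {
	// Hypothetical mapping; real entries would come from configuration.
	clusters := map[string]string{
		"cluster-a.example.com": "cluster-a",
		"10.0.0.100":            "cluster-b", // virtual address
	}
	fmt.Println(clusterForHost("Cluster-A.example.com:6443", clusters)) // cluster-a true
	fmt.Println(clusterForHost("10.0.0.100", clusters))                // cluster-b true
}
```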

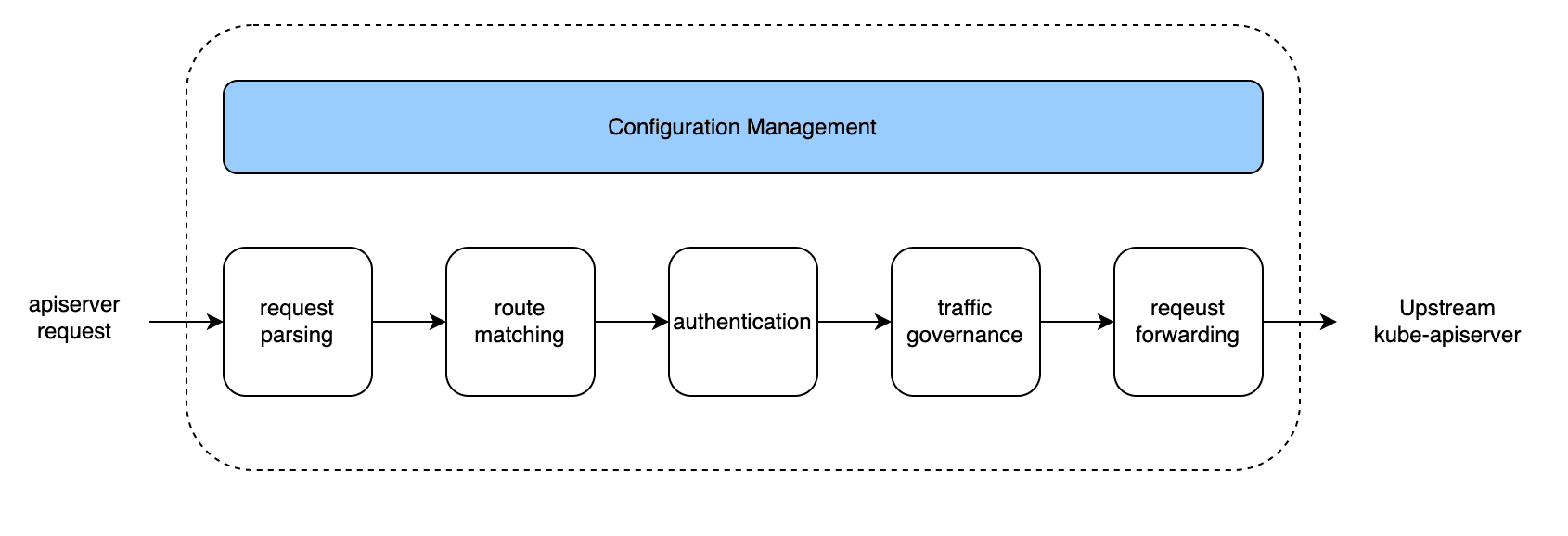

KubeGateway provides configuration management in the form of standard K8s APIs, mainly covering routing and forwarding rules, upstream cluster kube-apiserver addresses, cluster certificates, flow control and other request governance configuration. As shown in the figure below, its proxy flow is divided into five steps: request parsing, route matching, authentication, traffic governance and request forwarding.

[Figure: KubeGateway proxy flow]

Request Parsing

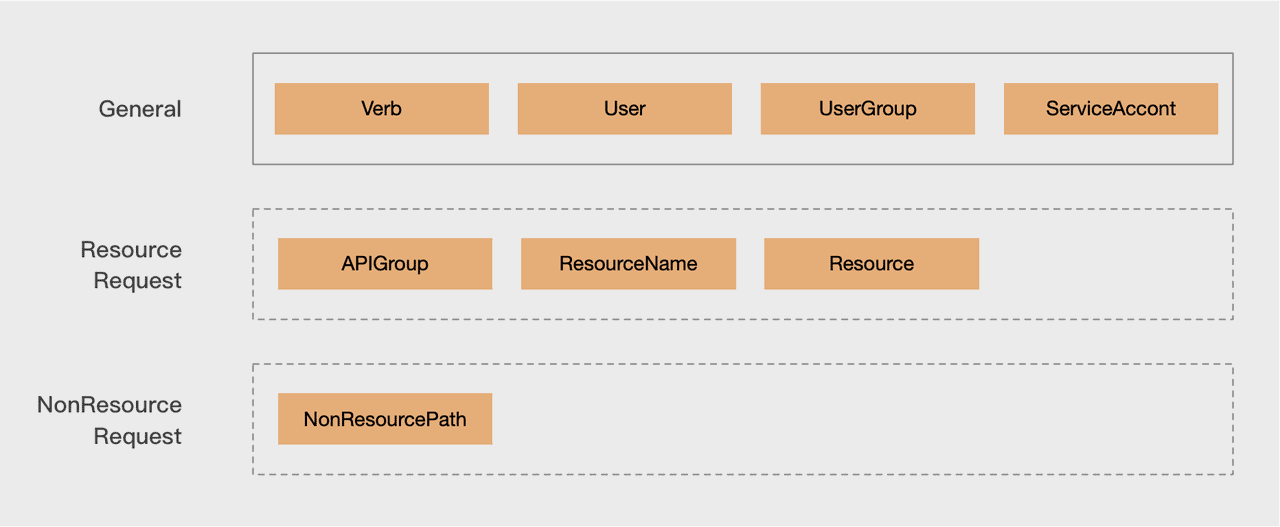

KubeGateway understands the kube-apiserver request model in depth and can extract more information from each request. It divides kube-apiserver requests into two types:

- Resource requests, such as CRUD (create, read, update, delete) operations on pods

- Non-resource requests, such as accessing /healthz to check the health of kube-apiserver or /metrics to view exposed metrics

For resource requests, the following fields can be parsed from the request’s URL and headers:

| Request section | Field | Meaning |
| --- | --- | --- |
| Resource | APIGroup | Resource group |
| | Resource | Resource type |
| | ResourceName | Resource name |
| Operation type | Verb | Including create, delete, deletecollection, update, patch, get, list, watch |
| User info | UserGroup | User group |
| | User | User name |

Together, these fields give each request a set of multi-dimensional routing attributes, as shown in the figure below, which serve as the basis for routing.

Route Matching

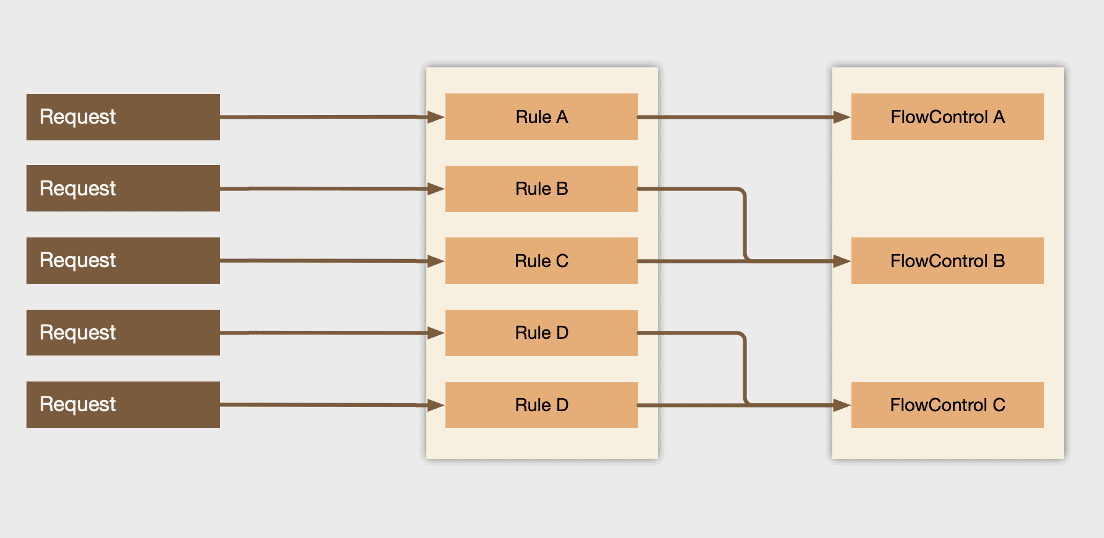

With these multi-dimensional routing fields, it is easy to build powerful routing rules that distinguish different API requests, for example:

- Verb and Resource together can match all list-pods requests.

- User, UserGroup, ServiceAccount and similar fields can match requests from core control-plane components such as kube-controller-manager and kube-scheduler.

Once requests are distinguished by routing rules, we can apply finer-grained traffic splitting, rate limiting and other flow control to them.

Matching rules can be modified directly through UpstreamCluster, KubeGateway's configuration management API, and changes take effect in real time.
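Such rules can be sketched as a list of matchers evaluated in order. The RouteRule type below is hypothetical and is not KubeGateway's UpstreamCluster schema: an empty field acts as a wildcard, and the first matching rule wins. The rule set mirrors the examples above, with control-plane components matched before the general list-pods rule.

```go
package main

import "fmt"

// RouteRule: each non-empty field must contain the request's value.
type RouteRule struct {
	Name       string
	Verbs      []string
	Resources  []string
	Users      []string
	UserGroups []string
}

// Request carries the parsed routing fields.
type Request struct {
	Verb, Resource, User string
	UserGroups           []string
}

// matchAny is true if allowed is empty (wildcard) or shares a value with values.
func matchAny(allowed []string, values ...string) bool {
	if len(allowed) == 0 {
		return true
	}
	for _, a := range allowed {
		for _, v := range values {
			if a == v {
				return true
			}
		}
	}
	return false
}

func (r RouteRule) Matches(req Request) bool {
	return matchAny(r.Verbs, req.Verb) &&
		matchAny(r.Resources, req.Resource) &&
		matchAny(r.Users, req.User) &&
		matchAny(r.UserGroups, req.UserGroups...)
}

// firstMatch returns the first matching rule's name; order encodes priority.
func firstMatch(rules []RouteRule, req Request) string {
	for _, r := range rules {
		if r.Matches(req) {
			return r.Name
		}
	}
	return "default"
}

func main() {
	rules := []RouteRule{
		{Name: "control-plane", Users: []string{"system:kube-controller-manager", "system:kube-scheduler"}},
		{Name: "list-pods", Verbs: []string{"list"}, Resources: []string{"pods"}},
	}
	fmt.Println(firstMatch(rules, Request{Verb: "list", Resource: "pods", User: "alice"}))                // list-pods
	fmt.Println(firstMatch(rules, Request{Verb: "list", Resource: "pods", User: "system:kube-scheduler"})) // control-plane
	fmt.Println(firstMatch(rules, Request{Verb: "get", Resource: "nodes", User: "alice"}))                 // default
}
```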

Authentication

To proxy kube-apiserver traffic at layer seven while still letting the upstream kube-apiserver authenticate and authorize each proxied request correctly, KubeGateway must pass the original user information through to kube-apiserver transparently. This in turn requires KubeGateway itself to authenticate the user information in the original request.

KubeGateway groups the authentication methods supported by kube-apiserver into the following categories:

- x509 client certificate authentication: KubeGateway verifies the client certificate against the CA certificate configured in UpstreamCluster and parses the user and user group information from it.

- Bearer token authentication: KubeGateway sends a TokenReview request to the upstream kube-apiserver, asking it to authenticate the bearer token, and obtains the corresponding user information from the response.

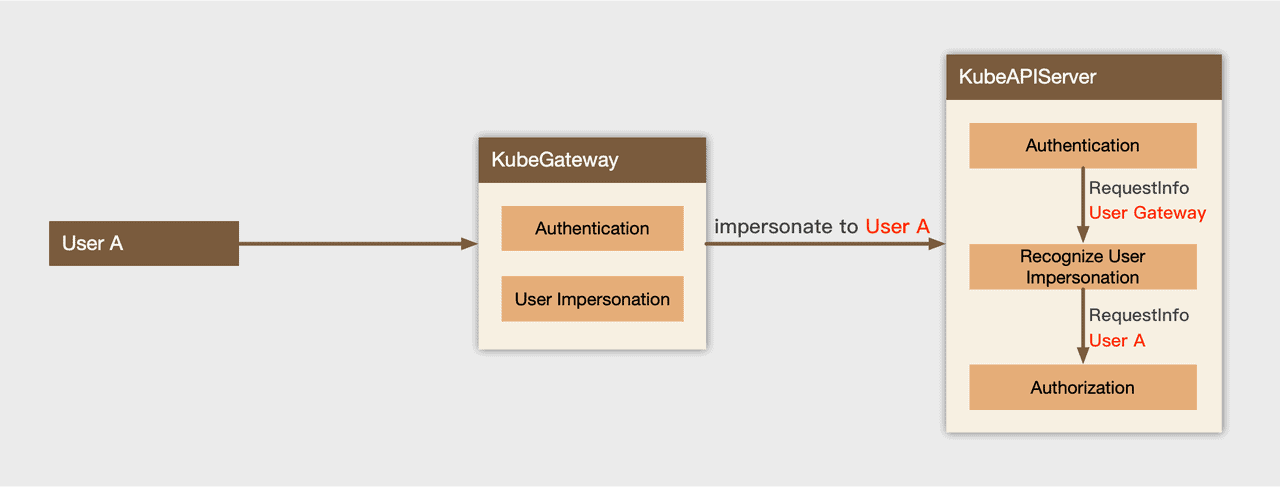

After identifying the user, KubeGateway forwards the request using the impersonation mechanism (user acting) provided by kube-apiserver, described in the User Impersonation section below. KubeGateway only authenticates requests and does not authorize them; authorization is performed by the upstream kube-apiserver.

Request Governance

As a seven-layer gateway, KubeGateway has rich traffic governance capabilities, including:

Load Balance Strategy

Once the target cluster of a request is identified, its set of server endpoints is also known. KubeGateway then selects one upstream endpoint according to the load balancing policy and forwards the request to it. A good load balancing strategy improves resource efficiency, maximizes throughput, reduces latency and improves fault tolerance.

Currently KubeGateway supports Round Robin and Random load balancing strategies, which are simple, effective and meet the needs of most scenarios. KubeGateway also supports flexible load balancing policy extensions, so algorithms such as Least Request can be implemented quickly for other scenarios.

kube-apiserver health monitoring

KubeGateway periodically accesses the /healthz endpoint of each kube-apiserver to monitor its health. Proxy traffic is forwarded only to healthy kube-apiserver instances; an unhealthy instance is temporarily removed from rotation and receives new traffic again only after it recovers.

Rate limiting

KubeGateway provides rate limiting, which can effectively prevent the upstream kube-apiserver from being overloaded in certain situations. Compared with Kubernetes' own APF (API Priority and Fairness), its rate limiting configuration is easier to understand and configure. After a request is parsed and route-matched, KubeGateway determines which rate limiting rule applies to it.

For example, suppose we want to limit the QPS of list-pods requests while exempting control-plane components such as controller-manager and scheduler. We can distinguish these two types of users in route matching and then configure separate rate limiting rules for each.

KubeGateway provides two strategies for rate limiting:

- Token bucket: a commonly used rate limiting method that effectively limits request QPS while allowing bursts to some extent.

- Max requests inflight: a stricter strategy than the token bucket. It limits the maximum number of requests executing at the same time and is usually applied to expensive requests, such as listing all pods in a large cluster. Such a request may last for several minutes and consume a large amount of kube-apiserver resources. A token bucket limits only the arrival rate, so it may still let too many of these requests run concurrently and cause kube-apiserver to OOM.

Circuit Breaker

KubeGateway can reject all requests to deal with an abnormal cluster control plane.

A kube-apiserver or etcd failure may cause an avalanche in which some requests succeed and others fail. Clients keep retrying, which can easily lead to unexpected behavior such as nodes going offline and large numbers of pods being evicted or deleted.

In this case, developers can use KubeGateway's degradation operation to reject all traffic. In this state the cluster is effectively frozen, which keeps existing pods alive and avoids business impact. Once kube-apiserver returns to normal, restrictions are first lifted for node heartbeats, and then the rest of the cluster's traffic is restored.

Reverse Proxy

The reverse proxy part of KubeGateway involves the following key techniques:

User Impersonation

When forwarding, KubeGateway impersonates users by adding the following user information headers to the request sent to the upstream kube-apiserver:

Impersonate-User: Client Name

Impersonate-Group: Client Group

Impersonation is a mechanism provided by kube-apiserver that allows one user to act as another user when performing API requests. Before using it, the identity KubeGateway uses to connect to the upstream kube-apiserver must be granted the impersonate permission through RBAC. The impersonation process is as follows:

- kube-apiserver authenticates KubeGateway itself and recognizes the impersonation request.

- kube-apiserver checks that KubeGateway has the impersonate permission.

- kube-apiserver reads the impersonated user name and groups from the HTTP headers and replaces the request's executor with the impersonated user.

- kube-apiserver performs authorization for the impersonated user to check whether it may access the requested resource.

kube-apiserver supports impersonation well, and its audit log also records impersonated requests.

In the end, KubeGateway relies on authentication and impersonation to pass the original user information through, solving the problem that a traditional seven-layer LB cannot proxy kube-apiserver requests. Throughout the proxy process, KubeGateway is completely transparent to clients, which need no modification.

TCP Multiplexing

KubeGateway uses HTTP/2 by default. Thanks to HTTP/2 multiplexing, a single connection supports 250 streams by default, that is, 250 concurrent requests per connection, so the number of TCP connections to a single kube-apiserver can be reduced by two orders of magnitude.

Port-Forward & Exec Requests

Some requests, such as port-forward and exec, must first establish a connection over HTTP/1.1 and then switch it to another protocol (such as SPDY or WebSocket). KubeGateway forwards such requests with HTTP/2 disabled so that the connection can be hijacked.

Production Results

KubeGateway performs well in benchmark tests: proxying adds only about 1 ms of request latency. KubeGateway has now smoothly taken over all Kubernetes clusters at ByteDance, handling more than 350,000 QPS. With KubeGateway, the R&D team completely solved the traffic imbalance of the kube-apiserver cluster and greatly enhanced the governance of kube-apiserver requests, effectively improving cluster stability and availability.

We have also made many optimizations to KubeGateway's graceful upgrade, multi-cluster dynamic certificate management and observability. We will introduce the technical details in future posts, so stay tuned.

Future Evolution

KubeGateway is now open source on GitHub (https://github.com/kubewharf/kubegateway) and will continue to evolve in the following directions:

- More complete seven-layer capabilities, such as blocklists and allowlists, caching, etc.;

- Continuously improved observability, to quickly locate problems and assist troubleshooting in abnormal situations;

- Exploring a new transparent federation approach based on KubeGateway.