Guest post originally published on Elastisys’ blog

In this post, we take a closer look at confidential computing (CC). What is it? What does it promise? Does it live up to its hype? And can companies finally trust their data to cloud providers and actually trust that CC removes the need to worry about the cloud provider as a sub-processor with access to the data?

Big US cloud providers, like AWS, Azure and Google, all tout how, thanks to confidential computing features, their clouds are now more secure than ever. However, we in the EU don’t care only about security.

We also care about privacy. Indeed, the European Data Protection Board (EDPB) made it clear in 2020 (via Recommendation 01/2020) that they are unimpressed by big words such as “encryption at rest”, “encryption in transit” and “encryption in use”. What they really care about is who can (and can’t) get access to the data.

What is the difference between privacy and security?

To start with, let’s make sure we clearly understand the difference between privacy and security. This is key to understanding the benefits and limitations of CC.

Security means protecting data from attackers. In other words, the confidentiality, integrity and availability (commonly and jokingly abbreviated “CIA”) of data should be protected against an adversary. Adversaries range from so-called “script kiddies”, who steal data and take down systems for fun, to sophisticated attackers who have a budget and finance their operations by collecting ransom, or who are even state-sponsored. The sophisticated ones are even thought to have roadmaps, work in sprints and use JIRA.

Privacy means protecting data from unauthorized use, for example by foreign governments, AdTech, or organizations trying to influence elections, such as Cambridge Analytica. Indeed, we now know that certain industries – AdTech being perhaps the biggest violator – cannot discipline themselves against monetizing personal data (see court decisions: Google, Meta, Microsoft). They could do what they are doing given the user’s consent, but who would consent?

Furthermore, we now know that governments see great value in using collected personal data for law enforcement: sometimes for good and sometimes for bad. Problems appear when one nation prioritizes national security over the privacy of another nation’s citizens. Which leads to the next point about privacy vs. legislation that impacts it.

How does privacy relate to the US CLOUD Act and FISA?

The US has made it clear that it prioritizes national security and intelligence over the privacy of EU citizens. We blogged about the timeline in detail, but long story short:

- US CLOUD Act and US FISA allow the US government to formally request data on EU citizens with little or no court oversight.

- The US government can force US cloud providers to stay silent, via so-called gag orders.

- US cloud providers were ruled to be at US CLOUD Act risk, even if they store data in data centers geographically located in the EU.

Note that none of this has changed as a result of the 2023 EU-US Data Privacy Framework. Instead, the adequacy decision makes it clear that US violations of EU privacy will occur and aims to regulate them, rather than prohibit them.

Can US cloud services be used if one just encrypts all data?

In a word: no.

Encryption-in-transit won’t help. Personal data will be decrypted when it reaches your cloud provider. For example, AWS can easily provide data behind an Elastic Load Balancer for official access, since that is where data is encrypted/decrypted. Whether the encryption key is provided by the cloud provider, or whether it is a so-called “Customer-Managed Encryption Key” doesn’t matter. In both cases, the US cloud provider can decrypt the encrypted data.

Encryption-at-rest won’t help either. It’s true that data will be encrypted when stored on disk. But guess who will decrypt it for you before it reaches your Virtual Machine or container? You guessed it, the US cloud provider! Again, it doesn’t matter how the encryption key was generated, whether it’s managed by the cloud provider or “Customer-Managed”.

In both cases, data is encrypted at rest and in transit, but it won’t be encrypted in use. In fact, processing data in encrypted form is a sort of holy grail in cloud computing. Homomorphic encryption provides a theoretical foundation for encryption in use. However, at the time of this writing, it’s at least 100x slower than processing unencrypted data, and it has yet to see practical adoption.

Organizations have to ask themselves whether it is financially defensible to pay 100x more on their cloud provider bill, just to keep using a US cloud provider.
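To make “processing data in encrypted form” a bit more concrete, here is a minimal sketch of an additively homomorphic scheme (textbook Paillier) in Python. It is an illustration only: the primes are tiny, well-known Mersenne primes chosen for readability, the function names are our own, and Paillier supports only addition on ciphertexts, not arbitrary computation. Nothing here reflects what a cloud provider actually offers.

```python
import math
import secrets

# Toy parameters: two Mersenne primes. Far too small and too structured
# for real use; they only keep the example short.
P = 2**61 - 1
Q = 2**89 - 1


def keygen(p=P, q=Q):
    """Return (public modulus n, private key (lam, mu))."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)  # modular inverse, valid because g = n + 1
    return n, (lam, mu)


def encrypt(n, m):
    """Encrypt integer m (0 <= m < n) under public key n."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    # With g = n + 1, g**m mod n^2 simplifies to 1 + m*n.
    return (1 + m * n) % n2 * pow(r, n, n2) % n2


def decrypt(n, priv, c):
    lam, mu = priv
    x = pow(c, lam, n * n)
    return (x - 1) // n * mu % n


def add_encrypted(n, c1, c2):
    """Add two plaintexts by multiplying their ciphertexts."""
    return c1 * c2 % (n * n)


n, priv = keygen()
c_sum = add_encrypted(n, encrypt(n, 20), encrypt(n, 22))
print(decrypt(n, priv, c_sum))  # prints 42
```

The party calling `add_encrypted` never sees 20, 22 or 42; only the holder of the private key does. The price is visible even in this toy: one addition becomes a multiplication of very large numbers, which hints at where the slowdown comes from.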

How does Confidential Computing work, in a nutshell?

Don’t trust the cloud, trust the hardware manufacturer. But can you trust the hardware manufacturer?

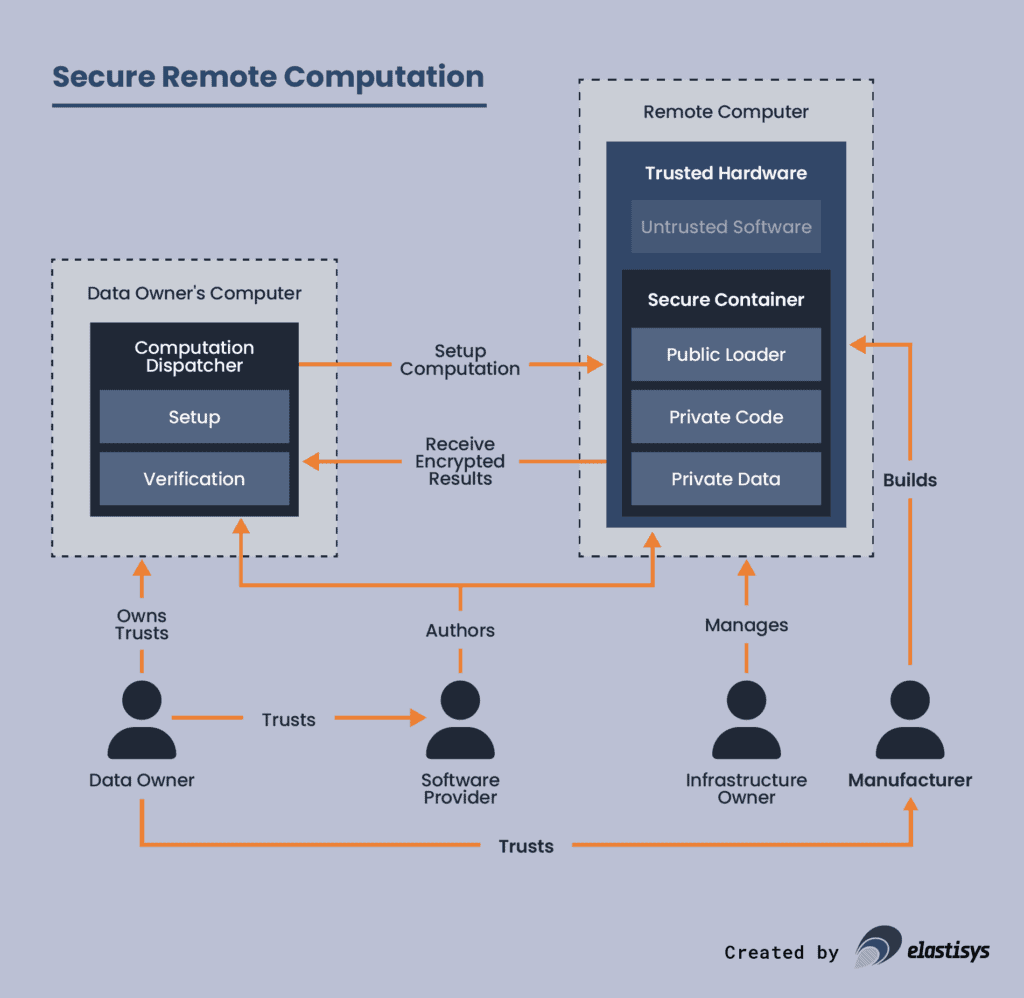

We are over-simplifying a bit, but here is a “jargon-removed-but-still-kinda-accurate” technical description of confidential computing:

- The hardware enforces separation. Application code and data (i.e., containers or VMs) run in so-called “enclaves”. The CPU makes sure that “cloud provider code and data” (i.e., hypervisor) cannot access code and data in enclaves. The hardware also encrypts/decrypts data of an enclave as it moves to/from memory.

- The hardware provides remote attestation. It proves to the application developer (via code hashing and public-key cryptography) that the developer truly talks to their code and that the code truly runs in an enclave. The hardware stores the keys, both for encryption and remote attestation. The hardware pinky promises to not have a security bug or backdoor to release the keys. The keys are part of a certificate chain, which can be followed up to a root certificate. The hardware and the root certificate are part of your root of trust.
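The remote attestation idea can be sketched in a few lines of Python. This is a deliberately simplified model, not how Nitro or SEV-SNP actually format or sign their reports: the “hardware key” is a toy textbook-RSA key built from small known primes, there is no certificate chain, and all names (`hardware_attest`, `developer_verify`, and so on) are hypothetical.

```python
import hashlib
import secrets

# Toy RSA key standing in for the hardware's attestation key.
# Assumption for illustration: real keys are far larger, use proper
# padding, and are vouched for by a vendor certificate chain.
_P, _Q = 2**61 - 1, 2**89 - 1
HW_N = _P * _Q          # public modulus
HW_E = 65537            # public exponent
_HW_D = pow(HW_E, -1, (_P - 1) * (_Q - 1))  # private, never leaves "hardware"


def measure(code: bytes) -> bytes:
    """Code hashing: the measurement is a digest of what was loaded."""
    return hashlib.sha256(code).digest()


def _digest_int(measurement: bytes, nonce: bytes) -> int:
    h = hashlib.sha256(measurement + nonce).digest()
    return int.from_bytes(h, "big") % HW_N


def hardware_attest(code: bytes, nonce: bytes):
    """Runs 'inside the hardware': sign (measurement, nonce)."""
    m = measure(code)
    signature = pow(_digest_int(m, nonce), _HW_D, HW_N)
    return m, signature


def developer_verify(expected_code: bytes, nonce: bytes, report) -> bool:
    """Runs on the developer's machine, using only public values."""
    m, signature = report
    signed_by_hw = pow(signature, HW_E, HW_N) == _digest_int(m, nonce)
    return signed_by_hw and m == measure(expected_code)


nonce = secrets.token_bytes(16)  # fresh per request, prevents replay
report = hardware_attest(b"my-app-v1", nonce)
print(developer_verify(b"my-app-v1", nonce, report))
print(developer_verify(b"tampered-app", nonce, report))
```

Note what the check proves: the signature came from whoever holds `_HW_D`. Everything therefore hinges on who controls that key and the certificate chain above it.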

In the case of AWS, the so-called Nitro System is the hardware that enforces separation, provides remote attestation and stores various keys. Azure and Google Cloud use AMD processors with AMD SEV-SNP technology.

We have to stop here for one second. Making hardware both fast and secure is really hard. At the time of this writing, 8 security bugs have been found in Intel SGX, the technology fueling some CC implementations. Security bugs have also been found in AMD SEV-SNP. Let’s turn a blind eye and analyze the merits of CC assuming no security bugs.

Why does confidential computing fail to protect data against US CLOUD Act and FISA?

Alternatively: “Does Confidential Computing (CC) protect my data from the US CLOUD Act and US FISA when my data is stored, with CC, on a US cloud?”

It is a simple “yes” or “no” question. Unfortunately, the answer is NO! But why?

Let’s talk about two things: root of trust and disk encryption.

Root of Trust

Even in confidential computing, you need to trust something. Otherwise, how can you trust the measurements needed for remote attestation?

In the case of AWS, the root of trust is the so-called AWS Nitro System. That’s right! You need to trust the same AWS that a French court ruled to be at US CLOUD Act risk.

What about Azure? In their case, the root of trust is AMD, the processor manufacturer based in Santa Clara, California, US. So whether you run Confidential VMs or Confidential Containers, you need to trust that AMD will violate US law and reject requests from US authorities to help them hand over your data.

If I sound skeptical, it’s because I am. Lavabit’s suspension and gag order, as well as the Apple–FBI encryption dispute, are evidence of how hard it is to refuse such requests.

Disk Encryption

Let us move from the legal elephant in the room to the technical one: Where do you store the disk encryption key?

Remember that we wrote above how the European Data Protection Board (EDPB) doesn’t care whether data is encrypted; what matters is who can use the encryption key. In CC, memory is encrypted with the encryption key stored in the AMD processors or the AWS Nitro System. (See why the root of trust is so important?)

But what about the encryption key to the disk? We definitely need to encrypt the disk, otherwise Azure can comply with government access by just dumping the content of the VM’s disk.

If I understand the Azure fine print correctly, the disk encryption key is held by Azure. Indeed, if you want to be “as confidential as it gets” on Azure, you would need to store the disk encryption keys yourself, and only hand them to your Confidential VM or Container after remote attestation.

(🤓 Geeky detail: The Confidential VM can generate an ephemeral private-public key-pair and send you the public key as part of remote attestation. This would allow you to securely send the disk encryption key to your code. So, theoretically, you could implement Azure-proof disk encryption on Azure, but that’s not how it currently works.)
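A rough sketch of that key hand-over, in Python, might look as follows. It assumes the enclave’s ephemeral public key reached you inside an attestation report that you have already verified (not shown here). The key exchange is toy Diffie-Hellman over a small Mersenne prime with a hash-derived XOR pad, chosen only to keep the example dependency-free; a real design would use a vetted scheme with authentication. All function names are hypothetical.

```python
import hashlib
import secrets

PRIME = 2**127 - 1  # toy modulus, far too small for real use
GEN = 3             # toy base


def enclave_keypair():
    """Inside the Confidential VM: ephemeral private/public key pair."""
    priv = secrets.randbelow(PRIME - 2) + 1
    return priv, pow(GEN, priv, PRIME)


def _pad(shared: int, length: int) -> bytes:
    """Derive `length` pad bytes from the shared secret (SHA-256, counter mode)."""
    out, counter = b"", 0
    while len(out) < length:
        block = shared.to_bytes(16, "big") + counter.to_bytes(4, "big")
        out += hashlib.sha256(block).digest()
        counter += 1
    return out[:length]


def owner_wrap(disk_key: bytes, enclave_pub: int):
    """On your own machine: wrap the disk key for the attested enclave."""
    eph_priv = secrets.randbelow(PRIME - 2) + 1
    eph_pub = pow(GEN, eph_priv, PRIME)
    shared = pow(enclave_pub, eph_priv, PRIME)
    pad = _pad(shared, len(disk_key))
    return eph_pub, bytes(a ^ b for a, b in zip(disk_key, pad))


def enclave_unwrap(priv: int, wrapped) -> bytes:
    """Inside the Confidential VM: recover the disk key."""
    eph_pub, ciphertext = wrapped
    shared = pow(eph_pub, priv, PRIME)
    pad = _pad(shared, len(ciphertext))
    return bytes(a ^ b for a, b in zip(ciphertext, pad))


priv, pub = enclave_keypair()          # pub travels in the attestation report
disk_key = secrets.token_bytes(32)     # generated and stored by you
wrapped = owner_wrap(disk_key, pub)    # this is all the cloud provider sees
print(enclave_unwrap(priv, wrapped) == disk_key)
```

The provider only ever handles `pub` and `wrapped`; the disk key exists in plaintext on your machine and inside the enclave. Whether the enclave itself can be trusted is, again, the root-of-trust question.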

Takeaways

Hugo Landau, an OpenSSL developer, said the following (emphasis added):

A cryptosystem is incoherent if its implementation is distributed by the same entity which it purports to secure against.

Let those words sink in.

As a summary:

- Confidential Computing greatly improves security by reducing the number of things and people you need to trust.

- Confidential Computing makes it a bit more complicated for governments to get access to your data … but it is NOT impossible!

- But there is no way that US companies will break US law for you.

At the end of the day, if you really care about keeping your data safe from US government access, there is but one solution: Pick a provider in your jurisdiction.

On that note, Elastisys is a fully EU owned and operated managed Kubernetes platform service provider. We enable companies within the EU to accelerate innovation, developing their applications at speed without compromising security or data privacy.