Member post originally published on Nirmata’s blog by Jim Bugwadia and Khaled Emara

About Kyverno

Kyverno is a policy engine designed for Kubernetes and cloud native workloads. Policies can be managed as Kubernetes resources, and no new language is required to write policies. Policy reports and exceptions are also Kubernetes resources. This approach allows using familiar tools such as kubectl, git, and kustomize to manage policies and results. Kyverno policies can validate, mutate, generate, and clean up Kubernetes resources, as well as verify image signatures and artifacts to help secure the software supply chain. The Kyverno CLI can be used to test policies and validate resources as part of a CI/CD pipeline.

Since Kyverno operates as an admission controller, it needs to be very secure, highly scalable, and reliable, as high latency or other performance issues can impact cluster operations. In this post, we will detail some of the key performance improvements we have made to Kyverno, with the help of our customers and community, and how we test for load and performance for each new release of Kyverno.

Prior Performance

The Nirmata team was approached by a large multinational e-commerce company that was replacing Pod Security Policies with Kyverno policies. They operate some of the world’s largest clusters and have extremely stringent load and performance requirements. While Kyverno worked well in normal conditions, they were seeing poor performance under high loads. Increasing memory and CPU resources helped a bit, but performance would quickly degrade when further load was applied.

Around the same time, a platform team at a leading automotive company was also evaluating Kyverno, and one of its engineers contributed the load testing method they used with K6, a load testing tool that allows writing API tests in JavaScript.

The teams simulated real-world scenarios to evaluate the system’s behavior under different levels of stress. This methodology provided valuable insights into Kyverno’s scalability, uncovering performance thresholds and potential pain points that required addressing. The load testing phase became instrumental in ensuring Kyverno’s robustness in handling varying workloads.

K6 has multiple executors, the most popular of which is the shared-iterations executor. This executor creates a number of concurrent connections called virtual users (VUs), and the total number of iterations is then distributed among them.
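The idea behind shared iterations can be sketched in Go. This is only an illustration of the scheduling concept, not K6 code: `runSharedIterations` and its parameters are hypothetical names, and a real test would send an admission request inside the callback.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// runSharedIterations starts `vus` workers that together complete exactly
// `iterations` calls to fn. Workers pull from one shared counter, so faster
// workers naturally pick up more iterations. It returns the number of
// iterations each worker completed.
func runSharedIterations(vus, iterations int, fn func(vu, iter int)) []int {
	var next int64 // next iteration index to hand out
	perVU := make([]int, vus)
	var wg sync.WaitGroup
	for vu := 0; vu < vus; vu++ {
		wg.Add(1)
		go func(vu int) {
			defer wg.Done()
			for {
				i := atomic.AddInt64(&next, 1) - 1
				if i >= int64(iterations) {
					return // the shared pool is exhausted
				}
				fn(vu, int(i))
				perVU[vu]++ // each worker writes only its own slot
			}
		}(vu)
	}
	wg.Wait()
	return perVU
}

func main() {
	perVU := runSharedIterations(25, 250, func(vu, iter int) {
		// A real load test would send an admission request here.
	})
	total := 0
	for _, n := range perVU {
		total += n
	}
	fmt.Println("total iterations:", total)
}
```

The per-worker counts vary from run to run, but their sum always equals the configured number of iterations.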

We created a test in which we installed 17 Kyverno policies that enforce the Kubernetes Pod Security Standards. Then, we wrote a test that submits a non-compliant Pod and measured how long Kyverno takes to reject the admission request.

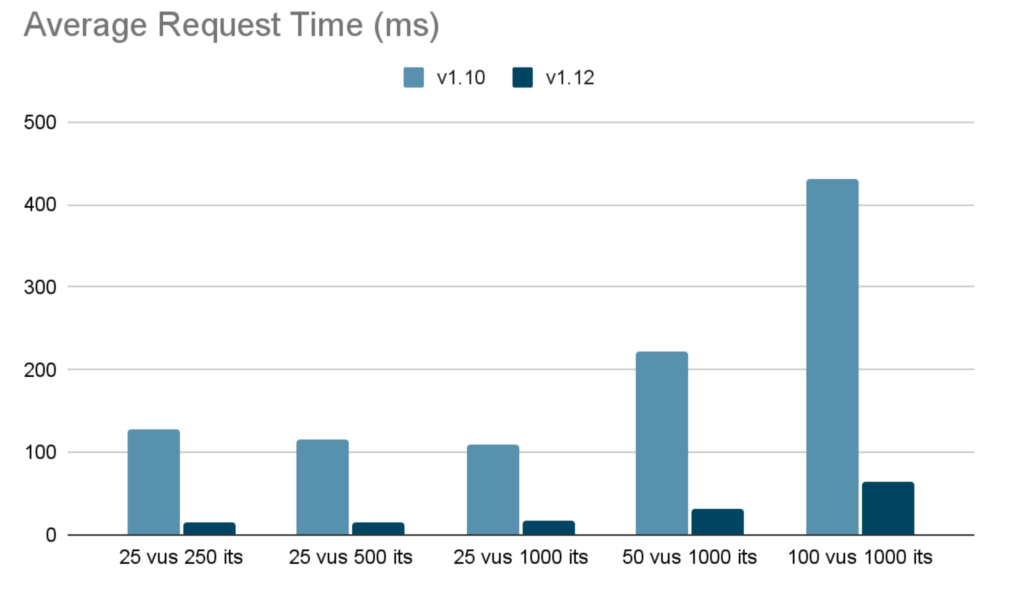

With Kyverno 1.10, after applying the test above using 25vus and 250 iterations, we initially got the following results:

http_req_duration..........: avg=127.95ms min=65.63ms med=124.75ms max=245.33ms p(90)=177.64ms p(95)=190.64ms

For 25vus, 500 iterations:

http_req_duration..........: avg=116.47ms min=60.68ms med=111.5ms max=284.21ms p(90)=158.41ms p(95)=175.03ms

For 25vus, 1000 iterations:

http_req_duration..........: avg=110.36ms min=55.69ms med=105.11ms max=292.46ms p(90)=146.54ms p(95)=161.88ms

For 50vus, 1000 iterations:

http_req_duration..........: avg=222.59ms min=54.26ms med=211.25ms max=497ms p(90)=326.33ms p(95)=351.39ms

For 100vus, 1000 iterations:

http_req_duration..........: avg=430.43ms min=98.94ms med=382.43ms max=2.07s p(90)=652.73ms p(95)=782.91ms

Profiling using pprof in Golang

To gain deeper insight into Kyverno’s performance bottlenecks, the team used pprof, the profiling tool that ships with Go. Profiling the application allowed a comprehensive analysis of CPU and memory usage and helped identify the critical areas that required attention.

This data-driven approach played a crucial role in steering the performance optimization efforts, enabling the team to make informed decisions on where to focus their efforts for maximum impact.
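For readers unfamiliar with pprof, here is a minimal sketch of how a Go service can expose profiling endpoints using the standard `net/http/pprof` package. It is not Kyverno's actual wiring: the helper names `newProfilingMux` and `probe`, and the port in the comment, are assumptions made for illustration.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"net/http/pprof"
)

// newProfilingMux returns a mux that exposes the standard pprof endpoints.
// pprof.Index also serves named profiles such as heap and goroutine.
func newProfilingMux() *http.ServeMux {
	mux := http.NewServeMux()
	mux.HandleFunc("/debug/pprof/", pprof.Index)
	mux.HandleFunc("/debug/pprof/profile", pprof.Profile) // CPU profile
	mux.HandleFunc("/debug/pprof/trace", pprof.Trace)
	return mux
}

// probe issues an in-process GET against the handler and returns the status.
func probe(h http.Handler, path string) int {
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, httptest.NewRequest(http.MethodGet, path, nil))
	return rec.Code
}

func main() {
	mux := newProfilingMux()
	fmt.Println("goroutine profile status:", probe(mux, "/debug/pprof/goroutine?debug=1"))
	// In a real service the mux would be served on a local-only port, e.g.
	//   http.ListenAndServe("localhost:6060", mux)
	// and a CPU profile could then be explored as a flamegraph with
	//   go tool pprof -http=:8080 "http://localhost:6060/debug/pprof/profile?seconds=30"
}
```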

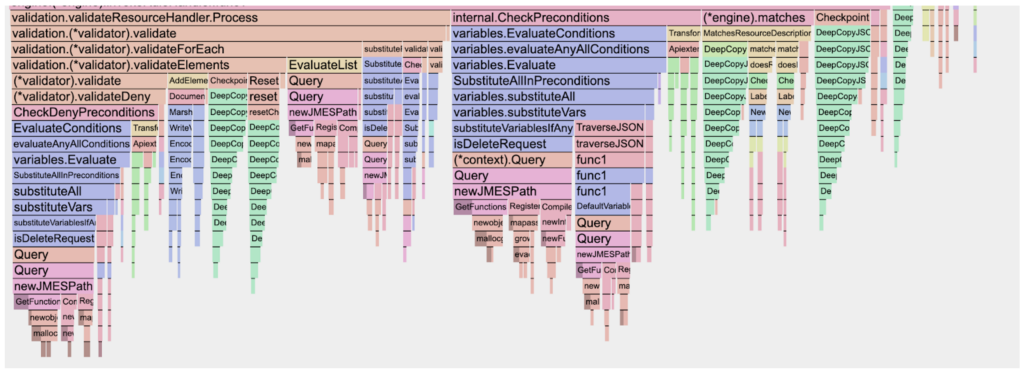

After enabling pprof in Kyverno and generating a flamegraph, we got the following:

From the graph, it was clear that checking preconditions and copying JSON takes a large amount of time.

After investigating, we found that Kyverno uses an internal “Context” to store information about the request and rule and to process variables. The Context was stored as a JSON structure, so each query required a marshal and an unmarshal operation.

We also found that each request created a new JMESPath interpreter, which in turn allocated a large function map for the custom JMESPath filters. This is wasteful, as these functions do not change over the life of the application.
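The usual remedy for this kind of waste is to build the function map once and share it across requests. The sketch below shows that pattern with `sync.Once`. The filter names and the `handleRequest` helper are hypothetical stand-ins, not Kyverno's JMESPath functions.

```go
package main

import (
	"fmt"
	"strings"
	"sync"
)

type filterFunc func(string) string

// builds counts how often the map is constructed, for demonstration only.
var builds int

// buildFilters stands in for allocating a large custom function map.
func buildFilters() map[string]filterFunc {
	builds++
	return map[string]filterFunc{
		"to_upper": strings.ToUpper,
		"to_lower": strings.ToLower,
		"trim":     strings.TrimSpace,
	}
}

var (
	once   sync.Once
	shared map[string]filterFunc
)

// sharedFilters builds the map on first use and reuses it afterwards.
// The map is never modified after construction, so concurrent reads are safe.
func sharedFilters() map[string]filterFunc {
	once.Do(func() { shared = buildFilters() })
	return shared
}

// handleRequest simulates per-request evaluation that needs the filters.
func handleRequest(input string) string {
	return sharedFilters()["to_upper"](input)
}

func main() {
	for i := 0; i < 1000; i++ {
		handleRequest("pod")
	}
	fmt.Println("function map built", builds, "time(s)")
}
```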

Finally, we also watched the goroutine profile. Every time we ran the test, the goroutine count climbed to 4000 and then decayed slowly, at about 20 goroutines per second, which caused a spike in memory. Further investigation traced this to the EventBroadcaster, which created a goroutine for each Event.

Changes Made

A number of changes were made to improve Kyverno’s overall performance. These changes are tracked in issue #8260, and the main ones are discussed below:

In-memory Golang Map for Context

One significant enhancement involved replacing the existing JSON structure used for storing context with an in-memory Golang map. The previous approach involved repetitive marshaling and unmarshaling, contributing to unnecessary overhead. By adopting an in-memory map, Kyverno reduced these operations, leading to a more efficient and performant context management system.
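To make the difference concrete, here is a simplified sketch that contrasts the two storage strategies. The types `jsonContext` and `mapContext` are hypothetical and far simpler than Kyverno's real context, but they show where the repeated marshal and unmarshal calls come from.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// jsonContext keeps its state as raw JSON. Every read decodes the whole
// document, and every write decodes and re-encodes it.
type jsonContext struct{ raw []byte }

func (c *jsonContext) set(key string, value any) error {
	data := map[string]any{}
	if len(c.raw) > 0 {
		if err := json.Unmarshal(c.raw, &data); err != nil {
			return err
		}
	}
	data[key] = value
	raw, err := json.Marshal(data)
	if err != nil {
		return err
	}
	c.raw = raw
	return nil
}

func (c *jsonContext) get(key string) (any, error) {
	data := map[string]any{}
	if err := json.Unmarshal(c.raw, &data); err != nil {
		return nil, err
	}
	return data[key], nil
}

// mapContext keeps the decoded data in memory, so reads and writes are
// plain map operations with no serialization.
type mapContext struct{ data map[string]any }

func (c *mapContext) set(key string, value any) { c.data[key] = value }
func (c *mapContext) get(key string) any        { return c.data[key] }

func main() {
	jc := &jsonContext{}
	if err := jc.set("operation", "CREATE"); err != nil {
		panic(err)
	}
	fromJSON, err := jc.get("operation")
	if err != nil {
		panic(err)
	}

	mc := &mapContext{data: map[string]any{}}
	mc.set("operation", "CREATE")

	fmt.Println(fromJSON, mc.get("operation"))
}
```

Both variants return the same values. The map-based one simply avoids a full decode on every lookup.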

jsoniter Implementation

To optimize JSON handling, the team switched from the JSON package in Go’s standard library to jsoniter, a lightweight, high-performance JSON library. This significantly improved the efficiency of JSON-related operations within Kyverno.

Optimizing event generation

While looking for ways to make event processing more efficient, we found a bottleneck in the default EventBroadcaster from client-go. It created a goroutine for every event, which led to memory spikes and frequent controller shutdowns.

To address this, we implemented a tailored Watcher function using client-go. This gave us greater control over how events are created and processed and allowed us to avoid excessive goroutine creation.

As a result, the memory spikes were alleviated and the controller shutdowns were eliminated, improving Kyverno’s overall stability and reliability.
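The general principle, bounding the number of goroutines that process events, can be illustrated with a fixed worker pool fed by a buffered channel. This is a generic pattern and not the client-go Watcher implementation described above. The `eventQueue` type, its sizes, and the choice to drop events when the buffer is full are all assumptions made for the sketch.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

type event struct{ reason, message string }

// eventQueue processes events with a fixed number of workers instead of
// starting one goroutine per event.
type eventQueue struct {
	ch      chan event
	wg      sync.WaitGroup
	handled int64
	dropped int64
}

func newEventQueue(workers, capacity int, emit func(event)) *eventQueue {
	q := &eventQueue{ch: make(chan event, capacity)}
	for i := 0; i < workers; i++ {
		q.wg.Add(1)
		go func() {
			defer q.wg.Done()
			for ev := range q.ch {
				emit(ev)
				atomic.AddInt64(&q.handled, 1)
			}
		}()
	}
	return q
}

// add enqueues without blocking. When the buffer is full the event is
// dropped and counted, which keeps memory bounded under bursts.
func (q *eventQueue) add(ev event) bool {
	select {
	case q.ch <- ev:
		return true
	default:
		atomic.AddInt64(&q.dropped, 1)
		return false
	}
}

// shutdown stops accepting events and waits for the workers to drain the queue.
func (q *eventQueue) shutdown() {
	close(q.ch)
	q.wg.Wait()
}

func main() {
	q := newEventQueue(3, 1000, func(event) {
		// A real emitter would create the Kubernetes Event here.
	})
	for i := 0; i < 4000; i++ {
		q.add(event{"PolicyViolation", "pod rejected"})
	}
	q.shutdown()
	fmt.Println("handled:", q.handled, "dropped:", q.dropped)
}
```

However large the burst, only three goroutines ever run here, so the goroutine count no longer tracks the event rate.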

Optimizing policy matching

Kyverno manages an in-memory cache of policies and matches them to each incoming admission request. One area of optimization was to restructure the match logic so that more straightforward comparisons are performed first and more complex match or exclude checks are deferred. This reduces the time taken per request, and although it’s a small amount for each request, this processing time can add up under high loads.

Optimizing webhook configurations

Kyverno automatically manages webhook configurations to instruct the Kubernetes API server on which API requests should be forwarded. The webhook configuration is done per policy and in a fine-grained manner to reduce the number of requests Kyverno needs to handle. One optimization was to propagate the admission operations configured in policy rules (CREATE, UPDATE, DELETE, or CONNECT) to the webhook configurations. This way, if a policy rule should only be applied for CREATE and UPDATE operations, Kyverno will not be invoked for a DELETE or CONNECT.
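The propagation step can be pictured as computing, per resource kind, the union of operations requested by all rules. The following sketch uses hypothetical types (`policyRule`, `webhookOperations`) and assumes, for the purposes of the example only, that a rule without explicit operations falls back to all four.

```go
package main

import (
	"fmt"
	"sort"
)

// allOperations is the fallback used in this sketch when a rule lists none.
var allOperations = []string{"CREATE", "UPDATE", "DELETE", "CONNECT"}

// policyRule is a hypothetical, simplified view of a policy rule.
type policyRule struct {
	kind       string
	operations []string
}

// webhookOperations returns, for each kind, the sorted union of operations
// that any rule needs. Only these would be registered with the API server.
func webhookOperations(rules []policyRule) map[string][]string {
	sets := map[string]map[string]bool{}
	for _, r := range rules {
		ops := r.operations
		if len(ops) == 0 {
			ops = allOperations
		}
		if sets[r.kind] == nil {
			sets[r.kind] = map[string]bool{}
		}
		for _, op := range ops {
			sets[r.kind][op] = true
		}
	}
	out := map[string][]string{}
	for kind, set := range sets {
		for op := range set {
			out[kind] = append(out[kind], op)
		}
		sort.Strings(out[kind])
	}
	return out
}

func main() {
	rules := []policyRule{
		{kind: "Pod", operations: []string{"CREATE", "UPDATE"}},
		{kind: "Pod", operations: []string{"CREATE"}},
		{kind: "ConfigMap"}, // no explicit operations
	}
	fmt.Println(webhookOperations(rules))
}
```

In this example, DELETE and CONNECT requests for Pods would never reach the webhook because no rule asks for them.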

Current Performance

The culmination of these changes brought about a substantial improvement in Kyverno’s overall performance. Metrics and benchmarks revealed notable reductions in response times, decreased resource consumption, and enhanced system stability. The optimizations made to context storage, JSON handling, and EventBroadcaster significantly contributed to a more responsive and reliable Kyverno, offering an improved experience for end-users and administrators.

http_req_duration..........: avg=15.52ms min=6.87ms med=15ms max=36.81ms p(90)=22.84ms p(95)=25ms

As you can see, that’s almost an 8X improvement in average and p95 latency. Furthermore, since we resolved the OOMKill issue, we have been able to obtain stable performance results from higher iterations.

http_req_duration..........: avg=15.41ms min=6.37ms med=14.98ms max=31.02ms p(90)=21.44ms p(95)=23.4ms

25vus and 1000 iterations:

http_req_duration..........: avg=16.77ms min=6.81ms med=16.02ms max=47.92ms p(90)=24.04ms p(95)=26.61ms

50vus and 1000 iterations:

http_req_duration..........: avg=31.39ms min=7.16ms med=28.83ms max=96.98ms p(90)=49.58ms p(95)=57.7ms

100vus and 1000 iterations:

http_req_duration..........: avg=64.34ms min=13.06ms med=51.61ms max=236.42ms p(90)=120.99ms p(95)=160.19ms

Plotting the average request duration, we get:

As the results indicate, even under high load Kyverno’s average processing time stays well under 100 milliseconds.
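For the runs that are explicitly labeled in both sets of results, the speedup in average latency can be checked with a few lines of arithmetic. The numbers below are copied from the k6 output in this post. The `result` type and `speedup` helper are just illustrative names.

```go
package main

import "fmt"

// result pairs the average request duration (in ms) before and after the
// optimizations for one load configuration.
type result struct {
	label               string
	beforeAvg, afterAvg float64
}

// speedup is the ratio of the old average latency to the new one.
func speedup(before, after float64) float64 { return before / after }

func main() {
	results := []result{
		{"25vus, 1000 iterations", 110.36, 16.77},
		{"50vus, 1000 iterations", 222.59, 31.39},
		{"100vus, 1000 iterations", 430.43, 64.34},
	}
	for _, r := range results {
		fmt.Printf("%-24s %.1fx faster on average\n", r.label, speedup(r.beforeAvg, r.afterAvg))
	}
}
```

Each of these configurations comes out at roughly a 6.5X to 7X improvement in average latency.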

Automating Tests

Recognizing the importance of maintaining performance gains, the Kyverno team implemented robust automated testing processes. These automated tests ensured that any future code changes, updates, or additions would undergo thorough performance validation. By automating the testing pipeline, the team established a proactive approach to identifying and addressing potential regressions, guaranteeing the longevity of the performance improvements achieved.

Our GitHub Actions workflow incorporates a custom K6 script that deploys pods that intentionally violate the Kubernetes Pod Security Standards. These bad pods are evaluated against Kyverno’s policies, triggering policy evaluation and enforcement. By varying the number of concurrent pods, we observe how Kyverno scales, ensuring its effectiveness even when handling large deployments.

But our testing does not stop there. Beyond simple validation, we created tests that exercise Kyverno’s other capabilities. We run additional scenarios to:

- Test mutating policies: K6 deploys pods with specific attributes, triggering Kyverno policies that modify resource configurations. This verifies that mutation works correctly and measures its impact on performance.

- Evaluate policy generation: We simulate scenarios where Kyverno automatically generates resource-specific policies based on pre-defined templates. K6 tests the efficiency and accuracy of this dynamic policy generation.

The benefits of this K6-powered approach are multifold:

- Early detection of scalability issues: We catch performance bottlenecks before they impact production environments, ensuring a smooth and reliable Kyverno experience.

- Confidence in Kyverno’s robustness: Rigorous testing instills confidence in Kyverno’s ability to handle large-scale deployments with grace and efficiency.

- Continuous improvement: K6 data feeds into our CI/CD pipeline, enabling us to refine and optimize Kyverno’s performance continuously.

By integrating K6 with GitHub Actions, we’ve empowered Kyverno to scale gracefully and handle complex policy enforcement scenarios with confidence. This proactive approach ensures our Kubernetes environments remain secure and compliant, even as our infrastructure grows and evolves.

Conclusion

In conclusion, Kyverno’s journey toward performance optimization showcases the project’s dedication to delivering a high-performing and reliable solution for Kubernetes policy management. We are grateful to our customers and community for their support and assistance.

In addition to its unified governance solutions, Nirmata provides an enterprise distribution of Kyverno (called N4K) with long-term support for Kyverno and Kubernetes releases, SLAs for CVEs and critical fixes, and the 24×7 support that enterprises require, since Kyverno is a critical component of production environments.

These changes were initially implemented in N4K v1.10 and have now all been contributed back to the upstream OSS distribution for the upcoming v1.12 release!

The challenges faced, insights gained through profiling, and the strategic changes implemented collectively contribute to Kyverno’s evolution. As the project continues to scale, these experiences serve as valuable lessons, shaping the ongoing commitment to providing a secure and efficient policy engine within the CNCF ecosystem.

If you have not tried out Kyverno, get started at https://kyverno.io.

If you would like to manage policies and governance across clusters with ease, check out what Nirmata is building at https://nirmata.com/.