Member post by Rakesh Girija Ramesan Nair, Senior Technology Architect, and Sherni Liz Samuel, Technology Architect, Infosys Limited

Abstract:

This blog highlights the key policy-related focus areas and challenges in the Telecom domain and how they can be addressed with Open Policy Agent (OPA), a graduated project in the Cloud Native Computing Foundation (CNCF) landscape. It also presents practical examples of how such challenges have been addressed with OPA, along with key observations from that work.

Introduction

Digital transformation has been a key focus area for Telcos, driving Communication Service Providers (CSPs) to modernize infrastructure, operations, and services to meet the evolving demands of a multivendor, heterogeneous network technology ecosystem. One of the fundamental aspects of this digital transformation journey is becoming cloud native. Original Equipment Manufacturers (OEMs), Telcos, and service providers who have embarked on this journey have highlighted many challenges and practical issues that need to be addressed collaboratively.

CNCF and its associated projects play a critical part in bringing together the key participants of the Telecom industry and supporting them in building this cloud native ecosystem. CSPs are moving towards cloud native architecture, and it is evident that they expect OEMs to provide solutions that comply with cloud native principles. From an infrastructure perspective, Kubernetes sits at the core as the de facto runtime environment for network workloads and applications.

In this comprehensive transformation journey, there are multiple touchpoints where policies and regulations need to be enforced. The strategy is to call out the key touchpoints in the Telecom domain and leverage the CNCF project Open Policy Agent to accelerate implementation, thereby improving time to market.

Policy Driven Areas in Telecom

This section looks at the critical areas where policies and rules play a vital role in the Telecom domain.

- Security

As mentioned in the previous section, security becomes crucial as networks become digital and more cloud native. Security spans all layers, covering infrastructure, clusters, development, and workloads. Industry bodies such as the Center for Internet Security (CIS) and the National Institute of Standards and Technology (NIST) have published checklists, guidelines, and countermeasures for securely configuring and implementing cloud native principles in the Telco stack.

- Orchestration

Deploying workloads (network functions, applications, etc.) involves multiple systems such as domain managers, element management systems, and domain orchestration systems. Similarly, fulfilling an end-to-end customer service or any B2B service involves driving configuration across network domains and multi-vendor devices and functions. Policy-driven orchestration is the key to fully on-demand, dynamic orchestration across the various layers.

- Data

Telcos across the globe are adopting new technologies to aid their transformation. Artificial Intelligence (AI) in particular is being embraced to improve services, elevate customer experience, and generate more revenue. To excel in this data-driven world, telecom providers are trying to leverage data analytics and create data stores that collect a variety of data (structured, semi-structured, unstructured, etc.) across domains and devices. A major challenge in achieving desired outcomes such as better analytical insights and improved productivity (self-healing) is the quality of data.

- Integration

In any major transformation program, simplifying integration across the various systems is fundamental. Industry standards bodies such as the TeleManagement Forum (TM Forum) and the European Telecommunications Standards Institute (ETSI) are working to standardize the various interfaces across the network landscape. The aim is to avoid vendor lock-in and to expose and consume data in a standardized manner. Network as a Service (NaaS) is gaining momentum, giving networks the agility required to unlock more revenue streams and use cases. Validating the contractual agreements involved in any integration is therefore an important requirement.

Introduction of Open Policy Agent (OPA)

Open Policy Agent (OPA) is an open-source, general purpose policy engine that helps in authoring, managing, and enforcing policies across various parts of the entire technology stack. OPA provides a high-level declarative language called Rego that defines the rules for governing the system/application.

The key features of OPA are as follows:

Versatility: OPA can be considered a policy-as-code tool that provides the flexibility to enforce policies across multiple applications, cloud networks, and so on. Its uses range from simple data validation and API authorization to cloud-native deployments governed by promotion policies.

Reusability: Generic policies defined once in OPA can be reused and enforced across multiple platforms, reducing duplication. This also saves development time, reduces errors, and simplifies policy maintenance as the platform or infrastructure scales up.

High Performance Policy Decisions: OPA keeps policies and data in memory and can serve policy queries concurrently, which helps performance in certain use cases involving large, complex datasets and policies.

Simplified policy management: OPA decouples the policy logic from the application code, making it easier and more efficient to manage policies. Unified policy management can be achieved by using OPA as the core to define and maintain policies across the entire infrastructure and technology stack.

Practical Examples of leveraging OPA in Telecom Domain

- Extraction, Validation & Transformation Framework for Networks

High-quality, continuously collected data unlocks the value of the data store and, in turn, helps get the most out of network data. It empowers Telcos to run advanced analytics, delivering real-time insights and ML-driven predictions.

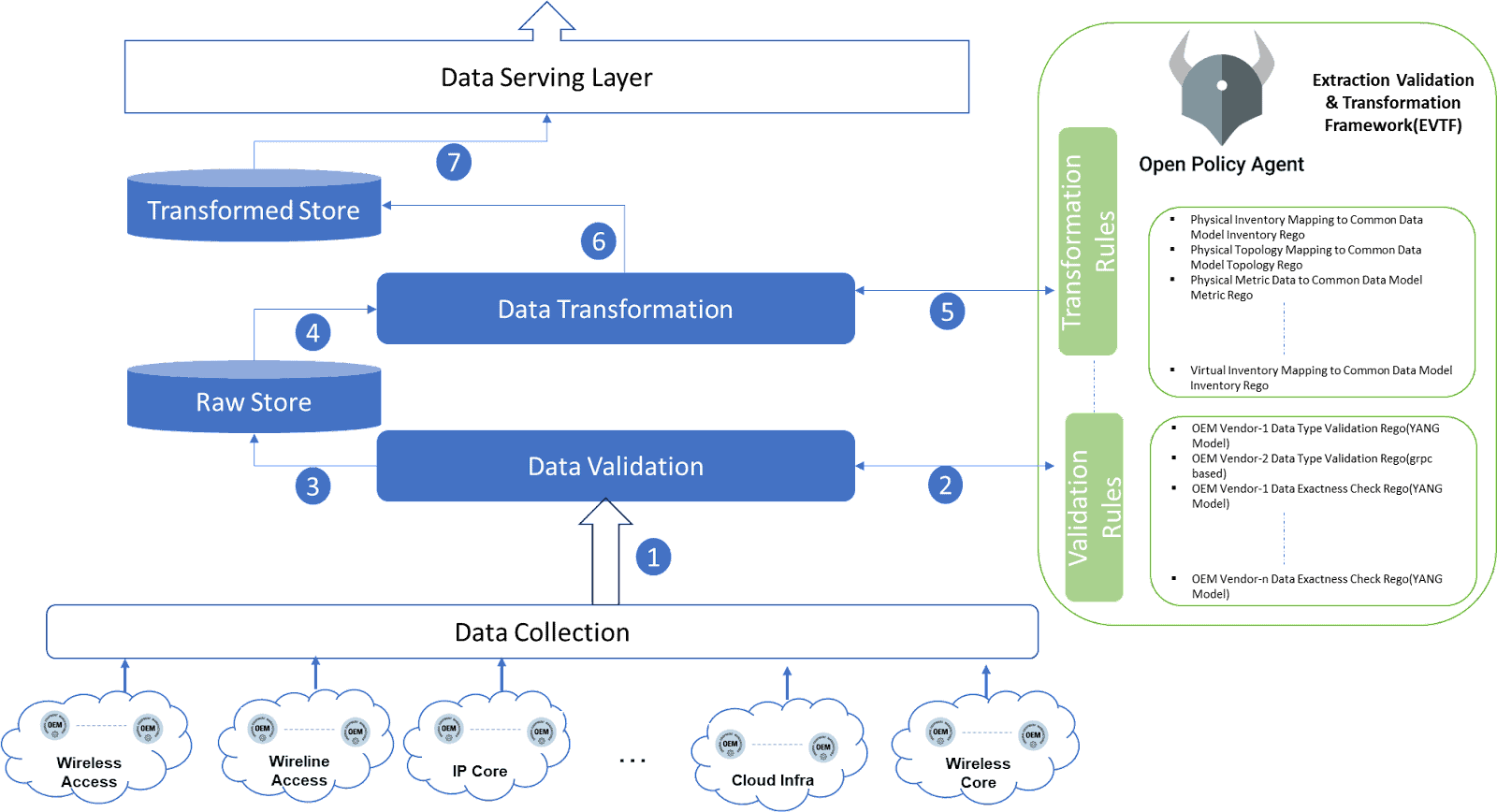

The diagram above shows an extraction, validation & transformation framework (EVTF), powered by OPA, that we set up for a network data store.

The overall flow of this framework is:

- The Data Collection layer connects to various network data sources across domains (e.g. Network Management Systems, Element Management Systems, actual devices) using various protocols (e.g. Simple Network Management Protocol (SNMP), gRPC Network Management Interface (gNMI)) and collects different kinds of network data (e.g. Inventory, Topology, Alarms, Telemetry), which is then pushed to a message bus for consumption.

- The data is then consumed and passed through various validation stages configured based on the device type, domain, and vendor. The extraction validation & transformation framework (EVTF) utilizes OPA to enforce policies defined in Rego.

Some of the validation rules that can be defined using OPA are:

- Data Type Checks: Ensure data adheres to expected types (e.g., integer for port number, string for IP address).

- Completeness Checks: Ensure that data has no missing values or missing records.

- Range Checks: Validate that data falls within a specific range (e.g., valid IP address range).

- Custom Validation Rules: Implement more complex validation logic using Rego functions.
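To make the four kinds of checks above concrete, here is a minimal sketch of the logic in plain Python. It only illustrates what such rules evaluate and is not the Rego policy used in the framework; the field names (hostname, ip_address, port), the 10.0.0.0/8 subnet, and the whitespace rule are all hypothetical.

```python
import ipaddress

# Hypothetical schema: field name -> expected type.
EXPECTED_TYPES = {"hostname": str, "ip_address": str, "port": int}
# Hypothetical management subnet used for the range check.
ALLOWED_NETWORK = ipaddress.ip_network("10.0.0.0/8")


def validate_record(record):
    """Return a list of violation messages; an empty list means the record is valid."""
    violations = []

    # Completeness and data type checks, field by field.
    for field, expected in EXPECTED_TYPES.items():
        value = record.get(field)
        if value is None or value == "":
            violations.append(f"missing value for '{field}'")
        elif not isinstance(value, expected):
            violations.append(f"'{field}' must be of type {expected.__name__}")

    # Range check: port number.
    port = record.get("port")
    if isinstance(port, int) and not 1 <= port <= 65535:
        violations.append("'port' must be between 1 and 65535")

    # Range check: IP address must fall inside the allowed subnet.
    ip = record.get("ip_address")
    if isinstance(ip, str) and ip:
        try:
            inside = ipaddress.ip_address(ip) in ALLOWED_NETWORK
        except ValueError:
            violations.append("'ip_address' is not a valid IP address")
        else:
            if not inside:
                violations.append(f"'ip_address' must be inside {ALLOWED_NETWORK}")

    # Custom rule (hypothetical): hostnames must not contain whitespace.
    hostname = record.get("hostname")
    if isinstance(hostname, str) and any(ch.isspace() for ch in hostname):
        violations.append("'hostname' must not contain whitespace")

    return violations
```

Returning a list of messages rather than a single boolean mirrors the idea of configurable, per-rule error messages: the caller can tell exactly which check failed.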

An abstraction layer handling all Telco-specific use cases and scenarios was developed as a custom microservice. It acts as the façade through which any orchestrator system interacts with OPA. Tailor-made APIs with Swagger documentation, driving network device model validations and transformations, were created as part of this service. A sample validation REST API endpoint takes the following four parameters as input:

- Domain: Identifies the specific network area the data originates from.

- Device Type: Specifies the type of device that generated the data (e.g., router, switch, firewall).

- Vendor: Provides information about the Original Equipment Manufacturer.

- Input Message: This is the actual data payload to be validated.
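As a rough illustration of how a façade service might hand these four parameters to OPA, the sketch below builds a query against OPA's Data API (`POST /v1/data/<path>` with the payload under an `input` key). The package layout `evtf/<domain>/<vendor>/<device type>/validate` and the local server address are assumptions made for this example, not the layout used in the actual microservice.

```python
import json
import urllib.request

# Assumption: an OPA server running locally on its default port.
OPA_URL = "http://localhost:8181"


def build_validation_request(domain, device_type, vendor, input_message):
    """Build (but do not send) an OPA Data API query for one message."""
    # Hypothetical layout: one policy package per domain/vendor/device type.
    path = f"evtf/{domain}/{vendor}/{device_type}/validate"
    body = json.dumps({"input": input_message}).encode("utf-8")
    return urllib.request.Request(
        url=f"{OPA_URL}/v1/data/{path}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def validate(domain, device_type, vendor, input_message):
    """Send the query and return the decision (requires a running OPA server)."""
    request = build_validation_request(domain, device_type, vendor, input_message)
    with urllib.request.urlopen(request) as response:
        # OPA wraps the policy decision in a top-level "result" key.
        return json.load(response).get("result")
```

Selecting the policy by path keeps the service generic: supporting a new vendor or device type means adding a policy package, not changing service code.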

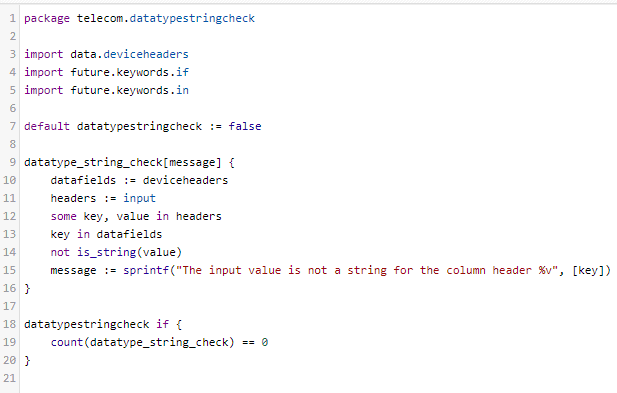

Every validation rule is configured in OPA using the out-of-the-box APIs it provides. A sample Rego policy for performing datatype validation is shown in the accompanying image.

In this example, the datatype_string_check Rego rule checks whether the input values align with the agreed data types. The policy generates the result and supports configurable custom messages for error scenarios. Rego also provides flexibility in importing external data files: configurable data held in external files, e.g. data.deviceheaders, is referenced and used by this policy.

The key idea of this framework was to take a low-code approach aligned with OPA, making it easier for domain SMEs to configure the required rules with minimal technical dependency.

- On successful validation, data is persisted to the raw store. The respective source systems are notified of invalid data, which is quarantined in the error store for reference.

- Transformation scripts pull the validated, quality-checked data from the raw store.

- In the transformation component, EVTF with underlying OPA is used again to define the various transformation rules needed to transform the raw data into a common modelling format. The following are some of the transformation rules that are defined:

- Data Mapping: Convert network-specific data formats to a common data model aligning to any industry standard.

- Field Renaming: Rename fields for consistency with the common model.

- Data Enrichment: Add additional information based on pre-defined rules or external sources.

An approach similar to the one detailed in step 2 (validation) is followed here as well, showcasing the flexibility of EVTF based on OPA: a single solution approach addresses two different areas.

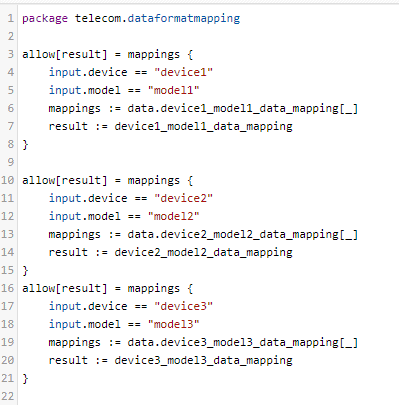

For example, the above Rego policy shows a simple transformation mapping logic based on source and target. Here, the device type is the source and the data model type to be mapped is the target. The actual mapping is configured in the external data.json file and imported into the Rego policy.
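The source-to-target mapping idea can be sketched in a few lines of Python. This is an illustration of the logic only, not the Rego policy from the framework; the mapping table stands in for the contents of the external data file, and every device type, model type, and field name in it is hypothetical.

```python
# Hypothetical stand-in for the external data file:
# source device type -> target model type in the common data model.
MODEL_MAPPING = {
    "router": "NetworkElement",
    "switch": "NetworkElement",
    "firewall": "SecurityFunction",
}

# Hypothetical field renames towards the common model.
FIELD_RENAMES = {"sysName": "name", "mgmtIp": "managementAddress"}


def transform(record):
    """Map a validated raw record onto the common data model."""
    source = record["deviceType"]
    if source not in MODEL_MAPPING:
        raise KeyError(f"no mapping configured for device type '{source}'")

    # Field renaming: unknown fields are passed through unchanged.
    transformed = {FIELD_RENAMES.get(key, key): value for key, value in record.items()}

    # Data mapping: attach the target model type for this source device type.
    transformed["modelType"] = MODEL_MAPPING[source]
    return transformed
```

Because the mapping lives in data rather than in code, adding a new device type is a configuration change, which is the property the low-code approach relies on.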

- Transformed data aligned to a common data model is pushed to a Transformed Store.

- The data serving layer pulls transformed data from the Transformed Store and serves it to different consumers as product models aligned to various use cases.

- Correlation & Orchestration Policy Engine for Network

Network Assurance is of utmost importance to Telcos, ensuring that the network is available to end customers. Orchestration helps Telcos achieve better time to market when rolling out new products. Assurance and Orchestration go hand in hand to achieve autonomous networks.

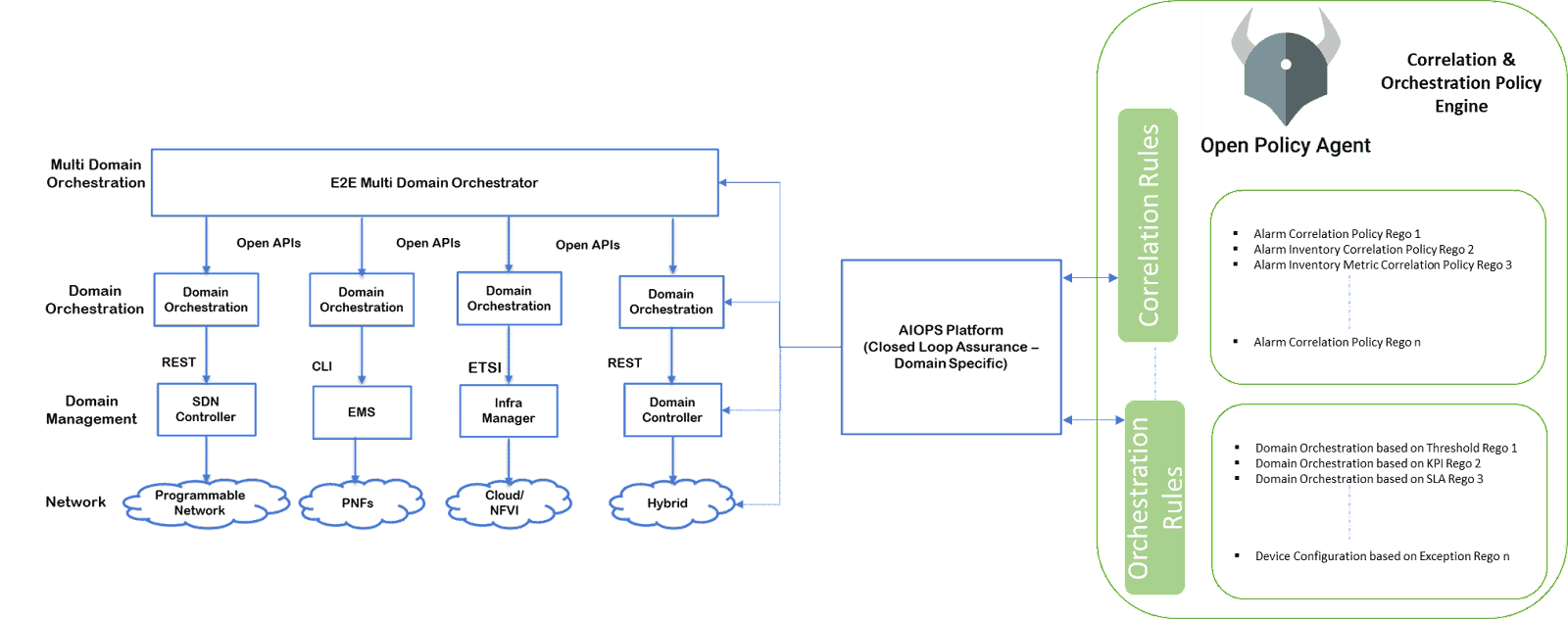

The diagram above shows how an Artificial Intelligence for Operations (AIOPS) platform is integrated with various network domain orchestrators and systems that leverage the Correlation & Orchestration Policy Engine (COPE) driven by OPA.

The AIOPS platform unearths network anomalies and patterns using its AI/ML capability. Operator admins review these and add, modify, and approve them to be stored as correlation policies. These policies are then updated in COPE, which is then used at runtime to proactively resolve network problems.

The Correlation & Orchestration Policy Engine (COPE) driven by OPA has a custom service which acts as the intermediary for any interfacing applications. All network level complexities are handled in this microservice while the engine remains OPA.

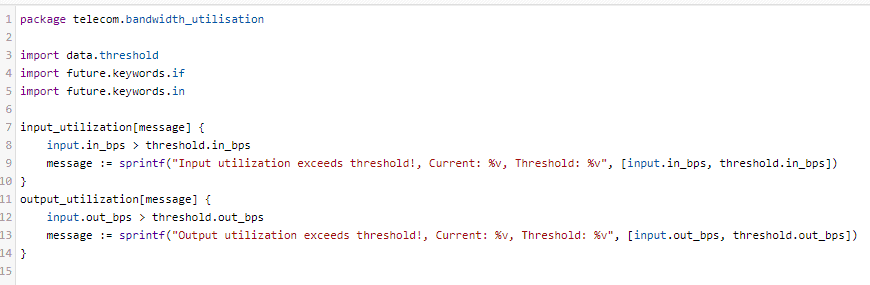

Sample Rego policies for configuring utilization-based and correlation policies are discussed below:

The first use case is to configure an action when network bandwidth utilization goes beyond a defined threshold. A Rego policy can specify the condition for identifying whether the network input and output traffic exceeds the threshold.

In the above example, we have two functions, input_utilization and output_utilization. The threshold can be dynamically calculated via Spark Streaming and configured. The custom microservice layer uses this information to enrich the data and validate it against the defined Rego policies.
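The threshold condition can be illustrated with a small Python sketch. The function names input_utilization and output_utilization come from the example described above, but their signatures, the sample fields (in_bps, out_bps, capacity_bps), and the shape of the decision are assumptions made for illustration; the real rules are written in Rego.

```python
def input_utilization(sample, threshold_pct):
    """True when inbound traffic exceeds the threshold (percent of link capacity)."""
    return 100.0 * sample["in_bps"] / sample["capacity_bps"] > threshold_pct


def output_utilization(sample, threshold_pct):
    """True when outbound traffic exceeds the threshold (percent of link capacity)."""
    return 100.0 * sample["out_bps"] / sample["capacity_bps"] > threshold_pct


def decide(sample, threshold_pct):
    """Combine both checks into one decision that an orchestrator could act on."""
    breaches = []
    if input_utilization(sample, threshold_pct):
        breaches.append("input")
    if output_utilization(sample, threshold_pct):
        breaches.append("output")
    return {"action_required": bool(breaches), "breaches": breaches}
```

The threshold is passed in as a parameter rather than hard-coded, so a dynamically calculated value can be supplied at evaluation time.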

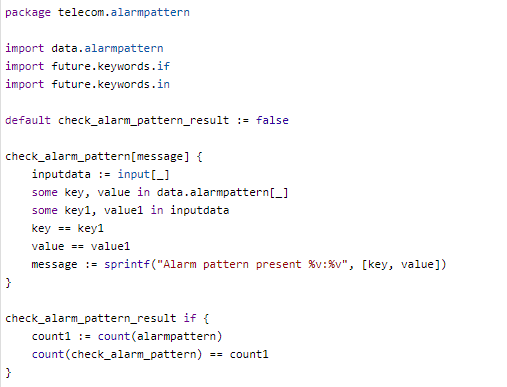

A sample OPA policy to correlate alarm patterns is shown in the accompanying image.

In the above example, the Rego policy checks an array of input alarms and validates the alarm set against a preconfigured array of alarm patterns. Alarm patterns are identified via AI/ML or configured based on experience for proactive assurance.
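One simple way to picture this correlation is as a subset test: a pattern matches when every alarm it lists is present in the incoming alarm set. The Python sketch below assumes that matching rule, and the pattern names and alarm codes are hypothetical; the actual policy is written in Rego and may match differently.

```python
# Hypothetical preconfigured alarm patterns, e.g. learned via AI/ML
# or configured from operational experience.
ALARM_PATTERNS = [
    {"name": "fiber_cut", "alarms": {"LOS", "LINK_DOWN"}},
    {"name": "power_failure", "alarms": {"POWER_LOSS", "FAN_FAIL", "LINK_DOWN"}},
]


def correlate(input_alarms):
    """Return the names of all patterns fully contained in the input alarms."""
    active = set(input_alarms)
    # A pattern matches when its alarm set is a subset of the active alarms.
    return [p["name"] for p in ALARM_PATTERNS if p["alarms"] <= active]
```

For instance, an input of LINK_DOWN, LOS, and an unrelated HIGH_TEMP alarm matches only the fiber_cut pattern, since power_failure also requires POWER_LOSS and FAN_FAIL.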

Inference

OPA provides a jump start, with much-needed flexibility, for performing rule- and policy-based checks. As explained in the examples above, OPA has many out-of-the-box (OTB) capabilities which, with some minor customizations, can be applied across multiple use cases covering the length and breadth of the Telco stack. OPA can act as the backbone for any rule- or policy-driven solution.

OTB

- OPA exposes REST API endpoints with CRUD operations for policies and data.

- Rego policies are written in a high-level declarative language that reads close to natural language, so it can be picked up quickly compared with other rule engines like Drools. No specialized skill set is required for future enhancements or implementations.

Customizations

- A configuration-driven solution approach can be achieved with OPA through minor enhancements. It helps avoid IT and human dependencies, enabling self-service and improving response times.

Tools like the Rego Playground help us develop and test Rego policies on the go. OPA also has a widespread, active community, which makes it easy to adopt.

OPA has wide applicability in the telecom domain. Explore, Apply and Transform your network landscape with OPA.

References

| Resource | Link |
| --- | --- |
| Official OPA Documentation | https://www.openpolicyagent.org/docs/latest/policy-language/ |
| Rego Playground | https://play.openpolicyagent.org/ |
| GitHub Community Discussions | https://github.com/open-policy-agent/community/discussions |
| GitHub OPA Issues / Feature requests | https://github.com/open-policy-agent/opa/issues |