Member post originally published on Buoyant’s blog by Scott Rigby

In the world of Kubernetes, network policies are essential for controlling traffic within your cluster. But what are they really? And why, when and how should you implement them?

Whether you’re an existing Kubernetes user and want to better understand networking, or a traditional network engineer trying to map your knowledge to Kubernetes, you’ve come to the right place.

This guide is for anyone interested in learning more about policy-based controls for your Kubernetes network traffic. You will learn about the different types of policies and why they matter, the pros and cons of each, how to define them, and when to combine them.

What are network policies?

Network policies help define which traffic is allowed to enter (ingress), exit (egress), and move between pods in a Kubernetes cluster.

Just like other resources in Kubernetes, network policies are declarative configurations that components within the cluster will use to enforce which traffic is allowed or denied and under what circumstances.

But once you decide what policies you want to enforce, and it comes time to define them in a Kubernetes resource manifest, you’re met with several options. There is the Kubernetes native NetworkPolicy resource, but there are also other custom resources defined by other tools in the ecosystem, such as Cilium, Calico, Istio, and – our focus in this article – Linkerd. Rather than comparing and contrasting the individual tools and options, we’re going to take a step back and explain the two main categories that each of these different policy definitions fall into.

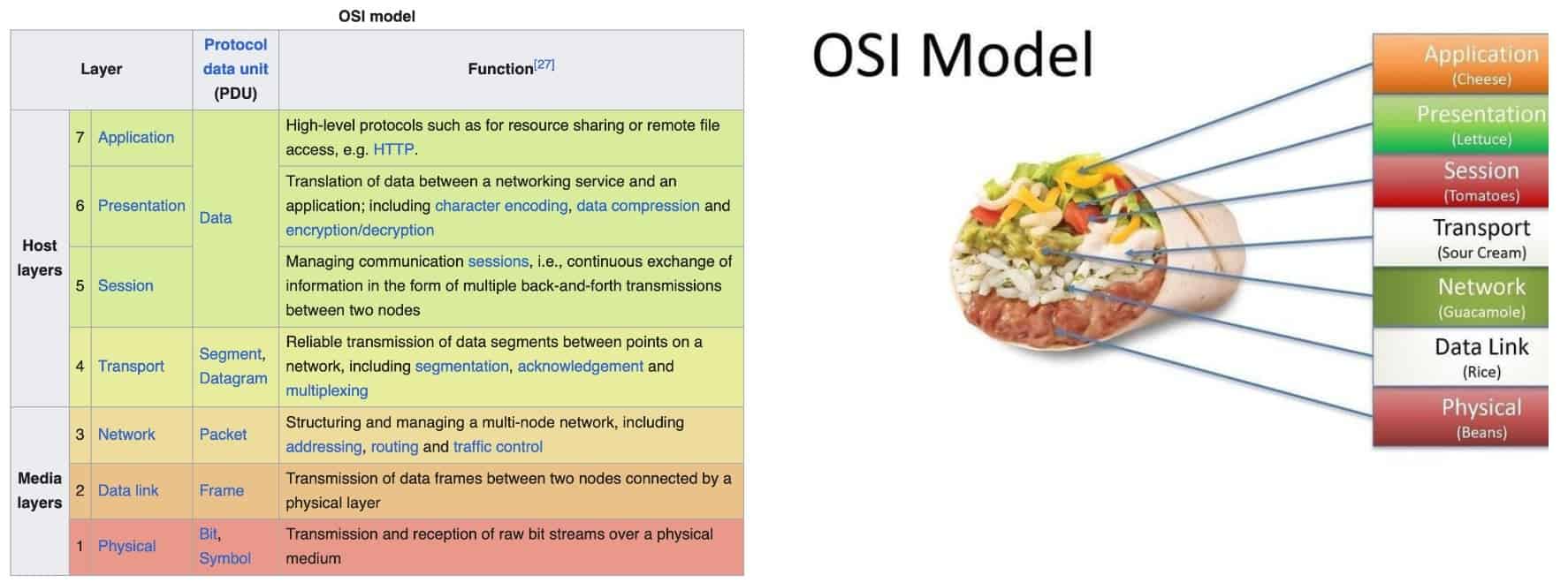

Broadly, network policies can be categorized into Layer 4 (L4) and Layer 7 (L7) policies. What does this mean? I know what you’re thinking: the 7-layer burrito! Close, but no. These refer to the seven layers of the Open Systems Interconnection (OSI) model.

(Fun fact: the 7-layer burrito’s inspiration was the 7-layer dip, which was widely popular in the 1980s, but made its debut less than a year after the OSI model was published in 1980. Coincidence? I’ll leave the reader with evidence from internet history, including the original OSI is a sham rant, an IETF networking RFC proposal, and this small nod hidden in xkcd.)

The OSI model breaks down networking communication into 7 layers. While the OSI model might be somewhat dated – it has been stated many times that the internet doesn’t really work this way – layers 4 and 7 remain a useful reference for our purposes.

The term “L4” refers to the fourth layer of the OSI model – the transport layer, encompassing protocols like TCP. “L7” corresponds to the (you guessed it!) seventh OSI layer – application-level communication, which includes HTTP, gRPC, and other application-specific protocols. In the context of Kubernetes network policies, L4 policies operate at the cluster level, while L7 policies operate at the application level. Much like the OSI layers themselves, L4 and L7 network policies are intended to work in tandem.

This guide will explore both types, covering how they are defined, their advantages and limitations, and their role in achieving a zero-trust security model.

L4 network policies

Layer 4 network policies operate at the transport layer, controlling traffic in terms of IP addresses, ports, and protocols such as TCP. Unlike L7 policies, L4 policies have no view of application-level details such as HTTP routes or service identity, so they express coarser rules, such as “Service A cannot call Service B on port 8080.”

How L4 network policies work

L4 policies are implemented by CNI plugins, which configure the kernel’s packet filter – for example using Netfilter/iptables or eBPF – to target the IP addresses and port numbers of the pods in different services. For example, if you want to implement a policy where “Service A cannot call Service B on port 8080,” the system manages these rules dynamically as services and their corresponding pods change. This is done by targeting pods or namespaces by their labels, using selectors such as podSelector and namespaceSelector.

Here is a simple L4 network policy example, defining that only TCP traffic from service-a is allowed to port 8080 on pods in service-b. As soon as a NetworkPolicy with “Ingress” in its policyTypes is created that selects pods in service-b, those pods are considered “isolated for Ingress”. This means that only inbound traffic matching the combination of all ingress lists will be allowed. Reply traffic to those allowed connections is implicitly allowed. There are more options including explicit egress rules outlined in the Network Policies concept page in Kubernetes docs, but we only need this simple example to explain the overall function of L4 policies in Kubernetes:

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: service-a-to-b
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: service-b
  policyTypes:
  - Ingress
  ingress:
  - from:
    - podSelector:
        matchLabels:
          app: service-a
    ports:
    - protocol: TCP
      port: 8080
```

Pros and cons of L4 network policies

Pros:

- Built into most CNI plugins, making them readily available.

Cons:

- Limited to expressing policies in terms of pod labels and ports, lacking granularity for specific routes or service names.

- Limited in the types of rules they can express. For example, they cannot require that all traffic go through a common gateway.

- No logging of security events is possible.

- Vulnerable to “split brain” scenarios, where the mapping between pods and IP addresses can diverge, potentially allowing unauthorized access.

- IP addresses and ports can be spoofed, leading to potential security breaches. Modern security paradigms have moved beyond these simple constructs.

When should I use L4 network policies?

L4 network policies have the advantage of being simple to understand and widely available in Kubernetes. However, given the (minor, but not insignificant) security weaknesses, lack of expressivity, and inability to log security events, L4 policies fall into the category of “necessary but not sufficient” controls, and should ultimately be combined with other controls (e.g. L7 network policies) as your security posture matures.

L7 network policies

Layer 7 network policies operate at the application layer, understanding protocols such as HTTP and gRPC. Unlike L4 policies, L7 policies can make use of the service name directly, and allow for policies such as “Service A is allowed to talk to Service B”, or “Service B will only talk to mTLS’d Services”. These policies also allow for more granular control, such as permitting “Service A to call the /foo/bar endpoint of Service B.”

Implementing L7 policies with Linkerd

In Kubernetes, L7 policies are typically implemented via a service mesh like Linkerd, and represented as Custom Resources such as Server.policy.linkerd.io. Linkerd proxies HTTP and gRPC calls, understanding these protocols, and enforces policies accordingly. For more details, refer to Linkerd’s server policy documentation.

How L7 policies work

Linkerd enforces policies on the server side, meaning the proxies for Service A protect access to it, regardless of the caller. These policies are based on the service identities established during mutual TLS (mTLS), ensuring that IP addresses are ignored in favor of cryptographic properties of the connection. This method is designed to work seamlessly in distributed systems, similar to the open internet where coordination between systems is unreliable.

It’s important to note that Linkerd has two types of policies: Default and Dynamic.

Default policies are set when installing or upgrading Linkerd. These policies let you allow or disallow traffic depending on whether the clients are within your service mesh, or within the same cluster (this last check is useful if, for example, you have a mesh that spans multiple clusters). There is also a policy to deny all traffic. Unless one of these policies is specified, all traffic is allowed (so the world doesn’t break when you install Linkerd!). You may also override this policy per namespace and per workload if desired. You can read more about default policies here, but for this article it’s enough to know these exist and work in tandem with Dynamic policies.
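As a minimal sketch of the per-namespace override mentioned above, the default inbound policy can be set with the `config.linkerd.io/default-inbound-policy` annotation. The namespace name below is hypothetical, and the accepted values should be checked against the documentation for your Linkerd version.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: payments   # hypothetical namespace name, for illustration only
  annotations:
    # Only allow inbound traffic from meshed (mTLS-authenticated) clients.
    config.linkerd.io/default-inbound-policy: all-authenticated
```

The same annotation can be placed on a workload’s pod template to override the policy for that workload alone. The default policy is typically read when the proxy starts, so existing pods may need a restart before a change takes effect.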

Dynamic policies, unlike Default policies, allow you to change policy behavior on the fly by updating the Custom Resources that control these policies. Dynamic policies are also called “fine-grained” policies, because they can control traffic for specific services, ports, routes, and more. In contrast to the single L4 NetworkPolicy.networking.k8s.io resource, Linkerd Dynamic policies are represented by multiple CRDs in order to allow finer-grained rules and less repetition through reuse across multiple, related policies.

One set of CRDs allows you to specify the destination for traffic you want to target (either a Server, or a subset of its traffic called an HTTPRoute). Another set of CRDs represents authentication rules (either mesh identities with MeshTLSAuthentication, or a set of IP subnets that clients must be part of, called NetworkAuthentication), which must be satisfied as part of a policy. And finally there is a CRD representing the AuthorizationPolicy itself, which maps a Custom Resource from the first set of CRDs (a traffic target to be protected) to one from the second set (the authentication required before access to the target is allowed).

Here are the example Custom Resources for a Linkerd L7 equivalent to the single L4 example resource above – a Server, a MeshTLSAuthentication, and the AuthorizationPolicy that references them:

The traffic destination:

```yaml
apiVersion: policy.linkerd.io/v1beta1
kind: Server
metadata:
  name: service-b
  namespace: default
spec:
  # Select the pods being protected (service-b), not the client.
  podSelector:
    matchLabels:
      app: service-b
  port: 8080
  proxyProtocol: "HTTP/2"
```

The authN rules:

```yaml
apiVersion: policy.linkerd.io/v1alpha1
kind: MeshTLSAuthentication
metadata:
  name: service-a
  namespace: default
spec:
  identityRefs:
  - kind: ServiceAccount
    name: service-a
```

The authZ policy:

```yaml
apiVersion: policy.linkerd.io/v1alpha1
kind: AuthorizationPolicy
metadata:
  name: service-a-to-b
  namespace: default
spec:
  targetRef:
    group: policy.linkerd.io
    kind: Server
    name: service-b
  requiredAuthenticationRefs:
  - name: service-a
    kind: MeshTLSAuthentication
    group: policy.linkerd.io
```

There are many more options you can see in Linkerd’s Authorization Policy docs page, but this gives you a sense of the granularity, flexibility, and reusability of Linkerd’s L7 policy equivalent of the earlier L4 NetworkPolicy example.
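To illustrate the route-level granularity that L4 policies cannot express, here is a hedged sketch that narrows the policy to the /foo/bar endpoint mentioned earlier. The resource names are hypothetical, and the HTTPRoute apiVersion is an assumption that depends on your Linkerd release. It reuses the service-b Server and the service-a MeshTLSAuthentication from the example above.

```yaml
# Assumption: the HTTPRoute API version varies by Linkerd release; check your installed CRDs.
apiVersion: policy.linkerd.io/v1beta3
kind: HTTPRoute
metadata:
  name: service-b-foo-bar   # hypothetical name
  namespace: default
spec:
  # Attach the route to the Server that protects service-b.
  parentRefs:
  - group: policy.linkerd.io
    kind: Server
    name: service-b
  rules:
  - matches:
    - path:
        type: Exact
        value: /foo/bar
---
apiVersion: policy.linkerd.io/v1alpha1
kind: AuthorizationPolicy
metadata:
  name: service-a-to-b-foo-bar   # hypothetical name
  namespace: default
spec:
  # Target the route instead of the whole Server.
  targetRef:
    group: policy.linkerd.io
    kind: HTTPRoute
    name: service-b-foo-bar
  requiredAuthenticationRefs:
  - name: service-a
    kind: MeshTLSAuthentication
    group: policy.linkerd.io
```

Before attaching routes to a Server, it is worth checking Linkerd’s docs for how requests that match no route are handled, since that behavior affects the rest of the Server’s traffic.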

Pros and cons of L7 network policies

Pros:

- Allows for fine-grained policies, such as “only allow encrypted connections with mTLS” and “only allow Foo to call /bar.”

- Not susceptible to split-brain scenarios.

- Combines with mutual TLS to also provide authentication and encryption.

- Can provide logging of security events such as (attempted) policy violations.

Cons:

- Requires running a service mesh, as it is not built into Kubernetes natively.

- Policies will not apply to traffic sent to unmeshed workloads (even if the source workloads are meshed).

- Policies will not apply to UDP or other non-TCP traffic.

Combining L7 and L4 policies

Some use cases are best solved by L4 policies, while others are best solved by L7 policies. Luckily you don’t need to choose one or the other! You can implement both types of policies in your cluster at the same time. The pros and cons above will help you determine when to use each type of policy.

As mentioned above – when you need to enforce policy about communication to any unmeshed resource, you would choose an L4 policy. Similarly, you would use L4 if you need to enforce cluster-wide policies regardless of individual workloads’ meshed status.
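A minimal sketch of such a baseline, assuming the default namespace used in the earlier examples: an empty podSelector selects every pod in the namespace, meshed or not, and because no ingress rules are listed, all inbound traffic to those pods is denied unless another NetworkPolicy allows it.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress   # hypothetical name
  namespace: default
spec:
  # An empty selector matches all pods in this namespace.
  podSelector: {}
  # Isolate the selected pods for ingress; with no ingress rules, nothing is allowed in.
  policyTypes:
  - Ingress
```

NetworkPolicy is a namespaced resource, so covering a whole cluster this way means creating one such policy per namespace. NetworkPolicy rules are additive, so the earlier service-a-to-b policy still admits its traffic on top of this baseline.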

You may also have requirements not supported by the current version of your installed service mesh. Linkerd introduced support for IPv6 in version 2.16 and earlier versions don’t support IPv6-specific policies. Some requirements may not be supported by any version of your service mesh yet. For example, Linkerd policies do not support UDP or non-TCP traffic at all yet, because a clear use case for this has not yet been established. Please let us know if you want to contribute to defining this use case. You may also want to follow that issue for updates. In the meantime, an L4 policy is a good option here.
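As a sketch of the UDP case, the earlier NetworkPolicy can be adapted by changing the protocol. The policy name and port number here are hypothetical, chosen only for illustration.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: service-a-to-b-udp   # hypothetical name
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: service-b
  policyTypes:
  - Ingress
  ingress:
  - from:
    - podSelector:
        matchLabels:
          app: service-a
    ports:
    # UDP traffic is outside what Linkerd policy covers, so it is restricted at L4.
    - protocol: UDP
      port: 8125   # hypothetical port
```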

L7 policies on the other hand allow for more complex scenarios that require more granular control, opening up many more use cases. You can create allow or deny rules for specific clients, for access to individual workload endpoints, checks for whether or not there is an encrypted mTLS connection, for specific network authentication, for whether clients are meshed, or meshed within the same cluster, and many other options.
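Two of the checks listed above can be sketched as authentication resources. This is a minimal sketch rather than a complete policy: the resource names and CIDR ranges are hypothetical, and the wildcard identity is intended to match any client that presents a mesh identity over mTLS. Either resource could be listed in the requiredAuthenticationRefs of an AuthorizationPolicy like the one shown earlier.

```yaml
apiVersion: policy.linkerd.io/v1alpha1
kind: MeshTLSAuthentication
metadata:
  name: any-meshed-client   # hypothetical name
  namespace: default
spec:
  # "*" matches all authenticated (meshed) clients.
  identities:
  - "*"
---
apiVersion: policy.linkerd.io/v1alpha1
kind: NetworkAuthentication
metadata:
  name: internal-network   # hypothetical name
  namespace: default
spec:
  networks:
  # Hypothetical ranges: clients must be in 10.0.0.0/8 but not in 10.1.0.0/16.
  - cidr: 10.0.0.0/8
    except:
    - 10.1.0.0/16
```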

Finally, there is a use case for combining L7 and L4 policies with overlapping functionality, in order to create a more robust security framework. This belt-and-suspenders approach can leverage the strengths of both layers to enhance security. Using the examples above, you can explore combining L4 and L7 policies by defining BOTH the Linkerd AuthorizationPolicy (with its Server and MeshTLSAuthentication) AND the Kubernetes NetworkPolicy to restrict all network communication with the same service (service-b) to only your specified client service (service-a). Combining network policies across multiple layers like this is an example of the defense-in-depth security strategy, which recommends providing redundancy in case one security control fails.

Conclusion

Network policies are a fundamental aspect of securing Kubernetes clusters. L4 policies provide basic control over traffic based on IP addresses and ports, while L7 policies offer granular control over application-layer traffic based on strong cryptographic identity. By combining both types of policies and leveraging a service mesh like Linkerd, you can implement a robust, zero-trust security model that addresses modern security challenges.

Want to give Linkerd a try? You can download and run the production-ready Buoyant Enterprise for Linkerd in minutes. Get started today!