Member post originally published on ngrok’s blog by Mike Coleman

MicroK8s is a lightweight, efficient, and easy-to-use Kubernetes distribution for deploying and managing containerized applications. ngrok provides a scalable universal gateway that enables secure access to your workloads regardless of where they are hosted.

Both MicroK8s and ngrok excel in the arena of edge computing. This blog post examines how leveraging MicroK8s alongside ngrok can simplify and accelerate edge computing use cases.

What is MicroK8s?

MicroK8s is a lightweight Kubernetes distribution from Canonical that is extremely simple to set up. According to the documentation, it can be up and running in as little as 60 seconds. It is also optimized for low-resource environments and needs as little as 540 MB of RAM, although 4 GB is recommended for running actual workloads. Because of its low overhead, MicroK8s is especially well suited to operating on edge compute devices, including point-of-sale systems, controllers, and lightweight hardware.

Despite its ability to run in low-resource environments, MicroK8s is a full-featured Kubernetes distribution. It offers several advanced features, such as GPU support, automated HA configurations, and strict confinement, which adds isolation between workloads and the underlying resources they run on.

What is ngrok?

ngrok is a unified ingress platform that provides access to workloads running across a wide variety of infrastructure. By combining a lightweight agent with a powerful global network, ngrok makes it extremely easy to bring workloads online, including APIs, services, devices, and applications. With ngrok, you get a single platform that can replace multiple disjointed services, including reverse proxy, API gateway, firewall, and global load balancer.

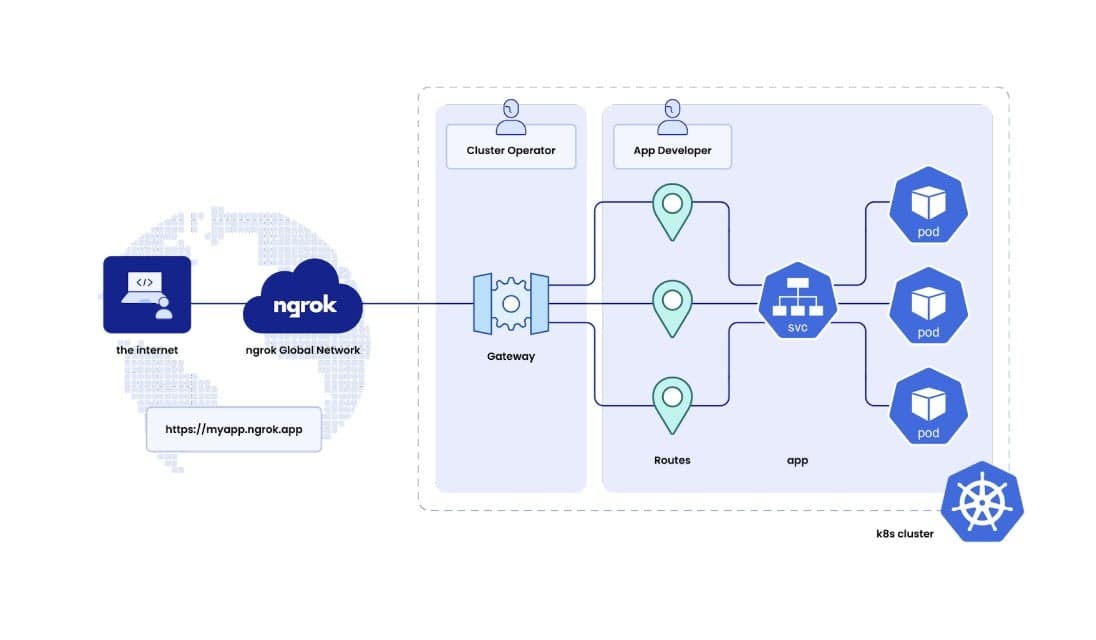

By automating and standardizing network access, ngrok simplifies access to edge computing workloads. For MicroK8s specifically, ngrok offers a Kubernetes operator that can provide access to cluster-based workloads. The ngrok Kubernetes operator provides both a traditional ingress controller and a Gateway API implementation.
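To make the ingress controller path more concrete, here is a minimal Python sketch that builds a standard Kubernetes `Ingress` manifest pointing at the operator. It assumes the operator registers an ingress class named `ngrok`, so confirm that name for your installation. The resource name, hostname, service name, and port are hypothetical placeholders, not values from this post.

```python
import json


def build_ingress(name: str, host: str, service: str, port: int,
                  ingress_class: str = "ngrok") -> dict:
    """Return a networking.k8s.io/v1 Ingress manifest as a plain dict.

    ingress_class defaults to "ngrok", which is an assumption about how
    the ngrok Kubernetes operator is installed in the cluster.
    """
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {
            "ingressClassName": ingress_class,
            "rules": [
                {
                    "host": host,
                    "http": {
                        "paths": [
                            {
                                # Route every path on this host to one Service.
                                "path": "/",
                                "pathType": "Prefix",
                                "backend": {
                                    "service": {
                                        "name": service,
                                        "port": {"number": port},
                                    }
                                },
                            }
                        ]
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    manifest = build_ingress(
        name="edge-demo",
        host="edge-demo.example.com",
        service="edge-demo-svc",
        port=80,
    )
    print(json.dumps(manifest, indent=2))
```

Because `kubectl` accepts JSON manifests, the printed output could be piped to `microk8s kubectl apply -f -` on a cluster where the operator is already installed.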

MicroK8s and ngrok are a powerful combination for hosting and accessing edge-based workloads, but what makes those workloads challenging to manage?

How can MicroK8s and ngrok help with edge computing?

Edge computing presents several challenges, including managing and orchestrating distributed infrastructure, ensuring security and data privacy, and addressing variability in network connectivity and latency. Additionally, edge computing environments often require specialized hardware and software, which can be costly and difficult to maintain. Furthermore, edge applications require low-latency processing and real-time decision-making, making it essential to optimize performance and minimize downtime. Moreover, the edge ecosystem is highly fragmented, with diverse technologies and standards, making interoperability a significant challenge. Finally, edge computing also raises concerns about data management, including data processing, storage, and analytics, which must be addressed to unlock the full potential of edge computing.

As mentioned before, MicroK8s is a full-featured, lightweight Kubernetes distribution. This combination allows MicroK8s to run on edge devices while handling intensive workloads such as AI inference and data analytics. Deploying MicroK8s at the edge can greatly reduce latency. Processing data locally also cuts down on unnecessary data flow, which adds a further layer of security.

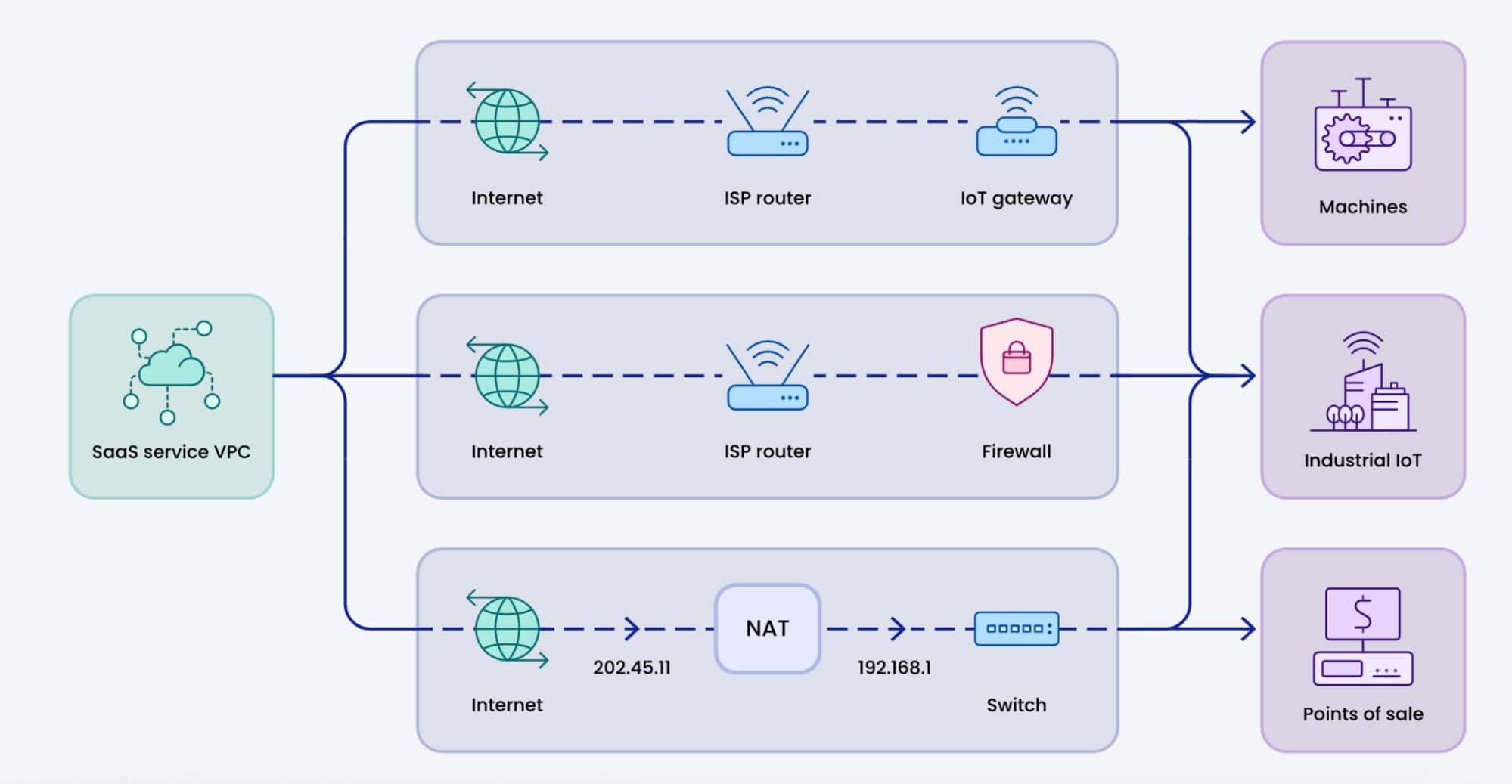

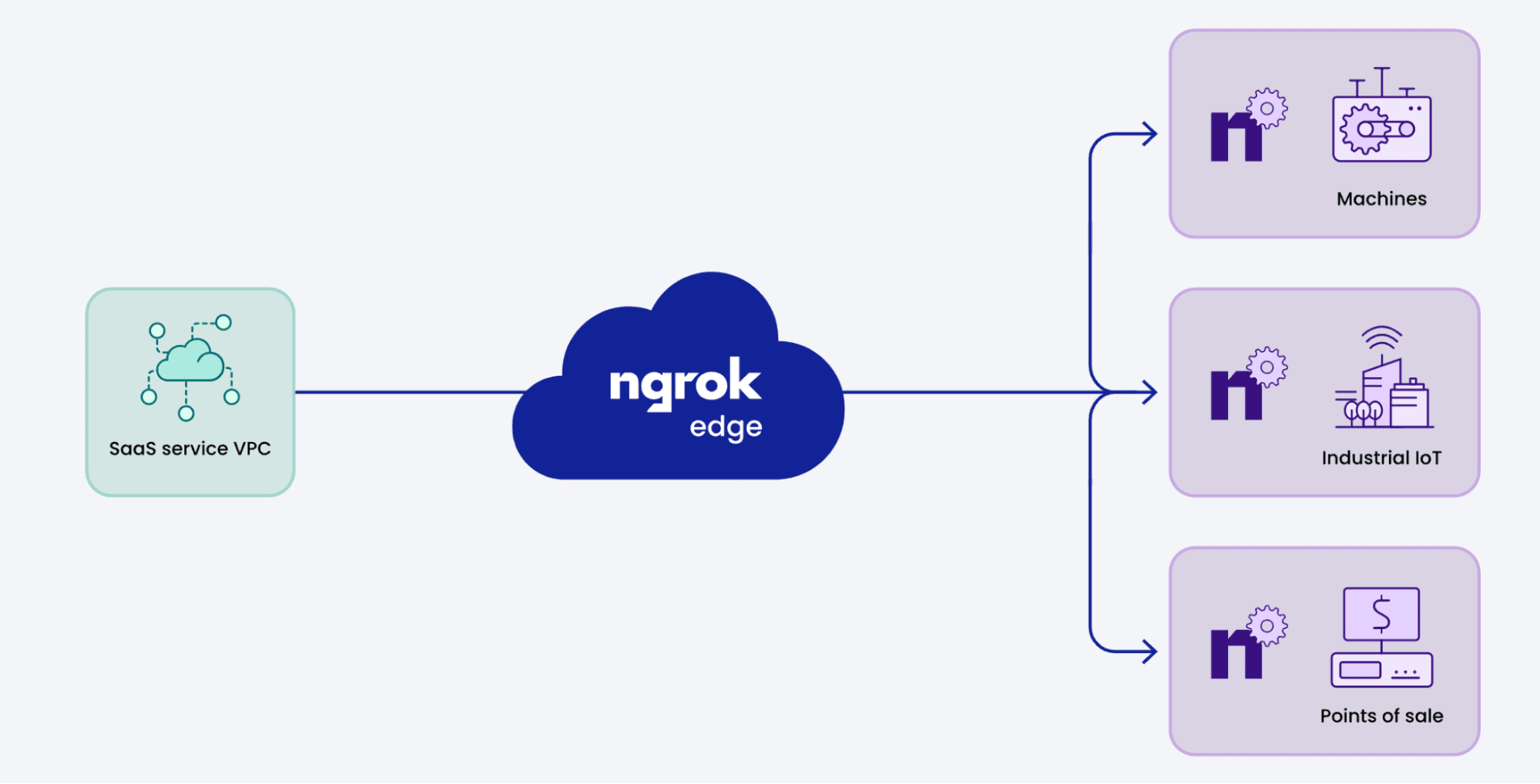

With MicroK8s handling the processing duties, ngrok can focus on managing access to the running workloads. In traditional environments, a mishmash of hardware and software provides access to running workloads. This creates significant friction when deploying and managing access, and it raises security concerns.

ngrok provides a standardized platform that removes the complexity of providing access to edge-based workloads. ngrok standardizes connectivity into external networks hosting IoT devices without requiring support from partners or changes to their network configurations. ngrok does all this while working with any operating system or platform. Additionally, ngrok supports security policies to ensure compliance with necessary security requirements. Finally, ngrok’s global network helps guard against networking failures by providing multiple points of presence with automated failover.

What else can you do with MicroK8s and ngrok?

In addition to edge computing, MicroK8s, ngrok, and the ngrok Kubernetes Operator can be combined to enable other use cases, including:

- Secure access to development services for remote teams

- Scalable and secure API gateways for cloud-native applications

- Secure exposure of services for testing and debugging purposes

Ultimately, deploying and managing edge computing workloads can be challenging. However, by leveraging ngrok, our Kubernetes Operator, and MicroK8s, these issues can be greatly reduced. Getting started is simple: ngrok has a generous free tier (sign up here!), and you can follow our ngrok and MicroK8s integration guide to get started today.