Community post originally published on Medium/IT Next by Giorgi Keratishvili

Introduction

Over the last five years, GitOps has emerged as one of the most interesting ways of using Git in the Kubernetes ecosystem. When people hear about Argo they immediately associate it with Argo CD, but not many know that the project offers more than a GitOps tool. The Argo Project is a tool suite developed as a cloud and Kubernetes-native solution to help accelerate the SDLC and automate full DevOps solutions. Alongside Argo CD there are great tools such as Argo Workflows, Argo Rollouts and Argo Events, which are extensively covered in this exam, along with real-world examples of how to use them effectively. If you are planning to take the CAPA exam, this blog will be interesting for you: we will dive into the domains covered by the exam and how to prepare for them, based on my own exam preparation journey.

The Argo Project is a suite of open source tools for deploying and running applications and workloads on Kubernetes. It extends the Kubernetes APIs and unlocks new and powerful capabilities in application deployment, container orchestration, event automation, progressive delivery, and more. There is also a very interesting blog from the Argo Project creator that explains why they created it. Argo Workflows was the first tool, before Argo CD.

Who should take this exam?

The CAPA is an associate-level certification designed for engineers, data scientists, and others interested in demonstrating their understanding of the Argo Project ecosystem.

Those who earn the CAPA certification will demonstrate to current and prospective employers they can navigate the Argo Project ecosystem, including when and why to use each tool, understanding the fundamentals around each toolset, explaining when to use which tool and why, and integrating each tool with other tools.

CAPA will demonstrate a candidate’s solid understanding of the Argo Project ecosystem, terminology, and best practices for each tool and how it relates to common DevOps, GitOps, Platform Engineering, and related practices. Learn more about the exam and sign up for updates on the Linux Foundation Training and Certification website.

Compared to other certifications I wouldn’t say it’s the easiest, but it still falls under the pre-professional level of difficulty and is a great way to test your knowledge of Argo Project. For me, the order of difficulty felt like this: KCNA/CGOA/CAPA/CKAD/PCA/KCSA/CKA/CKS. One thing to keep in mind is that I had already passed the CGOA before taking the CAPA and when I was preparing for the exam, there were no tutorials or blogs to refer to, only some suspiciously scam-like dumps, so don’t fall for them. Below I will mention all the new courses and materials that should help in preparation.

As for who would benefit: SysAdmins, developers, Ops, SREs, managers, platform engineers, or anyone working on production systems should consider it. Knowing basic GitOps is always a good thing, and it is a handy skill when managing multiple clusters.

Format and PSI Proctored Exam Tips

So we are ready to patch every security hole in our cluster, kick hackers out of our production system and make it hard for them to compromise our cluster? Then we have a long path ahead until we reach that point. First, we need to understand what kind of exam this is compared to the CKAD, CKA and CKS. This is an exam where the CNCF has adopted multiple-choice questions, and compared to other multiple-choice exams, I would say this one is not easy-peasy. However, it still qualifies as pre-professional, on par with the KCNA/PCA/CGOA/KCSA.

This exam is conducted online, proctored similarly to other Kubernetes certifications, and is facilitated by PSI. As someone who has taken more than 15 exams with PSI, I can say that every time it’s a new journey. I HIGHLY ADVISE joining the exam 30 minutes before the start, because there are pre-checks of your ID, and the room you are taking it in needs to be checked against the exam criteria. Please check these two links for the exam rules and PSI portal guide.

You’ll have 90 minutes to answer 60 questions, which is generally considered sufficient time; the passing score is >75%. Be prepared for some questions that can be quite tricky. I marked a couple of them for review and would advise doing the same, because sometimes you can find a hint or a partial answer in a later question and then go back to the ones you marked. Regarding pricing, the exam costs $250, but you can often find it at a discount, such as during Black Friday promotions or near dates for CNCF events like KubeCon, Open Source Summit, etc.
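
To make the time budget concrete, here is a small back-of-the-envelope calculation. It is only a sketch: it assumes every question carries equal weight and reads the threshold as "at least 75%", neither of which is confirmed above. A strictly-greater reading would require one more correct answer.

```python
import math

QUESTIONS = 60          # number of questions, from the exam format above
MINUTES = 90            # total exam time, from the exam format above
PASS_FRACTION = 0.75    # assumption: threshold read as "at least 75%"

# Average time available per question, in seconds.
seconds_per_question = MINUTES * 60 / QUESTIONS

# Smallest number of correct answers that reaches the threshold,
# assuming all questions are weighted equally.
min_correct = math.ceil(PASS_FRACTION * QUESTIONS)
max_wrong = QUESTIONS - min_correct

print(f"{seconds_per_question:.0f} seconds per question on average")
print(f"{min_correct} correct answers needed, {max_wrong} can be missed")
```

In practice this means that flagging a tricky question and moving on is cheap, as long as you leave a few minutes at the end for the flagged ones.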

The Path of Learning

At this point, we understand what we have signed up for and are ready to dedicate time to training, but where should we start? Before taking this exam, I had good experience with Kubernetes, Flux CD and the Argo Project ecosystem and had passed the CGOA exam, yet I still learned a lot from this exam preparation.

At first glance, this list might seem too simple and easy. However, we need to learn the fundamentals of GitOps, CI/CD and SDLC first in order to understand higher-level concepts such as branching strategy, event-driven architecture and many more.

Let’s break down the Domains & Competencies

**Argo Workflows 36%**

Understand Argo Workflow Fundamentals

Generating and Consuming Artifacts

Understand Argo Workflow Templates

Understand the Argo Workflow Spec

Work with DAG (Directed-Acyclic Graphs)

Run Data Processing Jobs with Argo Workflows

**Argo CD 34%**

Understand Argo CD Fundamentals

Synchronize Applications Using Argo CD

Use Argo CD Application

Configure Argo CD with Helm and Kustomize

Identify Common Reconciliation Patterns

**Argo Rollouts 18%**

Understand Argo Rollouts Fundamentals

Use Common Progressive Rollout Strategies

Describe Analysis Template and AnalysisRun

**Argo Events 12%**

Understand Argo Events Fundamentals

Understand Argo Event Components and Architecture

ARGO PROJECT

The Argo Project is headlined by Argo CD and Argo Workflows, two mainstay powerhouses that have become the de facto tools in their respective spaces. Joining Argo CD and Workflows are Argo Events and Rollouts, with large successful followings of their own. One of the brilliant decisions made early on was that these tools, each with its own uses, could be usable with or without the rest of the Argo suite of tooling.

Argo CD is the world’s most popular and fastest growing GitOps tool. It allows users to define a git source of truth for an application and keep that in sync with a destination Kubernetes cluster. This powerful tool gets even more powerful when combined with tools like Argo Rollouts, which handles progressive delivery, and other open source tools like Crossplane for managing infrastructure, or OPA and Kyverno for security policy.

Argo Workflows provides a powerful workflow engine built for Kubernetes where each step operates in its own pod. This provides for massive scale and flexible multi-step workflow tasks. Argo Workflows has been especially popular for data pipelines as well as Kubernetes-native CI/CD pipelines. Workflows becomes especially powerful when paired with Argo Events, a Kubernetes-native event engine. Argo Events can be used to detect events in Kubernetes and trigger actions, either in Argo Workflows or in other services, as well as provide a general interface for webhooks and API calls.

What is Argo Workflows?

Argo Workflows is an open source container-native workflow engine for orchestrating parallel jobs on Kubernetes. Argo Workflows is implemented as a Kubernetes CRD.

- Define workflows where each step in the workflow is a container.

- Model multi-step workflows as a sequence of tasks or capture the dependencies between tasks using a graph (DAG).

- Easily run compute intensive jobs for machine learning or data processing in a fraction of the time using Argo Workflows on Kubernetes.

- Run CI/CD pipelines natively on Kubernetes without configuring complex software development products.
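
To see what "each step is a container" looks like in practice, here is a minimal sketch of a Workflow manifest, built as a Python dictionary and printed as JSON (which `kubectl create -f` accepts alongside YAML). The workflow name, the template names and the `busybox` image are illustrative choices of mine, not taken from the exam material.

```python
import json

def container_template(name, message):
    """Return a template that runs one container printing `message`."""
    return {
        "name": name,
        "container": {
            "image": "busybox",       # illustrative image
            "command": ["echo"],
            "args": [message],
        },
    }

workflow = {
    "apiVersion": "argoproj.io/v1alpha1",
    "kind": "Workflow",
    "metadata": {"generateName": "capa-demo-"},   # hypothetical name prefix
    "spec": {
        "entrypoint": "main",   # the template that runs first
        "templates": [
            {
                "name": "main",
                # Outer list: stages run one after another.
                # Inner list: steps within a stage run in parallel.
                "steps": [
                    [{"name": "extract", "template": "extract"}],
                    [{"name": "report", "template": "report"}],
                ],
            },
            container_template("extract", "extracting data"),
            container_template("report", "writing report"),
        ],
    },
}

print(json.dumps(workflow, indent=2))
```

The part worth remembering for the exam is the shape: `spec.entrypoint` names a template, and `spec.templates` holds both the templates that do work (here, containers) and the templates that orchestrate others (here, `steps`).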

Why Argo Workflows?

- Designed from the ground up for containers without the overhead and limitations of legacy VM and server-based environments.

- Cloud agnostic and can run on any Kubernetes cluster.

- Easily orchestrate highly parallel jobs on Kubernetes.

- Argo Workflows puts a cloud-scale supercomputer at your fingertips!

Argo Workflows: An Introduction and Quick Guide

An in-depth guide on Argo Workflows, covering its basics, core concepts, and a quick tutorial. It explains how Argo Workflows orchestrates parallel jobs on Kubernetes, details on defining workflows with containers and DAGs, and offers practical steps for installing and running Argo Workflows. It also highlights the integration of Argo Workflows with Codefresh for CI/CD pipelines, emphasizing ease of use and cloud-native capabilities. For more details, visit Argo Workflows: The Basics and a Quick Tutorial

Setting Up an Artifact Repository for Argo Workflows

This Pipekit guide explains how to configure an artifact repository for Argo Workflows. It provides step-by-step instructions on setting up and managing artifact repositories, enabling efficient handling and storage of artifacts generated during workflow executions. This guide is valuable for ensuring smooth and effective workflow operations in Kubernetes environments. For more information, visit How to Configure an Artifact Repo for Argo Workflows (pipekit.io).
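
Once an artifact repository is configured, generating and consuming artifacts comes down to declaring `outputs.artifacts` on one template and `inputs.artifacts` on another. The sketch below shows only the three templates involved, as Python dictionaries; the names (`generate`, `consume`, `hello-art`) and file paths are illustrative, and it assumes a working artifact repository is already in place.

```python
# Template that writes a file and exposes it as an output artifact.
generate = {
    "name": "generate",
    "container": {
        "image": "busybox",
        "command": ["sh", "-c"],
        "args": ["echo hello > /tmp/hello.txt"],
    },
    "outputs": {"artifacts": [{"name": "hello-art", "path": "/tmp/hello.txt"}]},
}

# Template that receives an artifact and has it placed at a path of its choosing.
consume = {
    "name": "consume",
    "inputs": {"artifacts": [{"name": "message", "path": "/tmp/message"}]},
    "container": {
        "image": "busybox",
        "command": ["sh", "-c"],
        "args": ["cat /tmp/message"],
    },
}

# Orchestrating template: the second step wires the first step's output
# artifact into the consumer's input artifact.
main = {
    "name": "main",
    "steps": [
        [{"name": "generate", "template": "generate"}],
        [{
            "name": "consume",
            "template": "consume",
            "arguments": {"artifacts": [{
                "name": "message",
                "from": "{{steps.generate.outputs.artifacts.hello-art}}",
            }]},
        }],
    ],
}

templates = [main, generate, consume]
print([t["name"] for t in templates])
```

Note that the producer and consumer use different artifact names; the `from` expression in the step arguments is what connects them.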

Work with DAG (Directed-Acyclic Graphs)

Directed Acyclic Graph (DAG) — A Directed Acyclic Graph (DAG) is a finite graph with directed edges where no cycles exist. It’s used in computer science to model various systems, including task scheduling, data flow, and dependency management. DAGs facilitate efficient algorithms like topological sorting and are central to concepts in computational complexity and data structures. Directed acyclic graph

Overview and Use Cases of Directed Acyclic Graphs (DAGs) — Directed Acyclic Graph (DAG) is a structure consisting of vertices and directed edges, representing activities and their order. Each edge has a direction, and there are no cycles, meaning you cannot return to a vertex once you leave it. DAGs are useful in modeling data processing flows, ensuring tasks are performed in a specific sequence without repetition. Visit Directed Acyclic Graph (DAG) Overview & Use Cases
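
The two properties that matter here, "a valid order exists" and "no cycles", can be checked with a few lines of Python. This sketch uses the standard library's `graphlib` module (Python 3.9 or newer); the task names are made up for illustration.

```python
from graphlib import TopologicalSorter, CycleError

# Each key maps a task to the set of tasks it depends on.
pipeline = {
    "extract": set(),
    "clean": {"extract"},
    "train": {"clean"},
    "report": {"clean"},
    "publish": {"train", "report"},
}

# A topological order lists every task after all of its dependencies.
order = list(TopologicalSorter(pipeline).static_order())
print(order)

# Making "extract" depend on "publish" closes a loop, so the graph stops being a DAG.
cyclic = dict(pipeline, extract={"publish"})
try:
    list(TopologicalSorter(cyclic).static_order())
    has_cycle = False
except CycleError:
    has_cycle = True
print("cycle detected:", has_cycle)
```

The exact position of `train` and `report` in the order can vary, since neither depends on the other. That freedom is exactly what lets a workflow engine run them in parallel.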

Directed Acyclic Graphs (DAGs) in Argo Workflows

The Argo Workflows DAG guide explains how to create workflows using directed-acyclic graphs (DAGs). It covers defining task dependencies for enhanced parallelism and simplicity in complex workflows. Examples include a sample workflow with sequential and parallel task execution and features like enhanced dependency logic and fail-fast behavior for error handling. Visit DAG — Argo Workflows
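
As a rough illustration of how task dependencies translate into parallelism, the sketch below builds a diamond-shaped `dag` template as a Python dictionary and groups its tasks into waves that could run at the same time. The `echo` template it refers to and the helper functions are hypothetical, and the wave computation is my own simplification for study purposes, not how the Argo controller schedules pods.

```python
from graphlib import TopologicalSorter

def dag_task(name, dependencies=()):
    """Build one entry for `dag.tasks`; every task reuses a hypothetical `echo` template."""
    task = {
        "name": name,
        "template": "echo",
        "arguments": {"parameters": [{"name": "message", "value": name}]},
    }
    if dependencies:
        task["dependencies"] = list(dependencies)
    return task

# Diamond shape: A runs first, B and C depend on A, D depends on both B and C.
diamond = {
    "name": "diamond",
    "dag": {
        "tasks": [
            dag_task("A"),
            dag_task("B", ["A"]),
            dag_task("C", ["A"]),
            dag_task("D", ["B", "C"]),
        ]
    },
}

def parallel_waves(dag_template):
    """Group tasks into waves; tasks in one wave have all dependencies already done."""
    graph = {
        t["name"]: set(t.get("dependencies", []))
        for t in dag_template["dag"]["tasks"]
    }
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves

waves = parallel_waves(diamond)
print(waves)
```

Compared with a `steps` template, nothing here says "run B and C in parallel"; the parallelism falls out of the declared dependencies.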

What is Argo CD?

Argo CD follows the GitOps pattern of using Git repositories as the source of truth for defining the desired application state. Kubernetes manifests can be specified in several ways:

- kustomize applications

- helm charts

- jsonnet files

- Plain directory of YAML/json manifests

- Any custom config management tool configured as a config management plugin

Argo CD automates the deployment of the desired application states in the specified target environments. Application deployments can track updates to branches, tags, or pinned to a specific version of manifests at a Git commit. See tracking strategies for additional details about the different tracking strategies available.

Argo CD is implemented as a Kubernetes controller which continuously monitors running applications and compares the current, live state against the desired target state (as specified in the Git repo). A deployed application whose live state deviates from the target state is considered OutOfSync. Argo CD reports & visualizes the differences, while providing facilities to automatically or manually sync the live state back to the desired target state. Any modifications made to the desired target state in the Git repo can be automatically applied and reflected in the specified target environments.
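
The compare-and-sync loop described above can be pictured with a toy model. This is a deliberately simplified sketch, not Argo CD's actual diffing logic: resources are plain dictionaries keyed by a made-up `Kind/name` string, and the function names are mine.

```python
def diff_states(desired, live):
    """Compare two {resource: spec} maps and list what a sync would have to change."""
    return {
        "create": sorted(desired.keys() - live.keys()),
        "prune": sorted(live.keys() - desired.keys()),
        "update": sorted(
            key for key in desired.keys() & live.keys() if desired[key] != live[key]
        ),
    }

def sync_status(desired, live):
    """Report OutOfSync as soon as the live state deviates from the desired state."""
    changes = diff_states(desired, live)
    return "OutOfSync" if any(changes.values()) else "Synced"

# Desired state, as it would be rendered from the Git repository.
desired = {
    "Deployment/web": {"image": "web:1.1", "replicas": 3},
    "Service/web": {"port": 80},
}

# Live state, as observed in the cluster.
live = {
    "Deployment/web": {"image": "web:1.0", "replicas": 3},
    "ConfigMap/old": {"debug": "true"},
}

print(sync_status(desired, live))
print(diff_states(desired, live))
print(sync_status(desired, desired))
```

In the real tool, whether the "prune" bucket is acted on depends on the sync policy, and a sync can be triggered manually or automatically.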

Argo CD: The Basics

The article “Argo CD Fundamentals” by Nandhabalan Marimuthu introduces the basics of Argo CD, a declarative continuous delivery tool for Kubernetes. It covers key concepts such as GitOps, application management, synchronization, and automation workflows, providing practical insights and examples for effectively utilizing Argo CD in DevOps environments. Understand The Basics — Argo CD

Understand The Basics

Argo CD — Argo CD is a declarative, GitOps continuous delivery tool for Kubernetes. It automates application deployments, allowing users to manage Kubernetes resources through Git repositories. By monitoring and syncing application states, Argo CD ensures that the desired state in Git matches the live state in clusters. Understanding Argo CD

Gaining Insight into Argo CD

Explore Argo CD with Codefresh: A comprehensive guide to deploying applications using Argo CD, a GitOps continuous delivery tool. Learn about its features, benefits, and how to set up automated application deployment pipelines seamlessly integrating with Kubernetes. Master the power of GitOps for efficient, scalable software delivery.

Synchronize Applications Using Argo CD

Synchronize Applications Using Kubectl with Argo CD — The link provides guidance on syncing Kubernetes resources with Argo CD using kubectl. It highlights kubectl’s compatibility with Argo CD for synchronizing and managing Kubernetes resources. Both imperative and declarative methods are supported, ensuring seamless integration and efficient resource management within Kubernetes clusters. Sync Applications with Kubectl — Argo CD.

Synchronization Choices in Argo CD: Declarative GitOps Continuous Delivery for Kubernetes — Argo CD’s synchronization options streamline Kubernetes deployments. With features like automatic sync, manual sync, and hooks, it ensures seamless application updates. Customize sync policies, including pruning and sync wave limitations, for efficient resource management. Monitor sync status and history effortlessly for comprehensive deployment control. Sync Options — Argo CD — Declarative GitOps CD for Kubernetes

Use the Argo CD Application

Setting Up Declaratively with Argo CD: the linked guide covers Argo CD’s declarative setup. It shows how Applications, Projects, repositories, and cluster credentials can be defined as Kubernetes manifests, so that Argo CD itself can be managed through Git like any other resource. Declarative Setup - Argo CD
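
Since an Argo CD Application is itself a Kubernetes resource, it helps to know its basic shape. Below is a minimal sketch of an Application manifest as a Python dictionary, printed as JSON. The repository URL, application name and path are placeholders I made up; the automated sync policy is optional and shown only to illustrate where it lives.

```python
import json

application = {
    "apiVersion": "argoproj.io/v1alpha1",
    "kind": "Application",
    "metadata": {"name": "guestbook", "namespace": "argocd"},
    "spec": {
        "project": "default",
        # Where the desired state comes from.
        "source": {
            "repoURL": "https://example.com/your-org/your-repo.git",  # placeholder URL
            "targetRevision": "HEAD",   # branch, tag, or commit to track
            "path": "guestbook",        # directory inside the repository
        },
        # Where the desired state is applied.
        "destination": {
            "server": "https://kubernetes.default.svc",  # the cluster Argo CD runs in
            "namespace": "guestbook",
        },
        # Optional: sync automatically, delete removed resources, revert manual drift.
        "syncPolicy": {
            "automated": {"prune": True, "selfHeal": True},
        },
    },
}

print(json.dumps(application, indent=2))
```

Leaving out `syncPolicy.automated` gives you an Application that only syncs when someone triggers it.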

What is Argo Rollouts?

Argo Rollouts is a Kubernetes controller and set of CRDs which provide advanced deployment capabilities such as blue-green, canary, canary analysis, experimentation, and progressive delivery features to Kubernetes.

Argo Rollouts (optionally) integrates with ingress controllers and service meshes, leveraging their traffic shaping abilities to gradually shift traffic to the new version during an update. Additionally, Rollouts can query and interpret metrics from various providers to verify key KPIs and drive automated promotion or rollback during an update.

Why Argo Rollouts?

The native Kubernetes Deployment Object supports the RollingUpdate strategy which provides a basic set of safety guarantees (readiness probes) during an update. However the rolling update strategy faces many limitations:

- Few controls over the speed of the rollout

- Inability to control traffic flow to the new version

- Readiness probes are unsuitable for deeper, stress, or one-time checks

- No ability to query external metrics to verify an update

- Can halt the progression, but unable to automatically abort and rollback the update

For these reasons, in large scale high-volume production environments, a rolling update is often considered too risky of an update procedure since it provides no control over the blast radius, may rollout too aggressively, and provides no automated rollback upon failures.

Controller Features

- Blue-Green update strategy

- Canary update strategy

- Fine-grained, weighted traffic shifting

- Automated rollbacks and promotions

- Manual judgement

- Customizable metric queries and analysis of business KPIs

- Ingress controller integration: NGINX, ALB

- Service Mesh integration: Istio, Linkerd, SMI

- Metric provider integration: Prometheus, Wavefront, Kayenta, Web, Kubernetes Jobs

Argo Rollouts: Progressive Delivery for Kubernetes

Argo Rollouts is a progressive delivery tool for Kubernetes. It enables advanced deployment strategies like Canary and Blue-Green deployments, ensuring smooth and controlled updates of applications. With features like traffic shifting and analysis, it empowers teams to deliver software with confidence and reliability in Kubernetes environments. Argo Rollouts and https://argo-rollouts.readthedocs.io/en/stable/

Argo Rollouts: A Brief Overview of Concepts, Setup, and Operations — Argo Rollouts: Quick Guide to Concepts, Setup & Operations

Use Common Progressive Rollout Strategies

Progressive Delivery Controller for Kubernetes: Argo Rollouts simplifies Kubernetes deployments by offering advanced deployment strategies like blue-green, canary, and experimentation. Automated rollback and analysis help ensure reliability. This documentation introduces its concepts, empowering users to leverage its powerful features for seamless, controlled application deployments in Kubernetes environments. Kubernetes Progressive Delivery Controller

Executing Progressive Deployment Strategies Using Argo Rollouts — Progressive Deployment Strategies using Argo Rollout
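
A canary strategy is easiest to remember as a list of steps. The sketch below shows a Rollout manifest as a Python dictionary with a short canary step list, plus a small helper (my own, for illustration) that extracts the traffic weights in order. The application name, image and weights are arbitrary examples.

```python
rollout = {
    "apiVersion": "argoproj.io/v1alpha1",
    "kind": "Rollout",
    "metadata": {"name": "web"},
    "spec": {
        "replicas": 5,
        "selector": {"matchLabels": {"app": "web"}},
        # The pod template looks the same as in a regular Deployment.
        "template": {
            "metadata": {"labels": {"app": "web"}},
            "spec": {"containers": [{"name": "web", "image": "nginx:1.25"}]},
        },
        "strategy": {
            "canary": {
                "steps": [
                    {"setWeight": 20},               # send 20% to the new version
                    {"pause": {}},                   # wait until promoted manually
                    {"setWeight": 50},               # then move to 50%
                    {"pause": {"duration": "10m"}},  # wait ten minutes
                    # After the last step the new version is fully promoted.
                ]
            }
        },
    },
}

def weight_progression(manifest):
    """Return the canary weights in the order the steps would set them."""
    steps = manifest["spec"]["strategy"]["canary"]["steps"]
    return [step["setWeight"] for step in steps if "setWeight" in step]

print(weight_progression(rollout))
```

A blue-green strategy would replace the `canary` key with `blueGreen` and point at an active and a preview Service instead of listing steps.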

Describe the Analysis Template and Analysis Run

Analysis Template — https://docs.opsmx.com/opsmx-intelligent-software-delivery-isd-platform-argo/user-guide/delivery-verification/analysis-template

Creating Analysis Runs for Rollouts — https://argo-rollouts.readthedocs.io/en/release-1.5/generated/kubectl-argo-rollouts/kubectl-argo-rollouts_create_analysisrun/
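
To connect the two resources: an AnalysisTemplate describes which metric to measure and what counts as success, and an AnalysisRun is one execution of it. The sketch below shows a Prometheus-based AnalysisTemplate as a Python dictionary, followed by a toy verdict function. The Prometheus address and query are placeholders, and the verdict logic is an assumption on my side (a run fails once failed measurements exceed `failureLimit`), so please verify the exact semantics against the Argo Rollouts documentation.

```python
analysis_template = {
    "apiVersion": "argoproj.io/v1alpha1",
    "kind": "AnalysisTemplate",
    "metadata": {"name": "success-rate"},
    "spec": {
        "args": [{"name": "service-name"}],
        "metrics": [
            {
                "name": "success-rate",
                "interval": "5m",                          # how often to measure
                "successCondition": "result[0] >= 0.95",   # what a good measurement is
                "failureLimit": 3,                         # tolerated failed measurements
                "provider": {
                    "prometheus": {
                        "address": "http://prometheus.example.com:9090",  # placeholder
                        "query": "<PromQL returning a success ratio>",     # placeholder
                    }
                },
            }
        ],
    },
}

def toy_verdict(measurements, threshold=0.95, failure_limit=3):
    """Toy model: fail once more than `failure_limit` measurements miss the threshold."""
    failures = sum(1 for value in measurements if value < threshold)
    return "Failed" if failures > failure_limit else "Successful"

print(toy_verdict([0.99, 0.97, 0.90]))        # one failed measurement
print(toy_verdict([0.50, 0.60, 0.70, 0.80]))  # four failed measurements
```

During a canary rollout, a failed AnalysisRun is what lets the controller abort and roll back without a human in the loop.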

What is Argo Events?

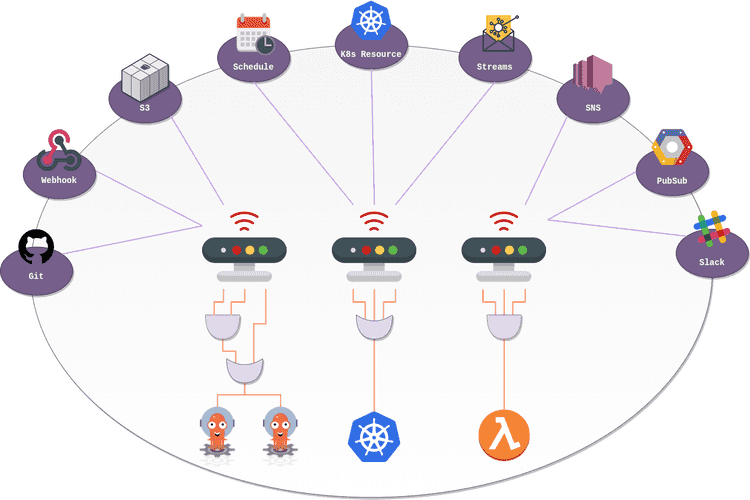

Argo Events is an event-based dependency manager for Kubernetes which helps you define multiple dependencies from a variety of event sources like webhook, s3, schedules, streams etc. and trigger Kubernetes objects after successful event dependencies resolution.

Features

- Manage dependencies from a variety of event sources.

- Ability to customize business-level constraint logic for event dependencies resolution.

- Manage everything from simple, linear, real-time dependencies to complex, multi-source, batch job dependencies.

- Ability to extend the framework to add your own event source listener.

- Define arbitrary boolean logic to resolve event dependencies.

- CloudEvents compliant.

- Ability to manage event sources at runtime.
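
The idea of resolving dependencies with boolean logic can be illustrated with a toy model. This is not the Argo Events API: the dependency names and the `resolve` helper are made up, and the condition is written as a plain Python function rather than the expression syntax a real Sensor uses.

```python
def resolve(received, condition):
    """Evaluate a boolean condition against the dependency names seen so far."""
    return condition(lambda name: name in received)

def condition(seen):
    """Fire when a webhook arrived AND (an S3 upload OR a schedule tick) arrived."""
    return seen("webhook") and (seen("s3-upload") or seen("schedule"))

received = set()
fired = []

# Events arrive one by one; after each, check whether the trigger may fire.
for event in ["webhook", "schedule"]:
    received.add(event)
    fired.append(resolve(received, condition))

print(fired)
```

The webhook alone is not enough; the trigger only becomes eligible once the second dependency is satisfied.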

Argo Events: An Introduction and Quick Guide

Explore Argo Events on Codefresh: Discover event-driven automation for Kubernetes. Learn to build resilient, scalable workflows triggered by events from various sources. Harness the power of Argo CD, Events, and Workflows to streamline your CI/CD pipelines and optimize Kubernetes deployments. Empower your DevOps with seamless automation. Argo Events: the Basics and a Quick Tutorial

Argo Events: A Dependency Manager for Kubernetes Based on Events

The Argo Events tutorial introduces Argo, an open-source workflow engine, focusing on event-driven architecture. It details installation, configuration, and usage, emphasizing event-driven workflows for Kubernetes. Learn to automate tasks, trigger workflows based on events, and streamline Kubernetes operations for efficient, scalable development and operations. Argo Events — The Event-Based Dependency Manager for Kubernetes

Understand Argo Event Components and Architecture

Argo Events- The Event-Driven Dependency Manager for Kubernetes — Argo Events, part of the Argo project, orchestrates Kubernetes-native event-driven workflows. Its architecture leverages triggers, gateways, and sensors to detect events from various sources like Pub/Sub systems or HTTP endpoints, initiating workflows. This decentralized, cloud-native system enables scalable, resilient event processing and automation within Kubernetes clusters. Argo Events — The Event-Based Dependency Manager for Kubernetes

Event-Driven Architecture on Kubernetes — Discover the dynamic world of event-driven architecture on Kubernetes with Argo Events. This blog delves into orchestrating microservices, leveraging Kubernetes’ power, and streamlining workflows with Argo Events. Explore seamless event handling and scalable solutions, unlocking the potential for efficient, responsive systems in the modern tech landscape. Event-Driven Architecture on Kubernetes with Argo Events
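
For the architecture question it helps to keep the flow of an event in mind. In current Argo Events releases the main components are the EventSource, the EventBus, the Sensor and the Trigger (older material may describe a gateway in the place of today's EventSource). The toy pipeline below mimics that flow in memory; the class and function names are my own and nothing here talks to a real cluster.

```python
from collections import defaultdict

class ToyEventBus:
    """In-memory stand-in for the transport layer between event sources and sensors."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, event_name, handler):
        self._subscribers[event_name].append(handler)

    def publish(self, event_name, payload):
        # Deliver the event to every handler subscribed to this event name.
        for handler in self._subscribers[event_name]:
            handler(payload)

triggered = []

def sensor(payload):
    """Sensor role: react to a matching event by firing a trigger."""
    triggered.append(f"submit workflow for {payload['repo']}")

bus = ToyEventBus()
bus.subscribe("git-push", sensor)

# Event source role: turn an external signal (here, a fake webhook call) into a bus event.
bus.publish("git-push", {"repo": "demo-app"})
bus.publish("unrelated", {"repo": "ignored"})   # nobody listens, so nothing happens

print(triggered)
```

In a real setup the trigger at the end would typically create a Kubernetes object, such as an Argo Workflow.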

Key Learning Materials:

You can explore and learn about the CAPA certification and related topics for free through the following materials, which I have used. And of course, the Argo Project documentation is our best friend.

- https://trainingportal.linuxfoundation.org/courses/introduction-to-gitops-lfs169

- https://training.linuxfoundation.org/training/devops-and-workflow-management-with-argo-lfs256/

- https://killercoda.com/argo

- https://argoproj.github.io/

- https://akuity.io/what-is-argo/

- https://codefresh.io/learn/argo-cd/what-is-the-argo-project-and-why-is-it-transforming-development/

- https://ravikirans.com/capa-certified-argo-project-associate-study-guide/

- https://kloudsaga.com/learning-path-certified-argo-project-associate-exam/

- https://argo-workflows.readthedocs.io/en/latest/quick-start/

At the moment there are not many courses, as it is a new exam. I would highly advise against clicking on every course that pops up in a Google search, because there are plenty of scams.

I hope in the near future we will see more courses from bigger platforms such as KodeKloud or killer.sh.

Conclusion

The exam is not easy. Among other certs I would rank it in this order: KCNA/CGOA/CAPA/CKAD/PCA/KCSA/CKA/CKS. You will receive your result within 24 hours of taking the exam, and passing it feels pretty satisfying overall. I hope this was informative and useful 🚀