Member post by John Matthews and Savitha Raghunathan, Red Hat

Migrating legacy software to modern platforms has long been a challenging endeavor for businesses. Companies often need to move decades-old systems to newer technologies without causing disruptions. Konveyor is an open source project that helps enterprises modernize their applications, especially for cloud-native environments. It provides a suite of tools and services that enable organizations to migrate and adapt their workloads to Kubernetes and cloud-native platforms. With Konveyor AI (Kai), the project expands its focus to AI-driven assistance that makes the modernization journey smoother and more efficient. This blog post explores how Kai works.

What Kai Is and How It Works

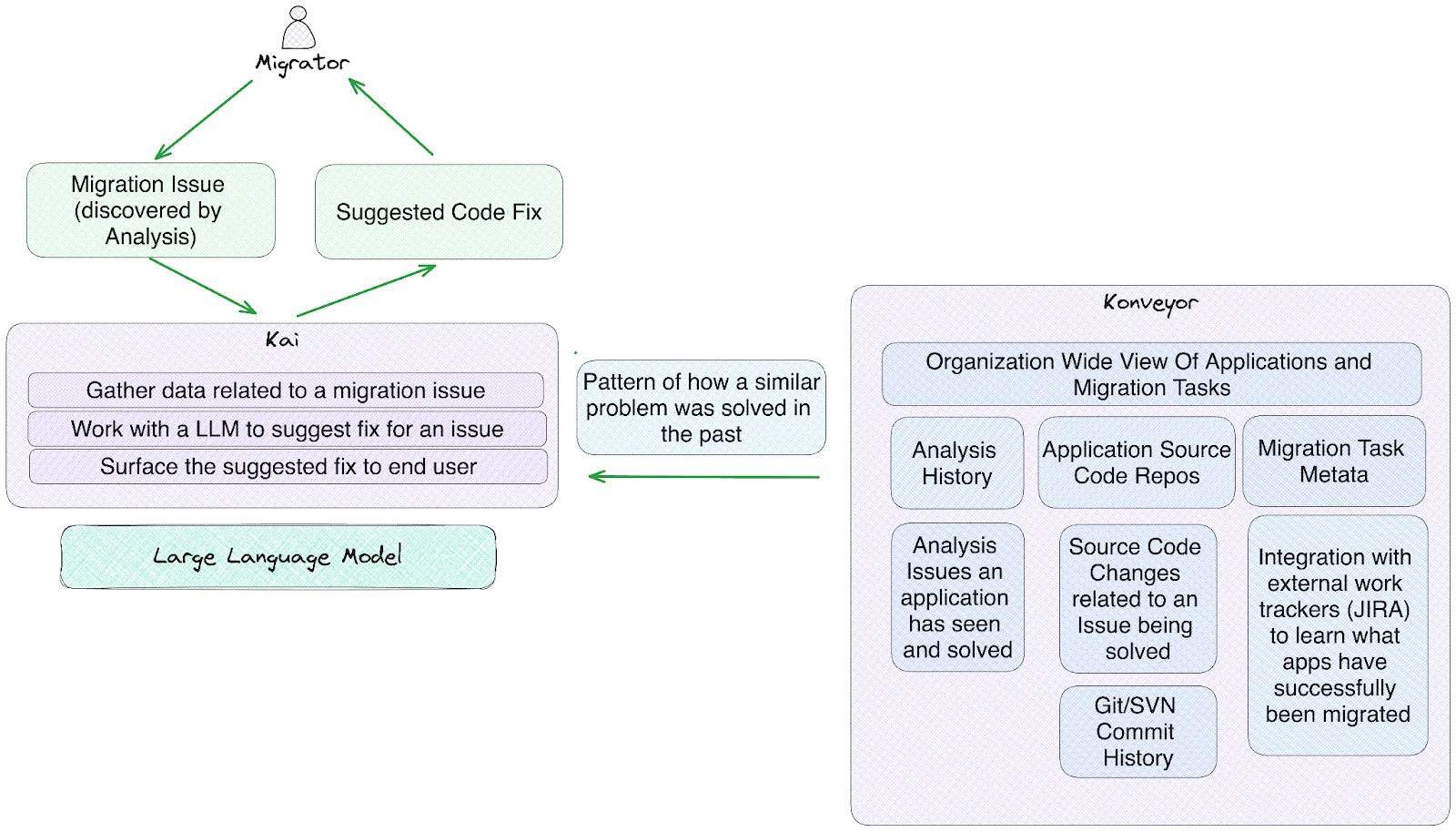

Konveyor AI, or “Kai,” is designed to assist with these migrations. By applying generative AI, Kai helps organizations transition legacy codebases to modern platforms through tailored code suggestions.

Kai uses a Retrieval-Augmented Generation (RAG) approach that combines two sources of information: static code analysis and past migration examples from within the organization. This lets Kai offer code suggestions grounded in how similar migration challenges were solved before, without the need to retrain or fine-tune the model.

- Static Code Analysis: This data is provided by analyzer-lsp, which performs a detailed analysis of a codebase to identify parts of the code that need updating.

- Solved Examples: These are prior solutions to similar migration issues that have already been encountered and resolved by the organization. Kai incorporates these examples to provide context for its code suggestions.

- Model Agnostic: Beyond these two data sources, Kai follows a “Bring Your Own Model” approach, letting organizations use their preferred LLMs.

By combining these data sources, Kai can generate context-aware suggestions that help developers modernize their applications while staying consistent with how their organization typically solves problems.
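As a rough illustration of how the two data sources meet, the Python sketch below pairs one analysis result with solved examples recorded for the same rule. The `Incident` and `SolvedExample` shapes, the matching on `rule_id`, and the `build_context` helper are hypothetical simplifications, not Kai's actual data model; a real retriever might also rank examples by code similarity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Incident:
    """One issue reported by static analysis (hypothetical shape)."""
    file: str
    line: int
    rule_id: str
    message: str


@dataclass(frozen=True)
class SolvedExample:
    """A past fix for a rule: the code before and after migration."""
    rule_id: str
    before: str
    after: str


def retrieve_examples(incident: Incident, store: list[SolvedExample],
                      limit: int = 2) -> list[SolvedExample]:
    """Return up to `limit` solved examples recorded for the incident's rule."""
    return [ex for ex in store if ex.rule_id == incident.rule_id][:limit]


def build_context(incident: Incident, store: list[SolvedExample]) -> dict:
    """Bundle the analysis finding with matching examples for the LLM."""
    return {
        "issue": f"{incident.file}:{incident.line} [{incident.rule_id}] {incident.message}",
        "examples": retrieve_examples(incident, store),
    }


# Usage: only the example recorded for the same rule is retrieved.
store = [
    SolvedExample("javax-to-jakarta", "import javax.persistence.Entity;",
                  "import jakarta.persistence.Entity;"),
    SolvedExample("some-other-rule", "old code", "new code"),
]
incident = Incident("src/Order.java", 3, "javax-to-jakarta",
                    "Replace the javax namespace with jakarta")
context = build_context(incident, store)
print(context["issue"])
print(len(context["examples"]))  # 1
```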

The Workflow: Step-by-Step

Kai’s workflow fits into the existing development process in four steps:

- Static Code Analysis: The tool starts by running analyzer-lsp on the codebase, identifying specific migration issues based on predefined or custom rulesets.

- Locate Solved Examples: Kai searches through the organization’s codebase to find prior instances where similar issues have already been solved.

- Generate Code Suggestions: Using the static analysis data and the relevant solved examples, the LLM creates suggestions for how to resolve the migration issues.

- Display in IDE: The generated code suggestions are surfaced in the developer’s IDE (Integrated Development Environment), allowing them to implement the changes directly.

This process allows developers to efficiently address migration issues without having to manually comb through old projects or reinvent solutions from scratch.
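The four steps can be sketched as a simple pipeline. In this hypothetical Python sketch, each stage is passed in as a plain function, and the toy stubs stand in for analyzer-lsp, the example search, and the LLM call. None of these names are part of Kai's API; the returned list is what an IDE integration would display.

```python
from typing import Callable

# Hypothetical stage signatures; each stands in for a real component.
Analyze = Callable[[str], list[dict]]        # repo path -> issues
Retrieve = Callable[[dict], list[str]]       # issue -> solved examples
Generate = Callable[[dict, list[str]], str]  # issue + examples -> suggestion


def run_workflow(repo: str, analyze: Analyze, retrieve: Retrieve,
                 generate: Generate) -> list[tuple[dict, str]]:
    """Run analyze -> retrieve -> generate for every reported issue."""
    suggestions = []
    for issue in analyze(repo):              # 1. static code analysis
        examples = retrieve(issue)           # 2. locate solved examples
        patch = generate(issue, examples)    # 3. LLM drafts a suggestion
        suggestions.append((issue, patch))
    return suggestions                       # 4. handed to the IDE to display


# Toy stubs in place of analyzer-lsp, the example search, and the LLM.
def fake_analyze(repo: str) -> list[dict]:
    return [{"file": "Order.java", "rule": "javax-to-jakarta"}]


def fake_retrieve(issue: dict) -> list[str]:
    return ["javax.persistence -> jakarta.persistence"]


def fake_generate(issue: dict, examples: list[str]) -> str:
    return f"fix {issue['file']} using {len(examples)} example(s)"


results = run_workflow("coolstore", fake_analyze, fake_retrieve, fake_generate)
print(results[0][1])  # fix Order.java using 1 example(s)
```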

To show how Kai works in practice, we put together a Kai demo that walks through modernizing application source code for a new target. It shows how Kai handles varying levels of migration complexity, from simple import swaps to more involved changes such as adjusting a bean’s scope to meet CDI requirements. We also look at migration scenarios involving EJB Remote and Message-Driven Bean (MDB) changes. The demo centers on migrating a partially migrated Java EE Coolstore application to Quarkus, a task that involves not only translating code but also preparing the application for deployment to Kubernetes.

Addressing Key LLM Challenges with Kai

Kai is designed to address several key challenges that are common in large-scale modernization projects leveraging generative AI.

1. Handling Limited Context in LLMs

LLMs can only process a limited amount of data at a time, referred to as their context size. Since most legacy codebases are too large to be processed in a single request, Kai uses the results from static code analysis to narrow down the problem to specific areas of the code that need attention. This helps to avoid overwhelming the LLM and ensures that it remains focused on solving manageable, well-defined issues.
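A minimal sketch of this narrowing, assuming the analyzer reports a file and a 1-based line number for each issue: the hypothetical `snippet_around` helper keeps only the lines surrounding the flagged line and shrinks the window until it fits a size budget. Characters stand in for tokens here; a real implementation would count tokens with the model's own tokenizer.

```python
def snippet_around(source: str, line: int, radius: int = 20,
                   max_chars: int = 4000) -> str:
    """Return lines around the 1-based `line`, shrinking the window
    until the snippet fits within `max_chars` characters."""
    lines = source.splitlines()
    if not 1 <= line <= len(lines):
        raise ValueError(f"line {line} is outside the file")
    idx = line - 1
    for r in range(radius, -1, -1):
        start = max(0, idx - r)
        end = min(len(lines), idx + r + 1)
        snippet = "\n".join(lines[start:end])
        if len(snippet) <= max_chars:
            return snippet
    # Even the flagged line alone is too long, so truncate it.
    return lines[idx][:max_chars]


# Usage: a 100-line file with an issue reported on line 50.
source = "\n".join(f"line{i}" for i in range(1, 101))
print(snippet_around(source, 50, radius=2))
print(snippet_around(source, 50, radius=2, max_chars=6))  # line50
```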

2. Cascading Changes Across a Repository

One of the challenges in application modernization is dealing with changes that affect multiple files across a repository. For example, changing a method’s signature may require updates in every file that calls that method. Kai currently focuses on changes within a single file; future iterations will tackle repository-wide changes, drawing inspiration from Microsoft’s CodePlan research, so that Kai can propagate changes automatically across related files.
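To make the cascading problem concrete, the sketch below takes a hypothetical map of which files depend on which and lists every file that may need follow-up edits after one file changes, using a breadth-first walk over the reversed dependency edges. It is a simplified illustration of the problem only, not CodePlan's algorithm and not planned Kai behavior.

```python
from collections import deque


def affected_files(changed: str, depends_on: dict[str, set[str]]) -> list[str]:
    """Return files that transitively depend on `changed`, in BFS order."""
    # Invert "A depends on B" edges into "B is used by A".
    used_by: dict[str, set[str]] = {}
    for src, deps in depends_on.items():
        for dep in deps:
            used_by.setdefault(dep, set()).add(src)

    seen = {changed}
    order: list[str] = []
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        # Sort callers so the output order is deterministic.
        for caller in sorted(used_by.get(current, ())):
            if caller not in seen:
                seen.add(caller)
                order.append(caller)
                queue.append(caller)
    return order


# Usage: changing the repository ripples up to the service and its callers.
graph = {
    "OrderResource.java": {"OrderService.java"},
    "OrderService.java": {"OrderRepository.java"},
    "Report.java": {"OrderService.java"},
}
print(affected_files("OrderRepository.java", graph))
```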

3. Customizing Code Suggestions to an Organization’s Past Behavior

Many organizations use custom frameworks and technologies that are not widely known or supported by existing AI models. Kai addresses this by incorporating examples of how an organization has handled similar migration problems in the past. This few-shot prompting technique provides the LLM with additional context to help it generate relevant code suggestions, even when dealing with proprietary or unfamiliar frameworks.
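A minimal sketch of few-shot prompt assembly, assuming each solved example is stored as a before/after pair of code: the hypothetical `few_shot_prompt` function places past fixes ahead of the new code so the model can imitate them. The prompt layout and the in-house `AcmeConfig` API in the usage lines are invented for illustration; `@ConfigProperty` is the MicroProfile Config annotation.

```python
def few_shot_prompt(task: str, examples: list[tuple[str, str]], code: str) -> str:
    """Build a prompt: task, then past before/after fixes, then the new input."""
    parts = [task]
    for i, (before, after) in enumerate(examples, start=1):
        parts.append(f"Example {i} - before:\n{before}\nExample {i} - after:\n{after}")
    parts.append(f"Now migrate:\n{code}")
    return "\n\n".join(parts)


# Usage with a hypothetical in-house configuration API.
prompt = few_shot_prompt(
    "Migrate the in-house config API to MicroProfile Config.",
    [('String url = AcmeConfig.get("db.url");',
      '@ConfigProperty(name = "db.url") String url;')],
    'String user = AcmeConfig.get("db.user");',
)
print(prompt)
```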

4. Adapting to Rapidly Changing LLM Capabilities

Kai is designed to be model agnostic. As LLMs continue to evolve, Kai remains adaptable, allowing organizations to switch between different models as needed, whether using public, private, or local AI services.
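One way to stay model agnostic is to have the rest of the system depend only on a small interface. The sketch below uses a Python `typing.Protocol` named `ChatModel` with a single hypothetical `complete` method; the two toy backends stand in for real provider clients, and nothing here reflects how Kai actually wires up its models.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Any backend that turns a prompt into text (hypothetical interface)."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in for one backend; a real class would call a provider's SDK."""

    def complete(self, prompt: str) -> str:
        return f"[echo] {prompt}"


class UpperModel:
    """A second stand-in, showing that backends are interchangeable."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


def suggest_fix(model: ChatModel, prompt: str) -> str:
    # Callers depend only on the protocol, so swapping models needs no changes here.
    return model.complete(prompt)


print(suggest_fix(EchoModel(), "fix imports"))   # [echo] fix imports
print(suggest_fix(UpperModel(), "fix imports"))  # FIX IMPORTS
```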

5. Iterating on Code Suggestions

Kai includes an agent that iteratively refines code suggestions by checking the validity of the initial output and providing feedback to the LLM. This feedback loop improves the quality of the final code and makes it more likely that the suggestion actually resolves the issue.
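A minimal sketch of such a feedback loop, assuming a `validate` function that returns an error message or `None`. In practice validation might be a compiler run or a fresh static analysis pass; the toy validator here only checks for the `jakarta.` namespace. The `refine` name, the round limit, and both toy functions are hypothetical.

```python
from typing import Callable, Optional

Generate = Callable[[str, Optional[str]], str]  # (prompt, feedback) -> candidate
Validate = Callable[[str], Optional[str]]       # candidate -> error or None


def refine(prompt: str, generate: Generate, validate: Validate,
           max_rounds: int = 3) -> tuple[str, bool]:
    """Generate, validate, and feed errors back until the check passes."""
    feedback: Optional[str] = None
    candidate = ""
    for _ in range(max_rounds):
        candidate = generate(prompt, feedback)
        feedback = validate(candidate)
        if feedback is None:
            return candidate, True
    return candidate, False  # best effort after max_rounds


def toy_generate(prompt: str, feedback: Optional[str]) -> str:
    # Pretend the model only gets it right after seeing feedback.
    if feedback is None:
        return "import javax.persistence.Entity;"
    return "import jakarta.persistence.Entity;"


def toy_validate(candidate: str) -> Optional[str]:
    return None if "jakarta." in candidate else "still uses the javax namespace"


result, ok = refine("migrate imports", toy_generate, toy_validate)
print(result, ok)
```

Capping the number of rounds keeps a model that never converges from looping indefinitely, and the boolean lets the caller decide whether to surface an unverified suggestion.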

Next Steps for Kai

Kai’s maintainers are actively developing the project, with a particular focus on handling cascading changes across large codebases and improving the IDE experience. We regularly publish early evaluation builds that adopters can test and use.

How to Get Involved

For those interested in contributing to Kai or learning more about its development, there are several ways you can get involved:

- Join the Konveyor community’s monthly calls to stay updated on the project’s progress.

- Contribute to the Kai GitHub repository: https://github.com/konveyor/kai.

- Engage with the developers and community members in the #konveyor channel of the Kubernetes Slack workspace.

Konveyor AI aims to provide practical, efficient support for application modernization. By leveraging static code analysis and prior solved examples, it offers code suggestions that can help organizations migrate their legacy codebases without having to rebuild solutions from the ground up. With its flexible, model-agnostic design, Kai aims to help companies meet their modernization goals across a range of technology stacks and codebase sizes.