Accessing the Kubernetes API for your clusters from anywhere or across any network is a powerful lever. It’s even better if you can do so without shipping or extending more messy networks, like VPCs or VPNs.

Let me show you how ngrok's open-source Kubernetes Operator makes that possible by letting you:

- Add ingress to your K8s API with a single CRD

- Keep using kubectl for administrative tasks instead of compromising with kubectl proxy and curl

- Use your existing RBAC/AuthN/AuthZ

- Add observability and traffic management without deploying even more services to your cluster

You’ll start by setting up token-based RBAC (if you don’t already have that), installing ngrok’s K8s Operator, applying the AgentEndpoint CRD, and then taking it to the next level.

Before you jump in: RBAC!

I assume you already have a K8s cluster you’d like to access remotely. If not, or if you want to test this functionality out in a proof-of-concept fashion, minikube is always a great place to start.

Proper authentication (AuthN) and authorization (AuthZ) are also necessary to access the Kubernetes API remotely, for both functional and security reasons. By default, most K8s clusters treat an unauthenticated external request as the system:anonymous user, which lacks the privileges to even view or list the state of your cluster.

One way to bootstrap RBAC for remote access is a service account with the appropriate authorization.

kubectl create serviceaccount ngrok

Give your new service account some privileges with a RoleBinding that points at a ClusterRole. For the sake of simplicity and setting up ngrok to its fullest, the admin ClusterRole is helpful; if you're setting this up for others on your team, you may want to use edit or view.

kubectl create rolebinding ngrok-admin-binding --clusterrole=admin --serviceaccount=default:ngrok --namespace=default

Update your kubectl config file with this new service account user and a token. Tokens created this way are short-lived by default, so pass --duration to kubectl create token if you need a longer session.

kubectl config set-credentials ngrok --token $(kubectl create token ngrok)

This isn't the be-all and end-all for secure AuthN/AuthZ for your Kubernetes cluster, but it will get you through the basic setup.
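Before moving on, it can be worth confirming what the new service account is actually allowed to do. The sketch below leans on kubectl auth can-i with impersonation. The helper name check_sa_access is hypothetical, and it assumes your current user is permitted to impersonate service accounts, which cluster admins usually are.

```shell
# Hypothetical helper: report whether the ngrok service account may perform
# a handful of verbs on pods in the default namespace.
check_sa_access() {
  sa="system:serviceaccount:default:ngrok"
  for verb in get list create delete; do
    # `kubectl auth can-i` prints "yes" or "no" and exits non-zero on "no",
    # so `|| true` keeps the loop going either way.
    printf '%s pods: %s\n' "$verb" \
      "$(kubectl auth can-i "$verb" pods --as="$sa" --namespace=default || true)"
  done
}

# Example: check_sa_access
```

If you bound edit or view instead of admin, expect some of these verbs to come back as "no".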

Set up the ngrok Kubernetes Operator

Head over to ngrok and create an account for free if you don't have one already, then reserve a domain: type in any string you'd like, then choose a TLD from the dropdown, like .ngrok.app. Keep note of this, as I'll refer to it as <YOUR_NGROK_DOMAIN> later. ngrok also generates certificates and terminates TLS for you.

Now you can install ngrok’s open-source Kubernetes Operator, which drops the agent into your cluster to provision ingress, observability, and traffic management with a single CRD and native tooling.

Set up a few environment variables to make using Helm a little easier—grab your ngrok authtoken in the dashboard, then create a new API key for this deployment, and drop those into environment variables.

export NGROK_AUTHTOKEN=<YOUR_NGROK_AUTHTOKEN>
export NGROK_API_KEY=<YOUR_NGROK_API_KEY>

Use Helm to install the ngrok Kubernetes Operator into the ngrok-operator namespace. This assumes Helm already knows the ngrok chart repository under the name ngrok.

helm install ngrok-operator ngrok/ngrok-operator \
  --namespace ngrok-operator \
  --create-namespace \
  --set credentials.apiKey=$NGROK_API_KEY \
  --set credentials.authtoken=$NGROK_AUTHTOKEN \
  --set useExperimentalGatewayApi=true

Give your cluster a few moments, then double-check you have both the ngrok-operator-manager and ngrok-operator-agent pods running and ready.

kubectl get pods -n ngrok-operator

NAME                                      READY   STATUS    RESTARTS   AGE
ngrok-operator-agent-576468c9f7-2crdm     1/1     Running   0          29s
ngrok-operator-manager-6d8bc67559-82zwt   1/1     Running   0          29s

Expose your cluster's K8s API with ngrok
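If you'd rather not poll kubectl get pods by hand before exposing anything, a minimal sketch is to block until the operator pods report Ready. The helper name wait_for_operator and the 120-second default timeout are assumptions made for illustration.

```shell
# Hypothetical helper: wait until every pod in the ngrok-operator namespace is
# Ready, or fail once the timeout (first argument, default 120s) elapses.
wait_for_operator() {
  kubectl wait --for=condition=Ready pods --all \
    --namespace ngrok-operator --timeout="${1:-120s}"
}

# Example: wait_for_operator 60s
```

A non-zero exit status means at least one pod did not become Ready in time, which makes the helper easy to chain with && in a setup script.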

Annotate the kubernetes service in the default namespace to declare the backend is an HTTPS upstream.

kubectl -n default annotate service kubernetes 'k8s.ngrok.com/app-protocols={"https":"https"}'

Create a file called expose-k8s-api.yaml with the YAML below, replacing <YOUR_NGROK_DOMAIN> with the one you reserved earlier. This uses the new AgentEndpoint CRD:

apiVersion: ngrok.k8s.ngrok.com/v1alpha1
kind: AgentEndpoint
metadata:
  namespace: default
  name: kubernetes-api-endpoint
spec:
  url: https://<YOUR_NGROK_DOMAIN>
  upstream:
    url: https://kubernetes.default:443

Apply this CRD to your cluster.

kubectl apply -f expose-k8s-api.yaml

Edit your config to connect kubectl to your external cluster
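Before editing your kubeconfig, it can help to confirm that the cluster accepted the endpoint. The sketch below simply reads the resource back. The helper name show_endpoint is hypothetical, and it assumes the operator's AgentEndpoint CRD is installed so that kubectl can resolve the agentendpoint kind.

```shell
# Hypothetical helper: print the AgentEndpoint created from expose-k8s-api.yaml,
# including whatever status the operator has recorded for it.
show_endpoint() {
  kubectl get agentendpoint "${1:-kubernetes-api-endpoint}" \
    --namespace default --output yaml
}

# Example: show_endpoint
```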

Your K8s API is technically ready for you to access remotely. If you curl the domain you reserved before, you’ll hit your cluster’s API—but won’t be allowed in thanks to Kubernetes’ built-in RBAC.

curl https://<YOUR_NGROK_DOMAIN>/api/v1

{
  "kind": "Status",
  "apiVersion": "v1",
  "metadata": {},
  "status": "Failure",
  "message": "forbidden: User \"system:anonymous\" cannot get path \"/api/v1\"",
  "reason": "Forbidden",
  "details": {},
  "code": 403
}
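To confirm that the 403 comes from missing credentials rather than from the endpoint itself, one option is to repeat the request with a bearer token. This is a minimal sketch: the helper name k8s_api_get and the NGROK_DOMAIN variable are assumptions, and it has to run while kubectl can still reach your cluster directly so it can mint a token for the ngrok service account.

```shell
# Hypothetical helper: call the Kubernetes API through the ngrok endpoint with
# a bearer token. NGROK_DOMAIN is an assumed variable holding the domain you
# reserved; the path to request is passed as the first argument.
k8s_api_get() {
  token="$(kubectl create token ngrok)"
  curl --silent --show-error \
    --header "Authorization: Bearer ${token}" \
    "https://${NGROK_DOMAIN}${1}"
}

# Example:
# export NGROK_DOMAIN=your-domain.ngrok.app
# k8s_api_get /api/v1/namespaces/default/pods
```

With the role binding from earlier, the pods request should return a PodList instead of a Forbidden status.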

Also, you probably would rather just continue to use kubectl to view your cluster’s resources.

Make a backup of your existing config for safekeeping.

cp ~/.kube/config ~/.kube/config.bak

Use kubectl to update the cluster's server property.

kubectl config set-cluster <YOUR_CLUSTER_NAME> --server https://<YOUR_NGROK_DOMAIN>

Then remove the certificate-authority property from your cluster config, if it exists. ngrok now presents its own certificate for your domain, and you'll authenticate with your token.

kubectl config unset clusters.<YOUR_CLUSTER_NAME>.certificate-authority

Create a new context that uses your service account and token with the appropriate privileges based on the cluster role you set before, then switch to it.

kubectl config set-context ngrok --cluster <YOUR_CLUSTER_NAME> --user ngrok --namespace=default && kubectl config use-context ngrok

Verify that you can access your cluster's API remotely with kubectl get pods.

You’re set! But there is still plenty more functionality to get the most from external API access to your clusters.

View K8s API requests with Traffic Inspector observability

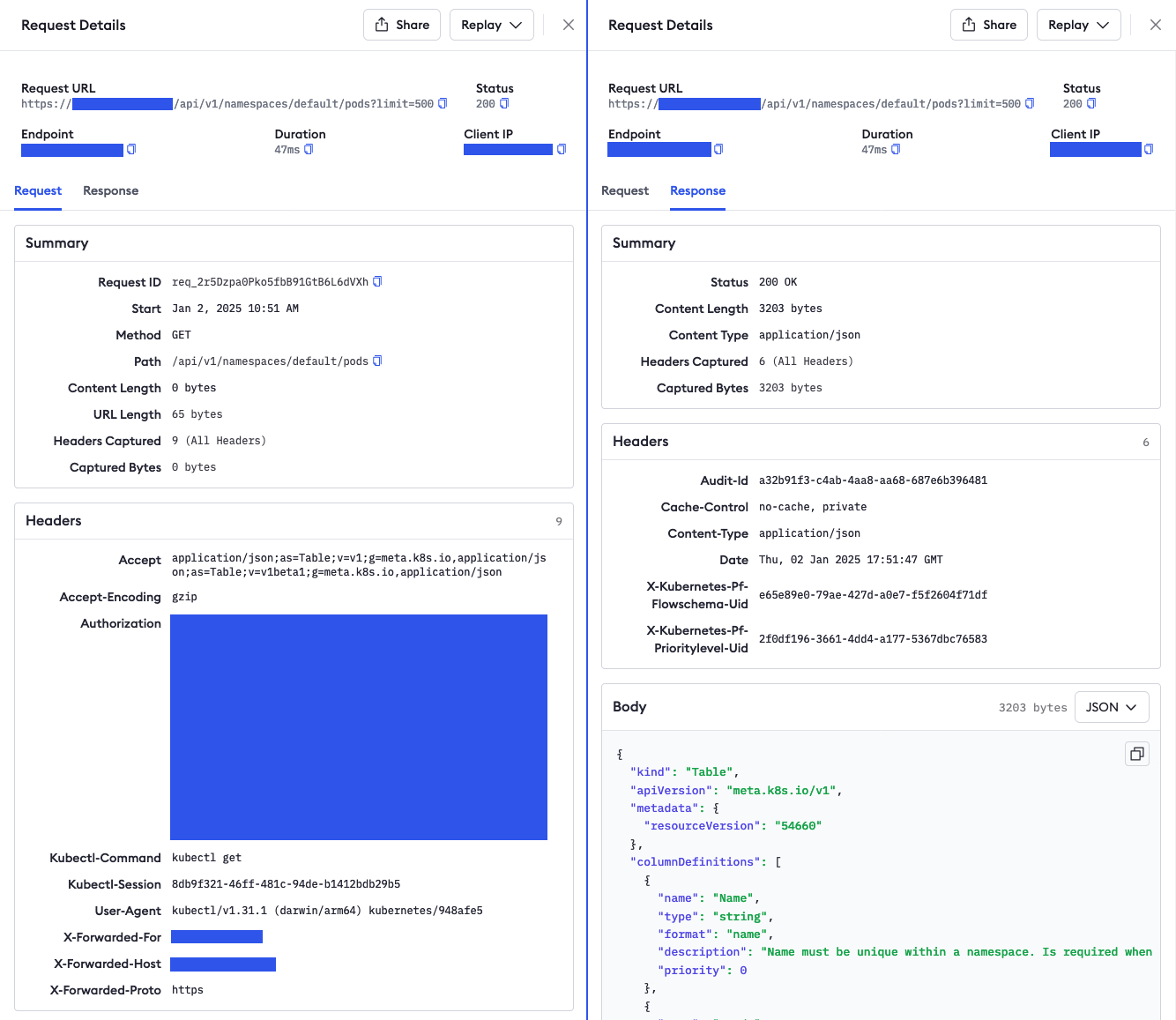

As I mentioned earlier, kubectl is a wrapper around the Kubernetes API, which means that when you run a command like kubectl get pods, you’re actually generating an HTTP request to https://<YOUR_NGROK_DOMAIN>/api/v1/namespaces/default/pods?limit=500. Since ngrok handles ingress through that public endpoint, you can also observe every API request through Traffic Inspector.

Here’s an example of what kubectl get pods actually looks like on the request level:

I’ve anonymized some of the details, but the request headers and response body contain valuable details about who is accessing your K8s APIs, using which user roles or service accounts and associated tokens, what actions they’re trying to perform, and how your cluster responds.

Traffic Inspector is built into every ngrok endpoint and doesn’t require you to deploy any other services to your cluster, like Prometheus, which then needs additional plumbing and AuthN protection.
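For a client-side view of the same requests, you can raise kubectl's log verbosity and compare what it prints with what Traffic Inspector records. The sketch below is an illustration only: the helper name kubectl_requests is hypothetical, and it relies on kubectl logging each request URL to stderr at verbosity 6.

```shell
# Hypothetical helper: run a kubectl command and print only the log lines that
# mention a request URL. Normal command output (stdout) is discarded, and the
# verbose log (stderr) is routed into the pipe instead.
kubectl_requests() {
  kubectl "$@" -v=6 2>&1 >/dev/null | grep 'https://' || true
}

# Example: kubectl_requests get pods
```

Each line it prints should correspond to one entry in Traffic Inspector, which makes it easier to match a command to the request it produced.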

Set access control with Traffic Policy

With this setup, you rely on ngrok for discoverability and ingress, and K8s’ RBAC for access control into your cluster. With ngrok, you can take a few other steps to control who is allowed to even attempt to fire off a request to your cluster.

ngrok has a configuration language called Traffic Policy that lets you filter, orchestrate, and take action on traffic as it passes through the network. If you connect to these clusters from static IPs, you could restrict ingress to only those IPs with the restrict-ips action—and reject all other requests before they even hit your cluster.

Your expose-k8s-api.yaml file gets a new entry—trafficPolicy—and some extra config to only allow requests from the 1.1.1.1 IP. You can use CIDRs and multiple IPs, in IPv4 and IPv6, to allow just the right access.

apiVersion: ngrok.k8s.ngrok.com/v1alpha1
kind: AgentEndpoint
metadata:
  namespace: default
  name: kubernetes-api-endpoint
spec:
  url: https://<YOUR_NGROK_DOMAIN>
  upstream:
    url: https://kubernetes.default:443
  trafficPolicy:
    inline:
      on_tcp_connect:
        - actions:
            - type: restrict-ips
              config:
                enforce: true
                allow:
                  - 1.1.1.1/32

Reapply the CRD with kubectl apply -f expose-k8s-api.yaml and you’re set.
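If your allow list changes often, one way to avoid hand-editing the file is to generate the manifest from a list of CIDRs. This is a sketch under stated assumptions: the helper name render_endpoint and the NGROK_DOMAIN variable are hypothetical, the manifest mirrors the one above, and the addresses in the example come from documentation-only ranges rather than real networks.

```shell
# Hypothetical helper: print the AgentEndpoint manifest with one allow entry
# per CIDR argument. NGROK_DOMAIN is an assumed variable holding your domain.
render_endpoint() {
  cat <<EOF
apiVersion: ngrok.k8s.ngrok.com/v1alpha1
kind: AgentEndpoint
metadata:
  namespace: default
  name: kubernetes-api-endpoint
spec:
  url: https://${NGROK_DOMAIN}
  upstream:
    url: https://kubernetes.default:443
  trafficPolicy:
    inline:
      on_tcp_connect:
        - actions:
            - type: restrict-ips
              config:
                enforce: true
                allow:
EOF
  # Quote each entry so IPv6 CIDRs with colons stay plain YAML strings.
  for cidr in "$@"; do
    printf '                  - "%s"\n' "$cidr"
  done
}

# Example:
# export NGROK_DOMAIN=your-domain.ngrok.app
# render_endpoint 203.0.113.0/24 2001:db8::/32 | kubectl apply -f -
```

Piping into kubectl apply -f - keeps the allow list in your shell history or a script instead of a file that can drift out of date.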

What’s next?

With ngrok and the Kubernetes Operator as your "pipe" between kubectl and the Kubernetes API, you can control your clusters independent of where you are or what networks you must traverse, while also adding security layers that you simply can't get with the built-in options. This route also scales well, as you can deploy the same manifest multiple times, replacing only hostnames, to quickly add ingress to the K8s API across all your clusters.
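Reusing one manifest across clusters can be sketched as a small loop. Everything named here is an assumption for illustration: the helper apply_to_clusters, the context names, and the domains are hypothetical, the template file is assumed to still contain the <YOUR_NGROK_DOMAIN> placeholder, and each cluster is assumed to already run the ngrok Kubernetes Operator.

```shell
# Hypothetical helper: apply the same manifest to several clusters, swapping in
# a different ngrok domain for each. The first argument is the template file;
# the rest are "context=domain" pairs.
apply_to_clusters() {
  template="$1"
  shift
  for pair in "$@"; do
    context="${pair%%=*}"   # text before the first "="
    domain="${pair#*=}"     # text after the first "="
    sed "s|<YOUR_NGROK_DOMAIN>|${domain}|g" "$template" \
      | kubectl --context "$context" apply -f -
  done
}

# Example:
# apply_to_clusters expose-k8s-api.yaml \
#   staging=staging-k8s.ngrok.app prod=prod-k8s.ngrok.app
```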

Once you’ve run your first few kubectl commands over the ngrok network, check out our Traffic Policy docs for even more ways to manage K8s traffic, whether it’s API requests or production user-facing interaction. You can chain together multiple actions, or filter specific requests based on their properties, for fine-tuned control over your traffic.

We’d also love to hear about your experiences in the ngrok/ngrok-operator project on GitHub—feel free to send us your feature requests, bug reports, and contributions!