Powering digital loyalty transactions of some of the biggest banks in the UK with Linkerd

Challenge

Bink is a fintech company based in the UK. In 2016, Bink built their own in-house Kubernetes distribution, but an unstable networking infrastructure led to large numbers of random TCP disconnects, UDP connections going missing, and other random faults.

Solution

To help solve or, at least, mitigate these issues, the infrastructure team began looking into service meshes. After a little research, they decided to give Linkerd a try. “As soon as we started experimenting with Linkerd in our non-production clusters, network faults caused by the underpinning Azure instabilities dropped significantly. That gave us the confidence to add Linkerd to our production workloads where we saw similar results,” said Mark Swarbrick, Head of Infrastructure at Bink.

Impact

Linkerd significantly improved things from a technology and business standpoint. “We had just begun conversations with Barclays and needed to prove we could scale to meet their needs. Linkerd gave us the confidence to adopt a scalable cloud-based infrastructure knowing it would be reliable; any network instability was now handled by Linkerd. This in turn allowed us to agree to a latency and success-rate-based SLA. Linkerd was the right place in the stack for us to monitor internal SLOs and track the performance of our software stack,” said Swarbrick.

By the numbers

SLA commitments

Committed to an internal SLO of 99.9% uptime, with metrics powered by Linkerd

Efficiency

A team of 3 manages a platform supporting 500k+ users

Immediate results

Network faults dropped significantly as soon as Linkerd was implemented

Building a fault-tolerant application stack on top of a dynamic foundation

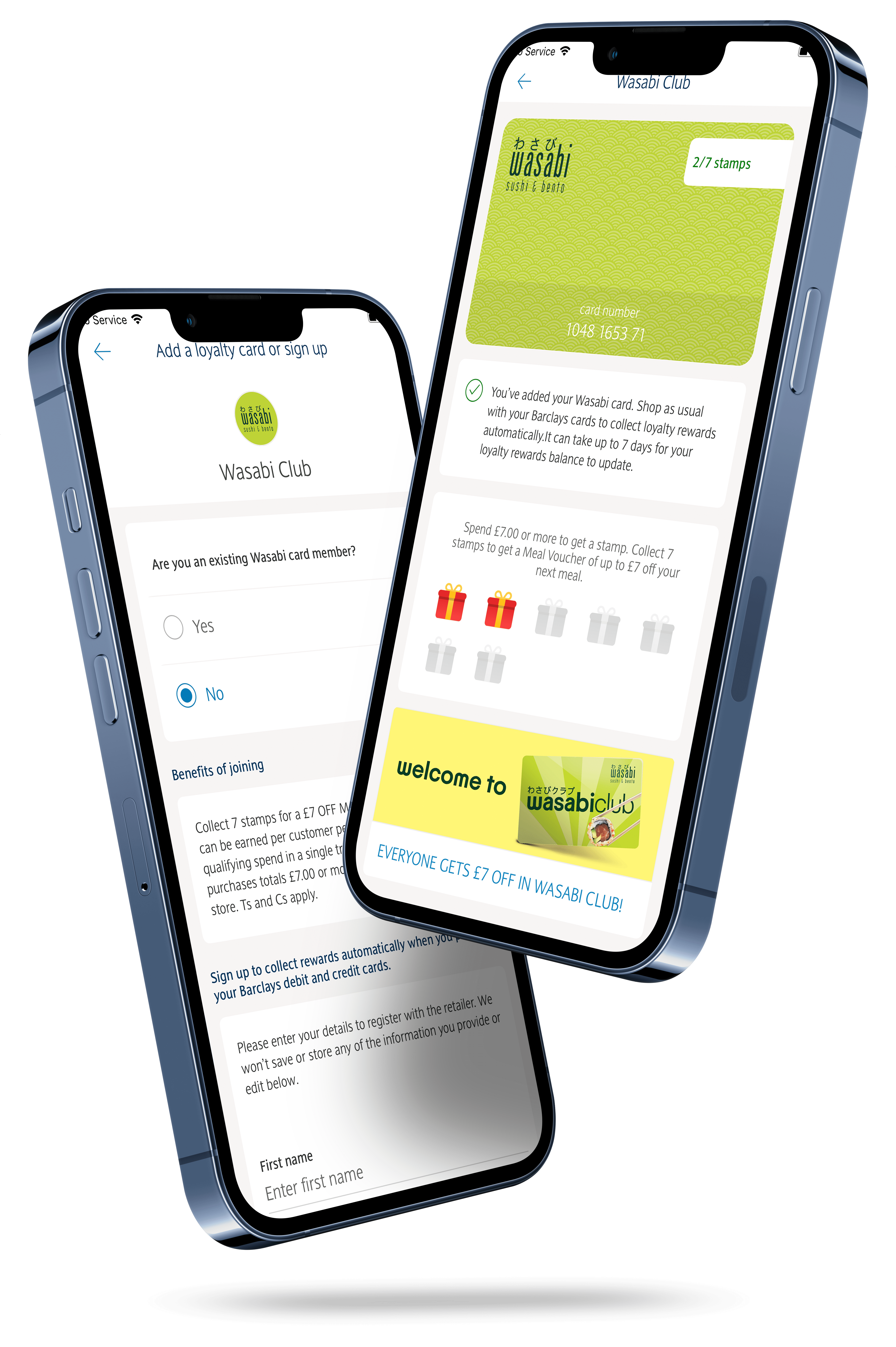

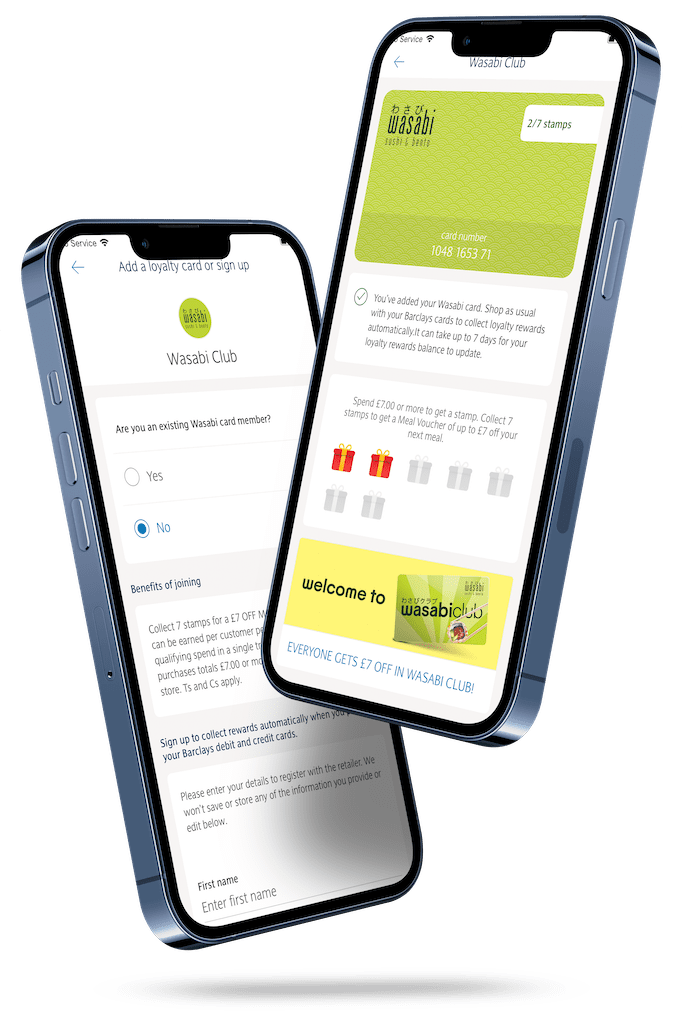

A fintech company based in the UK, Bink has made it its mission to reimagine loyalty programs, making them easier for everyone involved: banks, shops, and customers. To achieve this, Bink has developed a solution that recognizes loyalty points every time people shop, connecting purchases to reward programs with a simple tap.

Underpinning the solution is an extensible proprietary platform that meets the criteria that banks have for security and accountability (the solution links customer payment cards to any loyalty program). With the luxury of a greenfield site, the Bink engineering team leveraged multiple CNCF projects including Kubernetes, Linkerd, Fluentd, Prometheus, and Flux to build a technology stack that is performant, scalable, reliable, and secure, while reducing application issues caused by transient network problems.

Loyalty programs that have a positive impact on retail

Everyone at Bink has years of first-hand experience in banking, retail, and loyalty programs. They understand banking opportunities and the transformative impact that loyalty programs have on retail.

In 2019, Barclays recognized the immense potential in Bink’s offering and committed to a significant investment in their business. Thanks to this partnership, Bink is now accessible to millions of Barclays’ customers in the UK.

As with most startups, Bink’s infrastructure was built mostly by one individual. Budgets were tight, but the team knew they had to build something that could grow with the company.

“Fast forward three years and a team of three is supporting our in-house built platform capable of processing millions of transactions per day — a true testament to the amazing technology of the cloud native ecosystem!”

Mark Swarbrick, Head of Infrastructure, Bink.

The Bink team, infrastructure, and platform

The Bink platform is now managed by a team of three: Mark Swarbrick, Chris Pressland, and Nathan Read. They operate six Kubernetes clusters — two of which are dedicated to production in a multi-cluster setup. Each cluster has approximately 57 different in-house-built microservices. All operations run in Microsoft Azure with multiple production and test environments.

Initially, Bink had three web servers on bare-metal Ubuntu 14.04 instances running a handful of uWSGI applications load balanced behind NGINX instances, with no automation of any kind in place.

In 2016, Pressland was hired to begin converting Bink’s applications to Docker containers, moving away from the existing approach of SFTPing code onto the production servers and restarting uWSGI pools. To enable this, the team built a container orchestration utility in Chef that dynamically assigned host ports to containers and updated NGINX’s proxy_pass directives to route traffic to them. This worked well enough until they realized that Docker caused many kernel panics and other issues on their aging Ubuntu 14.04 infrastructure.
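The port-assignment idea described above can be sketched in a few lines. This is an illustrative Python sketch rather than Bink’s Chef code, which the case study does not show: the service names, the starting port, and the helper names assign_ports and render_locations are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Container:
    name: str       # hypothetical service name
    host_port: int  # host port assigned to this container


def assign_ports(names, start=20000):
    """Give each container the next free host port, in input order."""
    return [Container(name, start + offset) for offset, name in enumerate(names)]


def render_locations(containers, host="127.0.0.1"):
    """Render one NGINX location block per container, proxying to its host port."""
    blocks = []
    for c in containers:
        blocks.append(
            f"location /{c.name}/ {{\n"
            f"    proxy_pass http://{host}:{c.host_port}/;\n"
            f"}}"
        )
    return "\n".join(blocks)


if __name__ == "__main__":
    # Hypothetical services, used only to show the generated config.
    print(render_locations(assign_ports(["api", "worker"])))
```

A real utility would also have to track ports that are already in use and reload NGINX after each change, which is part of what makes a home-grown orchestrator costly to maintain.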

Around the same time, the team got formal approval to evaluate a migration from their data center to the cloud, since their needs had far outgrown what the data center could offer. As a Microsoft 365 customer, they decided to go with Microsoft Azure. “We quickly concluded that maintaining our own container orchestrator wasn’t sustainable in the long run and decided to move to Kubernetes,” explained Swarbrick. At the time, Microsoft didn’t have a Kubernetes offering that met their requirements, so they built their own Kubernetes distribution with Chef.

Moving to Kubernetes and looking for a service mesh

The first few years of running their in-house Kubernetes distribution were painful, to say the least, mostly because Azure’s networking infrastructure was unstable at the time. It got considerably better over time but, for the first few years, they saw large numbers of random TCP disconnects, UDP connections just going missing, and other random faults.

Around 2017, Swarbrick’s team began looking into service meshes, hoping one could help solve, or at least mitigate, some of these issues. At the time, Monzo engineers gave a KubeCon + CloudNativeCon talk on a recent outage they had experienced and the role Linkerd played. “Not everyone is transparent about these things and I really appreciated them sharing what happened so the community can learn from their failure. A big shout-out to the Monzo team for doing that!” said Swarbrick. “That’s when we began looking into Linkerd.” The maintainers were about to release a newer Kubernetes-native version called Conduit, soon to be renamed Linkerd2.

They also briefly considered Istio, as much of the industry seemed to be leaning towards Envoy-based service meshes. However, after a fairly short experiment, they realized Linkerd was really easy to implement. There was no need to write application code to deal with transient network failures, and the added latency was small enough to justify the additional hop in the stack. It also gave them invaluable traceability capabilities. In short, Linkerd was a perfect fit for their use case.

“As soon as we started experimenting with Linkerd in our non-production clusters, network faults caused by the underpinning Azure instabilities dropped significantly. That gave us the confidence to add Linkerd to our production workloads where we saw similar results.”

Mark Swarbrick, Head of Infrastructure, Bink.

The service mesh difference with Linkerd

Migrating their software stack onto a cloud native platform was a no-brainer. However, parts of the architecture weren’t as performant or stable as they had hoped. Linkerd enabled them to implement connection and retry logic at the right level of the stack, giving them the resilience and reliability they needed. Suddenly, the questions over whether they could use their software stack in the cloud without significant uplift disappeared. Linkerd showed that placing the logic in the connection layer was the right approach and allowed them to focus on product innovation rather than worrying about network or connection instability. “That really helped reduce operational development costs and time to market,” stated Swarbrick.
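To make the trade-off concrete, here is a minimal sketch of the kind of retry wrapper each service would otherwise have to carry in its own code. It is not Linkerd’s implementation and not Bink’s code; the function name call_with_retries, the attempt count, and the backoff values are assumptions chosen for illustration.

```python
import time


def call_with_retries(send, attempts=3, base_delay=0.05, sleep=time.sleep):
    """Call send(); on a transient ConnectionError, back off and try again."""
    for attempt in range(attempts):
        try:
            return send()
        except ConnectionError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the failure to the caller
            # Exponential backoff: base_delay, then 2x, then 4x, and so on.
            sleep(base_delay * 2 ** attempt)
```

Every service that talks over the network would need something like this, tested and tuned separately. Handling it in the mesh means the logic lives in one place and the application code stays unchanged.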

Ultimately, Linkerd significantly improved things from a technology and business standpoint. “We had just begun conversations with Barclays and needed to prove we could scale to meet their needs. Linkerd gave us the confidence to adopt a scalable cloud-based infrastructure knowing it would be reliable — any network instability was now handled by Linkerd. This in turn allowed us to agree to a latency and success-rate-based SLA. Linkerd was the right place in the stack for us to monitor internal SLOs and track the performance of our software stack,” continued Swarbrick.
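As a rough illustration of what a success-rate-based objective means in practice, the sketch below checks an observed success rate against a 99.9% target and works out how much downtime that target permits. The request counts, the 30-day window, and the function names are assumptions for the example; the case study does not describe how Bink computes its SLOs.

```python
def success_rate(successes, total):
    """Fraction of requests that succeeded; an idle window counts as healthy."""
    return 1.0 if total == 0 else successes / total


def meets_slo(successes, total, target=0.999):
    """True when the observed success rate is at or above the target."""
    return success_rate(successes, total) >= target


def allowed_downtime_minutes(target=0.999, days=30):
    """Downtime a given uptime target permits over a window of `days` days."""
    return (1 - target) * days * 24 * 60


if __name__ == "__main__":
    # Hypothetical counts for one reporting window.
    print(meets_slo(999_500, 1_000_000))
    print(round(allowed_downtime_minutes(), 1))
```

Over a 30-day window, a 99.9% target leaves roughly 43.2 minutes of allowable downtime, which is why per-request success metrics from the mesh are useful for spotting problems early.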

The Bink team didn’t want to grow an on-prem infrastructure or refactor their code to add retry logic. Linkerd let them avoid both, and its metrics helped them track down platform bottlenecks in a cloud native environment.

The power of cloud native

Looking at the entire stack, cloud native technologies — and CNCF projects, in particular — enabled Bink to build a cloud-agnostic platform that scales as needed whilst allowing them to keep a close eye on performance and stability. “We’ve tested our platform at load and can perform full disaster recovery in under 15 minutes, recover easily from transitory network issues, and are able to perform root cause analysis of problems quickly and efficiently,” explained Swarbrick.

Ready to shake up UK banking and loyalty programs

Bink had to rapidly grow and mature their infrastructure to meet banking security, stability, and throughput requirements. Cloud native technologies enabled them to confidently support and monitor contractual SLA and internal SLO requirements. This positioned Bink well to capitalize on the retail space opening back up and to help retailers enable payment-linked loyalty in partnership with some of the biggest names in UK banking.