How Preferred Networks used K8s for a more user-friendly AI/ML platform

Challenge

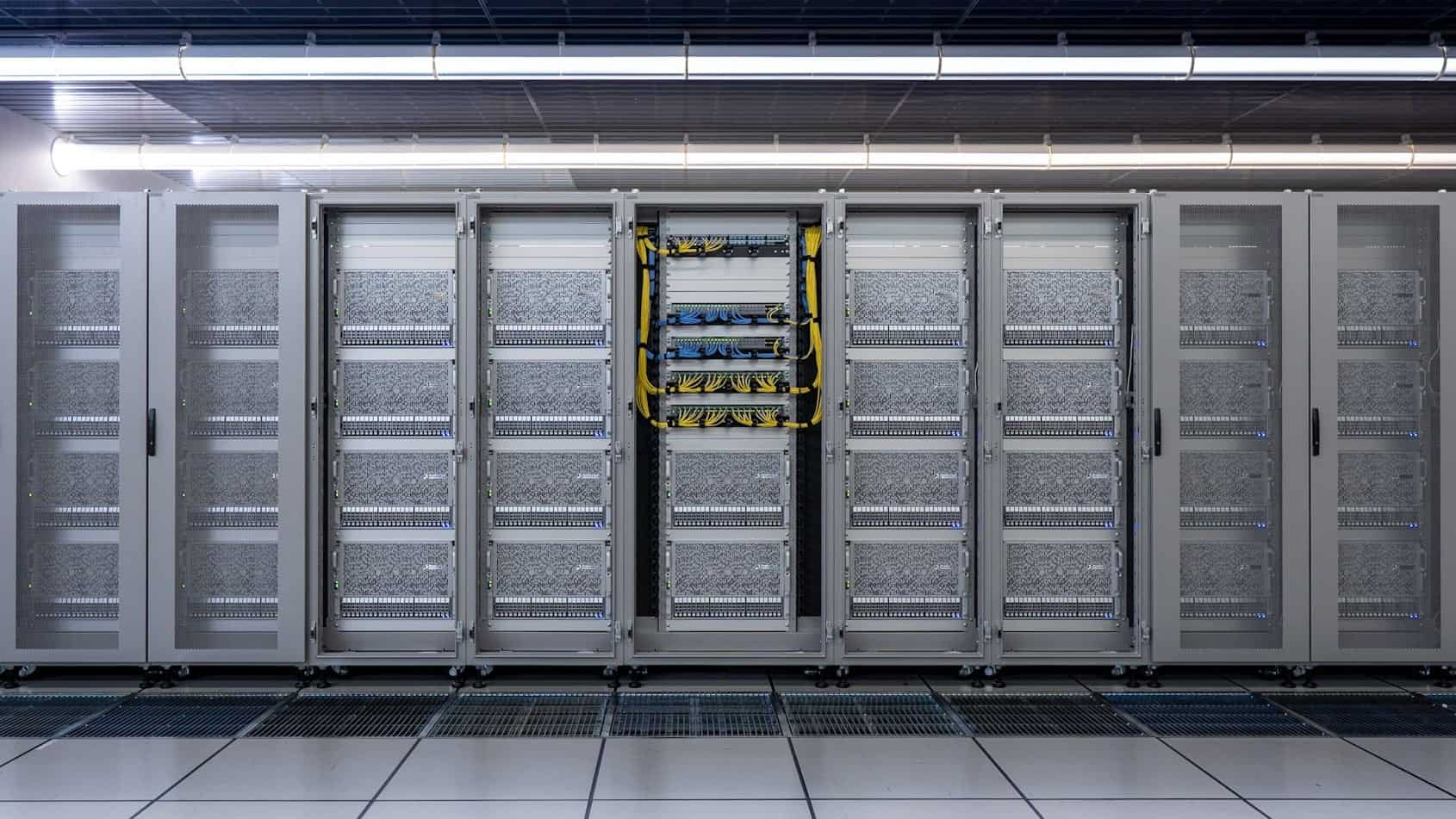

Preferred Networks is a Japanese company that uses AI/ML technologies to deliver products and solutions. The company employs numerous researchers and operates clusters across more than three locations to provide the computational resources they need. It has also researched and developed its own AI/ML accelerator, MN-Core, which has taken first place on the Green500 list in consecutive editions. The company operates over 1500 GPUs and more than 200 MN-Cores. These clusters are heterogeneous, combining several GPU series with MN-Core accelerators.

Delivering these resources to researchers in a user-friendly, fair, and flexible manner is a significant challenge for the company. Automating operations was also essential, because the team managing this infrastructure had only eight engineers at the time.

Solution

To tackle these challenges, the team responsible for cluster management at Preferred Networks adopted containers and cloud-native practices, using Kubernetes for orchestration. They also extended the Kubernetes scheduler to meet their specific needs. In addition, using Cluster API, they automated everything from OS installation on a node to joining that node to the Kubernetes cluster.

Impact

By adopting Kubernetes, Preferred Networks significantly improved efficiency and scalability while making its AI/ML platform more user-friendly. The company has built its AI/ML platforms on Kubernetes since around 2018 and has gained the following benefits:

● MN-Core as a First-Class Citizen: By developing a Kubernetes device plugin for MN-Core, the team made MN-Core a first-class resource, alongside CPU, memory, and GPU, that researchers can request for their workloads.

● Rapid Site Addition in 3 Months: By using Kustomize to standardize Kubernetes manifests across sites, the company can bring up a new site within three months with three engineers.

● Quick Node Recreation in 30 Minutes: A node can be recreated within 30 minutes, from OS installation to provisioning and joining the Kubernetes cluster, making it easy for administrators to reset nodes.

● Fair Scheduling: The team used the flexibility of the Kubernetes scheduler to build an extended scheduler that makes it possible to specify how resources, including the number of accelerators, are allocated to researchers. It also supports nuanced job prioritization, such as preempting lower-priority jobs in favor of higher-priority projects while still allowing long-running jobs to run during off-peak hours.

● Scheduling for AI/ML: Through a Kubernetes scheduler plugin, Preferred Networks added enhancements tailored to distributed training, such as gang scheduling, which schedules all of a job's pods together or none of them. This enables more efficient use of cluster resources. The scheduler is open source.

● GPU Selection Specification: By extending Kubernetes features, Preferred Networks added a custom resource naming syntax that lets researchers request a particular GPU model, for example preferred.jp/gpu-v100-24gb: 1.
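The gang scheduling and GPU selection described above can be illustrated with a small, self-contained Python simulation. This is a hypothetical sketch, not the scheduler Preferred Networks open-sourced: it assumes each node advertises allocatable counts of named extended resources (such as preferred.jp/gpu-v100-24gb), that each pod declares resource limits, and that pods are placed first-fit. The point it shows is the all-or-nothing rule: a distributed training job's pods are placed only if every one of them fits, so a half-started job never holds accelerators while waiting for the rest.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Node:
    name: str
    # Allocatable extended resources, e.g. {"preferred.jp/gpu-v100-24gb": 4}
    allocatable: Dict[str, int]
    # Amount of each resource already taken by running pods
    allocated: Dict[str, int] = field(default_factory=dict)


@dataclass
class Pod:
    name: str
    # Resource limits, mirroring a container's resources.limits
    limits: Dict[str, int]


def fits(pod: Pod, node: Node, used: Dict[str, int]) -> bool:
    """True if every resource the pod asks for is still free on the node."""
    return all(node.allocatable.get(r, 0) - used.get(r, 0) >= qty
               for r, qty in pod.limits.items())


def gang_schedule(pods: List[Pod], nodes: List[Node]) -> Optional[Dict[str, str]]:
    """First-fit placement of a whole gang: every pod gets a node, or none does."""
    # Tentative allocations live on copies, so a failed attempt changes nothing.
    scratch = {n.name: dict(n.allocated) for n in nodes}
    placement: Dict[str, str] = {}
    for pod in pods:
        for node in nodes:
            used = scratch[node.name]
            if fits(pod, node, used):
                for r, qty in pod.limits.items():
                    used[r] = used.get(r, 0) + qty
                placement[pod.name] = node.name
                break
        else:
            # Some pod has nowhere to go: reject the whole job.
            return None
    # Every pod fits, so commit the tentative allocations.
    for node in nodes:
        node.allocated = scratch[node.name]
    return placement


if __name__ == "__main__":
    gpu = "preferred.jp/gpu-v100-24gb"
    nodes = [Node("node-a", {gpu: 4}), Node("node-b", {gpu: 4})]
    workers = [Pod(f"worker-{i}", {gpu: 2}) for i in range(4)]
    print(gang_schedule(workers, nodes))
    # The cluster is now full, so a further two-pod job is rejected as a unit.
    print(gang_schedule([Pod("late-0", {gpu: 1}), Pod("late-1", {gpu: 1})], nodes))
```

Here the four two-GPU workers are split across the two nodes, and the later job is rejected as a whole instead of starting one pod. A pod that asks for a resource name a node does not advertise (say, an MN-Core resource on a GPU-only node) simply does not fit there. In a real Kubernetes scheduler plugin, this logic would sit in the scheduling framework's extension points; for example, the Permit stage can hold pods until the whole group is ready.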
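The fair-scheduling behavior described above can be sketched as an admission policy. This is a hypothetical Python illustration, not the extended scheduler Preferred Networks built. It assumes that each project (project-a and project-b are made-up names) has a quota of accelerators, that a job may borrow idle accelerators beyond its quota (for example, during off-peak hours), and that only a job within its project's quota may preempt strictly lower-priority jobs. For brevity, it counts GPUs cluster-wide and ignores node placement.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Job:
    name: str
    project: str
    gpus: int
    priority: int  # higher value = more important


def try_admit(job: Job, running: List[Job], capacity: int,
              quota: Dict[str, int]) -> Tuple[bool, List[Job]]:
    """Admit a job, preempting lower-priority jobs only if its project is within quota.

    Returns (admitted, preempted_jobs); `running` is updated in place.
    """
    free = capacity - sum(j.gpus for j in running)
    if free >= job.gpus:
        # Idle capacity (e.g. off-peak hours): admit even beyond the project's quota.
        running.append(job)
        return True, []

    project_used = sum(j.gpus for j in running if j.project == job.project)
    if project_used + job.gpus > quota.get(job.project, 0):
        # The cluster is busy and this job would exceed its quota: wait, do not preempt.
        return False, []

    # Pick victims among strictly lower-priority jobs, lowest priority first.
    victims: List[Job] = []
    for cand in sorted(running, key=lambda j: j.priority):
        if free >= job.gpus or cand.priority >= job.priority:
            break
        victims.append(cand)
        free += cand.gpus
    if free < job.gpus:
        # Even evicting every lower-priority job would not make room.
        return False, []

    victim_ids = {id(v) for v in victims}
    running[:] = [j for j in running if id(j) not in victim_ids]
    running.append(job)
    return True, victims


if __name__ == "__main__":
    quota = {"project-a": 4, "project-b": 4}
    running: List[Job] = []
    # Off-peak: project-b's long job borrows idle GPUs beyond its quota of 4.
    print(try_admit(Job("b-long-run", "project-b", 6, priority=0), running, 8, quota))
    # project-a submits a high-priority job within its quota, so b-long-run is preempted.
    admitted, preempted = try_admit(Job("a-urgent", "project-a", 4, priority=10),
                                    running, 8, quota)
    print(admitted, [j.name for j in preempted], [j.name for j in running])
```

In Kubernetes itself, preemption between pods is driven by PriorityClass values. A per-project quota check like the one above is the kind of policy a custom scheduler or scheduler plugin would layer on top of that mechanism.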

By the numbers

1500+ GPUs

200+ MN-Cores

3+ Regions