Improving platform efficiency, reliability, and performance in one week with Linkerd

Challenge

Salt Security is a pioneer in API security. When the company started to rapidly grow, its platform architecture and services began evolving quickly, changing the nature of messages exchanged between services. That’s when they started experiencing backward-compatibility issues.

Solution

To enable their teams to move fast and with confidence, Salt needed to find a way to ensure no change to an API call would break. They decided to adopt gRPC and, to help with gRPC load balancing, implemented Linkerd. “We started with Linkerd and it turned out to be so easy to get started that we gave up on evaluating the other two — we loved it from the get-go. Within hours of stumbling over it online, we had deployed it on our dev environment. Three more days and it was up and running on our new service running in production.” — Eli Goldberg, Platform Engineering Lead at Salt Security

Impact

Linkerd not only helped with gRPC load balancing but also improved the efficiency, reliability, and overall performance of the Salt Security platform. “After only one week of work, we experienced tangible results. With Linkerd, we improved performance, efficiency, and reliability of the Salt Security platform. We can now observe and monitor service and RPC-specific metrics and take action in real-time. Finally, all service-to-service communication is now encrypted, providing a much higher level of security within our cluster.” — Omri Zamir, Senior Software Engineer at Salt Security

By the numbers

Scale

Handled an almost 10x increase in traffic without issues

Ease of implementation

Service mesh deployed in a matter of hours with tangible results in one week

High Availability

Minimized downtime of API security tool

Hunting API traffic anomalies with minimal downtime

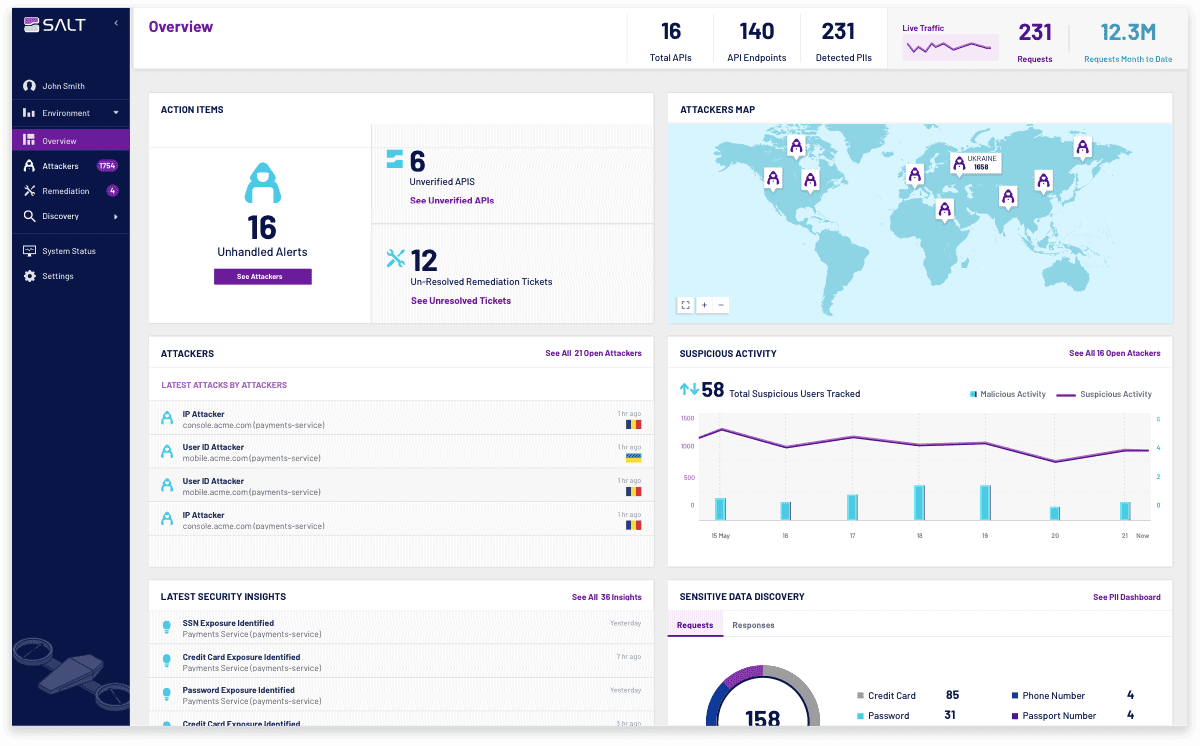

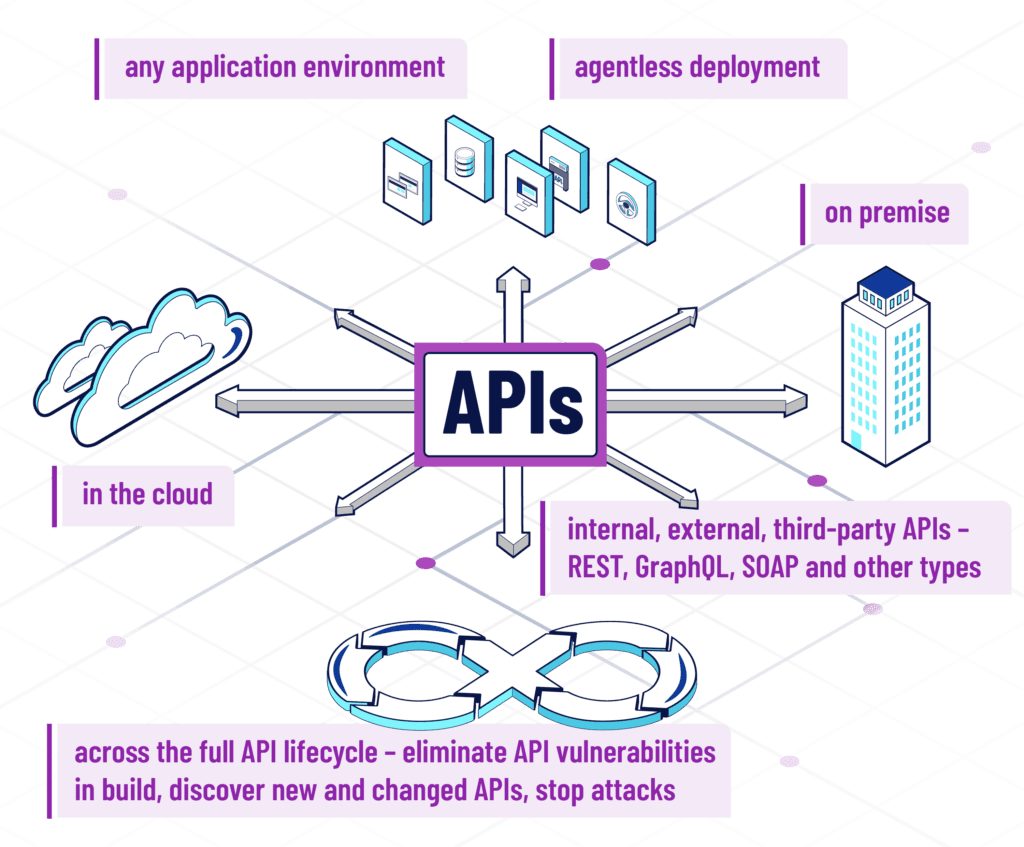

The Salt Security platform delivers the simplest, most comprehensive, and most effective API security by tapping AI and big data.

The platform works by continuously monitoring and learning from customer traffic, allowing it to identify anomalies and sophisticated API attacks within seconds. Salt’s customers range from mid-size companies to the Fortune 500 and include household names such as The Home Depot, AON, Telefonica Brasil, Equinix, Citi National Bank, KHealth, and more.

Minimizing downtime: a key requirement

At any given time, countless attacks are carried out across the internet. As gateways into a company’s most valuable data and services, APIs are an attractive target for malicious hackers.

Salt Security addresses these vulnerabilities by hosting customer API metadata and then running AI and ML against the data to find and stop attacks. Because customer traffic is constantly ingested, it’s imperative that the company minimizes downtime.

Consider a customer with traffic that averages around 20,000 requests per second (RPS). Downtime of one second represents 20,000 opportunities for malicious attacks, a significant risk. An outage might last only a few seconds, but its consequences can be devastating: that gap is a window of opportunity for a denial-of-service attack or a PII exposure.

The platform team, which includes Eli Goldberg and Omri Zamir plus two other engineers, must ensure there is no service disruption. To do so, they built the platform as ~40 microservices running in multiple Kubernetes clusters that span both Azure and AWS regions. If one provider experiences a substantial outage, the platform immediately triggers a failover to keep the service available.

gRPC: a single point of truth for messaging

In 2020, Salt started to grow quickly, and the platform needed to scale. This led to a few challenges that the platform team had to tackle fast. First and foremost, because the architecture and services started to evolve rapidly, the messages exchanged between services did too.

To enable Salt’s teams to move fast and with confidence, Goldberg and Zamir needed to find a way to ensure that no change to an API call would break. gRPC turned out to be the perfect candidate.

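Why gRPC, or in Salt’s case, Protobuf specifically? Consider how data is serialized with Protobuf (Protocol Buffers) as opposed to JSON or other serialization frameworks like Kryo. Protobuf identifies every field by a stable field number, and readers skip field numbers they don’t recognize. As a result, when a field is removed from a message (or an object), the services that exchange it can adopt the change in an unsynchronized manner: older readers simply fall back to the field’s default value instead of breaking.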

That, combined with tools such as Buf, allowed the platform team to build a single repository in which they declare all company-internal APIs, and to keep it stable and error-free by checking it at compile time in a CI pipeline.

Moving to gRPC standardized Salt’s internal API and created a single point of truth for messages passed between the company’s services. In short, the main driver to adopt gRPC was ensuring backward compatibility.
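To make the backward-compatibility argument concrete, here is a minimal sketch of the Protobuf wire format written with only Go’s standard library, not the real protobuf runtime. Each field is encoded as a key, `(field_number << 3) | wire_type`, followed by its value, so a reader can skip any field number it doesn’t know. The message layout (field 1 `id`, field 2 `path`, field 3 `latency_ms`) and every function name below are hypothetical, chosen only for illustration.

```go
package main

import (
	"encoding/binary"
	"errors"
	"fmt"
)

// Protobuf wire types used in this sketch (the format defines a few more).
const (
	wireVarint = 0 // int32, int64, uint64, bool, enum
	wireBytes  = 2 // string, bytes, embedded messages
)

// appendVarint appends v in base-128 varint encoding, as Protobuf does.
func appendVarint(buf []byte, v uint64) []byte {
	var tmp [binary.MaxVarintLen64]byte
	n := binary.PutUvarint(tmp[:], v)
	return append(buf, tmp[:n]...)
}

// appendTag appends a field key: (fieldNumber << 3) | wireType.
func appendTag(buf []byte, field, wireType uint64) []byte {
	return appendVarint(buf, field<<3|wireType)
}

// encodeV2 is a hypothetical newer writer that added field 3 (latency_ms).
func encodeV2(id uint64, path string, latencyMs uint64) []byte {
	buf := appendTag(nil, 1, wireVarint)
	buf = appendVarint(buf, id)
	buf = appendTag(buf, 2, wireBytes)
	buf = appendVarint(buf, uint64(len(path)))
	buf = append(buf, path...)
	buf = appendTag(buf, 3, wireVarint)
	return appendVarint(buf, latencyMs)
}

// EventV1 is all a hypothetical older reader knows about: fields 1 and 2.
type EventV1 struct {
	ID   uint64
	Path string
}

// decodeV1 keeps the fields it knows and skips any other field number.
// Fields missing from the input keep their zero value.
func decodeV1(data []byte) (EventV1, error) {
	var ev EventV1
	for len(data) > 0 {
		key, n := binary.Uvarint(data)
		if n <= 0 {
			return ev, errors.New("malformed tag")
		}
		data = data[n:]
		field, wireType := key>>3, key&7
		switch wireType {
		case wireVarint:
			v, m := binary.Uvarint(data)
			if m <= 0 {
				return ev, errors.New("malformed varint")
			}
			data = data[m:]
			if field == 1 {
				ev.ID = v
			}
		case wireBytes:
			l, m := binary.Uvarint(data)
			if m <= 0 || l > uint64(len(data)-m) {
				return ev, errors.New("malformed length")
			}
			payload := data[m : m+int(l)]
			data = data[m+int(l):]
			if field == 2 {
				ev.Path = string(payload)
			}
		default:
			return ev, fmt.Errorf("wire type %d not handled in this sketch", wireType)
		}
	}
	return ev, nil
}

func main() {
	// An old reader decodes a message from a newer writer: field 3 is skipped.
	ev, err := decodeV1(encodeV2(42, "/api/v1/users", 17))
	fmt.Println(ev.ID, ev.Path, err) // 42 /api/v1/users <nil>

	// A writer that stopped sending field 2 still decodes; Path stays "".
	ev, err = decodeV1(appendVarint(appendTag(nil, 1, wireVarint), 7))
	fmt.Printf("%d %q %v\n", ev.ID, ev.Path, err) // 7 "" <nil>
}
```

Because neither side has to know the other’s exact schema version, old and new services can be rolled out in any order, which is the property Salt relied on.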

That being said, gRPC has many other benefits, including strongly typed APIs, excellent performance, and support for more than 10 languages. But, as Goldberg and Zamir learned, it also came with its challenges. The main one was load balancing. gRPC runs over HTTP/2, which multiplexes many requests over a single long-lived TCP connection, so Kubernetes’ native connection-level (TCP) load balancing sends all of a client’s requests to whichever replica received the connection. Since all of Salt’s microservices are replicated for load balancing and high availability, having no way to distribute cross-service communication across replicas would defeat this mission-critical requirement.
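The load-balancing gap can be illustrated with a small simulation in plain Go, with no gRPC or Kubernetes libraries. It models one client holding one long-lived HTTP/2 connection and sending many multiplexed requests over it. A connection-level balancer (like a Kubernetes Service) chooses a replica once per connection; a request-level balancer, which is what a proxy such as Linkerd’s does for HTTP/2 traffic, chooses per request. The replica count, request count, and round-robin policy are illustrative assumptions; Linkerd’s proxy actually uses a latency-aware (EWMA) algorithm rather than round-robin.

```go
package main

import "fmt"

// roundRobin hands out replica indices in turn.
type roundRobin struct {
	next, n int
}

func (r *roundRobin) pick() int {
	i := r.next
	r.next = (r.next + 1) % r.n
	return i
}

// connectionLevel picks a replica once per connection; every request
// multiplexed on that connection lands on the same replica.
func connectionLevel(replicas, conns, reqsPerConn int) []int {
	counts := make([]int, replicas)
	lb := &roundRobin{n: replicas}
	for c := 0; c < conns; c++ {
		counts[lb.pick()] += reqsPerConn
	}
	return counts
}

// requestLevel picks a replica for every request, regardless of which
// connection the request arrived on.
func requestLevel(replicas, conns, reqsPerConn int) []int {
	counts := make([]int, replicas)
	lb := &roundRobin{n: replicas}
	for c := 0; c < conns; c++ {
		for r := 0; r < reqsPerConn; r++ {
			counts[lb.pick()]++
		}
	}
	return counts
}

func main() {
	// One client, one long-lived HTTP/2 connection, 900 requests, 3 replicas.
	fmt.Println("per-connection:", connectionLevel(3, 1, 900)) // [900 0 0]
	fmt.Println("per-request:   ", requestLevel(3, 1, 900))    // [300 300 300]
}
```

With per-connection balancing, two of the three replicas sit idle no matter how much traffic arrives; balancing per request is what restores the benefit of replication.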

Linkerd: the answer to gRPC’s load balancing

To solve the gRPC load balancing issue, the engineers researched several solutions. A decision was made to further investigate three options: Envoy, Istio, and Linkerd.

While the platform team was familiar with the term “service mesh,” they had no significant production experience with any of these solutions, so they set out to assess each tool to determine which best fit Salt’s use case.

“Since Salt is a fast-growing company, one of the key things the team looked at when evaluating any technology was the level of effort needed to maintain it,” said Zamir. “We have to be smart about how we allocate our resources.”

“We started with Linkerd and it turned out to be so easy to get started that we gave up on evaluating the other two — we loved it from the get-go. Within hours of stumbling over it online, we had deployed it on our dev environment. Three more days and it was up and running on our new service running in production.”

Eli Goldberg, Platform Engineering Lead at Salt Security

The next step for the team was to begin migrating the company’s services to gRPC and adding them to the service mesh.

A new world of visibility, reliability, and security

Once services were meshed, the team realized they had something far more powerful than a load balancing solution for gRPC.

“Linkerd opened up a new world of visibility, reliability, and security — a huge a-ha moment,” said Goldberg.

“Service-to-service communication was now fully mTLSed, preventing potential bad actors from ‘sniffing’ our traffic in case of an internal breach. With Linkerd’s latest gRPC retry feature, momentary network errors now look like small delays, instead of a hard failure that triggered an investigation, just to find out it was nothing.”

“We’ve also come to love the Linkerd dashboard,” said Zamir. “We can now see our internal live traffic and how services communicate with each other on a beautiful map. Thanks to a table displaying all request latencies, we are identifying the slightest regression in terms of backend performance. Linkerd’s tap feature showed us live aggregations of requests per endpoint that were flagging excessive calls between services that we weren’t aware of.”

A key benefit for the team is that Linkerd’s CLI is very easy to use. Said Goldberg: “The linkerd check command provides instant feedback on what is wrong with a deployment, and that’s generally all you need to know. For example, when resource problems cause one of the control plane’s pods to be evicted, linkerd check will tell you those pods are missing — that’s pretty neat!”

“We also quickly realized that Linkerd isn’t just a tool for production – it has the same monitoring and visibility capabilities as other logging, metrics, or tracing platforms,” said Zamir. “If we can see what’s wrong in production, why not use it before we get to production?”

Today, Linkerd has become a key part of Salt’s development stack. “It’s so simple to operate that we don’t need anyone to be ‘in charge’ of maintaining Linkerd — it literally ‘just works’!” said Goldberg and Zamir.

Linkerd docs and the community

The team also appreciated Linkerd’s community of maintainers and contributors.

“The documentation is excellent and covers every use case we’ve had so far,” said Goldberg. “It also includes those we plan to implement soon, such as traffic splits, canary deployments, and more. If you don’t find an answer in the docs, Slack is a great resource. The community is very helpful and super responsive. You can usually receive an answer within a few hours. People share their thoughts and solutions all the time.”

Increased efficiency, reliability, and performance within one week!

“After only one week of work, we were already seeing tangible results,” said Goldberg. “With Linkerd, we improved performance, efficiency, and reliability of the Salt Security platform. We can now observe and monitor service and RPC-specific metrics and take action in real-time. Finally, all service-to-service communication is now encrypted, providing a much higher level of security within our cluster.”

In Salt’s business, scalability and performance are key. The company is growing rapidly, and so are the demands on its platform. More customers mean more ingested real-time traffic. Recently, the platform handled an almost 10x increase in traffic without any issues. That elasticity is critical to ensure flawless service for Salt’s customers.

Linkerd also helped the team identify excessive calls that had slipped under the radar, leading to wasteful resource consumption. “Because we use Linkerd in development, we now discover these excessive calls before they go to production, thus avoiding them altogether,” said Goldberg.

“Finally, with no one really required to maintain Linkerd, we can devote those precious development hours to things like canary deployments, traffic splits, and chaos engineering, which makes our platform even more robust. With Linkerd and gRPC in place, we are ready to tackle those things next!”

The cloud native effect

Just a few years ago, avoiding downtime would have been an insurmountable task for anyone. Today, cloud native technologies make that possible. Built entirely on Kubernetes, Salt’s microservices communicate via gRPC while Linkerd ensures messages are encrypted. The service mesh also provides deep, real-time insights into what’s happening on the traffic layer, allowing the platform team to remain a step ahead of potential issues.

“Although we set out to solve the load balancing issue with Linkerd, it ended up improving the overall performance of the platform,” said Goldberg and Zamir.

“For us, that means fewer fire drills and more quality time with family and friends. It also means we now get to work on more advanced projects and deliver new features faster — our customers certainly appreciate that.”